
An Analysis of Automatic Chord Recognition Procedures for Music Recordings

Motivation

• Recognizing chords by hand is time-consuming and tedious work for musicians.

• Chords are considered a mid-level feature representation that can greatly help high-level music information retrieval tasks.

• An automated system can help people transcribe an audio file more quickly and precisely.

Interesting applications of chord recognition

• Guitar chord recognition

• Karaoke scoring system

Outline

• Introduction

• Feature Extraction

• Chord Recognition Methods

• Experiment

Introduction

• Definition of chord

• Music theory about chords

• Task of chord recognition

• Example

Chord

• Chord: an aggregate of musical pitches sounded simultaneously, i.e., the simultaneous sound of two or more different notes.

Music Theory – Chord (cont’d)

• By the number of distinct notes that compose a chord, we categorize it as a triad, seventh, ninth, etc.

• Bass root: the lowest note of a chord.

[Figure: triad, seventh, and ninth chords with bass root C]

Music Theory – Chord (cont’d)

[Figure: chromatic circle and Shepard's helix of pitch perception]

Music Theory – Chord (cont’d)

• By looking at the interval structure of a chord, we can categorize it as major, minor, augmented, or diminished.
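The four triad types differ only in the semitone intervals stacked above the root. A minimal Python sketch, assuming 12-tone equal temperament and sharp-only note names; `triad` and `classify_triad` are hypothetical helpers for illustration, not part of the paper.

```python
from typing import List, Optional, Tuple

# Semitone offsets of the chord tones above the root for each triad type
TRIAD_INTERVALS = {
    "major": (0, 4, 7),
    "minor": (0, 3, 7),
    "augmented": (0, 4, 8),
    "diminished": (0, 3, 6),
}

# Chroma names in order; index 0 = C, 11 = B
CHROMA_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def triad(root: str, quality: str) -> List[str]:
    """Return the note names of the triad of the given quality on `root`."""
    r = CHROMA_NAMES.index(root)
    return [CHROMA_NAMES[(r + i) % 12] for i in TRIAD_INTERVALS[quality]]


def classify_triad(notes: List[str]) -> Optional[Tuple[str, str]]:
    """Find a (root, quality) whose triad has exactly these pitch classes."""
    pcs = {CHROMA_NAMES.index(n) for n in notes}
    for r in range(12):
        for quality, ivs in TRIAD_INTERVALS.items():
            if {(r + i) % 12 for i in ivs} == pcs:
                return CHROMA_NAMES[r], quality
    return None


print(triad("A", "minor"))
print(classify_triad(["E", "G", "C"]))
```

Note that augmented triads are symmetric (C, E, G# is also E augmented and G# augmented), so `classify_triad` simply returns the first root it finds.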

Music Theory – Chord (cont’d)

Task of chord recognition

• Chord recognition: analyzing the harmonic content of a sound.

System Framework

Chord Label Table

[Figure: Bach, BWV 846, with ground-truth chord labels]

Mathematical Representation

• Definition 1.1

Suppose a music audio file is represented as $A$, with its duration represented as $T$ ($T > 0$, $T \in \mathbb{R}$). Then the audio file is a function $A\colon [0, T) \to \mathbb{R}$, and $[0, T)$ is called the temporal domain (time line) of $A$.

Mathematical Representation (cont’d)

• Definition 1.2

For a given time line $[0, T)$, we associate a segmentation into frames as follows. Given a frame length parameter $d$ ($d \in \mathbb{R}$, $d > 0$), we define $t_n := n \cdot d$ and

$$f_n := [t_{n-1}, t_n) \quad \text{for } n \in \mathbb{Z}.$$

Then the frames associated with $[0, T)$ are given by

$$F := \{ f_n \mid n \in [1:N] \}, \qquad N := \left\lceil \frac{T}{d} \right\rceil.$$
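A minimal Python sketch of the frame segmentation in Definition 1.2, assuming $t_n = n \cdot d$ and $N = \lceil T/d \rceil$; `frames` is a hypothetical helper name. When $T$ is not a multiple of $d$, the last frame extends past $T$.

```python
import math
from typing import List, Tuple


def frames(T: float, d: float) -> List[Tuple[float, float]]:
    """Frames f_n = [t_{n-1}, t_n) with t_n = n * d, for n in [1:N], N = ceil(T / d)."""
    if T <= 0 or d <= 0:
        raise ValueError("T and d must be positive")
    N = math.ceil(T / d)
    return [((n - 1) * d, n * d) for n in range(1, N + 1)]


print(frames(1.0, 0.25))
```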

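Mathematical Representation (cont’d)

• Definition 1.3

We define a finite set $\Lambda$, referred to as the chord label set. Furthermore, we let $\Lambda$ consist of the chord labels $\lambda \in \Lambda$ that refer to the twelve major and twelve minor triads, i.e.,

$$\Lambda = \{\mathrm{C}, \mathrm{C}^{\sharp}, \ldots, \mathrm{B}, \mathrm{Cm}, \mathrm{C}^{\sharp}\mathrm{m}, \ldots, \mathrm{Bm}\}. \tag{1.1}$$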

Mathematical Representation (cont’d)

• Definition 1.4

For an arbitrary time frame $f_n$, chord recognition for that frame assigns a chord label $\lambda_{f_n} \in \Lambda$ to $f_n$. Furthermore, if the temporal domain of $A$ is represented by the sequence of frames $[f_1, f_2, \ldots, f_N]$, then the chord recognition task for $A$ consists in assigning to each frame $f_n$ a chord label $\lambda_{f_n} \in \Lambda$.

Feature Extraction

• Pitch Features

• Chroma Features

• Chroma Features with Logarithmic Compression

• CENS Features

• CRP Features

Pitch

• Pitch: the perceived frequency of a sound.

• The pitch feature is very important.

• Before conducting any music recognition experiment, the correctness of the pitch feature should be verified first.

• Something special about pitch:

• Playing a song in C on the piano yields the same pitches as playing the song in C on another instrument.

• Playing a song in A# at tempo 60 yields the same pitches as playing it in A# at tempo 140.

Pitch Feature

• Pitch feature

• Able to recognize the frequency of a note.

• Able to recognize the center frequency of a chord or music segment.

• Unable to recognize the instrument.

• Unable to recognize the playing speed (cannot detect tempo).

• Strong onsets or string vibratos lead to energy spreading over a large frequency range, which may cause problems (remedy: smoothing frequency fluctuations).

Pitch Feature – Decomposed Pitch

• Decompose the audio signal into 88 frequency bands.

• They correspond to the 88 musical notes from A0 to C8.

• Each band is centered on a pitch of the equal-tempered scale.

• From equal temperament, let $p \in [1:120]$ denote the pitch number (MIDI numbering, in which the 88 notes A0 to C8 are $p \in [21:108]$), and let $f_p$ denote the center frequency of pitch $p$. Then we have the relation

$$f_p = 2^{\frac{p-69}{12}} \cdot 440\ \text{Hz} \approx 1.059 \cdot f_{p-1}. \tag{2.1}$$
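Equation (2.1) is easy to check numerically. This sketch assumes MIDI pitch numbering with A4 = 69 = 440 Hz, as in the equation; `center_frequency` is an illustrative name.

```python
def center_frequency(p: int) -> float:
    """Equal-tempered center frequency in Hz of MIDI pitch p (A4 = 69 = 440 Hz)."""
    return 2 ** ((p - 69) / 12) * 440.0


# The 88 piano keys A0..C8 are MIDI pitches 21..108
piano = {p: center_frequency(p) for p in range(21, 109)}

for name, p in [("A0", 21), ("C4", 60), ("A4", 69), ("C8", 108)]:
    print(name, round(center_frequency(p), 2))

# Ratio between neighbouring pitches: 2 ** (1 / 12), about 1.0595
print(center_frequency(61) / center_frequency(60))
```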

Pitch Feature – Decomposed Pitch (cont’d)

• Conclusion about the pitch feature

• Equation (2.1) reveals that the higher the pitch, the wider the frequency range it occupies.

• In other words, as the pitch gets higher, the bandwidth of the corresponding bandpass filter gets wider.
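To make the bandwidth claim concrete, assume (hypothetically, since the slides do not give the filter design) that each band spans half a semitone below and above its center $f_p$. The bandwidth is then proportional to $f_p$ and doubles with every octave.

```python
def band_edges(p: int) -> tuple:
    """Assumed band for pitch p: half a semitone below and above f_p."""
    f_p = 2 ** ((p - 69) / 12) * 440.0
    return f_p * 2 ** (-1 / 24), f_p * 2 ** (1 / 24)


def bandwidth(p: int) -> float:
    lo, hi = band_edges(p)
    return hi - lo


for p in (21, 60, 69, 108):
    print(p, round(bandwidth(p), 2))
```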

Pitch Feature – Decomposed Pitch (cont’d)

• The unit of measurement of the subband outputs is not always clear; therefore, to solve this problem, the paper also computes the short-time mean-square power (STMSP) of each note, i.e., the local energy of each note.

[Figure: C4: 261.63 Hz | E4: 329.63 Hz | G4: 392.00 Hz]

Pitch Feature – Local Energy (STMSP)

• Definition

• Denote the subband signal corresponding to pitch $p$ as $x_p$, let $k$ be the index of a sample point inside the window, and let $n$ be the starting position of a window of length $w$ on the signal. Then the STMSP is defined as $\sum_{k \in [n:n+w]} |x_p(k)|^2$.

Pitch Feature – Local Energy (STMSP)

• Implementation

• Slice the audio into windows.

• Shift the window along the signal until the end, each time by a hop size $h = \frac{\text{window size}}{2}$, yielding 50% overlap.

• The hop size is tied to the window size, and the choice of sizes affects the computational cost.

• For each window, compute the STMSP $\sum_{k \in [n:n+w]} |x_p(k)|^2$.
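The windowing and energy computation above can be sketched as follows, assuming the subband signal $x_p$ has already been produced by the filter bank (the filtering itself is not shown). The sum runs over $k \in [n:n+w]$ inclusive, exactly as in the definition, and the hop size is $w/2$; `stmsp` is an illustrative name.

```python
import numpy as np


def stmsp(x_p: np.ndarray, w: int) -> np.ndarray:
    """Local energy of subband x_p: sum over k in [n : n+w] of |x_p(k)|^2.

    Window starts n advance by the hop size h = w // 2 (50% overlap).
    Assumes w >= 2 so that the hop size is at least one sample.
    """
    h = w // 2
    energies = []
    for n in range(0, len(x_p) - w, h):        # keep n + w inside the signal
        segment = x_p[n:n + w + 1]             # k in [n : n+w], inclusive
        energies.append(np.sum(np.abs(segment) ** 2))
    return np.array(energies)


print(stmsp(np.ones(10), 4))
```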

Pitch Feature – Local Energy (STMSP)

[Figure: C4 (p = 60) | E4 (p = 64) | G4 (p = 67)]

Pitch Feature – Local Energy (STMSP)

• After extraction, some other frequency bands still show energy with low intensity, which affects the result of note identification (harmonics).

• Even when the recording consists of only one instrument, this affects the identification of notes.

How about real audio?

• In fact, this phenomenon is a common challenge in recognition tasks.

Pitch Feature – Local Energy (STMSP)

• Harmonics

• A harmonic of a wave is a component frequency of the signal that is an integer multiple of the fundamental frequency.
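The following sketch shows why harmonics spread energy into other pitch bands: the $h$-th harmonic lies $12 \log_2 h$ semitones above the fundamental, so a single C3 (MIDI pitch 48) also excites bands for G and E. `harmonic_pitches` is an illustrative helper, and rounding to the nearest equal-tempered pitch is an assumption.

```python
import math

NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def harmonic_pitches(p0: int, n_harmonics: int) -> list:
    """Nearest equal-tempered MIDI pitch of harmonics h = 1..n of pitch p0."""
    return [p0 + round(12 * math.log2(h)) for h in range(1, n_harmonics + 1)]


pitches = harmonic_pitches(48, 8)          # fundamental C3
print(pitches)                              # [48, 60, 67, 72, 76, 79, 82, 84]
print([NAMES[p % 12] for p in pitches])     # ['C', 'C', 'G', 'C', 'E', 'G', 'A#', 'C']
```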

Chroma Features

• Human perception of notes has a certain characteristic:

• If a note is one or more octaves higher than another note, the two notes sound as if they have the same tone pitch (chroma) but a different tone height.

• For example, A4 and A5 have the same chroma but different tone heights.

Chroma Features

• In chord recognition, we are more interested in the chroma and do not care about the tone height, so we can reduce the 88 pitches to 12 chroma values.

Chroma Feature

• According to equal temperament, a note one octave higher than another note has twice its frequency.

• A harmonic of a wave is a component frequency of the signal that is an integer multiple of the fundamental frequency. Hence the 2nd, 4th, 8th, … harmonics lie exact octaves above the fundamental and fall onto its chroma.

Chroma Feature

• Let the 12-dimensional vector be $x = (x(1), x(2), x(3), \ldots, x(12))^\top$.

• $x(1)$ represents C, $x(2)$ represents C#, and so on.

• After extracting the pitch feature, we have the short-time energy of each pitch. We then transform this representation from the pitch domain into the chroma domain by adding up the energies of all pitches that share the same chroma, which yields a chromagram.
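A minimal sketch of the pitch-to-chroma step, assuming a (120, N) matrix of STMSP energies whose row $i$ holds MIDI pitch $p = i + 1$; since MIDI pitch 60 is C4, `p % 12` maps C to chroma index 0. `pitch_to_chroma` is an illustrative name.

```python
import numpy as np


def pitch_to_chroma(pitch_energy: np.ndarray) -> np.ndarray:
    """Sum the energies of all pitches sharing a chroma.

    pitch_energy: shape (120, N); row i holds MIDI pitch p = i + 1.
    Returns shape (12, N); row 0 = C, row 1 = C#, ..., row 11 = B.
    """
    n_pitches, n_frames = pitch_energy.shape
    chroma = np.zeros((12, n_frames))
    for i in range(n_pitches):
        p = i + 1
        chroma[p % 12] += pitch_energy[i]
    return chroma


E = np.zeros((120, 1))
E[59, 0] = 1.0   # p = 60, C4
E[47, 0] = 2.0   # p = 48, C3 (same chroma, one octave lower)
E[63, 0] = 3.0   # p = 64, E4
print(pitch_to_chroma(E)[:, 0])
```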

Chroma Feature

• After the transformation (see left), the paper performs L2-normalization on the resulting vectors, which makes them independent of the overall sound intensity.

• Finally, a threshold is set: if the sum falls below this threshold, the original chroma vector is replaced by the unit vector whose entries are equal for all chromas.
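A minimal sketch of the normalization step, assuming nonnegative energies, a threshold greater than zero, and that the thresholded quantity is the plain sum of the chroma energies (the slides only say "the sum"). `normalize_chroma` is an illustrative name.

```python
import numpy as np


def normalize_chroma(chroma: np.ndarray, threshold: float) -> np.ndarray:
    """L2-normalize every column of a (12, N) chromagram.

    Columns whose energy sum falls below `threshold` (assumed > 0) are
    replaced by the uniform unit vector (1, ..., 1) / sqrt(12).
    """
    uniform = np.ones(12) / np.sqrt(12)
    out = np.empty(chroma.shape, dtype=float)
    for n in range(chroma.shape[1]):
        col = chroma[:, n].astype(float)
        if col.sum() < threshold:
            out[:, n] = uniform
        else:
            out[:, n] = col / np.linalg.norm(col)
    return out


C = np.zeros((12, 2))
C[0, 0], C[4, 0] = 3.0, 4.0   # a sounding frame
print(normalize_chroma(C, 1.0))  # second (silent) frame becomes uniform
```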

Chroma Feature

• In Figure (a), the onset is clearly visible in the first 0.5 s, but afterwards we cannot tell the state of the note (whether it is silent or still sounding).

• In Figure (b), if the note is silent, we can easily identify the unit vector whose energy is the same for all chromas.

Chroma Feature

• This feature is still imperfect: from 5.8 to 7.5 s, the root note G is much stronger than notes C and B. After L2-normalization this difference persists, so the weak notes fail to stand out and are effectively lost, which causes a problem.

Chroma Feature with Logarithmic Compression

• Logarithmic compression

• Adjusts the dynamic range of the original signal to enhance the clarity of weaker transients, especially at high frequencies.

• In other words, some chroma components have very low intensity, and we try to enhance them.
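A small sketch of logarithmic compression, assuming the common form $\log(1 + \eta \cdot e)$ applied entrywise to energies $e$; the value $\eta = 1000$ is an assumption chosen to match the CLP(1000) setting mentioned in the experiments. The ratio between a strong and a weak component shrinks sharply after compression.

```python
import numpy as np


def log_compress(energy: np.ndarray, eta: float = 1000.0) -> np.ndarray:
    """Entrywise logarithmic compression log(1 + eta * energy)."""
    return np.log1p(eta * np.asarray(energy, dtype=float))


energies = np.array([1.0, 0.01])        # a strong and a weak component
compressed = log_compress(energies)
print(energies[0] / energies[1])        # ratio before compression
print(compressed[0] / compressed[1])    # ratio after compression (much smaller)
```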

Chroma Feature with logarithmic Compression

CENS Features

• The chroma feature already achieves the goal, but it can be further improved by considering variations in musical properties.

• E.g., dynamics, timbre, articulation, etc.

• To be robust against these variations, we add short-time statistics over the energy distribution: CENS (Chroma Energy Normalized Statistics) features.

CENS Features (cont’d)

• 5 stages for computing CENS features

1. Normalization

• L1-normalization

• Absorbs differences in sound intensity or dynamics.

2. Quantization

• Quantize the components based on logarithmically chosen thresholds to simulate human perception of loudness (i.e., dB).

CENS Features (cont’d)

• 5 stages for computing CENS features

3. Smoothing

• Blends in context information and reduces the influence of local errors.

4. Downsampling

• Reduces the sampling rate by an integer factor.

• Improves computational efficiency.

CENS Features (cont’d)

• 5 stages for computing CENS features

5. Normalization

• L2-normalization

Then, denote the resulting CENS feature by CENS(w, d):

• w: size of the convolution (smoothing) window

• d: downsampling rate
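The five stages can be sketched in NumPy as below. The quantization thresholds (0.05, 0.1, 0.2, 0.4), the Hann smoothing window, and the defaults w = 41, d = 10 are assumptions for illustration, not values taken from the slides; the sketch also assumes at least w frames.

```python
import numpy as np


def cens(chroma: np.ndarray, w: int = 41, d: int = 10) -> np.ndarray:
    """CENS(w, d) sketch for a (12, N) chromagram with N >= w."""
    # 1. L1-normalization of every frame (absorbs dynamics)
    l1 = np.abs(chroma).sum(axis=0, keepdims=True)
    x = np.divide(chroma, l1, out=np.zeros(chroma.shape), where=l1 > 0)
    # 2. Quantization with logarithmically spaced thresholds (assumed values)
    q = np.zeros_like(x)
    for t in (0.05, 0.1, 0.2, 0.4):
        q += x > t
    # 3. Smoothing along time with a Hann window of length w
    win = np.hanning(w)
    smoothed = np.array([np.convolve(row, win, mode="same") for row in q])
    # 4. Downsampling by the integer factor d
    down = smoothed[:, ::d]
    # 5. L2-normalization of every frame
    l2 = np.linalg.norm(down, axis=0, keepdims=True)
    return np.divide(down, l2, out=np.zeros(down.shape), where=l2 > 0)


rng = np.random.default_rng(0)
features = cens(rng.random((12, 100)) + 0.1)
print(features.shape)  # 100 frames downsampled by 10
```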

CENS Features(cont’d)

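CRP Features

• The general idea: discard timbre-related information.

• Analogous to the step from MFCCs (mel-frequency cepstral coefficients) to PFCCs (pitch-frequency cepstral coefficients).

• Applies logarithmic compression and uses the Discrete Cosine Transform (DCT) to transform the logarithmized pitch representation; the lower DCT coefficients, which mainly capture timbre, are discarded before transforming back and projecting onto the chroma domain (CRP: Chroma DCT-Reduced log Pitch).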
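A NumPy-only sketch of the CRP idea: log-compress the pitch energies, apply an orthonormal DCT-II across the 120 pitch bands, zero the lower coefficients, invert, and fold into 12 chroma bins. The parameters eta = 1000 and n_drop = 55 are assumed defaults for illustration. A pitch profile that is constant across bands (a purely "timbral" envelope) is removed entirely.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix (rows are the cosine basis vectors)."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] /= np.sqrt(2.0)
    return m


def crp(pitch_energy: np.ndarray, eta: float = 1000.0, n_drop: int = 55) -> np.ndarray:
    """pitch_energy: (120, N), row i = MIDI pitch i + 1. Returns (12, N)."""
    v = np.log1p(eta * pitch_energy)        # logarithmic compression
    D = dct_matrix(v.shape[0])
    c = D @ v                               # pitch-frequency cepstrum
    c[:n_drop, :] = 0.0                     # drop lower (timbre-like) coefficients
    v_hat = D.T @ c                         # back to the pitch domain
    chroma = np.zeros((12, v.shape[1]))
    for i in range(v.shape[0]):
        chroma[(i + 1) % 12] += v_hat[i]    # fold pitches onto chroma (C = 0)
    norms = np.linalg.norm(chroma, axis=0, keepdims=True)
    # Frames with (near-)zero energy are left as zero vectors
    return np.divide(chroma, norms, out=np.zeros_like(chroma), where=norms > 1e-9)


print(np.abs(crp(np.ones((120, 3)))).max())  # flat profile is discarded
```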

CRP Features

CISP Features

• While generating the chroma representation, detail is lost.

• The paper therefore adapts the chroma feature extraction according to Dan Ellis's instantaneous-frequency-based chromagram, and names the result CISP features.

• CISP features give a finer estimate of frequency.
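The basic idea behind instantaneous-frequency estimation can be sketched with two STFT frames a small hop apart: the phase advance of the peak bin reveals the true frequency far more precisely than the bin spacing. This shows only the general phase-difference principle, not Dan Ellis's actual implementation; the names and parameters are assumptions, and the estimate is valid while the true frequency lies within sr / (2 * hop) of the bin center.

```python
import numpy as np


def instantaneous_frequency(x: np.ndarray, sr: float, n_fft: int = 1024, hop: int = 64) -> float:
    """Frequency of the strongest peak, from the phase advance over `hop` samples."""
    win = np.hanning(n_fft)
    X0 = np.fft.rfft(win * x[:n_fft])
    X1 = np.fft.rfft(win * x[hop:hop + n_fft])
    k = int(np.argmax(np.abs(X0)))                 # peak bin
    expected = 2 * np.pi * k * hop / n_fft         # phase advance at bin k's center
    dphi = np.angle(X1[k]) - np.angle(X0[k]) - expected
    dphi = (dphi + np.pi) % (2 * np.pi) - np.pi    # wrap deviation to [-pi, pi)
    return k * sr / n_fft + dphi * sr / (2 * np.pi * hop)


sr = 22050
t = np.arange(4096) / sr
x = np.cos(2 * np.pi * 440.0 * t)
print(sr / 1024)                          # FFT bin spacing, about 21.5 Hz
print(instantaneous_frequency(x, sr))     # close to 440 Hz
```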

CISP Features

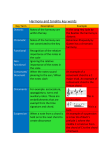

Feature Extraction – Conclusion

Feature | Means | Remaining problem

Decomposed pitch | Precise frequency | The result is not always clear.

STMSP features | Energy spreading in pitch space | Harmonics

Chroma features | Energy spreading in chroma space | Notes much weaker than the root note are erased.

Chroma features with log compression | Enhance low intensity at high frequencies | Already achieves the goal, but lacks robustness to some variations in musical properties.

CENS features | Add a further degree of robustness | –

CRP features | Boost the degree of timbre invariance | –

CISP features | Identify strong tonal components in the spectrum | –

Methods for Chord Recognition

Template-Based Chord Recognition

• Template matching

• A technique for finding small parts of an image that match a template image.

• Template-based approach

• Suited to templates without strong features, or to cases where the bulk of the template image constitutes the matching image.

Template-Based Chord Recognition

• Template-based chord recognition

• Step 1. Define the set of templates.

• Step 2. Choose a distance measure.

• Step 3. Compute the distance between each feature vector and each chord template.

Template-Based Chord Recognition

• Step 1. Define the set of templates

• Specify the chord template set:

• Binary templates

• Harmonically enriched templates

• Averaged templates

Step 1. Define the set of templates

• Binary templates

• The template set $\Gamma$ is a subset of the feature space $F = \mathbb{R}^{12}$.

• The set of binary templates $\Gamma_b$ has elements $t^b_\lambda$ ($\lambda \in \Lambda$).

• E.g., the chord label C = {C, E, G}:

$$t^b_{\mathrm{C}} = (1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0)^\top$$
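All 24 binary templates for the label set $\Lambda$ (12 major, 12 minor triads) can be generated by placing ones at the root, the third (4 semitones for major, 3 for minor), and the fifth (7 semitones). A minimal sketch; `binary_templates` is an illustrative name.

```python
import numpy as np

NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def binary_templates() -> dict:
    """Binary templates t^b for the 24 major ("C") and minor ("Cm") triads."""
    templates = {}
    for root in range(12):
        for suffix, third in (("", 4), ("m", 3)):
            t = np.zeros(12)
            t[[root, (root + third) % 12, (root + 7) % 12]] = 1.0
            templates[NAMES[root] + suffix] = t
    return templates


T = binary_templates()
print(T["C"])
print(T["Am"])
```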

Step 1. Define the set of templates

• Harmonically enriched templates

• Set of harmonically enriched templates $\Gamma_h$ with elements $t^h_\lambda$.

• The weight of each harmonic is a power of an empirical decay factor $s$ (the $h$-th harmonic is weighted by $s^{h-1}$), which models the decreasing amplitude of higher harmonics.

• Following the experiments of Emilia Gómez, $s = 0.6$.

• To avoid zero weights, entries that would otherwise be zero are set to a very small value, 0.005.

Step 1. Define the set of templates

• E.g., the chord label C = {C, E, G}:

$$t^h_{\mathrm{C}} = (0.254, 0.005, 0.061, 0.005, 0.272, 0.005, 0.005, 0.315, 0.018, 0.005, 0.005, 0.079)^\top$$
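A sketch of the harmonically enriched template. The number of harmonics per chord note is not stated on the slides; assuming six harmonics, weight $s^{h-1}$ with $s = 0.6$, L1-normalization, and replacing still-empty entries by 0.005 afterwards, this construction reproduces the example vector for C to three decimals. `enriched_template` is an illustrative name.

```python
import math

import numpy as np


def enriched_template(chord_pcs, s: float = 0.6, n_harmonics: int = 6,
                      floor: float = 0.005) -> np.ndarray:
    """Harmonically enriched chroma template; pitch classes 0 = C, ..., 11 = B."""
    t = np.zeros(12)
    for pc in chord_pcs:
        for h in range(1, n_harmonics + 1):
            # the h-th harmonic lies round(12 * log2(h)) semitones above the note
            t[(pc + round(12 * math.log2(h))) % 12] += s ** (h - 1)
    t /= t.sum()        # L1-normalization
    t[t == 0] = floor   # avoid zero weights
    return t


print(np.round(enriched_template([0, 4, 7]), 3))  # C major: C, E, G
```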

Step 1. Define the set of templates

Averaged templates

1. Divide the training data into several parts.

2. Extract features.

3. Match the labels.

4. If the label does not match, apply CyclicallyShift(label, C), then go to step 3.

5. Average the matched features to generate the template.

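Step 1. Define the set of templates

• Algorithm CyclicallyShift(chord label $\lambda_1$, chord label $\lambda_2$): returns feature frame $x_2$

1. Let $d_{\text{chord}}$ be the interval between $\lambda_1$ and $\lambda_2$.

2. Let $M\colon \Lambda \to \mathbb{Z}$ be a mapping function that maps each chord label to an integer $i \in [1:24]$.

3. Set $d_{\text{chord}} = |M(\lambda_1) - M(\lambda_2)|$.

4. Denote the feature frame corresponding to $\lambda_i$ by $x_i$.

5. Then the feature frame is $x_2 = \sigma^{d_{\text{chord}}(\lambda_1, \lambda_2)}(x_1)$, where $\sigma$ denotes the cyclic shift of the chroma vector by one position.

end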
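A simplified sketch of averaged templates built with cyclic shifts: every labelled major-chord frame is rotated so that its root lands on C, the rotated frames are averaged into a C-major template, and the other 11 major templates are obtained by rotating it back. Handling only major chords and shifting each frame directly to C (rather than the paper's match-and-retry loop) are simplifying assumptions; the function names are hypothetical.

```python
import numpy as np


def cyclic_shift(x: np.ndarray, d: int) -> np.ndarray:
    """sigma^d: rotate a chroma vector so entry i moves to index (i + d) mod 12."""
    return np.roll(x, d)


def averaged_major_templates(frames: np.ndarray, roots) -> np.ndarray:
    """frames: (N, 12) chroma vectors labelled with major chords;
    roots: root index of each label (0 = C, ..., 11 = B).
    Returns (12, 12): row r is the averaged template of the major chord on r."""
    to_c = np.array([cyclic_shift(x, -int(r)) for x, r in zip(frames, roots)])
    t_c = to_c.mean(axis=0)                      # averaged C-major template
    return np.array([cyclic_shift(t_c, r) for r in range(12)])


d_major = np.zeros(12); d_major[[2, 6, 9]] = 1.0    # D, F#, A
g_major = np.zeros(12); g_major[[7, 11, 2]] = 1.0   # G, B, D
templates = averaged_major_templates(np.array([d_major, g_major]), [2, 7])
print(templates[0])  # C-major template
```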

Averaged Templates – Sample

[Figure: features of an audio file's segment sequence]

Step 2. Distance Measure

• Use the cosine measure to compare how similar test data and templates are.

• Cosine measure

$$d_C(x, y) = 1 - \frac{\langle x \mid y \rangle}{\|x\| \cdot \|y\|}$$

If $x = 0$ or $y = 0$, then $d_C(x, y) = \frac{\|x - y\|}{2}$.

Step 3. Compute the distance between feature vectors and chord templates

• For binary templates

• For harmonically enriched templates

• For averaged templates
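Steps 2 and 3 combined: each frame receives the label of the template with the smallest cosine distance. This sketch assumes nonzero vectors (silent frames are assumed to have been replaced by the uniform vector earlier) and does not model the zero-vector case; the three toy templates and frames are made up for illustration.

```python
import numpy as np


def cosine_distance(x: np.ndarray, y: np.ndarray) -> float:
    """d_C(x, y) = 1 - <x|y> / (||x|| * ||y||) for nonzero x and y."""
    nx, ny = np.linalg.norm(x), np.linalg.norm(y)
    if nx == 0 or ny == 0:
        raise ValueError("this sketch assumes nonzero vectors")
    return 1.0 - float(np.dot(x, y)) / (nx * ny)


def recognize(frames: np.ndarray, templates: dict) -> list:
    """Assign to every frame (row of `frames`) the label of the closest template."""
    return [min(templates, key=lambda lab: cosine_distance(x, templates[lab]))
            for x in frames]


templates = {
    "C": np.array([1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0], dtype=float),
    "Am": np.array([1, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0], dtype=float),
    "G": np.array([0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1], dtype=float),
}
frames = np.array([
    [0.9, 0.0, 0.1, 0.0, 0.8, 0.0, 0.0, 1.0, 0.0, 0.1, 0.0, 0.0],  # mostly C, E, G
    [0.1, 0.0, 0.9, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.8],  # mostly G, B, D
])
print(recognize(frames, templates))
```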

Result

(a) Binary templates

(b) Harmonically enriched templates

(c) Averaged templates

Result

(c) Averaged templates

Statistical Model-based Chord Recognition

• Statistical model

• A formalization of relationships between variables in the form of mathematical equations.

• Describes how one or more random variables are related to one or more other variables.

Statistical Model-based Chord Recognition

• Statistical model-based chord recognition

• Introduce the multivariate Gaussian model.

• Present three chord recognition methods based on this model:

• Mahalanobis distance-based method

• Gaussian probability-based method

• Hidden Markov model-based method

Multivariate Gaussian Distribution

• Consider the feature vector to be a 12-dimensional vector that follows a Gaussian distribution.

• A chord pattern can thus be described by a 12-dimensional distribution.

• The Gaussian distribution is completely determined by its mean vector and covariance matrix.

Multivariate Gaussian Distribution

• Mean vector

• Let $x \in \mathbb{R}^D$ be a $D$-dimensional random vector whose entries all have finite variance.

• The mean vector of $x$ is the vector $\mu$ consisting of the expectations of the elements of $x$; concretely,

$$\mu = E[x] = (E[x(1)], \ldots, E[x(D)])^\top.$$

The mean vector is the centroid of the distribution.

Multivariate Gaussian Distribution

• Covariance matrix

• Let $x \in \mathbb{R}^D$ be a $D$-dimensional random vector whose elements all have finite variance. Then the covariance matrix $\Sigma \in \mathbb{R}^{D \times D}$ is the matrix whose $(i, j)$th entry is the covariance

$$\Sigma_{ij} = \operatorname{cov}(x(i), x(j)) = E[(x(i) - \mu(i))(x(j) - \mu(j))], \quad \text{where } \mu(i) = E[x(i)].$$

This definition can be rewritten as

$$\Sigma = E[(x - E[x])(x - E[x])^\top].$$

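Multivariate Gaussian Distribution

• Specification as a chord model

• Denote the covariance matrices of C and Cm by $\Sigma_{\mathrm{C}}$ and $\Sigma_{\mathrm{Cm}}$.

• Denote the mean vectors of C and Cm by $\mu_{\mathrm{C}}$ and $\mu_{\mathrm{Cm}}$.

• Compute the density functions of C and Cm and denote them by $f_{\mathrm{C}}$ and $f_{\mathrm{Cm}}$.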
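Estimating a chord model from labelled frames amounts to computing the sample mean and covariance. A minimal sketch; the small diagonal ridge `reg` is an assumption added because chroma covariances can be singular, and `fit_gaussian` is an illustrative name.

```python
import numpy as np


def fit_gaussian(frames: np.ndarray, reg: float = 1e-6):
    """Estimate (mu, Sigma) of a chord model from (N, 12) labelled chroma frames."""
    mu = frames.mean(axis=0)
    sigma = np.cov(frames, rowvar=False)            # unbiased: divides by N - 1
    sigma = sigma + reg * np.eye(frames.shape[1])   # ridge keeps Sigma invertible
    return mu, sigma


rng = np.random.default_rng(0)
frames_c = rng.normal(size=(200, 12))   # stand-in for frames labelled "C"
mu_c, sigma_c = fit_gaussian(frames_c)
print(mu_c.shape, sigma_c.shape)
```

One such (mu, Sigma) pair is fitted per chord label, e.g. one for C and one for Cm.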

Multivariate Gaussian Distribution

1. Use a Gaussian distribution to model the data.

2. Train on the labels using some method.

3. Match the labels.

4. If the labels do not match, apply CyclicallyShift() and go to step 3.

5. Testing.

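Mahalanobis Distance-Based Method

• A very useful way of determining the similarity of a set of values from an unknown sample to a set of values measured from a collection of known samples.

• Definition:

• Let $x \in F \setminus \{0\}$ be a feature vector, let $D$ be the dimension of $F$, and let $\mu \in \mathbb{R}^D$ be the mean vector and $\Sigma \in \mathbb{R}^{D \times D}$ the covariance matrix of a multivariate normal distribution. Then we define the Mahalanobis distance $d_M = d_M^{\mu, \Sigma}\colon F \times F \to \mathbb{R}$ with respect to $\mu, \Sigma$ by

$$d_M(x, y) = \sqrt{(x - y)^\top \Sigma^{-1} (x - y)},$$

which is applied with $y = \mu$ to measure how far $x$ lies from the chord distribution.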

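Mahalanobis Distance-Based Method

• If the matrix $\Sigma$ is the identity matrix, the Mahalanobis distance reduces to the Euclidean distance. If $\Sigma$ is diagonal, with diagonal entries $\sigma_1^2, \ldots, \sigma_D^2$, the resulting distance measure reduces to the normalized Euclidean distance:

$$d_M(x, y) = \sqrt{\sum_{i=1}^{D} \frac{(x(i) - y(i))^2}{\sigma_i^2}}.$$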
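A minimal sketch of the Mahalanobis distance. It solves a linear system instead of explicitly inverting $\Sigma$, which is a numerical-stability choice, not something the slides specify. The usage lines check the two special cases above: identity covariance gives the Euclidean distance, and a diagonal covariance gives the normalized Euclidean distance.

```python
import numpy as np


def mahalanobis(x, mu, sigma) -> float:
    """d_M(x, mu) = sqrt((x - mu)^T Sigma^{-1} (x - mu))."""
    diff = np.asarray(x, dtype=float) - np.asarray(mu, dtype=float)
    return float(np.sqrt(diff @ np.linalg.solve(sigma, diff)))


x, mu = np.array([3.0, 4.0]), np.zeros(2)
print(mahalanobis(x, mu, np.eye(2)))              # Euclidean distance: 5
print(mahalanobis(x, mu, np.diag([9.0, 16.0])))   # sqrt((3/3)^2 + (4/4)^2)
```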

Gaussian Probability-Based Method

• This method quantifies the relationship between a feature vector and a distribution by a probability.

• The density function of the Gaussian distribution:

$$f(x) = \frac{1}{(2\pi)^{D/2} |\Sigma|^{1/2}} \exp\left(-\frac{1}{2} (x - \mu)^\top \Sigma^{-1} (x - \mu)\right)$$

• The density function shows how likely it is that the feature vector fits the distribution of a chord pattern.
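In practice the density is usually evaluated in the log domain to avoid underflow in 12 dimensions. A minimal sketch that assigns a frame to the chord model with the highest log density; the `models` dict layout (label mapped to a (mu, Sigma) pair) is an assumption for illustration.

```python
import numpy as np


def gaussian_log_density(x, mu, sigma) -> float:
    """log f(x) for a multivariate normal with mean mu and covariance sigma."""
    x = np.asarray(x, dtype=float)
    mu = np.asarray(mu, dtype=float)
    diff = x - mu
    sign, logdet = np.linalg.slogdet(sigma)
    if sign <= 0:
        raise ValueError("covariance matrix must be positive definite")
    maha2 = float(diff @ np.linalg.solve(sigma, diff))
    return -0.5 * (len(mu) * np.log(2 * np.pi) + logdet + maha2)


def classify(x, models: dict) -> str:
    """Pick the chord label whose Gaussian model gives x the highest density."""
    return max(models, key=lambda label: gaussian_log_density(x, *models[label]))


models = {"A": (np.zeros(2), np.eye(2)), "B": (np.full(2, 5.0), np.eye(2))}
print(classify(np.array([4.5, 5.2]), models))
```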

Hidden Markov Model-Based Method

• Hidden Markov model-based (HMM-based) method

• A stochastic model that fulfills the Markov property.

• An HMM can be represented by its initial probabilities, observation probabilities, and transition probabilities.

• Hidden states, observations, and transitions

• Adapting these parameters to chord recognition:

• Hidden state: a chord state

• Observation: a feature vector

• Transition probability from chord state 1 to chord state 2:

$$a_{12} = \frac{\text{number of chord jumps from state 1 to state 2}}{\text{number of chord jumps from state 1 to any state}}$$
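The counting rule for transition probabilities can be sketched as follows. The denominator here counts all transitions leaving a state, including self-transitions, and states never left get a uniform row; both are assumptions for illustration, and `transition_matrix` is a hypothetical name.

```python
import numpy as np


def transition_matrix(label_seqs, labels) -> np.ndarray:
    """Row i, column j: (# jumps i -> j) / (# jumps out of i) over all sequences."""
    idx = {lab: i for i, lab in enumerate(labels)}
    counts = np.zeros((len(labels), len(labels)))
    for seq in label_seqs:
        for a, b in zip(seq[:-1], seq[1:]):
            counts[idx[a], idx[b]] += 1
    rows = counts.sum(axis=1, keepdims=True)
    uniform = np.full_like(counts, 1.0 / len(labels))   # for states never left
    return np.divide(counts, rows, out=uniform, where=rows > 0)


A = transition_matrix([["C", "C", "G", "C"]], ["C", "G"])
print(A)
```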

Hidden Markov Model-Based Method

• Viterbi algorithm

• A dynamic programming (DP) algorithm for finding the most likely sequence of hidden states, called the Viterbi path.
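A log-domain sketch of the Viterbi algorithm, assuming the per-frame observation log-likelihoods are already available (for example from Gaussian chord models); the argument layout is an assumption. The usage example shows the smoothing effect: with "sticky" transitions, one ambiguous frame does not cause a chord change, while a sustained change does.

```python
import numpy as np


def viterbi(log_init: np.ndarray, log_trans: np.ndarray, log_obs: np.ndarray) -> list:
    """log_init: (K,), log_trans: (K, K) with [i, j] = log P(j | i), log_obs: (N, K)."""
    N, K = log_obs.shape
    delta = log_init + log_obs[0]               # best log score ending in each state
    back = np.zeros((N, K), dtype=int)          # backpointers
    for n in range(1, N):
        scores = delta[:, None] + log_trans     # scores[i, j]: from state i to state j
        back[n] = np.argmax(scores, axis=0)
        delta = scores[back[n], np.arange(K)] + log_obs[n]
    path = [int(np.argmax(delta))]
    for n in range(N - 1, 0, -1):               # trace the backpointers
        path.append(int(back[n, path[-1]]))
    return path[::-1]


log_init = np.log([0.5, 0.5])
log_trans = np.log([[0.9, 0.1], [0.1, 0.9]])
obs = np.log([[0.9, 0.1], [0.9, 0.1], [0.4, 0.6], [0.9, 0.1], [0.9, 0.1]])
print(viterbi(log_init, log_trans, obs))
```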

Experiment

• Dataset

• Evaluation

• Experiment results of features

• Experiment on chord recognition methods

Dataset

• Take all 180 songs as the whole dataset $D^{\text{Beatles}}$.

• Further partition it into three parts:

• $D_1^{\text{Beatles}}$: 60 songs

• $D_2^{\text{Beatles}}$: 60 songs

• $D_3^{\text{Beatles}}$: 60 songs

Dataset

• Select 4 songs to represent classical music.

• Select the Beatles' albums to represent pop music.

Dataset

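Evaluation

• Using the F1-measure

$$\text{Precision} = \frac{|\{\text{relevant documents}\} \cap \{\text{retrieved documents}\}|}{|\{\text{retrieved documents}\}|}$$

$$\text{Recall} = \frac{|\{\text{relevant documents}\} \cap \{\text{retrieved documents}\}|}{|\{\text{relevant documents}\}|}$$

$$F = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$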
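For frame-wise chord recognition, one natural (assumed) reading of "documents" is the set of (frame index, chord label) pairs. A minimal sketch; note that when every frame receives exactly one label in both sets, precision, recall, and F all equal the frame accuracy.

```python
def precision_recall_f(relevant: set, retrieved: set) -> tuple:
    """Precision, recall and F-measure of two sets of items."""
    hit = len(relevant & retrieved)
    p = hit / len(retrieved) if retrieved else 0.0
    r = hit / len(relevant) if relevant else 0.0
    f = 2 * p * r / (p + r) if p + r > 0 else 0.0
    return p, r, f


ground_truth = {(0, "C"), (1, "C"), (2, "G"), (3, "G")}
estimated = {(0, "C"), (1, "Am"), (2, "G"), (3, "G")}
print(precision_recall_f(ground_truth, estimated))  # 3 of 4 frames correct
```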

Experiment on Feature Extraction

This table shows the accuracy of all features and all methods.

Training data: $D_1^{\text{Beatles}}$

Testing data: $D^{\text{Beatles}}$

Experiment on Feature Extraction

This table shows the accuracy of all features and all methods.

Training data: $D_2^{\text{Beatles}}$

Testing data: $D^{\text{Beatles}}$

Experiment on Feature Extraction

• Effect of logarithmic compression

[Figure: comparison of different logarithmic compression settings on the chroma feature]

Experiment on Chord Recognition Methods

Error Analysis

Tuning

• Sometimes the music recording deviates from standard tuning. Thus, we allow some deviation in the bandpass filters.

• Use 6 different filter banks, shifted by deviations of [0, 0.25, 0.33, 0.5, 0.67, 0.75] semitones, to extract the frequencies, and apply them.
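Shifting a filter bank by δ semitones moves every center frequency from $f_p$ to $f_p \cdot 2^{\delta/12}$. The sketch below picks the shift whose grid lies closest to a set of observed frequencies; this simple selection rule is an assumption for illustration, not necessarily how the paper chooses among the six filter banks.

```python
import math

DEVIATIONS = [0.0, 0.25, 0.33, 0.5, 0.67, 0.75]  # fractions of a semitone


def best_deviation(freqs, deviations=DEVIATIONS) -> float:
    """Pick the filter-bank shift whose pitch grid lies closest to `freqs` (Hz)."""
    def cost(delta: float) -> float:
        total = 0.0
        for f in freqs:
            pitch = 69 + 12 * math.log2(f / 440.0) - delta  # pitch on shifted grid
            total += abs(pitch - round(pitch))               # distance to nearest band
        return total
    return min(deviations, key=cost)


print(best_deviation([440.0 * 2 ** (0.5 / 12)]))   # recording tuned a quarter tone sharp
```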

Effect of tuning (training data: $D_1^{\text{Beatles}}$; testing data: $D^{\text{Beatles}}$)

+ : with tuning

− : without tuning (do nothing)

[Figure: histogram of recognition results with and without tuning]

Using a New Feature: Beat-Synchronized Features for HMM and Binary Templates

Using a Different Dataset

Harmonic Percussive Source Separation (HPSS)

• Remove the percussive component from the original audio file and perform more robust chord recognition on the remaining harmonic component.

• Recompute the features from the separated harmonic component of each audio file, then evaluate the recognition accuracies using the same recognizers as before.
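The slides do not say which separation algorithm is used. As one well-known illustration, the sketch below uses median-filtering HPSS (Fitzgerald's approach, swapped in here, not necessarily the paper's method) on a magnitude spectrogram: harmonic energy is smooth along time, percussive energy is smooth along frequency, and each bin goes to whichever median is larger. The filter lengths are assumed values.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _median_filter(S: np.ndarray, length: int, axis: int) -> np.ndarray:
    """Median filter of odd `length` along one axis of a 2-D array (edge padding)."""
    pad = [(0, 0), (0, 0)]
    pad[axis] = (length // 2, length // 2)
    windows = sliding_window_view(np.pad(S, pad, mode="edge"), length, axis=axis)
    return np.median(windows, axis=-1)


def hpss(S: np.ndarray, l_harm: int = 17, l_perc: int = 17):
    """Split a (freq, time) magnitude spectrogram into harmonic and percussive parts."""
    H = _median_filter(S, l_harm, axis=1)   # smooth along time: harmonic
    P = _median_filter(S, l_perc, axis=0)   # smooth along frequency: percussive
    mask_h = H >= P                         # binary mask
    return S * mask_h, S * ~mask_h


S = np.zeros((32, 32))
S[10, :] = 1.0   # steady tone: horizontal line
S[:, 20] = 1.0   # drum hit: vertical line
harmonic, percussive = hpss(S)
```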

[Figure: differences between features with and without HPSS]

[Figure: maximum increase in recognition accuracy with HPSS compared to without]

[Figure: maximum decrease in recognition accuracy with HPSS compared to without]

[Figure: histogram of recognition-accuracy differences with HPSS, using the feature CLP(1000)]

[Figure: recognition accuracies on the classical dataset using 𝑻𝑫 (test: 𝑫𝟒)]

[Figure: recognition accuracies on the classical dataset using HMM]

Summary

• Try to enhance the features.

• Use HPSS pre-processing.

• Add more information to the features, such as the bass note of the chord and key information.