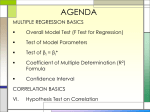

Chapter 15: Multiple Regression

§15.1 Regression Model and Regression Equation

Multiple Regression Model

$$y = \mu_{Y|X} + \varepsilon = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p + \varepsilon$$

Multiple Regression Equation

$$E(y) = \mu_{Y|X} = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p$$

Estimated Multiple Regression Equation

$$\hat{y} = b_0 + b_1 X_1 + b_2 X_2 + \dots + b_p X_p$$

where $b_0, b_1, b_2, \dots, b_p$ are the estimates of $\beta_0, \beta_1, \beta_2, \dots, \beta_p$ and $\hat{y}$ = predicted value of $y$.

§15.2 Least Squares Method

Determine the values of $b_0, b_1, b_2, \dots, b_p$ from sample data such that the least squares criterion, i.e. the Sum of Squares due to Error, $SSE = \sum (Y_i - \hat{Y}_i)^2$, is a minimum. We will use Excel Data Analysis to develop these estimates.

§15.3 Multiple Coefficient of Determination

Sums of Squares

SST = Total Sum of Squares = $\sum (Y_i - \bar{Y})^2$
SSR = Sum of Squares due to Regression = $\sum (\hat{Y}_i - \bar{Y})^2$
SSE = Sum of Squares due to Error = $\sum (Y_i - \hat{Y}_i)^2$
SST = SSR + SSE

ANOVA Table

| Source | Sum of squares | Df | Mean Square | F-test |
|---|---|---|---|---|
| Regression | SSR | p | MSR = SSR/p | F = MSR/MSE |
| Error | SSE | n-p-1 | MSE = SSE/(n-p-1) | |
| Total | SST | n-1 | | |

Multiple Coefficient of Determination

$$R^2 = \frac{SSR}{SST}$$

$R^2$ is a measure of the goodness of fit for the estimated regression equation.

Adjusted Multiple Coefficient of Determination

$$R_a^2 = 1 - (1 - R^2)\,\frac{n-1}{n-p-1}$$

$R_a^2$ is a measure of the goodness of fit for the estimated regression equation which accounts for the number of independent variables in the model.

§15.4 Model Assumptions

Multiple Regression Model: $y = \mu_{Y|X} + \varepsilon = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p + \varepsilon$

1. The error term $\varepsilon$ is a random variable with a mean or expected value of zero; i.e. $E(\varepsilon) = 0$. This also means $E(y) = \mu_{Y|X} = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p$.
2. The variance of $\varepsilon$, denoted by $\sigma^2$, is the same for all values of the independent variables $X_1, X_2, \dots, X_p$.
3. The values of $\varepsilon$ are independent.
4. The error term $\varepsilon$ is a normally distributed random variable. Therefore, Y is also a normally distributed random variable.

§15.5 Testing for significance

$H_0$: $\beta_1 = \beta_2 = \dots = \beta_p = 0$
$H_a$: One or more of the parameters is not equal to zero.
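The quantities defined in §15.2 and §15.3 can be sketched numerically. The notes use Excel Data Analysis for this; the following is only an illustrative Python equivalent using NumPy, and the small data set (n = 6, p = 2) is hypothetical, made up purely to show the arithmetic.

```python
import numpy as np

# Hypothetical sample (not from the notes): n = 6 observations, p = 2 variables.
X = np.array([[1.0, 2.0],
              [2.0, 1.0],
              [3.0, 4.0],
              [4.0, 3.0],
              [5.0, 6.0],
              [6.0, 5.0]])
y = np.array([3.1, 3.9, 7.2, 7.8, 11.1, 11.9])

n, p = X.shape
A = np.column_stack([np.ones(n), X])        # column of ones for the intercept b0
b, *_ = np.linalg.lstsq(A, y, rcond=None)   # b0, b1, ..., bp that minimize SSE
y_hat = A @ b                               # predicted values

sse = np.sum((y - y_hat) ** 2)              # Sum of Squares due to Error
ssr = np.sum((y_hat - y.mean()) ** 2)       # Sum of Squares due to Regression
sst = np.sum((y - y.mean()) ** 2)           # Total Sum of Squares

msr = ssr / p                               # Mean Square due to Regression
mse = sse / (n - p - 1)                     # Mean Square due to Error
r2 = ssr / sst                              # multiple coefficient of determination
r2_adj = 1 - (1 - r2) * (n - 1) / (n - p - 1)
f_calc = msr / mse                          # F statistic from the ANOVA table

print("estimates b0..bp:", b)
print("SST:", sst, "SSR + SSE:", ssr + sse)
print("R2:", r2, "adjusted R2:", r2_adj, "F:", f_calc)
```

Printing SST next to SSR + SSE is a quick check of the identity SST = SSR + SSE, which holds whenever the model includes an intercept.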
F-test for significance of regression

MSR = Mean Square due to regression = $\dfrac{SSR}{p}$

MSE = Mean Square due to error = $\dfrac{SSE}{n-p-1}$

Test statistic = $F_{calc} = \dfrac{MSR}{MSE}$

p-value = F.DIST.RT(Fcalc,df1,df2), where df1 = df of MSR = p and df2 = df of MSE = n - p - 1

t-test for individual significance

For any parameter $\beta_i$:

$H_0$: $\beta_i = 0$
$H_a$: $\beta_i \neq 0$

Test statistic = $t_{calc} = \dfrac{b_i}{S_{b_i}}$

p-value = T.DIST.2T(ABS(tcalc),df), where df = n - p - 1

Multicollinearity

A term used to describe the case when the independent variables in a multiple regression model are correlated.

§15.6 Using the Estimated Regression Equation for Estimation and Prediction

Point estimate of Y for a given set of values $X_1, X_2, \dots, X_p$:

$$\hat{y} = b_0 + b_1 X_1 + b_2 X_2 + \dots + b_p X_p$$

Estimation and prediction in Excel

The prediction interval for an individual Y for a given set of values of $X_1, X_2, \dots, X_p$ is obtained using the special regression output.

Confidence interval estimate of $E(y_p)$:

$$\hat{Y} \pm t_{\alpha/2}\, S_{\hat{Y}_p}$$

where $S_{\hat{Y}_p} = \sqrt{S_{ind}^2 - MSE}$, df = df of MSE, and $S_{ind}$ is taken from the special regression output.

§15.7 Categorical Independent Variables

When independent variables contain categorical data, dummy variables are used to represent the categories contained in the variable. Dummy variables take only two values, one and zero: one means the observation belongs to a given category and zero means it does not.

Example: Johnson Filtration, Inc. (Table 15.5)

Dependent variable: Y = Repair time in hours
Independent variables:
X1 = Number of months since last service
X2 = Type of repair (1 = Mechanical, 0 = Electrical)

Model: $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \varepsilon$
Predictor equation: $\hat{Y} = b_0 + b_1 X_1 + b_2 X_2$

More Complex Categorical Variables

These are categorical variables with more than two categories. Dummy variables (i.e. variables with 0 = not a member of the category, 1 = member of the category) are defined to represent these complex categorical variables. The number of dummy variables required is one less than the number of categories present.
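The "one less than the number of categories" rule can be sketched in a few lines of Python. The helper name `dummy_code` and the sample repair types are hypothetical, chosen only for illustration; the last category listed is treated as the baseline, where every dummy variable is zero.

```python
def dummy_code(values, categories):
    """Return k-1 dummy variables per observation for a k-category variable.

    The last entry of `categories` is the baseline: an observation in that
    category gets 0 on every dummy variable.
    """
    levels = categories[:-1]  # one dummy per non-baseline category
    return [[1 if value == level else 0 for level in levels] for value in values]


# Hypothetical observations of a three-category "type of repair" variable.
repair_types = ["Mechanical", "Electrical", "Electronic", "Mechanical"]
rows = dummy_code(repair_types, ["Mechanical", "Electrical", "Electronic"])

for repair, dummies in zip(repair_types, rows):
    print(repair, dummies)
```

With three categories the function produces two dummy variables per observation, and the baseline category is identified by both being zero.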
Example: Extension to the Johnson Filtration, Inc. example (not in the text)

Dependent variable: Y = Repair time in hours
Independent variables:
X1 = Number of months since last service
X2 = Type of repair (Mechanical, Electrical, Electronic)

Dummy variables for X2:
Number of categories = 3
Number of dummy variables required = 3 - 1 = 2
Let D1 = 1 if Mechanical repair, 0 otherwise
Let D2 = 1 if Electrical repair, 0 otherwise
Note: The case when both D1 and D2 are zero represents Electronic repair.

Model: $Y = \beta_0 + \beta_1 X_1 + \beta_2 D_1 + \beta_3 D_2 + \varepsilon$
Predictor equation: $\hat{Y} = b_0 + b_1 X_1 + b_2 D_1 + b_3 D_2$

§15.8 Residual Analysis

Residual = $Y_i - \hat{Y}_i$

Standardized residual = $\dfrac{Y_i - \hat{Y}_i}{S_{y_i - \hat{y}_i}}$

where $S_{y_i - \hat{y}_i}$ = the standard deviation of residual i = $S\sqrt{1 - h_i}$, with S the standard error of the estimate and $h_i$ the leverage of observation i.

Given the regression model: $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p + \varepsilon$

Model assumptions:

1. The error term $\varepsilon$ is a random variable with a mean or expected value of zero; i.e. $E(\varepsilon) = 0$. This also means $E(y) = \mu_{Y|X} = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p$.
2. The variance of $\varepsilon$, denoted by $\sigma^2$, is the same for all values of the independent variables $X_1, X_2, \dots, X_p$.
3. The values of $\varepsilon$ are independent.
4. The error term $\varepsilon$ is a normally distributed random variable. Therefore, Y is also a normally distributed random variable.

Plots to study:

1. Residuals against each X-variable
2. Standardized residuals against predicted Y values
3. Normal probability plot

Plots #1 and #2: If a random pattern is observed, with about the same variability across the values of X or Ŷ on the horizontal axis, the first three assumptions may be deemed validated.

Plot #2: If 95% of the standardized residuals fall within ±2, the normal probability assumption may be considered valid.

Plot #3: The normal probability plot is another approach for determining the validity of the assumption that the error term has a normal distribution.

Detecting Outliers

If a standardized residual falls outside ±2, the corresponding observation may be considered an outlier.
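The standardized residual and the ±2 outlier rule can be sketched as follows. This is a minimal illustration with NumPy, not the Excel output used in the course. The data are hypothetical (n = 8, p = 1, with the last Y value deliberately set high), and the leverages h_i are taken as the diagonal of the hat matrix, which is the standard definition but is not spelled out in these notes.

```python
import numpy as np

# Hypothetical data (not from the notes): the last observation is unusually large.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1, 12.0, 13.9, 22.0])

n, p = len(x), 1
A = np.column_stack([np.ones(n), x])                # intercept column plus X
b, *_ = np.linalg.lstsq(A, y, rcond=None)           # least squares estimates
residuals = y - A @ b                               # Y_i - Y_hat_i

s = np.sqrt(np.sum(residuals ** 2) / (n - p - 1))   # S = sqrt(MSE)
H = A @ np.linalg.inv(A.T @ A) @ A.T                # hat matrix
h = np.diag(H)                                      # leverage h_i of each observation
std_residuals = residuals / (s * np.sqrt(1 - h))    # residual / (S * sqrt(1 - h_i))

outliers = np.where(np.abs(std_residuals) > 2)[0]   # indices outside the +/-2 band
print("standardized residuals:", np.round(std_residuals, 2))
print("possible outliers at indices:", outliers)
```

Observations flagged this way are only candidates; whether to correct, model differently, or retain them is a separate judgment.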
Studentized residuals are another way to standardize residuals that can detect outliers more accurately.

i. Outliers may represent erroneous data; if so, the data should be corrected.
ii. Outliers may signal a violation of model assumptions; if so, another model should be considered.
iii. Outliers may simply be unusual values that occurred by chance. In this case, they should be retained.

Influential Observations

Leverage or Cook's Distance may be used to detect influential observations.

§15.9 Logistic Regression

When the dependent variable Y can only take 0 or 1 as possible values, we have to use Logistic Regression.

Dependent variable: Y = 0 or 1
Independent variables: Interval and categorical variables (defined as dummy variables)

Examples:

| Problem | Dependent variable | Independent variables |
|---|---|---|
| Marketing | Make a sale (0 = No, 1 = Yes) | Gender, last sales amount, No. of orders last year, etc. |
| Insurance fraud | Fraudulent claim (0 = No, 1 = Yes) | Nature of claim, claim amount, how long insured, etc. |
| Loan approval | Good credit (0 = No, 1 = Yes) | Income, credit score, owns house, etc. |

Define $L = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p$

Then, the Logistic Regression model is defined as

$$E(Y) = \frac{e^{L}}{1 + e^{L}}$$

Note that Y = 0 or 1, so E(Y) lies between 0 and 1 and is defined as $P(Y = 1 \mid X_1, X_2, \dots, X_p) = P_Y$.

Estimation:

$$\text{Let } \hat{Y} = \frac{e^{b_0 + b_1 x_1 + \dots + b_p x_p}}{1 + e^{b_0 + b_1 x_1 + \dots + b_p x_p}}$$

Then, the objective is to find the estimates $b_0, b_1, b_2, \dots, b_p$ such that Ŷ deviates from the actual Y values the least. This is not a linear function. Therefore the Data Analysis Regression command cannot be used. We will generate these estimates using the SAS software package. I will provide the SAS results.

Test of overall significance:

$H_0$: $\beta_1 = \beta_2 = \dots = \beta_p = 0$
$H_a$: One or more of the parameters is not equal to zero.
p-value: from the SAS results

Test of individual significance:

$H_0$: $\beta_i = 0$
$H_a$: $\beta_i \neq 0$
p-value: from the SAS results

Odds and Odds Ratio

$$\text{Odds} = \frac{P_Y}{1 - P_Y}, \quad \text{where } P_Y = \hat{Y}$$

The odds ratio measures the impact on the odds of a 1 unit increase in a given independent variable (i.e. X-variable), with the values of all other independent variables held the same.

$$\text{Odds ratio} = \frac{\text{Odds}_1}{\text{Odds}_0} = e^{b_i}$$

where $\text{Odds}_0$ = Odds with some $X_i = a$, and $\text{Odds}_1$ = Odds with $X_i = a + 1$.
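The relationship between the odds ratio and the coefficient can be checked with a short Python sketch. The coefficient values below are hypothetical placeholders, not SAS output, and the function names are illustrative only. The example raises X1 by one unit while holding X2 fixed and compares the resulting odds ratio with e raised to the coefficient of X1.

```python
import math


def predicted_probability(b, x):
    """Y-hat = e^L / (1 + e^L), where L = b0 + b1*x1 + ... + bp*xp."""
    L = b[0] + sum(bi * xi for bi, xi in zip(b[1:], x))
    return math.exp(L) / (1 + math.exp(L))


def odds(prob):
    """Odds = P / (1 - P)."""
    return prob / (1 - prob)


b = [-2.0, 0.5, 1.2]   # hypothetical estimates b0, b1, b2 (assumed, not from SAS)
x_a = [3.0, 1.0]       # X1 = a, X2 fixed
x_a1 = [4.0, 1.0]      # X1 = a + 1, X2 unchanged

odds_0 = odds(predicted_probability(b, x_a))
odds_1 = odds(predicted_probability(b, x_a1))
odds_ratio = odds_1 / odds_0

print("odds ratio:", odds_ratio)
print("e^b1:", math.exp(b[1]))
```

The two printed values agree up to floating-point rounding, and the result does not depend on the starting value a or on the value at which X2 is held.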