Entropy, Strings, and Partitions of Integers

Jonathan Tannenhauser

ANOMALY

Wellesley College

November 4, 2010

1. Preliminaries

A partition of a positive integer N is a way of writing N as a sum of positive integers (the order of the summands does not matter).

How many partitions does the positive integer N have? Call this number p(N).

Example. p(4) = 5:

• 4
• 3+1
• 2+2
• 2+1+1
• 1+1+1+1
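The definition can be checked by brute force. The sketch below is only an illustration (the names `partitions` and `p` are chosen here, not taken from the talk): it lists every partition with its parts in non-increasing order, so that each partition is produced exactly once.

```python
def partitions(n, max_part=None):
    """Yield each partition of n as a tuple of parts in non-increasing order."""
    if max_part is None or max_part > n:
        max_part = n
    if n == 0:
        yield ()          # the empty sum is the only partition of 0
        return
    for first in range(max_part, 0, -1):
        # Every later part must be <= first, which avoids counting reorderings.
        for rest in partitions(n - first, first):
            yield (first,) + rest


def p(n):
    """Count the partitions of n by enumerating them (fine for small n only)."""
    return sum(1 for _ in partitions(n))


if __name__ == "__main__":
    for parts in partitions(4):
        print("+".join(map(str, parts)))
    print("p(4) =", p(4))
```

Enumeration becomes slow quickly, because p(N) grows very fast; that growth rate is exactly what the rest of the talk estimates.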

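There’s a formula for p(N):

$$p(N) = \frac{1}{\pi\sqrt{2}} \sum_{k=1}^{\infty} A_k(N)\,\sqrt{k}\;\frac{d}{dN}\left[\frac{\sinh\left(\frac{\pi}{k}\sqrt{\frac{2}{3}\left(N-\frac{1}{24}\right)}\right)}{\sqrt{N-\frac{1}{24}}}\right],$$

where

$$A_k(N) = \sum_{0 \le m < k,\ (m,k)=1} e^{\pi i\,(s(m,k) - 2Nm/k)}$$

and

$$s(m,k) = \sum_{j=1}^{k-1} \frac{j}{k}\left(\frac{jm}{k} - \left\lfloor\frac{jm}{k}\right\rfloor - \frac{1}{2}\right).$$

(Rademacher 1937)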

In 1918, Hardy and Ramanujan proved an asymptotic formula for p(N): for large N,

$$p(N) \sim \frac{1}{4N\sqrt{3}} \exp\left(2\pi\sqrt{\frac{N}{6}}\right).$$

Our Goal: To prove the exponential part of this formula (highlighted in red on the original slide), that is,

$$\ln p(N) \sim 2\pi\sqrt{\frac{N}{6}}.$$
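To get a feel for how good the asymptotic formula is, one can compare it with exact values. The following is a minimal sketch, not part of the talk: `partition_counts` computes p(N) by a standard dynamic-programming count (add one allowed part size at a time), and `hardy_ramanujan` evaluates the right-hand side above. Both names are hypothetical.

```python
import math


def partition_counts(n_max):
    """Return [p(0), ..., p(n_max)] by dynamic programming over allowed part sizes."""
    p = [1] + [0] * n_max
    for part in range(1, n_max + 1):
        # After this pass, p[total] counts partitions of total using parts <= part.
        for total in range(part, n_max + 1):
            p[total] += p[total - part]
    return p


def hardy_ramanujan(n):
    """Leading-order estimate exp(2*pi*sqrt(n/6)) / (4*n*sqrt(3))."""
    return math.exp(2 * math.pi * math.sqrt(n / 6)) / (4 * n * math.sqrt(3))


if __name__ == "__main__":
    p = partition_counts(1000)
    for n in (10, 100, 1000):
        # The ratio exact/estimate should creep toward 1 as n grows.
        print(n, p[n], p[n] / hardy_ramanujan(n))
```

The ratio approaches 1 only slowly, which is consistent with the formula being an asymptotic statement rather than an exact one.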

Proposition. (Euler)

$$\sum_{N=0}^{\infty} p(N)\,x^N = \prod_{\ell=1}^{\infty} \frac{1}{1 - x^{\ell}}.$$

Proof. Expand the right-hand side:

$$\underbrace{\left(1 + x^1 + (x^1)^2 + \cdots\right)}_{\ell=1}\ \underbrace{\left(1 + x^2 + (x^2)^2 + \cdots\right)}_{\ell=2}\ \underbrace{\left(1 + x^3 + (x^3)^2 + \cdots\right)}_{\ell=3} \cdots.$$

Each partition of N gives one way of realizing $x^N$ in the product.

For example, the partition 7 = 1 + 1 + 2 + 3 is realized by the blue terms: $(x^1)^2$ from the $\ell=1$ factor, $x^2$ from the $\ell=2$ factor, and $x^3$ from the $\ell=3$ factor, with 1 taken from every later factor.

Therefore, the coefficient of $x^N$ in the product is the number of partitions of N.
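The proof can be mirrored numerically by multiplying out the product as truncated power series. This is a sketch under the assumption that we only care about coefficients up to some degree `n_max` (a name introduced here); factors with $\ell$ larger than `n_max` cannot change those coefficients, so the infinite product can be cut off.

```python
def euler_product_coefficients(n_max):
    """Coefficients of x^0..x^n_max in prod_{l>=1} 1/(1 - x^l), truncated at degree n_max."""
    coeffs = [1] + [0] * n_max  # start from the constant series 1
    for l in range(1, n_max + 1):
        # The geometric series 1 + x^l + x^(2l) + ... has a 1 at every multiple of l.
        geometric = [1 if d % l == 0 else 0 for d in range(n_max + 1)]
        # Multiply the running product by this factor (truncated convolution).
        coeffs = [
            sum(coeffs[i] * geometric[d - i] for i in range(d + 1))
            for d in range(n_max + 1)
        ]
    return coeffs


if __name__ == "__main__":
    print(euler_product_coefficients(10))
```

The resulting list should reproduce p(0), p(1), p(2), and so on, including p(4) = 5 from the earlier example.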

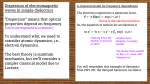

2. The Quantum String

A harmonic oscillator is a system that vibrates back and forth at some frequency ω, such as a mass on a spring.

In classical mechanics, the energy of an oscillator can be any non-negative number.

In quantum mechanics, the only permitted energies (measured relative to the ground state) are of the form

$$E_n = n\hbar\omega,$$

where n is a non-negative integer and $\hbar = 1.05 \times 10^{-34}$ J·s is the reduced Planck constant.

Terminology: An oscillator with energy $E_n$ “is in state n” or “has n excitations at frequency ω.”

A classical string has some fundamental frequency ω, set by the requirement that the ends of the string are fixed.

The string’s allowed frequencies of vibration are positive integer multiples of this fundamental frequency: ω, 2ω, 3ω, ….

So we can think of a string as being made up of an infinite collection of harmonic oscillators, with frequencies ω, 2ω, 3ω, ….

Any pattern of vibration can be decomposed as a linear combination of these basic modes of vibration,

$$y(x) = a_1 \sin \omega x + a_2 \sin 2\omega x + a_3 \sin 3\omega x + \cdots.$$

A quantum string is a collection of oscillators with frequencies $\ell\omega$, where $\ell$ is a positive integer.

Each oscillator has a discrete series of allowed energies

$$E_{\ell,n_\ell} = n_\ell\,\hbar(\ell\omega), \qquad n_\ell = 0, 1, 2, \ldots.$$

Therefore, the state of a quantum string is specified by a sequence ψ of non-negative integers,

$$\psi = (n_1, n_2, n_3, \ldots),$$

where $n_\ell$ is the state number of the oscillator with frequency $\ell\omega$.

The total energy of a string in state ψ is the sum of the energies in each of the individual oscillators,

$$E = n_1\hbar\omega + n_2\hbar(2\omega) + n_3\hbar(3\omega) + \cdots \equiv N\hbar\omega,$$

where

$$N = n_1 + 2n_2 + 3n_3 + \cdots.$$

There is a correspondence between states ψ of the string and partitions of $N = n_1 + 2n_2 + 3n_3 + \cdots$:

The state $\psi = (n_1, n_2, n_3, \ldots)$ corresponds to the partition

$$N = \underbrace{1 + 1 + \cdots + 1}_{n_1\text{ summands}} + \underbrace{2 + 2 + \cdots + 2}_{n_2\text{ summands}} + \underbrace{3 + 3 + \cdots + 3}_{n_3\text{ summands}} + \cdots.$$

So if we can count how many string states there are with energy $N\hbar\omega$, we’ll have solved our partition problem!
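The correspondence is simple enough to write down directly. In this sketch a string state is represented as a tuple of occupation numbers $(n_1, n_2, \ldots)$ truncated after the last non-zero entry; the function names are illustrative choices, not notation from the talk.

```python
from collections import Counter


def state_to_partition(occupations):
    """Map occupation numbers (n_1, n_2, ...) to a partition with n_l copies of l."""
    parts = []
    for l, n_l in enumerate(occupations, start=1):
        parts.extend([l] * n_l)
    return parts


def partition_to_state(parts):
    """Map a partition back to occupation numbers, listed up to the largest part."""
    counts = Counter(parts)
    return tuple(counts.get(l, 0) for l in range(1, max(parts, default=0) + 1))


def level(occupations):
    """N = n_1 + 2*n_2 + 3*n_3 + ..., so that the string energy is N * hbar * omega."""
    return sum(l * n_l for l, n_l in enumerate(occupations, start=1))


if __name__ == "__main__":
    psi = (2, 1, 1)                      # two excitations at omega, one at 2*omega, one at 3*omega
    print(state_to_partition(psi))       # the partition 1 + 1 + 2 + 3
    print(level(psi))                    # its total, N = 7
    print(partition_to_state([1, 1, 2, 3]))
```

Because the two maps undo each other, counting states at level N and counting partitions of N are the same problem.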

3. Statistical Mechanics

In classical physics, if we know the position and velocity of every particle in a system at a given time, then we can calculate the course of that system forever after.

In practice, macroscopic systems contain too many particles to make such a calculation feasible.

But what we really care about is not the microscopic behavior of each of the constituent particles, but the macroscopic features of the system: its average energy, its temperature, its pressure.

These features depend only on the aggregate properties of the particles, so we can use statistical methods to study them.

A. Entropy

Consider an isolated physical system in a particular macroscopic state.

There are many microstates (call their number W) that give rise to this macrostate.

The entropy of the system is defined as

$$S = k \ln W,$$

where $k = 1.38 \times 10^{-23}$ J/K is Boltzmann’s constant.

In writing S = k ln W, we have assumed each microstate is equally probable.

In many systems, the microstates that give rise to the same macrostate are not equally probable.

If the probability of microstate ψ is $p_\psi$, then we define the system’s entropy to be

$$S = -k \sum_{\psi} p_\psi \ln p_\psi.$$

Note. For a system with W equally probable microstates, $p_\psi = 1/W$ for all ψ, so that

$$S = -k \sum_{\psi=1}^{W} \frac{1}{W} \ln\frac{1}{W} = -kW \cdot \frac{1}{W}\,(-\ln W) = k \ln W.$$
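The note can be confirmed numerically. The sketch below works in units where k = 1 by default (an assumption made for convenience) and uses a hypothetical helper `gibbs_entropy`; the skewed distribution is made up purely to show that unequal probabilities give a smaller entropy than the uniform case.

```python
import math


def gibbs_entropy(probabilities, k=1.0):
    """S = -k * sum(p * ln p); states with p == 0 contribute nothing."""
    return -k * sum(p * math.log(p) for p in probabilities if p > 0)


if __name__ == "__main__":
    W = 8
    uniform = [1 / W] * W
    skewed = [0.65] + [0.05] * (W - 1)   # still sums to 1, but far from uniform
    print(gibbs_entropy(uniform), math.log(W))   # these two agree
    print(gibbs_entropy(skewed))                 # strictly smaller
```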

B. The Partition Function

Consider a non-isolated system, at fixed temperature T, that is free to exchange energy with its surroundings, so that its energy is not fixed.

We can achieve these conditions by keeping our system in contact with a heat bath at temperature T.

What is the probability $p_\psi$ of finding our system in microstate ψ with energy $E_\psi$?

Imagine an ensemble consisting of many copies of our system in contact with the heat bath.

Let $a_{\psi_1}$, $a_{\psi_2}$, $a_{\psi_3}$ be the number of copies in state $\psi_1$, $\psi_2$, $\psi_3$ with energy $E_{\psi_1}$, $E_{\psi_2}$, $E_{\psi_3}$, respectively.

Then, since only energy differences can matter (the zero of energy is arbitrary),

$$\frac{a_{\psi_2}}{a_{\psi_1}} = f(E_{\psi_1}, E_{\psi_2}) = f(E_{\psi_2} - E_{\psi_1}).$$

Similarly,

$$\frac{a_{\psi_3}}{a_{\psi_2}} = f(E_{\psi_3} - E_{\psi_2}) \qquad\text{and}\qquad \frac{a_{\psi_3}}{a_{\psi_1}} = f(E_{\psi_3} - E_{\psi_1}).$$

Since

$$\frac{a_{\psi_3}}{a_{\psi_1}} = \frac{a_{\psi_2}}{a_{\psi_1}} \cdot \frac{a_{\psi_3}}{a_{\psi_2}},$$

we have

$$f(E_{\psi_3} - E_{\psi_1}) = f(E_{\psi_2} - E_{\psi_1})\,f(E_{\psi_3} - E_{\psi_2}).$$

The only continuous function for which

$$f(E_{\psi_3} - E_{\psi_1}) = f(E_{\psi_2} - E_{\psi_1})\,f(E_{\psi_3} - E_{\psi_2})$$

holds for all $E_{\psi_i}$ is an exponential function.

Therefore, for each state ψ,

$$f(E_\psi) \propto \exp(-\beta E_\psi),$$

for some constant β, and likewise

$$a_\psi \propto \exp(-\beta E_\psi).$$

But $a_\psi \propto p_\psi$, the probability of finding the system in state ψ.

Therefore, $p_\psi \propto \exp(-\beta E_\psi)$.

Fact. β = 1/kT: that is,

$$p_\psi \propto \exp\left(-\frac{E_\psi}{kT}\right).$$

Intuition. kT is the characteristic energy scale of the system. States with energy ≲ kT are probable; states with energy ≳ kT are improbable.

If we call the constant of proportionality 1/Z, then

$$p_\psi = \frac{1}{Z} \exp\left(-\frac{E_\psi}{kT}\right).$$

This probability distribution is called the Boltzmann distribution.

In the expression

$$p_\psi = \frac{1}{Z} \exp\left(-\frac{E_\psi}{kT}\right),$$

we can determine Z by noting that our system must be in some microstate, so

$$\sum_{\psi} p_\psi = \frac{1}{Z} \sum_{\psi} \exp\left(-\frac{E_\psi}{kT}\right) = 1.$$

So

$$Z = \sum_{\psi} \exp\left(-\frac{E_\psi}{kT}\right).$$

The factor Z is called the partition function. It enables us to compute any macroscopic property of our system.
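For a system with finitely many microstates, the normalization can be carried out explicitly. The sketch below assumes a made-up list of energies and measures them in the same units as kT; `boltzmann` is an illustrative name.

```python
import math


def boltzmann(energies, kT):
    """Return (Z, probabilities) for a finite list of microstate energies."""
    weights = [math.exp(-E / kT) for E in energies]   # unnormalized Boltzmann factors
    Z = sum(weights)                                   # the partition function
    return Z, [w / Z for w in weights]


if __name__ == "__main__":
    Z, probs = boltzmann([0.0, 1.0, 2.0, 3.0], kT=1.0)
    print("Z =", Z)
    print("probabilities:", probs)
    print("total probability:", sum(probs))
```

With kT = 1, each step up in energy by one unit lowers the probability by a factor of e, which matches the intuition that states well above kT are improbable.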

C. Average Energy, Entropy, and Free Energy

We can now compute various physically important macroscopic quantities, starting from

$$p_\psi = \frac{1}{Z} \exp\left(-\frac{E_\psi}{kT}\right) \qquad \text{(probability of microstate } \psi\text{)}$$

and

$$Z = \sum_{\psi} \exp\left(-\frac{E_\psi}{kT}\right) \qquad \text{(the partition function)}.$$

The system’s average energy $\langle E \rangle$ is the expected value of $E_\psi$,

$$\langle E \rangle = \sum_{\psi} p_\psi E_\psi = \frac{1}{Z} \sum_{\psi} \exp\left(-\frac{E_\psi}{kT}\right) E_\psi.$$

Let us compute the entropy

$$S = -k \sum_{\psi} p_\psi \ln p_\psi.$$

Since

$$p_\psi = \frac{1}{Z} \exp\left(-\frac{E_\psi}{kT}\right),$$

we find

$$S = -k \sum_{\psi} p_\psi \left(-\frac{E_\psi}{kT} - \ln Z\right) = \frac{\langle E \rangle}{T} + k \ln Z.$$

Rearranging, we get

$$\langle E \rangle - TS = -kT \ln Z \equiv F,$$

which defines the free energy F.

Fact. $S = -\dfrac{dF}{dT}$.
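Both relations can be spot-checked on a small system. This is a sketch with assumptions: the energy levels are invented, k defaults to 1, and the derivative dF/dT is approximated by a central finite difference with a step `dT` chosen here for illustration.

```python
import math


def thermodynamics(energies, T, k=1.0):
    """Return (F, <E>, S) for a finite set of microstate energies at temperature T."""
    weights = [math.exp(-E / (k * T)) for E in energies]
    Z = sum(weights)
    probs = [w / Z for w in weights]
    F = -k * T * math.log(Z)                               # free energy
    mean_E = sum(p * E for p, E in zip(probs, energies))   # average energy
    S = -k * sum(p * math.log(p) for p in probs)           # Gibbs entropy
    return F, mean_E, S


def entropy_from_free_energy(energies, T, dT=1e-5, k=1.0):
    """Central-difference estimate of -dF/dT."""
    F_plus = thermodynamics(energies, T + dT, k)[0]
    F_minus = thermodynamics(energies, T - dT, k)[0]
    return -(F_plus - F_minus) / (2 * dT)


if __name__ == "__main__":
    levels = [0.0, 1.0, 2.0, 5.0]
    F, mean_E, S = thermodynamics(levels, T=1.5)
    print(mean_E - 1.5 * S, F)                         # <E> - T*S versus F
    print(S, entropy_from_free_energy(levels, T=1.5))  # S versus -dF/dT
```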

4. The Main Calculation

A quantum string in state $\psi = (n_1, n_2, n_3, \ldots)$ with

$n_1$ excitations at frequency ω,
$n_2$ excitations at frequency 2ω,
$n_3$ excitations at frequency 3ω, etc.,

has energy

$$E_\psi = \hbar\omega N,$$

where

$$N = n_1 + 2n_2 + 3n_3 + \cdots = \sum_{\ell=1}^{\infty} \ell\, n_\ell.$$

This string state corresponds to the partition

$$N = \underbrace{1 + 1 + \cdots + 1}_{n_1\text{ summands}} + \underbrace{2 + 2 + \cdots + 2}_{n_2\text{ summands}} + \underbrace{3 + 3 + \cdots + 3}_{n_3\text{ summands}} + \cdots.$$

Therefore, counting the number of partitions of N is equivalent to counting the number of string states with energy $N\hbar\omega$.

But (k times the logarithm of) the number of string states is the entropy.

Strategy: Use statistical mechanics to calculate the entropy of the string, and thereby to count the number of partitions of N.

Everything begins with the partition function

$$\begin{aligned}
Z &= \sum_{\psi} \exp\left(-\frac{E_\psi}{kT}\right) \\
  &= \sum_{n_1=0}^{\infty} \sum_{n_2=0}^{\infty} \cdots \exp\left(-\frac{\hbar\omega}{kT}\,(n_1 + 2n_2 + \cdots)\right) \\
  &= \left[\sum_{n_1=0}^{\infty} \exp\left(-\frac{\hbar\omega}{kT} \cdot n_1\right)\right] \left[\sum_{n_2=0}^{\infty} \exp\left(-\frac{\hbar\omega}{kT} \cdot 2n_2\right)\right] \cdots \\
  &= \prod_{\ell=1}^{\infty} \left[\sum_{n_\ell=0}^{\infty} \exp\left(-\frac{\hbar\omega}{kT} \cdot \ell\, n_\ell\right)\right] \\
  &= \prod_{\ell=1}^{\infty} \left[1 - \exp\left(-\frac{\hbar\omega}{kT} \cdot \ell\right)\right]^{-1}.
\end{aligned}$$

Note. If we define $x = e^{-\hbar\omega/kT}$, then

$$Z = \prod_{\ell=1}^{\infty} \frac{1}{1 - x^{\ell}} = \sum_{N=0}^{\infty} p(N)\,x^N$$

is exactly the generating function for partitions!
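The note says Z can be evaluated either as a product over oscillators or as a sum over energy levels weighted by p(N). A quick numerical sketch of that agreement follows; the value 0.5 for $\hbar\omega/kT$ and the truncation points `l_max` and `n_max` are arbitrary choices made here, picked so that the neglected terms are far below floating-point precision.

```python
import math


def z_product(x, l_max=200):
    """Truncated product prod_{l=1}^{l_max} 1 / (1 - x^l)."""
    return math.prod(1.0 / (1.0 - x**l) for l in range(1, l_max + 1))


def z_series(x, n_max=200):
    """Truncated series sum_{N=0}^{n_max} p(N) x^N, with p(N) from dynamic programming."""
    p = [1] + [0] * n_max
    for part in range(1, n_max + 1):
        for total in range(part, n_max + 1):
            p[total] += p[total - part]
    return sum(p[N] * x**N for N in range(n_max + 1))


if __name__ == "__main__":
    x = math.exp(-0.5)   # x = exp(-hbar*omega / kT) with hbar*omega / kT = 0.5
    print(z_product(x), z_series(x))
```

At lower temperature (smaller x) both truncations converge even faster; at high temperature x approaches 1 and many more terms are needed, which is the regime the talk treats analytically instead.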

The free energy is

$$\begin{aligned}
F &= -kT \ln Z \\
  &= -kT \ln \prod_{\ell=1}^{\infty} \left[1 - \exp\left(-\frac{\hbar\omega}{kT} \cdot \ell\right)\right]^{-1} \\
  &= kT \sum_{\ell=1}^{\infty} \ln\left[1 - \exp\left(-\frac{\hbar\omega\ell}{kT}\right)\right].
\end{aligned}$$

We now take the limit T → ∞, or equivalently, set

$$\frac{\hbar\omega}{kT} \ll 1.$$

We’ll see later that this is tantamount to assuming $N \gg 1$.

In this limit, the summand $\ln\left[1 - \exp\left(-\frac{\hbar\omega\ell}{kT}\right)\right]$ changes slowly with $\ell$.

So we may approximate the sum by an integral,

$$F \approx kT \int_0^{\infty} \ln\left[1 - \exp\left(-\frac{\hbar\omega\ell}{kT}\right)\right] d\ell.$$

Changing variables to $q = \dfrac{\hbar\omega\ell}{kT}$,

$$F \approx \frac{(kT)^2}{\hbar\omega} \int_0^{\infty} \ln\left(1 - e^{-q}\right) dq.$$

Taylor-expanding the logarithm via

$$\ln(1 - y) = -\left(y + \frac{y^2}{2} + \frac{y^3}{3} + \cdots\right),$$

we get

$$\begin{aligned}
F &\approx -\frac{(kT)^2}{\hbar\omega} \int_0^{\infty} \left(e^{-q} + \tfrac{1}{2} e^{-2q} + \tfrac{1}{3} e^{-3q} + \cdots\right) dq \\
  &= -\frac{(kT)^2}{\hbar\omega} \left(1 + \frac{1}{2^2} + \frac{1}{3^2} + \cdots\right) \\
  &= -\frac{(kT)^2}{\hbar\omega} \cdot \frac{\pi^2}{6}.
\end{aligned}$$
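Two steps here lend themselves to a numerical sanity check: replacing the sum over $\ell$ by an integral, and the value $\pi^2/6$ of the series $1 + 1/2^2 + 1/3^2 + \cdots$. The sketch below writes `eps` for the small parameter $\hbar\omega/kT$ (a name introduced here) and compares the exact sum $F/kT$ with the integral estimate $-(\pi^2/6)/\text{eps}$. The cutoff on the sum is an assumption: terms with `eps * l` above it are negligibly small.

```python
import math


def log_sum(eps, cutoff=60.0):
    """sum_{l>=1} ln(1 - exp(-eps*l)), truncated once eps*l exceeds `cutoff`."""
    l_max = int(cutoff / eps)
    # log1p(-y) evaluates ln(1 - y) accurately even when y is tiny.
    return sum(math.log1p(-math.exp(-eps * l)) for l in range(1, l_max + 1))


def integral_estimate(eps):
    """(1/eps) * integral_0^inf ln(1 - e^{-q}) dq = -(pi^2 / 6) / eps."""
    return -(math.pi**2 / 6) / eps


def basel_partial_sum(terms):
    """Partial sum 1 + 1/2^2 + ... + 1/terms^2, which tends to pi^2 / 6."""
    return sum(1.0 / m**2 for m in range(1, terms + 1))


if __name__ == "__main__":
    print(basel_partial_sum(100_000), math.pi**2 / 6)
    for eps in (0.1, 0.01, 0.001):
        # The ratio should move toward 1 as eps shrinks (high temperature).
        print(eps, log_sum(eps) / integral_estimate(eps))
```

The ratio is not exactly 1 at any finite temperature; the integral captures only the leading behavior, which is all the argument needs.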

The entropy is

$$S = -\frac{dF}{dT} = -\frac{d}{dT}\left[-\frac{(kT)^2}{\hbar\omega} \cdot \frac{\pi^2}{6}\right] = \frac{k^2 T}{\hbar\omega} \cdot \frac{\pi^2}{3}.$$

The average energy is

$$\begin{aligned}
\langle E \rangle &= F + TS \\
  &= -\frac{(kT)^2}{\hbar\omega} \cdot \frac{\pi^2}{6} + \frac{(kT)^2}{\hbar\omega} \cdot \frac{\pi^2}{3} \\
  &= \frac{\pi^2}{6} \left(\frac{kT}{\hbar\omega}\right)^2 \cdot \hbar\omega.
\end{aligned}$$

Since $E = N\hbar\omega$, we can now see that the large-T limit is the same as the large-N limit.

In this limit, the string is overwhelmingly likely to be in a state near its average energy, so we may freely interchange E and $\langle E \rangle$.

Solving the energy equation for the temperature gives $kT = \frac{1}{\pi}\sqrt{6E\hbar\omega}$. Written as a function of the energy, the entropy is therefore

$$S(E) = k\pi \sqrt{\frac{2E}{3\hbar\omega}}.$$
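The high-temperature formulas can be compared with the exact oscillator sums. The sketch below is an illustration under stated assumptions: `eps` stands for $\hbar\omega/kT$, the average level $\langle N \rangle = \langle E \rangle / \hbar\omega$ is computed from the standard identity $\langle E \rangle = -\partial \ln Z / \partial \beta$ (which the talk does not use explicitly), giving $\langle N \rangle = \sum_\ell \ell / (e^{\text{eps}\,\ell} - 1)$, and the sums are cut off where the terms are negligible.

```python
import math


def string_thermodynamics(eps, cutoff=60.0):
    """Exact <N> and S/k for the oscillator product, with eps = hbar*omega/(k*T).

    Sums are truncated once eps*l exceeds `cutoff`, where terms are negligible.
    """
    l_max = int(cutoff / eps)
    ln_Z = -sum(math.log1p(-math.exp(-eps * l)) for l in range(1, l_max + 1))
    mean_N = sum(l / math.expm1(eps * l) for l in range(1, l_max + 1))
    entropy_over_k = eps * mean_N + ln_Z   # S/k = <E>/(kT) + ln Z
    return mean_N, entropy_over_k


def asymptotic_entropy_over_k(N):
    """S/k = pi * sqrt(2E / (3*hbar*omega)) with E = N*hbar*omega."""
    return math.pi * math.sqrt(2 * N / 3)


if __name__ == "__main__":
    for eps in (0.1, 0.01):
        mean_N, s_exact = string_thermodynamics(eps)
        print(eps, mean_N, s_exact, asymptotic_entropy_over_k(mean_N))
```

The two entropy values should agree more closely as `eps` decreases, that is, as the temperature and the typical level N grow.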

Substituting $E = N\hbar\omega$ into $S(E) = k\pi \sqrt{\dfrac{2E}{3\hbar\omega}}$, we get

$$S = k \cdot 2\pi \sqrt{\frac{N}{6}}.$$

On the other hand, S = k ln W, where W is the number of states of the string;

and W = p(N);

so

$$k \ln p(N) = k \cdot 2\pi \sqrt{\frac{N}{6}},$$

or

$$\ln p(N) = 2\pi \sqrt{\frac{N}{6}},$$

Q.E.D.!
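As a closing check, the final relation can be compared with exact partition counts. This is a sketch, not part of the argument: `partition_counts` is an illustrative dynamic-programming helper, and the relation is expected to hold only to leading order for large N, so the ratio printed below should rise toward 1 slowly rather than equal 1.

```python
import math


def partition_counts(n_max):
    """Return [p(0), ..., p(n_max)] by dynamic programming over allowed part sizes."""
    p = [1] + [0] * n_max
    for part in range(1, n_max + 1):
        for total in range(part, n_max + 1):
            p[total] += p[total - part]
    return p


def log_ratio(p, n):
    """ln p(n) divided by the leading-order prediction 2*pi*sqrt(n/6)."""
    return math.log(p[n]) / (2 * math.pi * math.sqrt(n / 6))


if __name__ == "__main__":
    p = partition_counts(2000)
    for n in (10, 100, 1000, 2000):
        print(n, log_ratio(p, n))
```

The remaining gap comes from the factor $1/(4N\sqrt{3})$ in the Hardy and Ramanujan formula, whose logarithm grows much more slowly than $\sqrt{N}$ and is not captured by this leading-order calculation.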