* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

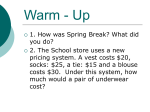

Download 1 Introduction to Optics and Photophysics - Wiley-VCH

1 Introduction to Optics and Photophysics

Rainer Heintzmann

In this chapter, we first introduce the properties of light as a wave by discussing interference, which explains the laws of refraction, reflection, and diffraction. We then discuss light in the form of rays, which leads to the laws of lenses and the ray diagrams of optical systems. Finally, the concept of light as photons is addressed, including the statistical properties of light and the properties of fluorescence.

For a long time, it was believed that light travels in straight lines, which are called rays. With this theory, it is easy to explain brightness and darkness, and effects such as shadows or even the fuzzy boundary of shadows due to the extent of the sun in the sky. In the seventeenth century, it was discovered that light can sometimes "bend" around sharp edges by a phenomenon called diffraction. To explain the effect of diffraction, light has to be described as a wave. In the beginning, this wave theory of light, based on the work of Christiaan Huygens (1629–1695) and expanded in 1818 by Augustin Jean Fresnel (1788–1827), was not accepted. Poisson, a judge evaluating Fresnel's work in a science competition, tried to ridicule it by showing that Fresnel's theory would predict a bright spot, now called Poisson's spot, in the middle of the round dark shadow behind a disk-shaped object, which he obviously considered wrong. Another judge, Arago, then showed that this spot is indeed seen when measurements are done carefully enough. This was a phenomenal success of the wave description of light.

In addition, there was the corpuscular theory of light (Pierre Gassendi, born 1592; Sir Isaac Newton, born 1642), which described light as particles. With Einstein's explanation of the photoelectric effect, the existence of these particles, called photons, could not be denied. The effect clearly showed that a minimum energy per particle is required, as opposed to a minimum strength of an electric field.
Such photons can even be "seen" directly as individual spots when a very dim light distribution is imaged using a camera with a very sensitive film or a modern image-intensified or emCCD camera. Since then, the description of light has maintained this dual (wave and particle) nature. When light interacts with matter, one often has to consider its quantum (particle) nature. However, the rules of propagation of these particles are described by Maxwell's wave equations of electrodynamics, identifying oscillating electric fields as the waves responsible for what we call light. The wave-particle duality of light still surprises with interesting phenomena and is an active field of research (known as quantum optics). It is expected that exploiting related phenomena will form the basis of future devices such as quantum computers, quantum teleportation, and even microscopes based on quantum effects.

To understand the behavior of light, the concepts of waves are often required. Therefore, we start by introducing an effect that is observed only when the experiment is designed carefully: interference. For understanding the basic concepts as detailed below, only a minimum amount of mathematics is required. Previous knowledge of complex numbers is not required. However, for quantitative calculations, the concepts of complex numbers will be needed.

Fluorescence Microscopy: From Principles to Biological Applications, First Edition. Edited by Ulrich Kubitscheck. © 2013 Wiley-VCH Verlag GmbH & Co. KGaA. Published 2013 by Wiley-VCH Verlag GmbH & Co. KGaA.

1.1 Interference: Light as a Wave

Suppose that we perform the following experiment (Figure 1.1). We place a light source on the input side of the instrument illustrated in Figure 1.1. This instrument consists of only two 50% beam splitters and two ordinary fully reflecting mirrors.
However, if these are very carefully adjusted such that the path lengths along the two different paths are exactly equal, something strange happens: all the light entering the device will leave only one of the exit ports. In the second output port, we will observe absolute darkness. This is very strange indeed, as naively one would expect 2 × 0.5 × 0.5 = 50% of the light emerging on either side. Even more surprising is what happens if one inserts a hand into one of the two beams inside this device. Now, obviously the light will be absorbed by our hand, but nevertheless, some light (25%) will suddenly leave the top output of the device, where we previously had only darkness. As we would expect, another 25% of the light will leave the second output.

The explanation of this effect of interference lies in the wave nature of light: brightness and brightness can indeed yield darkness (called destructive interference), if the two superimposed electromagnetic waves are always oscillating in opposite directions, that is, they have opposite phases (Figure 1.1b). The frequency ν is given as the reciprocal of the time between two successive maxima of this oscillation. The amplitude of a wave is given by how much the electric field oscillates while it is passing by. The square of this amplitude is what we perceive as irradiance or brightness (sometimes also referred to, in a slightly inaccurate way, as intensity) of the light. If one of the two waves is then blocked, this destructive cancellation will cease and we will get the 25% brightness, as ordinarily expected by splitting 50% of the light again in two.
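
The percentages quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the original text: it assumes idealized, lossless 50% beam splitters whose reflected amplitude is shifted by a quarter period relative to the transmitted one, ignores the two mirrors (they act equally on both arms), and uses complex amplitudes of the kind introduced in Box 1.1. The function name `mach_zehnder` and its parameters are hypothetical.

```python
import cmath
import math


def mach_zehnder(delay_in_wavelengths=0.0, block_arm=False):
    """Return the power fractions (dark_port, bright_port) of an idealized interferometer.

    Assumption: symmetric lossless 50% beam splitters with transmitted
    amplitude 1/sqrt(2) and reflected amplitude i/sqrt(2).
    """
    t = 1 / math.sqrt(2)    # transmitted amplitude factor
    r = 1j / math.sqrt(2)   # reflected amplitude factor (quarter-period shift)

    # First beam splitter: a unit input amplitude is split into two arms.
    arm_a = t
    arm_b = r

    # Optional extra delay in arm B, given as a fraction of a wavelength.
    arm_b *= cmath.exp(2j * math.pi * delay_in_wavelengths)

    # A hand inserted into arm A absorbs that beam completely.
    if block_arm:
        arm_a = 0.0

    # Second beam splitter: each output is the sum of one transmitted
    # and one reflected contribution.
    dark_port = t * arm_a + r * arm_b
    bright_port = r * arm_a + t * arm_b

    # Brightness is the squared magnitude of the amplitude.
    return abs(dark_port) ** 2, abs(bright_port) ** 2


for label, kwargs in [
    ("equal paths", {}),
    ("one arm blocked", {"block_arm": True}),
    ("half-wavelength delay", {"delay_in_wavelengths": 0.5}),
]:
    dark, bright = mach_zehnder(**kwargs)
    print(f"{label:>22}: port 1 = {dark:.2f}, port 2 = {bright:.2f}")
```

Under these assumptions, equal paths send all light to one port, blocking an arm gives 25% at each port, and a half-wavelength delay swaps the dark and bright ports.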
Indeed, if we remove our hand and just delay one of the two waves by only half a wavelength (e.g., by introducing a small amount of gas in only one of the two beam paths), the relative phase of the waves changes, which can invert the situation: we observe constructive interference (at the top output) where we previously had destructive interference, and darkness where previously there was light.

Figure 1.1 Interference. (a) In the interferometer of Mach–Zehnder type, the beam is split equally in two paths by a beam splitter and after reflection rejoined with a second beam splitter. If the optical path lengths of the split beams are adjusted to be exactly equal, constructive interference results in the right path, whereas the light in the other path cancels by destructive interference. (b) Destructive interference. If the electric fields of two interfering light waves (top and bottom) are always of opposite value (π phase shift), the waves cancel and the result is a zero value for the electric field and, thus, also zero intensity. This is termed destructive interference.

The aforementioned device is called an interferometer of Mach–Zehnder type. Such interferometers are extremely sensitive measurement devices capable of detecting a sub-nanometer relative delay of light waves passing the two arms of the interferometer, for example, caused by a very small concentration of gas in one arm.

Sound is also a wave, but in this case, instead of the electromagnetic field, it is the air pressure that oscillates at a much slower rate. In the case of light, it is the electric field oscillating at a very high frequency.
The electric field is also responsible for hair clinging to a synthetic jumper that has been electrically charged by friction, or for the charge built up by walking on the wrong kind of floor with the wrong kind of socks and shoes. Such an electric field has a direction not only when it is static, as in the case of the jumper, but also when it is dynamic, as in the case of light. In the latter case, the direction corresponds to the direction of polarization of the light, which is discussed later.

Other waves, such as water waves, are commonly observed in nature. Although these are only a two-dimensional analogy to the electromagnetic wave, their crests (the top of each such wave) are a good visualization of what is referred to as a phase front. Thus, phase fronts in three-dimensional electromagnetic waves refer to the surfaces of equal phase (e.g., a local maximum of the electric field). Similar to what is seen in water waves, such phase fronts travel along with the wave at the speed of light. The waves we observe close to the shore can serve as a two-dimensional analogy to what is called a plane wave, whereas the waves seen in a pond when we throw a stone into the water are a two-dimensional analogy to a spherical wave.

When discussing the properties of light, one often omits the details about the electric field being a vectorial quantity and rather talks about the scalar "amplitude" of the wave. This is a sloppy but very convenient way of describing light when polarization effects do not matter for the experiment under consideration. Light is called a transverse wave because, in vacuum and in homogeneous isotropic media, the electric field is always oriented perpendicular to the local direction of propagation of the light. However, this is merely a crude analogy to waves in media, such as sound, where the particles of the medium actually move.
In the case of light, no movement of matter is necessary for its description, as the oscillating quantity is the electric field, which can even propagate in vacuum.

The frequency ν (measured in hertz, i.e., oscillations per second, see also Figure 1.1b) at which the electric field vibrates defines the color of the light. Blue light has a higher frequency and energy hν per photon than green, yellow, red, and infrared light. Here, h is Planck's constant and ν is the frequency of the light. Because the speed of light in vacuum does not depend on its color, the vacuum wavelength λ is short for blue light (∼450 nm) and gets longer for green (∼520 nm), yellow (∼580 nm), red (∼630 nm), and infrared (∼800 nm), respectively. In addition, note that the same wave theory of light governs all wavelength ranges of the electromagnetic spectrum, from radio waves through microwaves, terahertz waves, infrared, visible, ultraviolet, vacuum-ultraviolet, and soft and hard X-rays to gamma rays.

Box 1.1 Phasor Diagrams and the Complex Wave

This box mathematically describes the concept of waves. For this, the knowledge of complex numbers is required. However, a large part of the main text does not require this understanding. For a deeper understanding of interference, it is useful to take a look at the phasor diagrams. In such a diagram, the amplitude value is pictured as a vector in the complex plane. This complex amplitude is called a phasor. Even though the electric field strength is just the real part of the phasor, the complex-valued phasor concept makes all the calculations a lot simpler.
The rapidly oscillating wave corresponds to the phasor rotating at a constant speed around the center of the complex plane (see Figure 1.2 for a depiction of a rotating complex amplitude vector and its real part being the electric field over time). Mathematically, this can be written as

$$A(t) = A_0 e^{i\omega t}$$

with the angular frequency ω = 2πν and the complex-valued amplitude A0 = |A0| exp(iϕ), which depends on the strength |A0| and the phase ϕ of the wave at time 0. The phase or phase angle of a wave corresponds to the argument of the exponential function, in our case ϕ + ωt, whereas the strength |A0| is often simply referred to as amplitude, which is ambiguous given the complex amplitude above.

Figure 1.2 The phasor in the complex plane. An electro-optical wave can be seen as the real part of a complex-valued wave A0 exp(iωt) (lower part), that is, Re[A0 exp(iωt)] = |A0| cos(ωt + ϕ). This wave has the complex-valued phasor A0, which is depicted in the complex plane. It is characterized by its length |A0| and its phase ϕ.

To describe what happens if two waves interfere, we simply add the two corresponding complex-valued phasors. Such a complex-valued addition means to simply attach one of the vectors to the tip of the other to obtain the resulting amplitude (Figure 1.3).

Figure 1.3 Addition of phasors. The addition of two phasors A1 (phasor of wave 1) and A2 (phasor of wave 2) is depicted in the complex plane; A1 + A2 represents wave 1 interfering with wave 2. As can be seen, the phasors (each being described by a phase angle and a strength) add like vectors.
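
Phasor addition maps directly onto complex arithmetic. The sketch below is an illustration only, with hypothetical helper names `phasor` and `interfere`; it builds two phasors from a strength and a phase angle, adds them, and reports the squared length of the sum, which the main text identifies with the perceived brightness.

```python
import cmath
import math


def phasor(strength, phase_deg):
    """Build a complex amplitude from its strength |A0| and phase angle in degrees."""
    return cmath.rect(strength, math.radians(phase_deg))


def interfere(a1, a2):
    """Add two phasors and return (resulting phasor, squared length of the result)."""
    total = a1 + a2
    return total, abs(total) ** 2


wave_1 = phasor(1.0, 0.0)
for phase_deg in (0.0, 90.0, 180.0):
    wave_2 = phasor(1.0, phase_deg)
    total, brightness = interfere(wave_1, wave_2)
    print(f"phase difference {phase_deg:5.1f} deg: "
          f"|A1 + A2| = {abs(total):.3f}, brightness = {brightness:.3f}")
```

Two equal waves in phase give four times the brightness of a single wave, whereas opposite phases give (numerically almost exactly) zero, which is the destructive interference of Figure 1.1b.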
As light oscillates extremely fast, we have no way of measuring its electric field directly, but we do have means to measure its "intensity," which relates to the energy in the wave and is proportional to the square of the absolute amplitude (square of the length of the phasor). This absolute square of the amplitude can be obtained by adding the square of its real part to the square of its imaginary part, which is identical to writing (for a complex-valued amplitude A)

$$I = A A^{*}$$

with the asterisk denoting the complex conjugate.

Waves usually propagate through space. If we assume that a wave oscillates at a well-defined frequency and travels with a speed c/n (the vacuum speed of light c and the refractive index of the medium n), we can write such a traveling plane wave as

$$A(x, t) = A_0 e^{-i(kx - \omega t)}$$

with $k = 2\pi n/\lambda = n\omega/c$ being the wavenumber, which is related to the spatial frequency $\tilde{k}$ as $\tilde{k} = k/2\pi$. Note that both k and x are vectorial quantities if working in two or three dimensions, in which case their product refers to the scalar product of the vectors. The spatial frequency counts the number of amplitude maxima per meter in the medium in which the wave is traveling (Figure 1.2). The spatial position x denotes the coordinate at which we are observing.

In many cases, we deal with linear optics, where all amplitudes will have the time dependency exp(iωt) as given above. Therefore, this time-dependent term is often omitted and one concentrates only on the spatial dependency while keeping in mind that each phasor always rotates with time.

1.2 Two Effects of Interference: Diffraction and Refraction

We now know the important effect of constructive and destructive interference of light, explained by its wave nature. As discussed below, the wave nature of light is capable of explaining two aspects of light: diffraction and refraction.
Diffraction is a phenomenon that is seen when light interacts with a very fine (often periodic) structure such as a compact disk (CD). The light emerges under different angles depending on its wavelength, giving rise to the colorful experience when looking at light diffracted from the surface of a CD. On the other hand, refraction refers to the effect where light rays seem to change their direction when the light passes from one medium to another. This is, for example, seen when trying to look at a scene through a glass full of water. Even though these two effects may look very different at first glance, both are ultimately based on interference, as discussed here.

Diffraction is most prominent when light illuminates structures (such as a grating) of a feature size (grating constant) similar to the wavelength of light. In contrast, refraction (e.g., the bending of light rays caused by a lens) dominates when the different media (such as air and glass) have constituents (molecules) that are much smaller than the wavelength of light (homogeneous media), while these homogeneous areas are much larger in feature size (i.e., the size of a lens) than the wavelength.

To describe diffraction, it is useful to first consider the light as emitted by a pointlike source. Let us look at an idealized source, which is infinitely small and emits only a single color of light. This source would emit a spherically diverging wave. In vacuum, the energy flows outward through any surface around the source without being absorbed; thus, spherical shells at different radii must have the same integrated intensity. Because the surface area of these shells increases with the square of the distance to the source, the light intensity decreases with the inverse square of the distance such that energy is conserved.
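
The energy-conservation argument can be made concrete with a small calculation. This is a sketch under the stated idealization (a point source radiating equally in all directions in vacuum); the function name `irradiance` and the numerical values are hypothetical.

```python
import math


def irradiance(power_watts, distance_m):
    """Power per unit area on a spherical shell of the given radius around a point source."""
    shell_area = 4 * math.pi * distance_m ** 2
    return power_watts / shell_area


source_power = 1.0  # assumed total emitted power in watts
for radius in (1.0, 2.0, 4.0):
    value = irradiance(source_power, radius)
    # Multiplying back by the shell area recovers the same total power at every radius.
    total = value * 4 * math.pi * radius ** 2
    print(f"r = {radius:.0f} m: irradiance = {value:.5f} W/m^2, integrated = {total:.3f} W")
```

Doubling the distance reduces the irradiance to one quarter, while the power integrated over each shell stays the same.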
To describe diffraction, Christiaan Huygens had an ingenious idea: to find out how a wave will continue on its path, we determine it at a certain border surface and can then place virtual point emitters everywhere on this surface, letting the light of these emitters interfere. The resulting interference pattern will reconstitute the original wave beyond that surface. This "Huygens' principle" can nicely explain that parallel waves stay parallel, as we find constructive interference only in the direction following the propagation of the wave. Strictly speaking, one would also find a backward-propagating wave. However, when Huygens' idea is formulated in a more rigorous way, the backward-propagating wave is avoided.

Huygens' principle is very useful when trying to predict what happens when a wave hits a structure with a feature size comparable to the wavelength of light, for example, a slit aperture or a diffraction grating. In Figure 1.4, we consider the example of diffraction at a grating with the lowest repetition distance D, which is designated as the grating constant. As is seen from the figure, circular waves corresponding to Huygens' wavelets originate at each aperture and join to form new wave fronts, thus forming plane waves oriented in various directions. These directions of constructive interference need to fulfill the following condition (Figure 1.4):

$$\sin\alpha = N \frac{\lambda}{D}$$

with the integer N (the diffraction order) denoting the number of wavelengths λ by which the paths from neighboring apertures differ, so that they have the same phase (constructive interference) at angle α with respect to the incident direction. Note that the angle α of the diffracted waves depends on the wavelength and thus on the color of light.
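
The grating condition above is easy to evaluate numerically. The following sketch lists the angles of all propagating orders for normal incidence; the function name `diffraction_angles` is hypothetical, and the grating constant of 1600 nm is an assumed value, roughly the track spacing of a CD, chosen only for illustration.

```python
import math


def diffraction_angles(wavelength_nm, grating_constant_nm):
    """Return {order N: angle alpha in degrees} for all orders with sin(alpha) <= 1."""
    angles = {}
    order = 0
    while order * wavelength_nm / grating_constant_nm <= 1.0:
        sin_alpha = order * wavelength_nm / grating_constant_nm
        angles[order] = math.degrees(math.asin(sin_alpha))
        order += 1
    return angles


grating_constant = 1600.0  # nm, assumed value for illustration
for color, wavelength in (("blue", 450.0), ("red", 630.0)):
    angles = diffraction_angles(wavelength, grating_constant)
    listing = ", ".join(f"N={n}: {a:.1f} deg" for n, a in angles.items())
    print(f"{color} ({wavelength:.0f} nm): {listing}")
```

For these assumed values, blue light has orders 0 to 3 and red light orders 0 to 2, and each nonzero order appears at a larger angle for red than for blue, which is why a grating separates colors.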
In addition, note that the crests of the waves form connected lines (indicated as dashed-dotted lines), which are called phase fronts or wave fronts, whereas the dashed lines perpendicular to these phase fronts can be thought of as corresponding to the light rays of geometrical optics.

Figure 1.4 Diffraction at a grating under normal incidence. The directions of constructive interference (where maxima and minima of one wave interfere with the respective maxima and minima of the second wave) are shown for several diffraction orders (N = 0, zero order; N = 1, first order; N = 2, second order). The diffraction equation D sin α = Nλ, which is derived from the geometrical construction of Huygens' wavelets, is shown.

A CD is a good example of such a diffractive structure. White light is a mixture of light of many wavelengths. Thus, illuminating the CD with a white light source from a sufficient distance will cause only certain colors to be diffracted from certain places on the disk into our eyes. This leads to the observation of the beautiful rainbow-like color effect when looking at it.

Huygens' idea can be slightly modified to explain what happens when light passes through a homogeneous medium. Here, the virtual emitters are replaced with the existing molecules inside a medium. However, contrary to Huygens' virtual wavelets, in this case, each emission from each molecule is phase shifted with respect to the incoming wave (Figure 1.5). This phase shift depends on the exact nature of the material. It stems from electrons being wiggled by the oscillating electric field. The binding to the atomic nuclei will cause a time delay in this wiggling. These wiggling electrons constitute an accelerated charge that will radiate an electromagnetic wave.
Even though each scattering molecule generates a spherical wave, the superposition of all the scattered waves from atoms at random positions will interfere fully constructively only in the forward direction. Thus, each very thin layer of molecules generates another parallel wave, which differs in phase from the original wave. The sum of the original sinusoidal wave and the interfering wave of scattered light will result in a forward-propagating parallel wave with sinusoidal modulation, however, lagging slightly in phase (Figure 1.5).

Figure 1.5 The refractive index of a homogeneous material seen as the superposition of waves. Scattering with a (frequency-dependent) phase shift at the individual molecules leads to a phase that constantly accumulates over distance. This continuous phase delay is summarized by a change in wavelength λmedium inside a medium compared to the wavelength in vacuum λvacuum as given by λmedium = λvacuum/n with the refractive index n.

In a dense medium, this phase delay is continuously happening with every new layer of material throughout the medium, giving the impression that the wave has "slowed down" in this medium. It can also be seen as an effectively modified wavelength λmedium inside the medium. This change in the wavelength is conveniently described by the refractive index (n):

$$\lambda_{\mathrm{medium}} = \frac{\lambda_{\mathrm{vacuum}}}{n}$$

with the wavelength in vacuum λvacuum. This is a convenient way of summarizing the effect a homogeneous medium has on the light traveling through it. Note that the temporal frequency of vibration of the electric field does not depend on the medium.
However, the change in wavelength may itself depend on the frequency of the light, n(ν) (and thus on the color or wavelength of the light), an effect that is called dispersion.

Now that we understand how the interference of the waves scattered by the molecules of the medium can explain the change in effective wavelength, we can use this concept to explain the effect of refraction, the apparent bending of light rays at the interface between different media.

Figure 1.6 Refraction as an effect of interference. Incoming wave fronts in medium 1 (air, n1 = 1) and refracted wave fronts in medium 2 (glass, n2 = 1.5) meet at the interface, where a distance x along the interface satisfies x sin α1 = λ1 and x sin α2 = λ2. This figure derives Snell's law of refraction from the continuity of the electric field across the border between the two different materials.

We thus analyze what happens when light travels at an angle across an interface between two media of different refractive index (Figure 1.6). To this aim, we consider a plane wave. As the wave directly at the interface is given simply by the incident wave, we need to ensure that the first layer of molecules still sees this wave. In other words, the phase has to be continuous across that boundary. It must not have any jumps. However, as we require a change in the wavelength from λ1 = λvacuum/n1 in the first medium to λ2 = λvacuum/n2 in the second medium, the angle of the phase front has to change. As seen in Figure 1.6, we enforce continuity by introducing a distance x along the interface corresponding to one projected wavelength, at both ends of which we must have a maximum of a wave. With x sin α1 = λ1 and x sin α2 = λ2, this yields

$$\frac{\sin\alpha_1}{\sin\alpha_2} = \frac{\lambda_1}{\lambda_2} = \frac{n_2}{n_1}$$

where α1 and α2 are the angles between the direction of propagation of the plane wave and the line orthogonal to the medium's surface, called the surface normal. This is Snell's famous law of refraction, which forms the foundation of geometrical optics.
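
As a numerical illustration of the two relations just derived, the sketch below computes the wavelength inside a medium and the refracted angle from Snell's law. The function names are hypothetical and the refractive indices are the example values of Figure 1.6. Returning `None` when no refracted angle exists is a design choice of this sketch; that case corresponds to total internal reflection.

```python
import math


def wavelength_in_medium(vacuum_wavelength_nm, n):
    """Wavelength inside a medium of refractive index n."""
    return vacuum_wavelength_nm / n


def refracted_angle(angle_in_deg, n1, n2):
    """Angle to the surface normal in medium 2, from n1 sin(a1) = n2 sin(a2).

    Returns None if no refracted wave exists (total internal reflection).
    """
    sin_out = n1 * math.sin(math.radians(angle_in_deg)) / n2
    if abs(sin_out) > 1.0:
        return None
    return math.degrees(math.asin(sin_out))


n_air, n_glass = 1.0, 1.5  # example values from Figure 1.6
print(f"520 nm light in glass: {wavelength_in_medium(520.0, n_glass):.1f} nm")

into_glass = refracted_angle(30.0, n_air, n_glass)
print(f"air to glass, 30.0 deg incidence: {into_glass:.1f} deg")

back_to_air = refracted_angle(into_glass, n_glass, n_air)
print(f"glass to air, {into_glass:.1f} deg incidence: {back_to_air:.1f} deg")

print("glass to air, 60.0 deg incidence:", refracted_angle(60.0, n_glass, n_air))
```

Entering the glass at 30° gives a refracted angle of about 19.5°, and reversing the path recovers the original 30°.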
We can now move to the concept of light rays as commonly drawn in geometrical optics. Such a ray represents a parallel wave with a size small enough to look like a ray, but large enough to not show substantial broadening by diffraction. Practically, the beam emitted by a laser pointer can serve as a good example. As our light rays are thought of as being very thin, we can now apply Snell's law to different parts of a lens to explain what happens to the light when it hits such a lens. Note that because of Snell's law, the ray bends toward the surface normal at the transition from an optically thinner medium (such as air, n1 = 1) to an optically denser medium (such as glass, n2 = 1.5, n2 > n1), whereas it is directed further away from the surface normal at dense-to-thin transitions such as the glass–air interface.

Box 1.2 Polarization

As described above, light in a homogeneous isotropic medium is a transverse electromagnetic wave. This means that the vector of the electric field is perpendicular to the direction of propagation. Above, we simplified this model by introducing a scalar amplitude, that is, we ignored the direction of the electric field. In many cases, we do not care much about this direction; however, there are situations where the direction of the electric field is important. One example is the excitation of a single fluorescent dye that is fixed in orientation, for example, being integrated rigidly into the cell membrane and perpendicular to the membrane. Such a fluorescent dye has a preferred orientation of the oscillating electric field of the light wave with which it can best be excited; this orientation is given by its transition dipole moment. If the electric field oscillates perpendicularly to this direction, the molecule cannot be excited. When referring to the way the electric field in a light wave oscillates, one specifies its mode of polarization.
For a wave of a single color, every vector component of the electric field, Ex, Ey, and Ez, oscillates at the same frequency, but each component can have an independent phase and magnitude. This means that we can use the same concept of the complex-valued amplitude to describe each field vector component. There are a variety of possible configurations, but two of them are noteworthy: linear and circular polarization.

If we consider a homogeneous isotropic medium, the electric field vector is always perpendicular to the direction of propagation. Therefore, we can orient the coordinate system at every spatial position such that the field vector has no Ez component, that is, the polarization lies in the XY-plane. If the Ex and Ey components now oscillate simultaneously with no phase difference, we obtain linear polarization (Figure 1.7). This means that there is a specific fixed orientation in space along which the electric field vector points. It is worth noting that directions that differ by 180° are actually identical directions except for a global phase shift of the oscillation by π.

Figure 1.7 Various modes of polarization. Shown here are the paths that the electric field vector describes in the plane of Ex and Ey. (a,b) Two different orientations of linearly polarized light: (a) linear along X and (b) linear at 45° to X. (c) An example of left circular polarization. (d) An example of elliptically polarized light. Obviously, other directions and modes of polarization (e.g., right circular) are also possible.

In contrast, let us consider the other extreme in which the Ex and Ey components have equal magnitudes and are 90° out of phase, that is, when Ex has a maximum, Ey is 0, and vice versa. In this case, the tip of the electric field vector describes a circle, and thus, this is termed circular polarization. Intermediate phase angles (not 0°, 90°, 180°, or 270°) lead to what is called elliptic polarization.
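
The classification just described can be expressed in terms of the complex amplitudes of the two field components. The sketch below is illustrative only: the function name `polarization_state` and the tolerance are hypothetical, and it makes explicit the standard requirement that circular polarization needs equal magnitudes of Ex and Ey in addition to a 90° phase difference. It does not distinguish left from right handedness, which depends on the sign convention used.

```python
import cmath
import math


def polarization_state(ex, ey, tol=1e-9):
    """Classify the polarization given the complex amplitudes of Ex and Ey."""
    if abs(ex) < tol or abs(ey) < tol:
        return "linear"                      # field oscillates along a single axis
    delta = cmath.phase(ey / ex)             # relative phase of Ey with respect to Ex
    if abs(math.sin(delta)) < tol:
        return "linear"                      # 0 or 180 degrees out of phase
    if abs(math.cos(delta)) < tol and abs(abs(ex) - abs(ey)) < tol:
        return "circular"                    # 90 or 270 degrees, equal magnitudes
    return "elliptical"


examples = {
    "Ex only": (1, 0),
    "in phase": (1, 1),
    "180 deg out of phase": (1, -1),
    "90 deg, equal magnitude": (1, 1j),
    "90 deg, unequal magnitude": (1, 0.5j),
    "45 deg": (1, cmath.exp(1j * math.pi / 4)),
}
for label, (ex, ey) in examples.items():
    print(f"{label:>26}: {polarization_state(ex, ey)}")
```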
Note that we again obtain linear polarization if the field components are 180° out of phase (Figure 1.7).

We now discuss a number of effects where polarization of light plays a significant role. Materials such as glass will reflect a certain amount of light at their surface, even though they are basically 100% transparent once the light is inside the material. At perpendicular incidence, about 4% of the incident light gets reflected, while at other angles, this amount varies. More noticeably, under oblique angles, the amount and the phase of the reflected light strongly depend on the polarization of the input light. This effect is summarized by the Fresnel reflection coefficients (Figure 1.8). There is a specific angle at which the component of the electric field that is parallel (p-component) to the plane in which the incident and reflected beams lie is entirely transmitted. This angle is called the Brewster angle (Figure 1.8a). Thus, the light reflected under this angle is 100% polarized in the direction perpendicular to the plane of the beams (s-polarization, from the German term senkrecht for perpendicular). In addition, note that the Fresnel coefficients for the glass–air interface predict a range of total internal reflection (Figure 1.8b).

[Figure 1.8, two panels plotting the reflection coefficient (%) against the angle of incidence for perpendicular and parallel polarization. (a) Air–glass interface, n1 = 1.0, n2 = 1.52, with the Brewster angle marked. (b) Glass–air interface, n1 = 1.52, n2 = 1.0, with the critical angle and the range of total internal reflection marked.]

Figure 1.8 An example of the reflectivity of an air–glass interface (a) as described by the Fresnel coefficients dependent on the direction of polarization.
Parallel means that the polarization vector is in the plane that the incident and the reflected beam would span, whereas perpendicular means that the polarization vector is perpendicular to this plane. The incident angle of 0° means the light is incident perpendicular to the interface. The Brewster angle is the angle at which the parallel polarization is perfectly transmitted into the glass (no reflected light), and (b) depicts the corresponding situation at the glass–air interface, which leads to the case of total internal reflection for a range of supercritical angles.

There are crystalline materials that are not isotropic, that is, their molecules or unit cells have an asymmetry that leads to different refractive indices for different polarization directions (birefringence). Especially noteworthy is the fact that an input beam entering such a material will usually be split into two beams in the birefringent crystal, traveling in different directions inside the material. By cutting crystal wedges along different directions and joining them together, one can make a Wollaston or a Nomarski prism, from which the beams of p- and s-polarization likewise leave slightly tilted with respect to each other. Such prisms are used in a microscopy mode called differential interference contrast (DIC) (Chapter 2).

For the concept of a complex amplitude, we introduced the phasor diagram as an easy way to visualize it. If we want to extend this concept to full electric field vectors and the effects of polarization, we need to draw a phasor for each of the oscillating electric field components. The relative phase difference (the difference between the angles of the phasors to the real axis) determines the state of polarization.

When dealing with microscope objectives of high performance, light will often be focused onto the sample under very high angles.
This usually poses a problem for methods such as polarization microscopy because for some rays, the polarization will be distorted for geometrical reasons and because of the influence of the Fresnel coefficients. Especially affected are the rays at high angles positioned at 45° to the orientation defined by the linear polarization. This effect is seen, for example, when trying to achieve light extinction in a DIC microscope (without a sample): a "Maltese cross" becomes visible, stemming from such rays with insufficient extinction. There are ways to overcome this problem of high-aperture depolarization. One such possibility is an ingenious design of a polarization rectifier based on an air-meniscus lens (Inoué and Hyde, 1957). Another possibility to correct the depolarization is the use of appropriate programmable spatial light modulators in the conjugate back focal plane of an objective.

1.3 Optical Elements

In this section, we consider the optical properties of several optical elements: lenses, mirrors, pinholes, filters, and chromatic reflectors.

1.3.1 Lenses

Here we will analyze a few situations to understand the general behavior of lenses. In principle, we could use Snell's law to calculate the shape an ideal lens needs in order to focus light. However, this would be outside the scope of this chapter, and we assume that a lens with refractive index n and radii of curvature R1 and R2 (each positive for a convex surface) focuses parallel light to a point at the focal distance f as given by Hecht (2002)

$$\frac{1}{f} = (n-1)\left[\frac{1}{R_1} + \frac{1}{R_2} - \frac{(n-1)\,d}{n R_1 R_2}\right]$$

with d denoting the thickness of the lens measured at its center on the optical axis. The above equation is called the lensmaker's equation for air.
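As a small numerical illustration of the lensmaker's equation, the sketch below evaluates the focal length of a symmetric biconvex lens, once with its real center thickness and once with the thickness set to zero. The function name and the example values (n = 1.52, R1 = R2 = 100 mm, d = 5 mm) are assumptions chosen only for illustration; the sign convention follows the text (both radii positive for convex surfaces).

```python
def focal_length(n, r1, r2, d=0.0):
    """Focal length from the lensmaker's equation for a lens in air.

    n  : refractive index of the lens material
    r1 : radius of curvature of the first surface (positive if convex)
    r2 : radius of curvature of the second surface (positive if convex)
    d  : center thickness on the optical axis (same length unit as r1, r2)
    """
    inverse_f = (n - 1.0) * (
        1.0 / r1 + 1.0 / r2 - (n - 1.0) * d / (n * r1 * r2)
    )
    return 1.0 / inverse_f

# Hypothetical biconvex lens, all lengths in millimeters.
f_thick = focal_length(1.52, 100.0, 100.0, d=5.0)
f_zero_thickness = focal_length(1.52, 100.0, 100.0, d=0.0)
print(f"d = 5 mm: f = {f_thick:.2f} mm")
print(f"d = 0 mm: f = {f_zero_thickness:.2f} mm")
```

For this example the thickness term changes the focal length by less than 1%, which gives a feeling for when the term containing d may be dropped.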
If the lenses are thin and the radii of curvature are large, the term containing d/(R1 R2) can be neglected, yielding the equation for "thin" lenses:

$$f = \frac{1}{(n-1)\left(\dfrac{1}{R_1} + \dfrac{1}{R_2}\right)}$$

This approximation is usually made, and the rules of geometrical optics as stated below apply. The beauty of geometrical optics is that one can construct ray diagrams and graphically work out what happens in an optical system. In Figure 1.9a, it can be seen how all the rays parallel to the optical axis focus at the focal distance of the lens, as this is the definition of the focus of a lens. The optical axis refers to the axis of symmetry of the lenses as well as to the general direction of propagation of the rays. A spherically converging wave is generated behind the lens, which then focuses at the focal point of the lens. In Figure 1.9b, it can be seen that this is also true for parallel rays entering the lens at an oblique angle, as they also get focused in the same plane. What can also be seen here are two more basic rays used for the geometrical construction of ray diagrams. The ray going through the center of a thin lens is always unperturbed. This is easily understood, as the material is oriented at the same angle on its input and exit side. For a thin lens, this "slab of glass" has to be considered as infinitely thin, thus yielding no displacement of this central ray, and we can draw the ray right through the center of the lens.

[Figure 1.9: ray diagrams with labels optical axis, focal length f, front focal point, central ray, ray parallel to the central ray, and ray parallel to the optical axis.]

Figure 1.9 Focus of a lens under parallel illumination. (a) Illumination parallel to the optical axis. (b) Parallel illumination beams tilted with respect to the optical axis, leading to a focus in the same plane but at a distance from the optical axis.

The other important ray used in this geometrical construction is the ray passing through the front focal point of the lens.
In geometrical optics of thin lenses, lenses are always symmetrical; thus, the front focal distance of a thin lens is the same as the back focal distance. The principle of optical reciprocity states that one can always retrace the direction of the light rays in geometrical optics and get the identical paths of the rays. Obviously, this is not strictly true for every optical setup. For example, absorption will not turn into amplification when the rays are propagated backward. However, from this principle, it follows that if an input ray parallel to the optical axis always goes through the image focal point on the optical axis, a ray (now directed backward) going through such a focal point on the optical axis will end up being parallel to the optical axis on the exit side of the lens.

Parallel light is often referred to as coming from sources at infinity, as this is the limiting case when moving a source further and further away. Hence, a lens focuses an image at infinity to its focal plane because each source at an "infinite distance" generates a parallel wave with its unique direction. Thus, a lens will "image" such sources to unique positions in its focal plane. The starry night sky is a good example of light sources at almost infinite distance. Therefore, telescopes have their image plane at the focal plane of the lens.

In a second example (Figure 1.10), we now construct the image of an object at a finite distance. As we consider this object (with its height S) to be emitting the light, we can draw any of its emitted rays. The trick now is to draw only those rays for which we know the way they are handled by the lens:

• A ray parallel to the optical axis will go through the focus on the optical axis (ray 1).
• A ray going through the front focal point on the optical axis will end up being parallel to it (ray 2).
• A ray going through the center of the lens will pass through it unperturbed (ray 3).

Furthermore, we trust that lenses are capable of generating images, that is, if two rays cross each other at a certain point in space, all rays will cross at this same point. Thus, if such a crossing is found, we are free to draw other rays from the same source point through this same crossing (ray 4). In Figure 1.10, we see that the image is flipped over and generally has a different size (MS) compared to the original, with M denoting the magnification. We find two conditions for similar triangles as shown in the figure. This leads to the description of the imaging properties of a single lens:

$$\frac{1}{f} = \frac{1}{D_o} + \frac{1}{D_i}, \quad \text{with the magnification } M = -\frac{D_i}{D_o},$$

where D_o is the distance from the lens to the object in front of it, D_i is the distance from the lens to the image, and f is the distance from the lens to its focal plane (where it would focus parallel rays).

[Figure 1.10: ray diagram with object height S, image height MS, distances D_o and D_i, front and back focal points at distance f, and rays 1 to 4. Similar triangles: $MS/D_i = -S/D_o$ and $S/(D_o - f) = -MS/f$. Magnification: $M = -D_i/D_o$. Magnified image at: $D_i = (1/f - 1/D_o)^{-1}$.]

Figure 1.10 A single lens imaging an object as an example for drawing optical ray diagrams. Ray 1 starts parallel and will thus be refracted toward the back focal point by the lens, ray 2 goes through the front focal point and will thus be parallel, and ray 3 is the central ray, which is unaffected by the lens. By knowing that this idealized lens images a point (the tip of S) to a point (the tip of –MS), we can infer the refraction of rays such as ray 4.

Using the aforementioned ingredients of optical construction, very complicated optical setups can be treated with ease. However, in this case, it is often necessary to consider virtual rays that do not physically exist.
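The imaging equation of the single lens already hints at such virtual constructions. The sketch below solves it for the image distance and the magnification; the function name and the numerical values (f = 50 mm, objects at 75 mm and at 25 mm) are hypothetical and serve only as an illustration. For an object inside the focal length, the image distance comes out negative, which we interpret here as a virtual image on the object side of the lens.

```python
def image_of(f, d_o):
    """Solve 1/f = 1/D_o + 1/D_i for the image distance D_i.

    Returns (D_i, M) with the magnification M = -D_i / D_o.
    A negative D_i indicates a virtual image on the object side.
    """
    if d_o == f:
        raise ValueError("object in the front focal plane: image at infinity")
    d_i = 1.0 / (1.0 / f - 1.0 / d_o)
    return d_i, -d_i / d_o

# Object outside the focal length: real, inverted, magnified image.
d_i_real, m_real = image_of(50.0, 75.0)
print(f"D_o = 75 mm: D_i = {d_i_real:.1f} mm, M = {m_real:.2f}")

# Object inside the focal length: virtual, upright image.
d_i_virtual, m_virtual = image_of(50.0, 25.0)
print(f"D_o = 25 mm: D_i = {d_i_virtual:.1f} mm, M = {m_virtual:.2f}")
```

In the second case no rays physically cross behind the lens; the outgoing rays merely look as if they came from a point in front of it.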
Such rays, for example, are drawn backward through a system of lenses, ignoring the lenses completely, with the sole purpose of determining where a virtual focal plane would lie (i.e., the rays after this lens system look as if they came from this virtual object). Then the new rays can be drawn from this virtual focal plane, adhering to the principal rays that are useful for the construction of image planes in the following lenses.

1.3.2 Metallic Mirror

In the examples of refraction (Figure 1.5), we considered the so-called dielectric materials, where the electrons are bound to their nuclei. This leads to a phase shift of the scattered wave with respect to the incident wave. For metals, the situation is slightly different, as the valence electrons are essentially free (in a so-called electron gas). As a result, they always oscillate such that they essentially emit a 180° phase-shifted wave, which is destructive along the direction of propagation without really phase-shifting the incident wave. The reason for this 180° phase shift is that the conducting metal will always compensate for any electric field inside it by moving charges. Thus, at an interface, there is no transmitted wave, but only a reflected wave from the surface. Huygens' principle can also be applied here to explain the law of reflection, which states that the wave will leave the mirror at the same angle to the mirror normal but reflected on the opposite side of the normal. However, the light wiggling the electrons induces a small current, and the material of the mirror has some resistance. This will, in effect, lead to absorption losses. The reflectivity of a good-quality front-silvered mirror is typically 97.5% (for λ = 450 nm to 2 μm). These mirrors are quite delicate and easy to damage, often leading to much higher losses in practice.

1.3.3 Dielectric Mirror

Nonmetallic materials also show a certain amount of reflection.
For example, glass (n = 1.518) at normal incidence shows about 4% of reflection from its surface owing to the interference effects of the waves generated in the material. Through careful design, depositing multiple layers of well-defined thicknesses and different refractive index materials, it is possible to reach a total reflectivity of very close to 100% for a well-defined spectral range (as for interference filters and dichromatic beam splitters) or alternatively over a significant band of colors and incidence angles (as in broadband mirrors). Interestingly, the absorptive losses can be much smaller than for metallic (e.g., silver-coated) mirrors, leading to overall higher performance. In addition, these mirrors are also less prone to scratches on their surfaces. However, an important point to consider is that waves of different polarizations will penetrate the mirror material to a different depth when incident at an angle not perpendicular to the surface. This will lead to the phase shift being different for p- and s-polarizations. A linear polarization, when oriented at 45° to the common plane of the incident and reflected beam for instance, will not be conserved owing to this phase shift and will be converted to elliptical polarization. This effect is particularly prominent when the wavelength is close to the wavelength edge of the coating.

With such multilayer coatings, an effect opposite to reflection can also be achieved. The usual 4% reflection at each air–glass and glass–air surface of optical elements (e.g., each lens) can be significantly reduced by coating the lenses with layers of well-defined thickness and refractive indices, the so-called antireflection (AR) coating. When viewed at oblique angles, such coated surfaces often have a blue oily shimmer, as one might have seen on photographic camera lenses.

1.3.4 Pinholes

Pinholes are, for example, used in confocal microscopy to define a small area transmitting light to the spatially integrating detector.
Typically, these pinholes are precisely adjustable in size. This can be achieved by translating several blades positioned behind each other. Another important application of a pinhole is to generate a uniformly wide clean laser beam of Gaussian shape. For more details, see Section A.2.

Pinholes can be bought from several manufacturers, but it is also possible to make a pinhole using kitchen tin foil. The tin foil is tightly folded a number of times (e.g., four times). Then a round sharp needle (e.g., a pin is fine, but do not use a syringe) is pressed into that stack, penetrating a few layers, but not going right through it. After removing the needle, the tin foil is unfolded, and by holding it in front of a bright light source, the smallest pinhole in the series of generated pinholes can be identified by eye. This is typically a good size for being used in a beam expansion telescope for beam cleanup.

1.3.5 Filters

There are two different types (and combinations thereof) of filters. Absorption filters (sometimes called color glass filters) consist of a thick piece of glass in which a material with strong absorption is embedded. An advantage is that a scratch will not significantly influence the filtering characteristics. However, a problem is that the spectral edge of the transition between absorption and transmission is usually not very steep and the transmission in the transmitted band of wavelengths is not very high. Note that when one uses the term "wavelengths" sloppily, as in this case, one usually refers to the corresponding wavelength in vacuum and not the wavelength inside the material. Because of these shortcomings of absorption filters, high-quality filters are always coated on at least one side with a multilayer structure of dielectric materials. These coatings can be tailored precisely to reflect exactly one range of wavelengths while effectively transmitting another range.
A problem here is that, as such coatings work owing to interference, there is an inherent angular and wavelength dependence. In other words, a filter placed at a 45° angle will transmit a different range of wavelengths than one placed at normal incidence. In a microscope, the position in the field of view in the front focal plane of the objective lens will define the exact angle under which the light leaves the objective. Because such filters are typically placed in the space between objective and tube lens (the infinity space), this angle is also the angle of incidence on the filter. Thus, there could potentially be a position-dependent color sensitivity owing to this effect. However, because the fluorescence spectra are usually rather broad and the angular spread for a typical field of view is in the range of only ±2°, this effect can be completely neglected for fluorescence microscopy.

It is important to reduce background as much as possible. Background can stem from the generation of residual fluorescence or Raman excitation even in glass. For this reason, optical filters always need to have their coated side pointing toward the incident light. Because the coatings usually do not reach completely to the edge of the filter, one can determine the coated side by careful inspection. When building home-built setups, one also has to ensure that no light can possibly pass through an uncoated bit of a filter, as this would have disastrous effects on the suppression of unwanted scattering.

1.3.6 Chromatic Reflectors

Similarly, chromatic reflectors are designed to reflect one range of wavelengths (usually shorter than a critical wavelength) and transmit another range. Such reflectors are often called dichroic mirrors, which is a confusing and potentially misleading term, as the physical effect "dichroism", which refers to a polarization-dependent absorption, has nothing to do with it.
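The size of the angular dependence of interference coatings can be estimated with a commonly used approximation that is not part of this chapter and is assumed here: the spectral features of a tilted coating shift toward shorter wavelengths as λ(θ) = λ0 · sqrt(1 − sin²θ / n_eff²), where n_eff is an effective refractive index of the coating. The value n_eff = 2.0 and the 520 nm edge in the sketch below are purely hypothetical; real coatings differ, and the approximation ignores polarization.

```python
import math

def tilted_edge_wavelength(lambda_0_nm, theta_deg, n_eff=2.0):
    """Approximate spectral position of a coating feature at tilt theta.

    lambda_0_nm : position at normal incidence (vacuum wavelength, nm)
    theta_deg   : angle of incidence in air, in degrees
    n_eff       : assumed effective refractive index of the coating
    """
    s = math.sin(math.radians(theta_deg)) / n_eff
    return lambda_0_nm * math.sqrt(1.0 - s * s)

edge_nm = 520.0  # hypothetical edge position at normal incidence
for theta in (0.0, 2.0, 45.0):
    shifted = tilted_edge_wavelength(edge_nm, theta)
    print(f"{theta:4.1f} deg: edge at {shifted:.2f} nm (shift {edge_nm - shifted:.2f} nm)")
```

Under these assumptions, a tilt of 2° moves the edge by well under a nanometer, whereas operation at 45° moves it by tens of nanometers, in line with the qualitative statements in the text.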
Chromatic reflectors are manufactured by multilayer dielectric coating. The comments above about the wavelength and angular dependence apply equally well here; even more so because for 45° incidence, the angular dependence is much stronger and the spectral steepness of the edges is much softer than for angles closer to normal incidence. This has recently led microscope manufacturers to redesign setups with the chromatic reflectors operating closer to normal incidence (with appropriate redesign of the chromatic reflectors).

1.4 The Far-Field, Near-Field, and Evanescent Waves

In the discussion above, we considered light as a propagating wave, which led to the introduction of the concept of light rays. In vacuum or in a homogeneous medium, propagating waves are often the only solution to Maxwell's equations. In this situation, known as the far field, all allowed distributions of light can be described as a superposition of propagating plane waves, an approach that is explored further in Chapter 2. However, if we have inhomogeneities or sources of light in very close proximity (at most a few wavelengths away) to the point of observation, the situation changes and we also have to consider the so-called nonpropagating (evanescent) waves. This regime is called the near field. A simple example is an emitting scalar source, whose scalar amplitude can be written as $A = e^{2\pi i k r}/r$. At a distance of several wavelengths from the center, this amplitude can be described well by a superposition of plane waves, each with the same wavelength. However, close to the source, this approximation cannot be made. We also need waves with lateral wavelength components that are smaller than the total wavelength to synthesize the above $r^{-1}$-shaped amplitude distribution.
This, however, is possible only by using an imaginary value for the axial (z) component of the wave vector, which then forces these waves to decay exponentially with distance (hence the name "evanescent" components). Surprisingly, with this trick, a complete synthesis of the amplitude A remains possible, for instance in the half-space to the right of the source. This synthesis is called the Weyl expansion. The proximity of the source influences the electric field in its surroundings. A spherically focused wave in a homogeneous medium will remain a far-field wave with a single wavelength and generate quite a different amplitude pattern as compared to the above emitted wave, which again shows the importance of the near-field waves in the vicinity of sources of light, inhomogeneities, or absorbers.

Another important point is that the near field does not dissipate energy. The oscillating electric field can be considered as "bound" to the emitter or inhomogeneity, as long as it remains in a homogeneous medium with no absorbers present.

A few examples of such near-field effects are given as follows:

• First, let us consider total internal reflection. From Snell's law, we can predict that a highly inclined beam of light hitting a glass–air interface would not be allowed to exist on the air side, as there would be no solution for a possible angle of propagation. The phase-matching condition at the interface cannot be fulfilled (Figure 1.11). Indeed, the experiments show exactly this. The beam is 100% reflected from that interface (Figure 1.8). However, because of the presence of the interface, there will be a bound near field "leaking" a few wavelengths into the air side. This is precisely described by Maxwell's equations, yielding an exponentially evanescent oscillation of the electric field. This field normally does not transport any energy, and the reflection remains 100%.
However, as soon as an absorber such as a fluorophore is placed into this field just about 100 nm away from the interface, it will strongly absorb light and fluoresce. Obviously, in this case, the reflected light will no longer correspond to 100% of the incident light.

[Figure 1.11: interface between medium 1 (n1 = 1.5, glass) and medium 2 (n2 = 1, air), showing the reflected beam, the wavelengths λ1 and λ2, and the shortest possible wavelength along the interface.]

Figure 1.11 Total internal reflection. If a parallel beam of light hits the glass-to-air interface at a supercritical angle (Figure 1.8), no possible wave can exist in the optically less dense medium (right side) to form a continuous electric field with the incoming wave across the interface. Note that the λ2 in air is determined by the medium and the vacuum wavelength, and tilting the wave further will only make its effective wavelength along the interface larger.

• As a second example, we consider what happens when the same fluorophore emits light. Similar to the description above, the dipole emission electric field will contain near-field components. Normally, these components are "bound" and do not carry energy away from the source. However, an air-to-glass interface in its vicinity will change the situation. It allows "bound" near-field components to be converted into propagating far-field waves on the glass side. These can be exactly the waves that would normally not propagate on the air side, but the waves of the near field can fulfill the phase-matching condition at the air–glass interface. In effect, more energy per time is withdrawn from the emitter than if it were homogeneously embedded in air. For a single molecule, the result is a quicker emission of the fluorescent photon and thus a shortened fluorescence decay time that can indeed be measured.
• A third example is a bare glass fiber. Here, the light is constantly totally internally reflected at the glass–air interface and, therefore, guided by the fiber.
However, here the evanescent light also leaks to the outside of the fiber. If a second fiber is brought into its close vicinity (without touching it), light can couple into this fiber. In quantum mechanical terms, this situation is often referred to as tunneling, as the energy is able to tunnel through the "forbidden region" of air from one material into the next, where the wave can again propagate. To prevent this, the fiber is usually surrounded by a material with lower refractive index ("cladding"), as shown in Figure 1.12.

[Figure 1.12: glass fiber with core (n1) and cladding (n2), direction of light propagation along z, and the intensity profile |E|² across the fiber.]

Figure 1.12 A glass fiber is shown sliced along its maximal diameter (a) and in a cross-sectional view (b). It consists of a central core and the cladding with a lower refractive index, which also provides mechanical protection of the core. In the middle left, an example of a typical intensity distribution of the light guided in this single-mode fiber is shown. Note that this intensity and the electric field extend a little bit into the cladding.

• Another important example is the field behind a subwavelength aperture, as is the case in scanning near-field optical microscopy (SNOM). Here, the aperture is formed by a tiny hole at the end of a metal-coated glass tip. A fluorophore will be significantly excited only if it lies within this near field, as the field strengths are far greater here than anywhere else. Furthermore, this near field has a much better confinement (roughly the size of the aperture, practically down to about 20 nm) than any far-field optical wave (due to the restrictions of the famous Abbe limit discussed in Chapter 2). Thus, SNOM can resolve structures on membranes of cells at a much higher resolution, only exciting fluorescence a few nanometers away from the tip. The image is formed by scanning this tip carefully over the surface of the object.
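The first example, total internal reflection, can be made quantitative with a short sketch. It assumes the usual phase-matching argument: the wave vector component along the interface is conserved, so the axial component on the air side becomes imaginary beyond the critical angle and the intensity decays as exp(−z/d). The function names, the vacuum wavelength of 500 nm, and the 60° angle of incidence are hypothetical choices; the refractive indices are those of Figure 1.11.

```python
import cmath
import math

def axial_wave_vector(n1, n2, theta_deg, lambda_0_nm):
    """Axial (z) wave vector component in medium 2, in rad/nm.

    The lateral component k_x is fixed by phase matching at the interface.
    A purely imaginary result signals an evanescent wave.
    """
    k0 = 2.0 * math.pi / lambda_0_nm
    k_x = k0 * n1 * math.sin(math.radians(theta_deg))
    return cmath.sqrt((k0 * n2) ** 2 - k_x ** 2)

def intensity_decay_depth_nm(n1, n2, theta_deg, lambda_0_nm):
    """Distance over which the evanescent intensity drops to 1/e.

    Returns infinity if the wave propagates (no evanescent decay).
    """
    k_z = axial_wave_vector(n1, n2, theta_deg, lambda_0_nm)
    if k_z.imag == 0.0:
        return math.inf
    return 1.0 / (2.0 * k_z.imag)

n_glass, n_air = 1.5, 1.0  # values from Figure 1.11
depth = intensity_decay_depth_nm(n_glass, n_air, 60.0, 500.0)
print(f"1/e intensity decay depth at 60 deg: {depth:.0f} nm")
```

For these values the decay depth is a fraction of the wavelength, which is why only fluorophores within roughly a hundred nanometers of the interface are excited efficiently.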
As a final remark, it is interesting to note that these near fields can be very strong, as long as they are not absorbed. Already in the case of evanescent waves, the fields are usually stronger than the plane wave in a homogeneous medium would be. However, in some situations, these waves can be made incredibly strong. This is the basis for Pendry's near-field lens, which is theoretically capable of precompensating the quick drop in the strength of the near field by making this near field very strong before it leaves the lens. In theory, such a super lens could reconstruct the field, including the near-field components, at the image plane, which means that the optical resolution could be arbitrarily good. However, a potential problem is that the tiniest absorption or scattering loss will lead to a breakdown of this field with severe effects on the imaging ability of this near-field lens.

1.5 Optical Aberrations

High-resolution microscopes are difficult to manufacture and usually come with quite tight tolerances on experimental parameters (e.g., the temperature and the refractive index of the mounting medium). Thus, in the real world, the microscope will almost always show imperfections. For a microscopist, it is very useful to be able to recognize such optical aberrations and classify them. One can then try to remove them through modifications to the setup or, in the extreme case, by using special methods called adaptive optics, which measure and correct such aberrations using actuators (e.g., a deformable mirror) that modify the wave front before it passes through the optics and the sample.

Optical aberrations are usually classified by Zernike modes. The corresponding equations describe the modifications of the phase of light in the back focal plane of the idealized objective lens as compared to the ideal plane wave that would generate a spherical wave front at the focus.
The most basic modes of aberration (called tip, tilt, and defocus) are usually not noticed, as they simply displace the image by a tiny bit along the X-, Y-, and/or Z-direction. They are also not a problem, as the image quality remains unaffected.

The most important aberration that frequently occurs is spherical aberration. In fact, a normal thick lens with spherical surfaces to which the above lensmaker's equation would apply will show spherical aberration: rays from the outer rim of the lens focus to a slightly different position than rays from the inner area. This blurs the focus and decreases the brightness of pointlike objects. A modern objective can achieve an image quality free of spherical aberration by careful optical design. However, even for highly corrected objectives such as a Plan Apochromat (Chapter 2), spherical aberration is commonly observed in practice. This is because of a mismatch of the room temperature to the design temperature, or because the objective is not used according to its specifications (e.g., the sample is embedded in water, n = 1.33, even though the objective is an oil-immersion objective designed for embedding in oil, n = 1.52). Yet another common reason for spherical aberration is using the wrong thickness of the coverslip, that is, using a number one (#1) coverslip, even though the objective is designed for 0.17 mm thickness, which corresponds to a number 1.5 (#1.5) coverslip. A convenient way to notice spherical aberration is to pick a small (ideally pointlike) bright object and manually defocus up and down. If both sides of defocus essentially show the same behavior (a fuzzy pattern of rings), the setup is free of spherical aberration. However, if an especially nice pattern of rings is seen on one side and no rings (or very fuzzy rings) or a "smudge" is seen on the other side, strong spherical aberration is present.
Some objectives (especially objectives for total internal reflection fluorescence (TIRF) microscopy) have a correction collar that can be rotated until the image is free of spherical aberration.

Another very common aberration is astigmatism. When defocusing a small pointlike object up and down, one sees an ellipse oriented in a particular direction above the plane of focus and a perpendicular orientation of the ellipse below the focus. Astigmatism is often seen in images at positions far away from the center of the ideal field of view. A close observation of the in-focus point-spread function shows a star-shaped (or cross-shaped) appearance.

The last position-independent aberration to mention here is coma. Coma can arise from misaligned (tilted) lenses. An in-focus point-spread function showing coma looks like an off-center dot opposed by an asymmetric, quarter-moon-shaped comet.

Aberrations can also result in a position-dependent displacement, called the curvature of field. In addition, displacements can often depend on the color, which is called chromatic aberration.

It should be noted that in confocal microscopes, one often sees a banana-shaped 3D point-spread function when looking in the XZ-plane, which is also a sign of aberrations. The ideal confocal point-spread function in 3D should have the shape of an American football.

1.6 Physical Background of Fluorescence

Fluorophores are beautiful to look at, as they absorb light at one color and emit it at another color. This effect is well known from the nice bluish color that freshly washed T-shirts emit when illuminated with ultraviolet light, such as the so-called black light used in some night clubs. Almost any molecular system shows fluorescence. However, most things fluoresce very weakly, such that their fluorescence is usually undetectable, or their excitation is far in the ultraviolet region of the spectrum.
Let us consider a simple fluorescent molecule that emits green light when illuminated with blue light. In the language of quantum physics, the molecule is excited by a blue photon and emits a green photon, which has lower energy. Hence, one might ask where the energy went, to which the answer is that it is dissipated mostly as heat to the surrounding medium.

Looking a little more into the detail, the following model explains the effect (Figure 1.13). The molecule consists of a number of atomic nuclei, which we consider to be at well-defined positions. However, the electrons, being far lighter, are whizzing about in their respective clouds. When a photon is absorbed by the molecule, one electron is displaced from one orbital into a previously unoccupied orbital. This happens almost instantaneously (in the range of femtoseconds), so that the nuclei do not have a chance to move to the new equilibrium position. As the charge distribution around the nuclei has changed, they will feel net forces and thus start to vibrate. If this relatively high energy for a vibrational mode is interpreted as a temperature, one can think of a very high (>100 °C) but very local temperature. The vibrating nuclei will then, in a time span of only a few picoseconds, redistribute their vibrational energy over the whole molecule and then to the surrounding medium until they have found a new equilibrium position with the changed electronic charge distribution. In ordinary quantum mechanics, this new situation would be stable forever, as there are no oscillating charges and no light would be emitted. However, surprisingly, because of some effects that only an advanced theory called quantum electrodynamics can explain, there is still a chance for this situation to change, with the result of the emission of a photon and a second redistribution of the electron back into its original cloud.
Because this cloud was also deformed, due to the changed position of the nuclei, it will have a higher energy. Again, the nuclei will feel the forces and start vibrating and dissipating their energy to the surroundings. In summary, after resting for some time (approximately nanoseconds) in the excited state, the fluorophore emits a redshifted photon (here the green photon after having absorbed a blue one), for a second time leaving some energy in the vibrating molecular nuclei in the electronic ground state (S0). The shift in wavelength between absorbed and emitted photon is called the Stokes shift.

[Figure 1.13: electron cloud and atomic nuclei of a two-atom molecule. (a) Nuclei cool (at room temperature). (b) Nuclei hot (vibrationally excited, >100 °C).]

Figure 1.13 An example of a simple two-atom molecule with its electrons in the electronic ground state S0 (a) and electronic excited state S1 (b). The redistribution of electrons upon excitation (b) leads to effective forces (little arrows) on the atomic nuclei, causing a molecular vibration right after the excitation event, which is rapidly cooled down by the surroundings.

Figure 1.14 shows a summary of these effects in an energy level diagram called the Jablonski diagram. In this diagram, the rotational, vibrational, and electronic energy stored in the fluorophore are all summed together and represented as possible states of the molecule. At room temperature, each degree of freedom gets about 0.5 kT of energy, which amounts to about 0.013 eV. This amount of energy is far less than the energy of a photon or the energy between the electronic transitions. The surface of the sun at a temperature of 5778 K gives a good example of the sort of temperature required to emit visible light.
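To get a feeling for these energy scales, the following sketch compares the thermal energy per degree of freedom with the energy of visible photons and with the energy left behind by a Stokes shift. The physical constants are the exact SI values; the room temperature of 293.15 K and the example wavelengths (488 nm for the absorbed blue photon, 520 nm for the emitted green photon) are assumptions chosen for illustration, and the function names are hypothetical.

```python
K_B = 1.380649e-23    # Boltzmann constant in J/K
H = 6.62607015e-34    # Planck constant in J s
C = 299792458.0       # speed of light in vacuum in m/s
EV = 1.602176634e-19  # joule per electronvolt

def thermal_energy_ev(temperature_k, factor=0.5):
    """Thermal energy factor * k * T in electronvolts."""
    return factor * K_B * temperature_k / EV

def photon_energy_ev(wavelength_nm):
    """Energy of a photon with the given vacuum wavelength, in electronvolts."""
    return H * C / (wavelength_nm * 1e-9) / EV

room = thermal_energy_ev(293.15)        # assumed room temperature
blue = photon_energy_ev(488.0)          # assumed absorbed photon
green = photon_energy_ev(520.0)         # assumed emitted photon
stokes_loss = blue - green              # energy dissipated as heat

print(f"0.5 kT at room temperature: {room:.4f} eV")
print(f"blue photon (488 nm):       {blue:.3f} eV")
print(f"green photon (520 nm):      {green:.3f} eV")
print(f"Stokes loss:                {stokes_loss:.3f} eV")
print(f"photon / thermal energy:    {blue / room:.0f}")
```

Under these assumptions, a visible photon carries on the order of a few hundred times the thermal energy per degree of freedom, and even the small part of it left in the molecule by the Stokes shift exceeds the thermal energy considerably.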
In addition, the vibrational modes of most molecules require more energy than room temperature provides. However, this is not true for rotational modes, some of which are excited in a typical molecule even at room temperature. After excitation by a photon, the molecule will usually end up in a vibrationally excited state (Figure 1.13). These vibrations possess an energy corresponding to a temperature of several hundred degrees centigrade. After a quick cooling down of these vibrations (in the range of picoseconds), the molecule will emit another photon from the vibrational ground state of the electronically excited state. This emission process is often described by a single exponential decay with a characteristic fluorescence lifetime τ of the fluorophore, which is typically in the range of τ = 1–5 ns. After emission, the molecule will again end up being vibrationally excited, followed by another cooling.

Figure 1.14 The Jablonski diagram of states. This diagram shows the total energy of a dye molecule in various states (vertical axis: total energy; the singlet and triplet manifolds are labeled). The groups of levels correspond to the electronic excited states and the thinner lines denote the various nuclear vibrational levels. Various transitions between the different levels are indicated by arrows. The order of magnitude for the timescales is indicated (ps, ns, and μs; phosphorescence ∼μs), and the probability for the respective transition is indicated. P_S0 means "the probability of the molecule to be in state S0."

Normally molecules are in the singlet manifold of electronic states. This means that the spin of the excited electron is oriented antiparallel to the spin of the single electron left behind in its original electron cloud.
However, because of spin-orbit coupling (enhanced in the presence of heavy atoms), a single electron can undergo a spin flip and end up in the triplet state T0 (Figure 1.14, right-hand side). There, it is trapped for a longer time, as the radiative transition (phosphorescence) back to the S0 electronic ground state is spin-forbidden and only possible due to another event of spin-orbit coupling. Owing to the often long lifetime of triplet states (approximately microseconds) and their enhanced reactivity, this triplet population of molecules has an enhanced chance of undergoing photobleaching.

In Figure 1.15, we take an even closer look at the process of excitation and de-excitation. The curves represent the potential energy of the electronic ground state and the electronic excited state as a function of internuclear distance. In these curves, the energy levels of the quantum mechanical eigenstates are shown along with their associated probability densities. These signify how probable it is to find the nuclei at a certain relative distance, depending on the vibrational state.

Figure 1.15 The Franck–Condon principle. The equilibrium position of the atomic nuclei depends on the excitation state of the electrons: the electronic excited state (S1) leads to a potential that is shifted relative to that of the ground state (S0). As the probability of excitation depends on the spatial overlap of the probability of nuclei positions ("wave functions") in the ground and excited electronic states, excitation and emission are most probable for the excited vibrational levels of the molecules. The excitation and emission spectra are thus separated by the Stokes shift. (Axes: total energy versus nuclear distance; labels: potential energy, vibrational energy levels, wave function, probable transition.)

If we now illuminate the molecule with light of a well-defined frequency, that is, a well-defined photon energy, we would like to know what the probability of its absorption is and in which state the molecule will be after its absorption. This would allow us to predict the absorption spectrum of the fluorophore. As the electronic transition can be assumed to happen instantaneously, because the electrons are several thousand times lighter than the nuclei, we need to consider the probability that the nuclei go from their state in the electronic S0 configuration into a state in the electronic S1 configuration. This is given by the overlap integral of the initial state of the nuclei (with the electrons in the S0 state) and the final state of the nuclei (with the electrons in the S1 state). As the molecules may themselves be in a range of possible energy states owing to solvent interactions or rotational states, the second ingredient needed is the density of states around the transition energy that is defined by the photon. The product of the state density and the square of the transition dipole moment describes the absorption probability of a photon. This is called Fermi's golden rule. Note that in the approximation of fast electrons, which always see a potential (and thus feel forces) as defined by the current positions of the vibrating nuclei (the Born–Oppenheimer approximation), the transition dipole moment is composed of the overlap integral of vibrational states and the respective electronic transition dipole moment.

It is now interesting to see that for the process of emission, a very similar overlap integral has to be calculated, which determines the emission spectrum of the fluorophore. If we assume that the structures of the vibrational states in the electronic excited and the electronic ground state are similar (same harmonicity and negligible excitation to higher electronic states), we can predict that the features of the absorption and emission spectrum should be roughly like mirror copies of each other when plotted over energy.
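The mirror argument can be illustrated with a deliberately idealized model, which is an assumption of this sketch and not the treatment used in the chapter: a single vibrational mode, harmonic potentials of identical frequency in S0 and S1 that are merely displaced against each other, and only the lowest vibrational level populated before each transition. In that textbook case, the squared vibrational overlap between level 0 and level n is exp(−S) Sⁿ / n!, where the dimensionless displacement parameter S (the Huang–Rhys factor) and all numerical values below are hypothetical.

```python
import math


def franck_condon_factors(huang_rhys_s, n_levels):
    """Squared overlaps |<0|n>|^2 between the lowest vibrational level of one
    electronic state and level n of the other, for two displaced harmonic
    potentials of equal frequency."""
    return [
        math.exp(-huang_rhys_s) * huang_rhys_s**n / math.factorial(n)
        for n in range(n_levels)
    ]


def stick_spectra(e00_ev, vib_quantum_ev, huang_rhys_s, n_levels=10):
    """Return absorption and emission line lists as (energy in eV, weight).

    Absorption ends in vibrational level n of S1 (energy above the 0-0 line),
    emission ends in vibrational level n of S0 (energy below the 0-0 line).
    """
    factors = franck_condon_factors(huang_rhys_s, n_levels)
    absorption = [(e00_ev + n * vib_quantum_ev, f) for n, f in enumerate(factors)]
    emission = [(e00_ev - n * vib_quantum_ev, f) for n, f in enumerate(factors)]
    return absorption, emission


# Hypothetical parameters chosen only for illustration.
E00_EV = 2.45          # energy of the 0-0 transition
VIB_QUANTUM_EV = 0.17  # energy of one vibrational quantum
HUANG_RHYS_S = 1.2     # dimensionless displacement between the potentials

absorption, emission = stick_spectra(E00_EV, VIB_QUANTUM_EV, HUANG_RHYS_S)
for n, ((e_abs, weight), (e_em, _)) in enumerate(zip(absorption, emission)):
    print(f"n={n}: absorb at {e_abs:.2f} eV, emit at {e_em:.2f} eV, weight {weight:.3f}")
```

In this model, each absorption line has an emission partner of equal weight mirrored about the 0-0 energy, and for S larger than 1 the strongest line is not the 0-0 line but a transition into a vibrationally excited level. Real spectra deviate from this picture because of anharmonicity, several vibrational modes, and broadening.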
Indeed, this approximate mirror symmetry between excitation and emission spectrum is often seen. For a detailed discussion, see Schmitt, Mayerhöfer, and Popp (2011). Figure 1.16 displays an example of a fluorescence spectrum. The approximate mirror symmetry is clearly visible. Note that the excitation spectrum is typically measured by keeping the detection at the wavelength of maximum fluorescence emission and scanning the excitation wavelength. Similarly, the emission spectrum is recorded by fixing the excitation to the wavelength of maximum excitation. Owing to the heterogeneity of molecular conformations, with each molecule having a slightly shifted spectrum, there is an apparent overlap of the excitation spectrum with the emission spectrum.

Figure 1.16 The excitation and emission spectrum of the enhanced green fluorescent protein (for details on fluorescent proteins, see Chapter 4). The positions of the excitation and emission maxima are indicated by dotted lines. They usually serve as the reference point for measuring the other curve, that is, the emission curve is measured by exciting at the excitation maximum and the excitation curve by measuring at the emission maximum. (Axes: efficiency (%) from 0 to 100 versus wavelength (nm) from 300 to 600.) (Source: The curves were redrawn after a plot from the online Zeiss fluorophore database; http://www.microshop.zeiss.com/us/us_en/spektral.php.)

In many cases, the intramolecular details are less important and one is interested only in the overall photophysical properties of the molecule under study. Phenomena such as fluorescence saturation or Förster transfer can best be described by summarizing molecular substates and by modeling only transitions between them. In Figure 1.17, the vibrational states are omitted and all that matters in this simple model are the singlet ground (S0) and excited (S1) state, the triplet state (T0), and the destroyed (bleached) state of the molecule. From such schemes, one can construct a set of differential equations that govern the population behavior of an ensemble of many such molecules with similar states. Essential for this are the transition rates between the states that are written near the various arrows. Such a "rate," which is usually denoted by k, represents the probability per unit time for a molecule to go from one state to another. The unit of these rates is s⁻¹.

Figure 1.17 A simple state diagram with transition rates between the states S0, S1, T0, and the bleached state. Such diagrams summarize levels in the Jablonski diagram and can be used to construct rate equations to describe the photophysical behavior of the molecule. Rate constants are as follows: kf: radiative rate, kex: excitation rate, kn: non-radiative decay rate, kisc: intersystem crossing rate, kp: phosphorescence rate, kbleach: bleaching rate.

Thus, a set of differential equations is obtained by writing down one rate equation per state, considering all the outgoing and incoming arrows. As an example, consider the rate equation for the excited state S1:

$$\frac{\partial \langle S_1 \rangle}{\partial t} = k_{ex} \langle S_0 \rangle - \left( k_f + k_n + k_{isc} \right) \langle S_1 \rangle,$$

where the pointed brackets denote the probability of a molecule to be in the particular S0 or S1 electronic state and the various ks denote the transition rates as shown in Figure 1.17. Similarly, a rate equation is constructed for each of the other states. Together, these equations can serve to determine steady-state models (e.g., for continuous excitation) or time-dependent models (e.g., after pulsed excitation) for various photophysical processes. In addition, note that the relative chance of a specific process to happen is given by its rate in relation to the sum of all the rates leaving the state.
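As a minimal sketch of these two statements, the Python snippet below computes the relative chance of each process leaving S1 and evaluates the S1 population after a short excitation pulse, that is, the solution of the S1 rate equation with the excitation rate set to zero. The numerical rate constants and all function names are hypothetical and serve only as an illustration.

```python
import math

# Hypothetical rate constants in 1/s for the processes leaving S1.
RATES_LEAVING_S1 = {
    "k_f": 2.0e8,    # radiative rate
    "k_n": 4.0e7,    # non-radiative decay rate
    "k_isc": 1.0e7,  # intersystem crossing rate
}


def branching_fractions(rates):
    """Relative chance of each process: its rate over the sum of all rates."""
    total = sum(rates.values())
    return {name: k / total for name, k in rates.items()}


def s1_population_after_pulse(t, rates, s1_initial=1.0):
    """Solution of d<S1>/dt = -(k_f + k_n + k_isc) <S1>, i.e. the S1 rate
    equation with k_ex = 0 after a short pulse has prepared <S1> = s1_initial."""
    return s1_initial * math.exp(-sum(rates.values()) * t)


fractions = branching_fractions(RATES_LEAVING_S1)
for name, fraction in fractions.items():
    print(f"{name}: {fraction:.2f}")

for t_ns in (0.0, 2.0, 4.0, 8.0):
    population = s1_population_after_pulse(t_ns * 1e-9, RATES_LEAVING_S1)
    print(f"t = {t_ns:.0f} ns: <S1> = {population:.3f}")
```

The fractions add up to one by construction, and the population decays as a single exponential whose decay constant is the sum of all rates leaving S1.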
For example, the quantum efficiency, that is, the chance of a molecule to emit a fluorescence photon after excitation, is given in the example of Figure 1.17 by

$$QE = \frac{k_f}{k_f + k_n + k_{isc}}.$$

When dealing with several interacting molecules, which is the case for Förster transfer (Chapter 7), the correct way of describing the molecular states is to consider each combination of molecule states (e.g., molecule A in S1, molecule B in S0) as one combined state (A_S1 B_S0) and to denote the appropriate transitions between such combined states.

Furthermore, the lifetime of a state (after the molecule has reached this state) is given by the inverse of the sum of all the rates of the processes leaving this state. This means that the fluorescence lifetime is here obtained by

$$\tau = \frac{1}{k_f + k_n + k_{isc}}.$$

As a final note, the number of fluorescence photons emitted per second is given by the radiative rate kf multiplied by the probability of a molecule to be in the excited (S1) state.

1.7 Photons, Poisson Statistics, and Antibunching

In the above treatment of optics, we have treated light as waves and rays, but have not really required its description in terms of photons. However, the description of fluorescence already required the quantized treatment of absorption and emission events. In this section, we investigate the experimental effects caused by the photon nature of light in a bit more depth.

As seen above, the concept of "photons" is required owing to the observation that light gets absorbed and emitted in integer packages of energy. As these absorption and emission events are often observed to be uniformly but randomly distributed over time, detecting for a certain integration time T will count a number of photons N. If such a photon-counting experiment is repeated many times, the average will approximate the expectation value μ. More precisely, the probability P(N) to count N photons for a given expectation value μ is expressed by the Poisson distribution

$$P(N) = \frac{\mu^N}{N!} \, e^{-\mu}.$$
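The Poisson formula can be evaluated directly. The following Python sketch does so and numerically checks its normalization and its first two moments; the value of μ and the truncation of the sums at 200 counts are assumptions made for illustration.

```python
import math


def poisson_pmf(n, mu):
    """P(N = n) = mu^n exp(-mu) / n!, evaluated via logarithms so that
    large n does not overflow (mu must be positive)."""
    return math.exp(n * math.log(mu) - mu - math.lgamma(n + 1))


def mean_and_variance(mu, n_max=200):
    """Mean and variance of the photon count, summed over n = 0 .. n_max - 1.

    The truncation is harmless as long as n_max lies far above mu.
    """
    probs = [poisson_pmf(n, mu) for n in range(n_max)]
    mean = sum(n * p for n, p in enumerate(probs))
    variance = sum((n - mean) ** 2 * p for n, p in enumerate(probs))
    return mean, variance


MU = 10.0  # assumed expected photon number per integration time

total_probability = sum(poisson_pmf(n, MU) for n in range(200))
mean, variance = mean_and_variance(MU)

print(f"sum of P(N): {total_probability:.6f}")
print(f"mean:        {mean:.6f}")
print(f"variance:    {variance:.6f}")
```

The standard deviation of repeated counts is the square root of the computed variance, which is the quantity that sets the shot noise of a photon-counting measurement.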
Interestingly, this probability distribution has the property that its variance σ² is equal to the expectation value μ. Furthermore, it closely approximates a Gaussian distribution (with σ² = μ) for expected photon numbers larger than about 10 photons.

If a single fluorescent molecule is used for the generation of photons, the statistics can be different. The reason is that a fluorophore typically needs one or a few nanoseconds to be excited again and emit a photon. As a consequence, the photons are emitted in a somewhat more clocklike manner. In other words, the probability of detecting two photons quasi-simultaneously in a very short time interval is lower than what would normally be expected. This phenomenon is called antibunching. It can be seen as a dip (near zero time difference) in the temporal cross-correlation of the signals of two photon-counting detectors directed at the emission of a single fluorophore, split by a 50% beam splitter.

In summary, this chapter covered the basics of ray optics, wave optics, optical elements, and fluorescence to help the reader better understand the following chapters, where many of the principles described above are used and applied. If the aim is to build an optical instrument or to align it, see Appendix 1, which contains an in-depth discussion and tips and tricks on how to align optical systems.

References

Hecht, E. (2002) Optics, 4th edn, Chapter 6.2, Addison-Wesley. ISBN: 0-32118878-0.

Inoué, S. and Hyde, W.L. (1957) J. Biophys. Biochem. Cytol., 3, 831–838.

Schmitt, M., Mayerhöfer, T., and Popp, J. (2011) in Handbook of Biophotonics, Vol. 1. Basics and Techniques (eds J. Popp, V.V. Tuchin, and S.H. Heinemann), Wiley-VCH Verlag GmbH, Weinheim, pp. 87–261.