The Physics of Quantum Mechanics

Relational approach to quantum physics

Introduction to gauge theory

EPR paradox

Nuclear physics

Equations of motion

Quantum vacuum thruster

Renormalization

History of quantum field theory

Electromagnetism

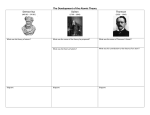

History of physics

History of subatomic physics

Perturbation theory

Classical mechanics

Path integral formulation

Time in physics

Four-vector

Quantum electrodynamics

Probability amplitude

Old quantum theory

Canonical quantization

Photon polarization

Matrix mechanics

Eigenstate thermalization hypothesis

Hydrogen atom

Symmetry in quantum mechanics

Atomic theory

Relativistic quantum mechanics

Theoretical and experimental justification for the Schrödinger equation