* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Basics

Survey

Document related concepts

Transcript

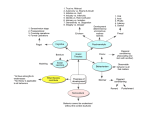

Syllabus
* Syllabus
* Requirements
* Readings
* Topics
* Goals
* http://www.uni.edu/harton/ResDes17.htm

Chapter 1: basics
* Types of studies: descriptive, relational, causal
* Cross-sectional vs. longitudinal
* Repeated measures vs. time series
* Positive vs. negative relationship
* Third-variable problem: examples?
* Independent vs. dependent variable
* Exhaustive and mutually exclusive categories (and relevant ones)

Basics and Operational Definitions
* What is qualitative vs. quantitative research?
* When might your unit of analysis not be individuals?
* What are examples of the ecological fallacy (conclusions about individuals based on group data) and the exception fallacy (conclusions about a group based on a few individuals) in research?
* Why are operational definitions important?

Philosophy and validity
* How do inductive and deductive research fit together?
* Explain metaphysics, positivism, determinism, post-positivism, critical realism, constructivism, and evolutionary epistemology. What do these approaches have to do with how science is conducted and interpreted?
* What are the differences between conclusion, internal, construct, and external validity? How can each be assessed?
* Fig. 1-9
* What are threats to conclusion validity?

Theory
* What is good about theories? What is bad about theories?
* What makes a good hypothesis? What makes a good theory?

Greenwald, 2012
* What is “strong inference”?
* Why doesn’t strong inference always work?
* What does Greenwald (2012) suggest to improve theory in psychology?

Meehl, 1990
* What types of studies is he referring to?
* Would these factors also affect other types of studies?
* 10 factors:
  * “loose derivation chain”
  * “problematic auxiliary theories”
  * “problematic ceteris paribus clause”
  * “experimenter error”
  * “inadequate statistical power”
  * “crud factor”
  * “pilot studies”
  * “selective bias in submitting reports”
  * “selective editorial bias”
  * “detached validation claim for psychometric instruments”

Meehl, 1990
* What are the implications of his points for the field?
* Are these issues more of a problem in psychology than in other fields?
* Are we just doing “a bunch of nothing”?

HARKing (Kerr, 1998)
* What is HARKing (hypothesizing after the results are known)? What is the alternative?
* What examples have you seen? How often do you think it occurs? How can it be identified?
* Do scientists approve of it?
* What are the reasons for HARKing? Do we always need a hypothesis? Why don’t we put more emphasis on disconfirmation?
* What are the costs of HARKing? How does this relate to the file drawer problem?
* Is it ethical? Does it negatively affect how others perceive us (cases of fraud, use as criticism)?
* Are the benefits greater than the costs?
* How can we discourage it?

LeBel & Peters, 2011
* Background on Bem (2011)
* How does interpretation bias (LeBel & Peters, 2011) affect theory?
* What are the advantages and disadvantages of conceptual replications?
* Do we do enough to test reliability and validity (R/V) in psych research?
* What are some problems with null hypothesis significance testing (NHST)?

Ways to fix things (M = Meehl, 1990; K = Kerr, 1998; LB&P = LeBel & Peters, 2011)
* Be more precise in specifying theories (effect-size predictions) (M, LB&P)
* Make null hypotheses genuine alternatives (LB&P)
* Use more than just NHST (LB&P)
* Make hypotheses falsifiable (M, LB&P)
* Test multiple hypotheses (M, K)
* Use stronger methods so that failures to find support can’t be blamed on the methods (LB&P)
* Do more to check R/V in studies (invariance across conditions, measures of comprehension, noncompliance) (M, LB&P)
* Report pilot studies (M, LB&P)
* Require replication (M, K, LB&P)
* Set power at .90 or higher (M)
* Report power and power analyses in articles (M)
* Report confidence intervals (M)
* Report overlap stats (M)
* Report negative results (M)
* Look at multiple measures and see whether you get the same effects across them (M)
* Educate students on HARKing and other negative practices (K)
* Address HARKing in codes of ethics (K)
* Reject HARKing articles (K)
* Make exploratory/post-hoc research okay (K)
* Learn more math (M)
* Change the system (publications = everything) (M, K)
* Reviewers should be more critical of the literature (M, K)
* Realize that not everything can be tested (M)

Idea generation
* How do you come up with ideas? How do you know if they are good?
* What types of feasibility issues should you consider in research?
* Why is a literature review important? How should it be done?
* How can you use concept mapping to help you plan your research project?

Next week
* Class on Tuesday, 12:30-3:15
* Thought papers due by Monday at midnight
* Ethics: for researchers and participants (4 articles, one website)
* Proposal topics due: a paragraph on what you plan to do
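The "Ways to fix things" slide recommends setting power at .90 or higher and reporting power analyses in articles. As a rough illustration only (not part of the original slides), the sketch below estimates the sample size per group for a two-sided comparison of two group means. It assumes an expected standardized effect size (Cohen's d), alpha = .05, and the normal approximation n ≈ 2 × (z(1 − alpha/2) + z(power))² / d². The function name `n_per_group` is hypothetical; dedicated power-analysis tools use the exact t distribution and give slightly larger numbers.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.90) -> int:
    """Hypothetical helper: approximate n per group for a two-sided,
    two-group comparison of means with standardized effect size d.

    Uses the normal approximation, so it slightly underestimates the
    sample size an exact t-test power analysis would give.
    """
    if d <= 0:
        raise ValueError("d must be positive")
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided critical value (about 1.96)
    z_power = z.inv_cdf(power)          # quantile for the desired power
    n = 2 * ((z_alpha + z_power) / d) ** 2
    return math.ceil(n)                 # round up to whole participants


for power in (0.80, 0.90):
    print(f"d = 0.5, power = {power:.2f}: n per group = {n_per_group(0.5, power=power)}")
```

Under this approximation, a medium effect (d = 0.5) needs 63 participants per group for power .80 and 85 per group for power .90, so the stricter standard raises the required sample by roughly a third.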