* Your assessment is very important for improving the workof artificial intelligence, which forms the content of this project

Download OLS assumption(unbiasedness) An estimator, x, is an unbiased

Expectation–maximization algorithm wikipedia , lookup

Discrete choice wikipedia , lookup

Regression analysis wikipedia , lookup

Bias of an estimator wikipedia , lookup

Interaction (statistics) wikipedia , lookup

Instrumental variables estimation wikipedia , lookup

Data assimilation wikipedia , lookup

Time series wikipedia , lookup

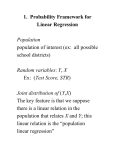

OLS assumptions (unbiasedness)

An estimator θ^ is an unbiased estimator of an unknown parameter θ if E(θ^) = θ. This does not mean that θ^ = θ in any particular sample. Rather, it implies that if we could indefinitely draw random samples on y from the population, compute an estimate each time, and then average these estimates over all random samples, we would obtain the unknown parameter.

1. Linear in parameters: the dependent variable is a linear combination of the independent variables and the unobservable error term.
2. Random sampling: we have a random sample of size n. This assumption is needed for the observations, and hence the errors, to be independent draws from the population.
3. Sample variation in the explanatory variable: the sample values of x must not all be the same.
4. Zero conditional mean: E(u|x) = 0. That is, for any given value of x used to explain a randomly chosen y, the expected value of the error is zero. This implies that x and u are uncorrelated, Cov(x, u) = 0.
5. Homoskedasticity: the error has the same variance, Var(u|x) = σ², given any value of the explanatory variable.

Proof: Var(u|x) = E(u²|x) - [E(u|x)]². Because E(u|x) = 0, σ² = E(u²|x), which implies that σ² is also the unconditional expectation of u². This means σ² = E(u²) = Var(u); that is, σ² is the unconditional variance of u.

We can then write E(y|x) = b0 + b1x and Var(y|x) = σ², which says that the conditional expectation of y given x is linear in x, while the variance of y given x is constant. Heteroskedasticity is present whenever Var(y|x) is a function of x.

Expected value of the OLS estimators

1. Linear in parameters.
2. Random sampling: a random sample of n observations.
3. No perfect collinearity: none of the IVs is constant and there are no exact linear relationships among the IVs (no IV can be written as a linear combination of the others, for example as a constant multiple of another).
4. Zero conditional mean: can fail if the functional relationship between Y and X is mis-specified.
Omitted variable bias can also cause this assumption to fail.

Omitted variable bias (underspecified model): suppose the true model is y = b0 + b1x1 + b2x2 + u, but we exclude x2 and regress y on x1 only. Let b1~ be the slope from this short regression and b1^, b2^ the OLS estimates from the full regression. Then

b1~ = b1^ + b2^δ2
E(b1~) = E(b1^ + b2^δ2) = E(b1^) + E(b2^)δ2 = b1 + b2δ2

so the bias in b1~ is E(b1~) - b1 = b2δ2. Here δ2 is the sample covariance between x1 and x2 divided by the sample variance of x1 (the slope from regressing x2 on x1); δ2 = 0 iff x1 and x2 are uncorrelated in the sample. Unless b2 = 0 or δ2 = 0, the short regression gives a biased estimate of b1.

5. Homoskedasticity: the variance of the unobserved error u does not depend on the levels of the explanatory variables. Note: if Var(u|x) is not constant, the usual t statistics and CIs are invalid no matter how large the sample size is.
6. Normality (which includes 4 and 5): u is independent of the explanatory variables and u ~ N(0, σ²), iid. Then y, given x, has a normal distribution with mean linear in x and constant variance. Disadvantage: the usual justification assumes that all the unobserved factors in u affect y in a separate, additive fashion, which cannot be checked because u is unobserved.

Sample variances are consistent only in the limit, for example $\operatorname{plim}\, n^{-1}\sum_{i=1}^{n}(x_i - \bar{x})^2 = \operatorname{Var}(x)$. When n is not very large, the estimate σ²^ can therefore be noisy, and the t distribution will be a poor approximation to the distribution of the t statistic when u is not normally distributed. Conversely, as n grows, σ²^ converges in probability to the constant σ², and the t statistic is approximately normally distributed. The same large-sample argument applies to the F statistic.

Inconsistency

The key assumption is E(u|x1, ..., xk) = 0. If the zero conditional mean assumption is violated (i.e., the error term is correlated with any of the independent variables), then OLS is biased and inconsistent. Because this deficiency is inherent in the model specification, the bias persists even as the sample size grows.

An estimator is called consistent if it converges in probability to the quantity being estimated. In simple regression,

plim b1^ = b1 + Cov(x1, u)/Var(x1)

so the inconsistency is plim(b1^ - b1) = Cov(x1, u)/Var(x1). Since u is unobserved, we cannot estimate Cov(x1, u). If Cov(x1, u) = 0, then plim b1^ = b1; OLS is consistent only if we assume zero correlation between x1 and u.
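The omitted-variable identity b1~ = b1^ + b2^δ2 stated above holds exactly in any sample, which can be checked numerically. The following is a minimal sketch using only the Python standard library; the simulated data, the coefficient values (1.0, 2.0, 1.5, 0.6), and the helper names `simple_ols_slope` and `two_regressor_slopes` are illustrative assumptions, not part of the original notes.

```python
import random

def mean(v):
    return sum(v) / len(v)

def simple_ols_slope(x, y):
    # Slope from regressing y on x (with intercept): cov(x, y) / var(x).
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def two_regressor_slopes(x1, x2, y):
    # Slopes from regressing y on x1 and x2 (with intercept),
    # obtained by solving the 2x2 normal equations on demeaned data.
    m1, m2, my = mean(x1), mean(x2), mean(y)
    d1 = [a - m1 for a in x1]
    d2 = [a - m2 for a in x2]
    dy = [a - my for a in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(a * a for a in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * b for a, b in zip(d1, dy))
    s2y = sum(a * b for a, b in zip(d2, dy))
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det

# Hypothetical data: x2 is correlated with x1 and has a positive effect on y.
rng = random.Random(0)
n = 500
x1 = [rng.gauss(0, 1) for _ in range(n)]
x2 = [0.6 * a + rng.gauss(0, 1) for a in x1]
y = [1.0 + 2.0 * a + 1.5 * b + rng.gauss(0, 1) for a, b in zip(x1, x2)]

b1_hat, b2_hat = two_regressor_slopes(x1, x2, y)  # full regression
b1_tilde = simple_ols_slope(x1, y)                # short regression, x2 omitted
delta2 = simple_ols_slope(x1, x2)                 # slope of x2 on x1

gap = b1_tilde - (b1_hat + b2_hat * delta2)
print(f"b1~ = {b1_tilde:.4f}, b1^ + b2^*delta2 = {b1_hat + b2_hat * delta2:.4f}")
print(f"difference = {gap:.2e}")
```

In this setup b2 and δ2 are both positive, so the short-regression slope lies above the full-regression slope; flipping the sign of either one reverses the direction of the bias.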
Suppose y = b0 + b1x1 + b2x2 + u and we omit x2, so that the error of the short regression absorbs b2x2. Let b1~ denote the slope on x1 in the short regression. Then plim b1~ = b1 + b2δ, where δ = Cov(x1, x2)/Var(x1). Since Var(x1) > 0, the sign of the relationship between x1 and x2 (together with the sign of b2) determines the direction of the inconsistency, or asymptotic bias. For example, if b2 > 0 and x2 is positively correlated with x1, then b1~ overstates b1 in the limit. If b^ is consistent, then the distribution of b^ becomes more and more tightly concentrated around b as n increases.

Gauss-Markov theorem (BLUE)

Linear: an estimator b^ of b is linear iff it can be expressed as a linear function of the data on the DV: $\hat{b} = (X'X)^{-1}X'Y$.

Best: smallest variance. For any estimator b~ that is linear and unbiased, Var(b^) ≤ Var(b~).

Efficiency (smallest asymptotic variance): OLS is asymptotically efficient among a certain class of estimators under the Gauss-Markov assumptions.

Why is a binary DV model different from OLS?

Why can't we treat a dichotomous variable as a special form of continuous variable and use OLS regression?

1. The errors are not normally distributed. Because Y can only take the values 0 and 1, the error term can take only two values for any given x, which violates the normality assumption.
2. The errors cannot have constant variance, which violates the homoskedasticity assumption.
3. The predicted values of Y are not constrained to the 0-1 probability range; one can obtain predicted probabilities that are less than 0 or greater than 1.

The logit transformation addresses these problems and allows one to model the log of the odds as a linear function of the IVs. However, logistic regression coefficients cannot be interpreted directly in the same way as OLS regression coefficients.

Difference between multinomial and ordered probit/logit

A multinomial model always has a separate set of estimated slope coefficients for each contrast, whereas in ordered probit/logit there is only one slope coefficient for each IV but several intercepts.
In other words, ordered regression assumes parallel slopes across the categories of the DV (the parallel regression assumption), that is, the effect b of a variable is assumed to be identical for all J-1 cumulative groupings, whereas this assumption is not required in multinomial regression. Note that in these models a marginal effect does not, in general, equal the change in probability that would be observed for a unit change in x.

Parallel regression assumption: the relationship between each pair of outcome groups is the same. In other words, ordinal logistic regression assumes that the coefficients that describe the relationship between, say, the lowest versus all higher categories of the response variable are the same as those that describe the relationship between the next lowest category and all higher categories, and so on. This is called the proportional odds assumption or the parallel regression assumption. Because the relationship between all pairs of groups is the same, there is only one set of coefficients (only one model).

Ordered Probit/Logit

When the response variable, y, is an ordered response, as is often the case in survey research, methods for modeling ordered outcomes should be considered. The most commonly used methods are ordered probit and ordered logit. In these models, responses are ordered from low to high by using thresholds to partition a continuous scale into regions (usually more than two). This is equivalent to mapping the observed outcomes onto a latent continuous scale, although the distances between adjacent categories are unknown and need not be equal.

A few caveats should be addressed before discussing the situations in which an ordered probit/logit model should be used. First, as prior research has indicated, the fact that the values of a response variable can be ordered does not mean that they should be ordered (Miller and Volker 1985); this depends on the researchers' theoretical questions and purposes.
Second, although the response values have ordinal meaning among themselves, we cannot say that a subject scoring 2 has twice as much of something as a subject scoring 1, because the distances between the response values are not equal, and the thresholds (or cutpoints) used to partition the responses carry substantive (usually more theoretical than statistical) information.

Researchers' primary concern when they employ an ordered probit/logit model (McKelvey and Zavoina 1975) is how changes in the predictors affect the probability that an observed response, Y_j (driven by a latent variable that is a linear combination of the IVs), is more or less likely to fall into a particular ordinal category. Ordered probit/logit models employ the same link as the binary probit/logit models, but unlike those binary response models, they partition the latent continuous scale into regions corresponding to the ordinal categories by establishing threshold values, and they map the observed response values into these categories.

An extension of this difference between ordered and binary probit/logit is the identification issue. Ordered probit/logit has "too many" free parameters (associated with the number of thresholds) to identify: a shift in the intercept can be compensated for by a corresponding shift in the thresholds, so the observed data give no way to choose among these sets of parameters. In contrast to OLS or a binary probit/logit model, the ordered model is therefore unidentified unless a normalization is imposed. However, these characteristics make the OLS model inappropriate for estimating ordinal outcomes.
To begin with, neither the ordered probit nor the ordered logit model is linear. For the model to be linear, one must transform the dependent variable (in the logistic model, by applying the natural log) so that the latent outcomes fall along a continuous scale, but this goes against our purpose, because the dependent variable of interest is a non-interval outcome (i.e., the distances between outcomes are not equal). If we proceed in this way, we lose the information about the ordering and do not answer the research question. Second, when OLS is applied to an ordinal outcome the residuals are not normally distributed: they can take on only a few values for each combination of independent variables, which is to say that the errors are heteroskedastic and non-normal (unless the thresholds are all the same distance apart). As a result, the normality assumption is violated, and this makes OLS an inappropriate choice for estimating ordinal outcomes.

Nevertheless, researchers should, as discussed earlier, be cautious about their choice of ordinal outcome. First of all, the number of outcomes and their corresponding cutpoints are determined solely by the researcher; if the response values can be ordered, it follows that they can also be reordered. When another researcher is not satisfied with a large number of observations falling into a particular ambiguous category, or when the number of cases in a particular response category is small (so that the model may fail to converge), she can always recode the data by further differentiating the responses with more thresholds or by merging the category with few cases into adjacent categories (although this could lead to inefficient estimates). As the number of ordinal response categories along the latent continuous scale increases, y behaves more like a continuous variable, and its distribution can come closer to the normal distribution required by the OLS normality assumption.
This makes OLS an acceptable choice for modeling an ordinal response. For instance, in many empirical studies in comparative politics or IR that employ the untransformed Polity IV score (-10 to 0 for autocracy, 0 to 10 for democracy), the 21-point regime score fits the OLS normality assumption well enough. Additionally, because ordered probit/logit models use maximum likelihood, they require a relatively large data set (compared to OLS) to converge. Therefore, when the data at hand do not have a large number of observations, and/or when the researchers have numerous ordinal outcomes in mind so that the distribution of responses across the ordinal categories approaches that of a normal distribution, OLS is a viable model for researchers to consider.

Interpretation

For ordered probit, the interpretation of a coefficient in terms of the latent variable y* is the same as in the OLS model. The partial change in y* with respect to x can be interpreted as:

For a unit increase in x, y* is expected to change by b units, holding all other variables constant.

y-standardized and fully standardized coefficients can be interpreted similarly. When the ordinal outcomes are listed in the regression table and their coefficients are obtained through regression analysis, we can match the category of interest with its corresponding variable and find its coefficient.
The coefficient, B, can then be read as: for a unit increase in x, the predicted probability of that outcome changes by the reported amount, holding all other variables constant. For a dummy variable, such as gender, the coefficient should be treated as the effect of moving from its minimum value (x = 0) to its maximum (x = 1) and interpreted as a discrete change.

Finally, the ordered logit model is often interpreted in terms of odds ratios for cumulative probabilities, where we estimate the cumulative probability that the outcome is equal to or less than a particular value. Take, for instance, a four-point response scale: disagree (D), neutral, agree, strongly agree. For a variable x with coefficient B, an increase of 1 in x should be interpreted as:

For an increase of 1 in x, the odds of D versus all other outcomes change by a factor of exp(1*B), that is, the odds become exp(1*B) times what they were (or, the odds of D change by 100[exp(1*B) - 1] percent), holding all other variables constant. The sign convention depends on how the model is parameterized.

Note: focus on marginal effects and MLE.

Violation of OLS assumptions

1. Zero conditional mean: y = b0 + b1x1 + b2x2 + e

Suppose the error has an unknown, nonzero mean δ. The model can then be rewritten as

y = (b0 + δ) + b1x1 + b2x2 + (e - δ) = b0* + b1x1 + b2x2 + e*

where b0* = b0 + δ and e* = e - δ. Thus E(b0*^) = b0 + δ, and b0 is not identified individually, although we can identify b0 + δ. A parameter that is unidentified cannot be estimated regardless of the data available, but a combination of it with other parameters may be identified.

Now suppose the true model is y = b0 + b1x1 + b2x2 + e but we estimate y = b0 + b1x1 + r, so that the error absorbs the omitted variable: r = b2x2 + e. If x1 and x2 are correlated (and b2 ≠ 0), then x1 and r must be correlated, and OLS gives a biased and inconsistent estimate of b1.

Heteroskedasticity in a binary outcome: Var(y|x) = Pr(y = 1|x)[1 - Pr(y = 1|x)] = xb(1 - xb), which depends on x. The OLS estimator is therefore inefficient and the standard errors are biased, resulting in incorrect test statistics.
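The problems with applying OLS to a binary outcome (fitted values outside the 0-1 range and a variance xb(1 - xb) that changes with x) can be seen on a small example. This is a minimal sketch using a made-up ten-observation data set and a hypothetical helper `ols_line`; it illustrates the linear probability model only and is not an analysis from the original notes.

```python
def ols_line(x, y):
    # Intercept and slope of the simple OLS regression of y on x.
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope

# Made-up data: the binary outcome becomes more likely as x grows.
x = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
y = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]

b0, b1 = ols_line(x, y)

# Fitted "probabilities" from the linear probability model.
fitted = [b0 + b1 * a for a in x]

# Variance implied by Var(y|x) = xb(1 - xb); it differs across x and even
# turns negative where the fitted value leaves the [0, 1] interval.
implied_var = [p * (1 - p) for p in fitted]

for a, p, v in zip(x, fitted, implied_var):
    flag = "  <- outside [0, 1]" if p < 0 or p > 1 else ""
    print(f"x={a}  fitted={p:6.3f}  implied var={v:6.3f}{flag}")
```

The lowest and highest values of x produce fitted values below 0 and above 1, and the implied variance peaks near a fitted value of 0.5, which is the heteroskedasticity described above.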
Marginal effect: the ratio of the change in y to the change in x when the change in x is infinitely small, holding other variables constant. In the linear model, the partial derivative is the same for all values of x. In a non-linear model it is not, and for a dummy variable d, which is not continuous, the discrete change in y as d changes from 0 to 1 is used instead. The effect of a unit change in a variable depends on the values of all variables in the model.

ML estimator: the value of the parameter that makes the observed data most likely; that value also maximizes the log of the likelihood.

An odds ratio is a multiplicative coefficient, which means that "positive" effects are greater than 1, while negative effects are between 0 and 1. A constant factor change in the odds does not correspond to a constant change or a constant factor change in the probability; it is important to know what the current level of the odds is.

Empirical Bayes

The group sample mean $\bar{Y}_j$ (the OLS estimator) can be viewed as the best unbiased estimator of $\beta_{0j}$ in the level-1 model. Similarly, the estimated grand mean, $\hat{\beta}_{00}$, can be seen as an alternative estimator of $\beta_{0j}$. A Bayesian estimator is an optimal weighted combination of these two:

$\beta^{*}_{0j} = \Lambda_j \bar{Y}_j + (1 - \Lambda_j)\hat{\beta}_{00}$

where $\Lambda_j$ is the reliability of the least squares estimator (for the parameter $\beta_{0j}$), a quantity closely related to the intraclass correlation (ICC):

$\Lambda_j = \operatorname{Var}(\beta_{0j}) / \operatorname{Var}(\bar{Y}_j)$

and $\Lambda_j$ is bounded between 0 and 1. As $\Lambda_j$ approaches 1, the Bayesian estimator is pulled toward the OLS estimator $\bar{Y}_j$; otherwise, it is pulled toward the estimated grand mean $\hat{\beta}_{00}$. Note that because $\operatorname{Var}(\bar{Y}_j)$ is decreasing in $n_j$, the Bayes estimator gives the sample mean more weight as the sample size of group j increases; conversely, if the sample size is small, the Bayes estimator assigns more weight to the estimated grand mean. Similarly, the estimates $\beta^{*}_{0j}$ increasingly pool around the grand mean when the error variance is large (relative to the parameter variance).
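The weighting described above can be illustrated with a few lines of arithmetic. This is a minimal sketch assuming the common random-intercept decomposition Var(Ȳ_j) = τ00 + σ²/n_j, where `tau00` (between-group variance of the intercepts) and `sigma2` (level-1 error variance) are treated as known; those names, the function names, and all numbers are illustrative assumptions rather than values from the notes.

```python
def reliability(tau00, sigma2, n_j):
    # Lambda_j = Var(beta_0j) / Var(Ybar_j), assuming
    # Var(Ybar_j) = tau00 + sigma2 / n_j.
    return tau00 / (tau00 + sigma2 / n_j)

def eb_mean(ybar_j, grand_mean, tau00, sigma2, n_j):
    # Weighted combination of the group sample mean and the grand mean.
    lam = reliability(tau00, sigma2, n_j)
    return lam * ybar_j + (1 - lam) * grand_mean

# Hypothetical values: same observed group mean, different group sizes.
grand_mean, tau00, sigma2 = 50.0, 4.0, 36.0

small = eb_mean(60.0, grand_mean, tau00, sigma2, n_j=3)
large = eb_mean(60.0, grand_mean, tau00, sigma2, n_j=90)

print(f"n_j = 3 : Lambda = {reliability(tau00, sigma2, 3):.3f}, estimate = {small:.2f}")
print(f"n_j = 90: Lambda = {reliability(tau00, sigma2, 90):.3f}, estimate = {large:.2f}")
```

With three observations the group mean of 60 is shrunk most of the way toward the grand mean of 50, while with ninety observations it is left almost unchanged. In practice the variances are unknown and have to be estimated.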
Because the variances are unknown, $\beta^{*}_{0j}$ is based on substituting an estimate of $\Lambda_j$ into the equation. Since the resulting estimates tend to pull $\bar{Y}_j$ toward the grand mean $\hat{\beta}_{00}$, they have been termed empirical Bayes estimates or shrinkage estimators. The estimator in RE is therefore a weighted average between no pooling (the FE) and complete pooling (the OLS), because it borrows information from the completely pooled mean to generate an estimate of the cluster mean (which matters most for small cluster sizes). Pooling is the degree to which the cluster means (the intercepts) are pulled toward the global mean of Y.

Consequence: if Λ = 1, bGLS reduces to bW (FE), so as the sample size within clusters increases, the RE estimate becomes more similar to the within estimate. If Λ = 0, bGLS reduces to bOLS.

Controversial assumption: we need an accurate estimate of b1. Note that this is fixed, so that bGLS, bW, and bB are all estimates of the same parameter, b. Note also that bGLS implicitly assumes that the between and within effects are the same (i.e., b). But why might they differ? The between effect could differ from the within effect because of omitted level-2 variables that are related to the observed variable at level 1.
– "Cluster confounding": b1 could be confounded by conflicting between and within effects.
– We can solve this by estimating both the between and within effects of b in the random effects model.
– Fundamental issue: the equality (or lack thereof) of within and between effects.

Hausman

The Hausman test is a test for the equality of the RE and FE estimates.
– RI (random intercept) and FE are both consistent (for b) if correctly specified.
However, if we violate the "controversial assumption," RI becomes inconsistent, while FE remains consistent.

The Hausman test checks a more efficient model against a less efficient but consistent model to make sure that the more efficient model also gives consistent results (first run FE, then RE). It tests the null hypothesis that the coefficients estimated by the efficient random effects estimator are the same as the ones estimated by the consistent fixed effects estimator. If they are (insignificant p-value, Prob>chi2 larger than .05), it is safe to use random effects. If you get a significant p-value, however, you should use fixed effects.

Duration Dependence: BTSCS

Structure of the Data: N versus T

As is often the case, the starting point is to examine the dimensions of the data in order to have an informed sense of where the possible sources of variance come from. A quick diagnostic is to check the number of units (N) and the time span (T) over which the units are observed. Beck and Katz (1995) first noted the problematic finite-sample properties of the FGLS model and its inability to deal with temporal correlation in TSCS data; they treat temporal correlation as a nuisance that can be controlled for by adding a lagged dependent variable (LDV) to the model (Beck and Katz 1996). In a later article (Beck and Katz 1998), the authors also pointed out the "discrete" character of TSCS data with a binary outcome (BTSCS) and the possible temporal dependence in the binary data structure, which may lead to severe inefficiency in estimation. They suggest using time dummies to account for the effect of the length of prior spells of peace and applying a spline function to characterize the slowly changing hazard rate over time.
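The time-dummy suggestion above requires, for each unit, a count of how many periods have elapsed since the last event. The following is a minimal sketch of that bookkeeping for a single unit; the function names `periods_since_event` and `duration_dummies`, the choice to start the counter at zero in the first observed period, and the example series are all assumptions made for illustration, not the authors' code.

```python
def periods_since_event(events):
    # For one unit's time-ordered 0/1 event series, return the number of
    # periods elapsed since the last event (or since the first observation).
    counter = 0
    out = []
    for e in events:
        out.append(counter)
        counter = 0 if e == 1 else counter + 1
    return out

def duration_dummies(spell_lengths, max_len):
    # One indicator column per spell length 1..max_len; a spell length of 0
    # is the omitted reference category.
    return [[1 if s == k else 0 for k in range(1, max_len + 1)]
            for s in spell_lengths]

# Hypothetical dyad observed for eight years, with conflicts in years 3 and 7.
events = [0, 0, 1, 0, 0, 0, 1, 0]
spell = periods_since_event(events)
dummies = duration_dummies(spell, max_len=3)

for year, (e, s, d) in enumerate(zip(events, spell, dummies), start=1):
    print(f"year {year}: event={e}  peace spell={s}  dummies={d}")
```

The dummy columns (or a spline in the spell counter) would then be added to the logit/probit specification alongside the substantive covariates. How to treat units whose history before the first observation is unknown is a separate modeling decision.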
In a nutshell, Beck and Katz recommend that analysts who use pooled TSCS data first correct for autocorrelation within panels and then follow their PCSE procedure to correct for panel heterogeneity, and, when the data at hand are BTSCS data, use time dummies to address the problem of temporal dependence across discrete time intervals.

However, if researchers blindly follow Beck and Katz's PCSE approach without paying attention to the "unobserved unit heterogeneity" and dynamics within their data (whether TSCS or BTSCS), they could obtain biased estimates. To begin with, PCSE assumes a common error process and a common intercept for all units and, as Wilson and Butler (2007) illustrate, temporal correlation within units can distort the regression results estimated using OLS with PCSE (which is essentially a fully pooled model). Also, if some sluggish variables (e.g., political institutions) are of interest, then measuring the variable with a different unit of analysis (e.g., replacing month with year) can have a profound impact on the statistical significance of these sluggish variables "within" units. That said, this all depends on the researchers' underlying theory and the variables they want to examine; if a sluggish variable like political institutions is the variable of interest and the researchers find that their data have substantial unit heterogeneity, then a dynamic panel model or simply a fixed effects model (FEM), as proposed by Wilson and Butler, is a feasible option.

Wilson and Butler's suggestion leads us to consider the dimensionality of BTSCS data. Recall that in Beck and Katz's original approach, OLS is still the workhorse model, which, according to them, is because of its consistency.
But note that OLS, if used to estimate TSCS data, is no longer consistent when T is finite and is more efficient than the FGLS model only when the size of N approaches that of T (Chen, Lin, Reed 2010). Given that social science data, particularly political economy data, seldom have T larger than 30 (because of the difficulty of record keeping in previous centuries) while covering over a hundred countries (N), this deficiency might lead us to prefer the FEM. The use of FEM reflects the concern that researchers' results are largely driven by potentially large unobserved variance across units (Green, Kim, Yoon 2001), but this choice does not come without a cost. First, FEM is less efficient in estimating sluggish variables, which have very little within variance (Beck 2001); second, it does not allow the incorporation of other time-invariant variables.

Going back to our previous discussion, if sluggish variables like political institutions are of interest (e.g., the effect of political institutions on dyadic conflict) but their within variance is much smaller than their between variance (cross-unit variance), then FEM might give us a poor estimate. Plumper and Troeger (2007) propose the Fixed Effects Vector Decomposition (FEVD) approach, which separates the effect of a sluggish variable from the unit fixed effect and provides a better standard error estimate than the conventional FEM. Nevertheless, researchers should still be aware of the magnitude (and the ratio) of the within and between variance in their data and of the properties of their variable of interest; if the within variance dominates the between variance across dyads, then it makes much more sense to employ the OLS model with PCSE or even the FGLS model.
Similarly, if the overall effect of a particular variable across all units is the point of concern for the researcher, especially for comparativists whose utmost concern is "generality," then the within effect obtained from the FEM does not offer much generality to substantiate the researchers' hypothesis.

A related problem with the dimensionality of the data concerns their hierarchical structure: observations (e.g., years) are nested within dyads, and the same variable, such as the political institution discussed earlier, can exert distinct within and between effects at different levels of analysis, a phenomenon many researchers call "cluster confounding." While progress in multilevel/Bayesian models and the availability of commercial software have facilitated the analysis of hierarchical data, the issue of cluster confounding has seldom been seriously addressed. Many researchers simply treat the between and within effects as the same, and this can have important implications for our statistical inference. If the within and between effects have the same direction, there should be little to worry about. If, on the contrary, they are opposite to each other, then, depending on the size of each unit cluster, the empirical Bayes estimator (which is a weighted average of the group sample mean and the grand mean) will not accurately convey the difference in the variable's effect across the two levels, as shown in Zorn (2001b) and Neuhaus and Kalbfleisch (2007). Bartels (2008), by partitioning and rearranging the within and between effects, constructs a Hausman-like test that helps researchers assess the significance of the difference between the within and between effects. Nevertheless, researchers should have a good understanding of the structure of their data before proceeding to employ a particular statistical model.
Duration Dependence

Another major issue confronting researchers who use BTSCS data is duration dependence, in which the hazard rate of a current event is a function of prior event occurrence. This nuisance could affect the validity of our inference regarding the relationship between the covariates and the duration time.

The first issue is repeated events. A dyad may experience multiple events over the observed period, and a dyad is at risk of experiencing its second dyadic conflict only after it has experienced its first. Thus the assumption that the hazard of experiencing an event is constant across events does not hold. That is to say, there exists substantial "duration dependence" in the outcome, and the probability that an event occurs is not simply a function of the covariates of interest but is also conditional on the timing and the number of prior events. Ignoring this problem could lead to serious estimation inefficiency. Many survival models allow for a more flexible (nonconstant) baseline hazard (such as the Cox model, whose baseline hazard is treated as a nuisance and integrated out of the likelihood), but they still do not model the duration dependence that results from prior event occurrence or the non-proportionality of covariates. If the hazard rates change over the rank of survival time, estimation becomes less precise.

A second problem, as noted previously, is related to the structure of BTSCS data. BTSCS data are grouped duration data (Beck and Katz 1998): events occur in continuous time but are recorded only in fixed, discrete time intervals (say, dyad-years), that is, observed once a year. This kind of data structure makes many modeling strategies commonly used in continuous time inappropriate for BTSCS data.
Finally, as in a hierarchical data structure, units sometimes exhibit unit-specific unobserved heterogeneity, distinct from the aggregate group effect, that makes some units more likely to experience certain events than others.

To correct for the effect of duration dependence in BTSCS data, Beck and Katz (1998) propose including temporal dummies in the original probit/logit specification to test whether grouped observations are temporally related. The two authors also suggest using a cubic spline, which provides a smooth characterization of duration dependence. Beck and Katz's method is easily accessible for analysts dealing with duration dependence in BTSCS data, but it does not pay proper attention to the issues of non-proportional hazards and unit heterogeneity.

If, after careful examination, researchers expect non-proportional hazards and unit heterogeneity to exist in their data, several diagnostic and correction methods can be applied. Steffensmeier and Zorn (2001) recommend various procedures to treat the non-proportional hazards problem. First, they suggest a residual-based diagnostic that tests the relationship between an observation's Schoenfeld residual and the length of its survival time. If the proportional hazards assumption holds, researchers should find no relationship between the residual and the rank of the survival time; otherwise, the fitted model may over- or underestimate the underlying hazard rates. Steffensmeier and Zorn also suggest using piecewise regression, which partitions the observed survival time at its mean to see whether certain covariates exhibit non-proportionality across the two time segments, and then multiplying these "offending covariates" by the log of time to correct for their non-proportionality.
This procedure leads to a better specified model and contributes to greater accuracy, though at the risk of incurring inefficiency because many covariates that are in fact proportional may be included in the piecewise regression test.

If researchers find non-proportional hazards and unit heterogeneity to be serious problems in their data, and "repeated events" are common across units, then the conditional frailty model (Steffensmeier and De Boef 2006; Steffensmeier, De Boef, and Joyce 2007), measured in gap time with stratification, should be considered. The model, unlike the unstratified Andersen-Gill method, assumes that an observation is not at risk for a later event until it has experienced all prior events. It treats each event occurrence as a different failure type and stratifies observations by failure type, which allows each stratum to have its own baseline hazard function and so captures the "non-proportionality" of hazard rates over time. Another flexibility afforded by this model is that it specifies unshared random effects for each unit, thus controlling for unobserved "frailty" across units that might bias the estimation.

Nevertheless, researchers using the conditional frailty model should make sure that the amount of variation along the different dimensions is sufficient. Variation in the number of events experienced within a unit provides the information needed to incorporate random effects that model a unit's frailty; variation in the timing of multiple events provides information on the degree of event dependence. Both kinds of variation are required for the proper use of the conditional frailty model on BTSCS data.
The conditional logit model differs from the standard logit model in that the data are grouped according to some attribute and the likelihood is estimated conditional on each grouping; any factor that remains constant within a grouping is perfectly collinear with the group (in the conditional logit model here, the grouping is the country dyad).

For underlying continuous time process

The GLS estimate is $\hat{B} = (X'\Omega^{-1}X)^{-1}X'\Omega^{-1}Y$, where Ω is the variance-covariance matrix of the errors and is constant (follows a common error process) within and across units. This assumption may be violated when errors are contemporaneously correlated as a result of panel heterogeneity and/or when observations are temporally correlated (i.e., serial correlation exists within units). The variance of the OLS estimator then takes the form $\operatorname{Var}(\hat{B}) = (X'X)^{-1}(X'\Omega X)(X'X)^{-1}$.

Terminology

Probability theory: a quantitative analysis of the occurrence of random phenomena. It concerns how random variables are generated and how events occur under a non-deterministic (stochastic) process, in which the initial condition is known but the future evolution has many possible paths, some more probable than others. (A random variable is a random numerical value whose generation follows a stochastic process and is expected to change every time; if measured on an infinite and continuous scale, the probability of generating any specific value approaches zero.) The theory assumes that if a random event were repeated many times, then, based on the law of large numbers and the central limit theorem, the sequence of outcomes would exhibit systematic patterns that can be observed and estimated. Technically, probability theory makes use of mathematical tools such as density functions, probability distributions, and sample spaces to describe the occurrence of random variables and events.
It is a theory because it covers many systematic concepts that help analysts understand “randomness” and explain the mechanics behind event occurrence and, most importantly, because it provides the mathematical foundation for modern statistics, which facilitates the prediction of event occurrence.

Random variable: a measurable function that assigns a unique numerical value to each possible outcome of a well-defined (usually infinitely repeatable) experiment.
Continuous rv: a random variable that takes on any particular real value with zero probability.
Probability function: a function that assigns a probability to each possible outcome of a random variable.
PDF: summarizes the information concerning the possible outcomes of X; for a discrete random variable it gives the probability that the variable takes on each value, and for a continuous random variable it gives probabilities for ranges of values by integration.
Parameter: an unknown value that describes a population relationship.
Estimator: a rule for combining data to produce a numerical value for a population parameter; the form of the rule does not depend on the particular sample obtained, although the value it produces (the estimate) does.
Sampling: the practice of selecting a subset of a population in order to learn about the statistical population.
Central Limit Theorem: a result in probability theory stating that the standardized sum (or average) of a sufficiently large number of independent and identically distributed random variables with finite variance is approximately standard normal, that is, normal with mean zero and variance one.
Correlation Coefficient: a measure of linear dependence between two random variables that does not depend on the units of measurement and is bounded between -1 and 1.
Critical value: in hypothesis testing, the value against which a test statistic is compared to determine whether or not the null hypothesis is rejected.
Interaction effect: the partial effect of an explanatory variable depends on the value of a different explanatory variable.
Marginal Effect: the effect on the DV that results from changing an IV by a small amount.
MLE: an estimation method in which the parameter estimates are chosen to maximize the log-likelihood function.
Overdispersion: in modeling a count variable, the case in which the variance is larger than the mean.
Plim: the value to which an estimator converges in probability as the sample size grows without bound.
Prediction: the estimate of an outcome obtained by putting specific values of the IVs into an estimated model.
Random Sample: a sample obtained by sampling randomly from the specified population.
Robustness: the property of a model or model specification that performs well even if its assumptions are somewhat violated by the true model that generated the data, or under alternative specifications.
Sample selection bias: a bias induced by using data that arise from endogenous sample selection.
Sampling distribution: the probability distribution of an estimator over all possible sample outcomes.
Serial correlation: correlation between the errors in different time periods.
Variance: a measure of spread in the distribution of a random variable.

Counterfactual and Matching

In experimental studies or large-N social science research, researchers often assign subjects to treatment (the treatment group; the treatment is usually a measurable parameter) and non-treatment status (the control group) in order to examine the effect of treatment on the outcome of interest. But researchers often confront problems arising from ‘unobserved heterogeneity’ and thus have difficulty ascertaining whether the observed effect is attributable to the treatment. Freedman (1999) provides an account of how to make causal inferences from association-based natural experiments.
Rubin (1990) proposes the SUTVA assumption, which stipulates that the potential outcomes for any particular unit i following treatment t are stable, such that for every set of allowable treatment allocations there is a corresponding set of fixed (non-stochastic) potential outcomes that would be observed. In this framework, treatment must be randomly assigned in order to control for ‘unobserved heterogeneity’ that might distort our causal inference. However, subjects (usually humans) differ in their personal attributes and initial conditions, so random assignment may not remedy the heterogeneity that exists between units. From the perspective of controlling individual-specific heterogeneity, a unit is its own best control group, but during the course of an experiment a unit cannot undergo both treatment and control. Using the unit itself as the control is therefore infeasible, and we will never know what would have been observed had treatment t been turned off; this counterfactual dilemma is what Holland called “the fundamental problem of causal inference” (Holland 1986). Rubin also suggests randomly assigning treatment to the same individual at different points in time to gauge the average treatment effect on the treated (ATT) over time, and extending this experiment to multiple individuals to generalize the ATT. But this procedure still does not answer the fundamental question of how to find an appropriate “control,” and the SUTVA assumption can easily be violated if (1) the treatment varies in effectiveness: the “outcome” is then a distribution of potential outcomes rather than a fixed potential outcome, so “what is the effect of treatment t?” is not a well-posed causal question, because the subject in effect receives two treatments; or (2) there is interference between units: this makes our inference spurious because the effect did not come from the assigned treatment.
(Morgan and Winship 2007)

In experimental studies, these potential violations pose little problem, because experiments are usually conducted in a controlled environment. In social science, however, randomization poses a great challenge: in observational data (as social science data usually are), the assignment mechanism is non-random and researchers cannot control the assignment of “treatment.” Thus, how to minimize unobserved heterogeneity across observations and approximate randomization has become a central problem for social science statistics, and statistical matching is a response to that challenge. Rosenbaum and Rubin (1983) first proposed propensity score matching (PSM) as a technique for selecting a subset of comparison units similar to the treated units, which can serve as the counterfactual in a non-experimental setting. The propensity score measures the probability of a unit being assigned to a particular condition in a study given a set of known characteristics; statistically, it is the conditional probability of a unit being selected into treatment given the independent variables X. Alternatively, Morgan and Winship (2007) suggest using a conventional regression model as a conditional-variance-weighted matching procedure. The authors treat regression as a way of carrying out stratification: observations are distributed across strata (conditional on observed characteristics), and each stratum is weighted according to its stratum-specific conditional variance. In a nutshell, Morgan and Winship treat the stratification estimator as a form of matching. They also suggest interacting the treatment dummy with all observed characteristics as a way to capture how the effect of treatment status varies with observed characteristics across the whole sample (including the hypothesized average treatment effect on the control group (ATC)).
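The ideas in this paragraph can be sketched with a small simulation. Everything in it is an assumption made for illustration: a single binary observed characteristic x, treatment probabilities of 0.2 and 0.7, and a constant treatment effect of 1. With one discrete covariate the propensity score is simply the share treated within each cell, and the stratification estimator averages within-cell differences. The strata are weighted here by sample share, which is the plain stratification estimator, not the conditional-variance weighting that regression applies implicitly.

```python
import random

rng = random.Random(2024)            # fixed seed for reproducibility
TRUE_EFFECT = 1.0                    # assumed constant treatment effect
P_TREAT = {0: 0.2, 1: 0.7}           # assumed non-random assignment given x

data = []
for _ in range(20000):
    x = 1 if rng.random() < 0.5 else 0
    d = 1 if rng.random() < P_TREAT[x] else 0
    y0 = 1.0 + 2.0 * x + rng.gauss(0.0, 1.0)   # potential outcome, untreated
    y1 = y0 + TRUE_EFFECT                      # potential outcome, treated
    data.append((x, d, y1 if d else y0))       # only one outcome is ever observed

def mean(values):
    return sum(values) / len(values)

# Naive comparison: ignores that treated units are more likely to have x = 1.
naive = (mean([y for _, d, y in data if d == 1])
         - mean([y for _, d, y in data if d == 0]))

propensity, stratum_effect, stratum_share = {}, {}, {}
for x in (0, 1):
    cell = [(d, y) for xi, d, y in data if xi == x]
    propensity[x] = mean([d for d, _ in cell])          # estimated P(D = 1 | X = x)
    treated = [y for d, y in cell if d == 1]
    control = [y for d, y in cell if d == 0]
    stratum_effect[x] = mean(treated) - mean(control)   # within-stratum contrast
    stratum_share[x] = len(cell) / len(data)

# Stratification estimator: within-stratum contrasts weighted by stratum size.
stratified = sum(stratum_share[x] * stratum_effect[x] for x in (0, 1))

print(f"estimated propensity scores: {propensity}")
print(f"naive difference in means:   {naive:.2f}")
print(f"stratified estimate:         {stratified:.2f}")
```

Under these assumptions the naive difference overstates the effect because x raises both the chance of treatment and the outcome, while the stratified estimate should land near the assumed effect of 1. The adjustment works only because x is observed, which is the limitation the notes raise about both approaches.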
While PSM reduces selection bias by equating groups on many known characteristics and provides an alternative method for estimating the treatment effect when treatment assignment is not random, Morgan and Winship’s stratification-as-matching technique helps distinguish stratum-specific effects from the treatment effect. Both offer an easy-to-implement procedure and a linear interpretation. However, PSM may not be valid when the data contain only a limited number of observations and there is little overlap in known characteristics between observations (quintessentially a small-sample problem), which makes the search for matched pairs extremely difficult (Shadish, Cook, and Campbell 2002). The same small-sample concern also applies to Morgan and Winship’s stratification technique. Pearl (2009) suggests explicitly modeling the qualitative causal relationships among treatment, outcome, and unobserved variables. Additionally, the stratification estimator as matching provides consistent estimates only when the true propensity score does not differ by strata or when the average stratum-specific causal effect does not vary by strata. Conversely, if we stratify our data too finely, we might encounter “overmatching,” which leaves little variation to be explained across observations (Manski 1995). Also, if no observations fall in the intersection of certain observed characteristics and treatment status, then we cannot use those groups as counterfactual or control groups. Lastly, both approaches control only for observed variables, and we can surmise that unobserved heterogeneity still exists because of omitted variables that remain unobservable. Recently, Ho et al. (2007) propose a non-parametric approach as a general procedure for researchers to implement in multivariate analysis. Researchers first balance their data with a conventional matching routine and then estimate a regression model on the balanced data.
Ho et al.’s matching procedure can be interpreted as a preprocessing step that prepares the data for subsequent analysis with conventional parametric methods. In light of this evidence, we can infer that for “matching” to work properly, the data under consideration must, as with all regression models, be large, with sufficient observations, and the groups must overlap on many known characteristics, so that matched units are close to one another in terms of their propensity scores (which minimizes within-stratum heterogeneity). Only then can “matching” provide a statistically well-behaved estimate and a theoretically sound interpretation of the causal effect.
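A minimal sketch of the two-step idea (match first, then fit a parametric model on the matched data) is given below. It is not Ho et al.’s own implementation: the simulated data, the single covariate x, the caliper of 0.25, nearest-neighbour matching with replacement, and the hand-written `ols` helper are all assumptions made to keep the example self-contained.

```python
import random

rng = random.Random(7)               # fixed seed for reproducibility
TRUE_EFFECT = 2.0                    # assumed treatment effect
CALIPER = 0.25                       # assumed maximum allowed distance in x

# Hypothetical observational data: units with larger x are treated more often.
units = []
for _ in range(2000):
    x = rng.uniform(0.0, 10.0)
    d = 1 if rng.random() < 0.1 + 0.08 * x else 0
    y = 1.0 + 0.5 * x + TRUE_EFFECT * d + rng.gauss(0.0, 1.0)
    units.append((x, d, y))

def mean(values):
    return sum(values) / len(values)

treated = [u for u in units if u[1] == 1]
controls = [u for u in units if u[1] == 0]

# Step 1 (preprocessing): pair each treated unit with its nearest control in x,
# with replacement, and drop treated units with no control inside the caliper.
matched = []
for t in treated:
    nearest = min(controls, key=lambda c: abs(c[0] - t[0]))
    if abs(nearest[0] - t[0]) <= CALIPER:
        matched.extend([t, nearest])

def ols(rows):
    """Least squares for y = b0 + b1*d + b2*x, solved via the normal equations."""
    X = [[1.0, float(d), x] for x, d, _ in rows]
    y = [row[2] for row in rows]
    k = 3
    # Augmented matrix [X'X | X'y].
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]

# Balance check: gap in mean x between treated and control, before and after.
gap_before = mean([u[0] for u in treated]) - mean([u[0] for u in controls])
gap_after = (mean([u[0] for u in matched if u[1] == 1])
             - mean([u[0] for u in matched if u[1] == 0]))

raw_difference = mean([u[2] for u in treated]) - mean([u[2] for u in controls])

# Step 2: conventional parametric model estimated on the matched data.
effect_matched = ols(matched)[1]

print(f"covariate gap before / after matching: {gap_before:.2f} / {gap_after:.2f}")
print(f"raw difference in means:  {raw_difference:.2f}")
print(f"regression on matched data: {effect_matched:.2f}")
```

Matching with replacement reuses controls in sparse regions of x, which is the overlap problem in miniature. If few controls resemble the treated units, the matched sample rests on a handful of repeated observations and the estimate becomes correspondingly fragile.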