* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download data structures and applicatons

Survey

Document related concepts

Transcript

DEPARTMENT OF INFORMATION TECHNOLOGY

EE2204 DATA STRUCTURES AND ALGORITHMS

(Common to EEE, EIE & ICE)

1. LINEAR STRUCTURES

9

Abstract Data Types (ADT) – List ADT – array-based implementation – linked list implementation –

cursor-based linked lists – doubly-linked lists – applications of lists – Stack ADT – Queue ADT – circular

queue implementation – Applications of stacks and queues

2. TREE STRUCTURES

9

Need for non-linear structures – Tree ADT – tree traversals – left child right sibling data structures for

general trees – Binary Tree ADT – expression trees – applications of trees – binary search tree ADT

3. BALANCED SEARCH TREES AND INDEXING

9

AVL trees – Binary Heaps – B-Tree – Hashing – Separate chaining – open addressing – Linear probing

4. GRAPHS

9

Definitions – Topological sort – breadth-first traversal - shortest-path algorithms – minimum spanning

tree – Prim's and Kruskal's algorithms – Depth-first traversal – biconnectivity – Euler circuits –

applications of graphs

5. ALGORITHM DESIGN AND ANALYSIS

9

Greedy algorithms – Divide and conquer – Dynamic programming – backtracking – branch and bound –

Randomized algorithms – algorithm analysis – asymptotic notations – recurrences – NP-complete

problems

L = 45 Total = 45

TEXT BOOKS

1. M. A. Weiss, “Data Structures and Algorithm Analysis in C”, Pearson Education Asia, 2002.

2. ISRD Group, “Data Structures using C”, Tata McGraw-Hill Publishing Company Ltd., 2006.

REFERENCES

1. A. V. Aho, J. E. Hopcroft, and J. D. Ullman, “Data Structures and Algorithms”, Pearson Education,

1983.

2. R. F. Gilberg, B. A. Forouzan, “Data Structures: A Pseudo code approach with C”, Second Edition,

Thomson India Edition, 2005.

3. Sara Baase and A. Van Gelder, “Computer Algorithms”, Third Edition, Pearson Education, 2000.

4. T. H. Cormen, C. E. Leiserson, R. L. Rivest, and C. Stein, "Introduction to algorithms", Second

Edition, Prentice Hall of India Ltd, 2001.

1

UNIT-I LINEAR STRUCTURES

1. Define data structure.

The organization, representation and storage of data is called the data structure. Since all programs

operate on data, a data structure plays an important role in deciding the final solution for the problem.

2. Define non-linear data structure.

Data structure which is capable of expressing more complex relationship than that of physical

adjacency is called non-linear data structure.

3. What are the operations of ADT?

Union, Intersection, size, complement and find are the various operations of ADT.

4. What is meant by list ADT?

List ADT is a sequential storage structure. General list of the form a1,2,a3.…., an and the size of

the list is 'n'. Any element in the list at the position I is defined to be ai, ai+1 the successor of ai and ai-1 is

the predecessor of ai.

5. What are the different ways to implement list?

• Simple array implementation of list

• Linked list implementation of list

6. What is meant by a linked list?

Linear list is defined as, item in the list called a node and contains two fields, an information field

and next address field. The information field holds the actual element on the list. the next address field

contains the address of the next node in the list.

7. What are the advantages of a linked list?

It is necessary to specify the number of elements in a linked list during its declaration.

Linked list can grow and shrink in size depending upon the insertion and deletion that

occurs in the list.

Insertion and deletion at any place in a list can be handed easily and efficiently.

A linked list does not waste any memory space.

8. What is a singly listed list?

The singly linked list , in which each node has a single link to its next node. This list is also

referred as a linear linked list. The head pointer points to the first node in the list and the null pointer is

stored in the link field of the last node in the list.

9. How is an element of a linked list called? What will it contain?

Linked list or list is an ordered collection of elements. Each element in the list is referred as a

node. Each node contains two fields namely,

Data field

Link field

The data field contains actual data of the element to be stored in the list and the link field referred as the

next address field contains the address of the next node in the list.

10. What is the use of header in a linked list?

A linked list contains a pointer, referred as the head pointer, which points to the first node in the

list that stores the address of the first node of the list.

11. What are the disadvantages of a singly linked list?

In a singly linked list, you can traverse (move) in a singly linked list in only one direction (i.e.

from head to null in a list). You can’t traverse the list in the reverse direction (i.e. ., from null to head in a

list.)

12. What are the operations can we perform on a linked list?

The basic operations that can be performed on linked list are,

Creation of a list.

Insertion of a node.

Modification of a node.

Deletion of a node.

2

Traversal of a node.

13. What are the applications of linked list?

Linked list form the basis of many data structures, so it’s worth looking at some applications that

are implemented using the linked list. Some important application using linked list are

Stacks

Queues

14. What is a singly linked circular list?

The next field in the last node contains a pointer back to the front node rather than the null pointer

such a list is called a circular list.

15. What is a double linked list?

Doubly linked list is an advanced form of a singly linked list, in which each node contains three

fields namely,

Previous address field.

Data field.

Next address field.

The previous address field of a node contains address of its previous node. The data field stores

the information part of the node. The next address field contains the address of the next node in

the list.

16. What are the basic operations in a circular doubly linked list?

The basis operations that can be performed on circular doubly linked lists are,

Creation of a list.

Insertion of a node.

Modification of a node.

Deletion of a node.

17. What is the advantage of doubly linked list?

For some applications, especially those where it is necessary to traverse list in both direction so,

doubly linked list work much better than singly linked list. Doubly linked list is an advanced form of a

singly linked list, in which each node contains three fields namely,

Previous address field.

Data field.

Next address field.

18. Define Stack.

A stack is an ordered collection of elements in which insertions and deletions are restricted to one

end. The end from which elements are added and/or removed is referred to as top of the stack.

19. Give some applications of stack.

Some important applications using stacks are

Conversion of infix to postfix

Expression evaluation

Backtracking problem

Towers of Hanoi.

20. What are the postfix and prefix forms of the expression?

A+B*(C-D)/(P-R)

Postfix form: ABCD-*PR-/+

Prefix form: +A/*B-CD-PR

21. Explain the usage of stack in recursive algorithm implementation?

In recursive algorithms, stack data structures is used to store the return address when a recursive

call is encountered and also to store the values of all the parameters essential to the current state of the

procedure.

3

22. Write down the operations that can be done with queue data structure?

Queue is a first - in -first out list. The operations that can be done with queue are addition and

deletion.

23. What is a circular queue?

The queue, which wraps around upon reaching the end of the array is called as circular queue.

24. What does a Priority Queue mean?

Queue is a kind of data structure, which stores and retries the data as First In First Out. But

priority queue disturbs the flow of FIFO and acts as per the priority of the job in the operating

system.

Del-min (H)

Priority queue.

H

Insert(H)

25. State Tower of Honoi.

The objective is to transfer the entire disk to tower 1 to entire tower 3 using tower2. The rules to

be followed in moving the disk from tower1 to tower 3 using tower 2 are as follows,

Only one disc can be moved at a time

Only the top disc on any tower can be moved to any other tower.

A larger disc cannot be placed on a smaller disc.

26. Define Queue.

A Queue is an ordered collection of elements in which insertions are made at one end and

deletions are made at the other end. The end at which insertions are made is referred to as the rear end,

and the end from which deletions are made is referred to as the front end.

27. Give some applications of queue.

Queues have many applications in computer systems. Most computers have only a singly

processor, so only one user may be served at a time. Entries from other users are placed in a queue. Each

entry gradually advances to the front of the queue as users receive their service. Queues are also used to

support print spooling.

28. What is abstract data type?(AU NOV 2011)

An Abstract data type (ADT) is a set of operations. Abstract data types are Mathematical

abstractions. Example:Object such as set, list, and graphs along their operations can be viewed as ADT.

Union, intersection, size, complement and find are the various operations of ADT.

General list: A1, A2, A3,…………..An

A1-> first element

An-> last element

In general Ai is an element in a list I

Ai-> current element position

Ai+1-> predecessor

29. List any applications of queue. (AU NOV 2011)

Batch processing in an operating system

* To implement Priority Queues.

* Priority Queues can be used to sort the elements using Heap Sort.

* Simulation.

* Mathematics user Queueing theory.

* Computer networks where the server takes the jobs of the client as per the queue strategy.

30. Define non linear data structure. (AU NOV 2011)

Data structure which is capable of expressing more complex relationship than that of

physical adjacency is called non-linear data structure.

4

31. Mention the advantages of representing stacks using linked list than arrays.(AUT

NOV 2011)

It is necessary to specify the number of elements in a linked list during its declaration.

Linked list can grow and shrink in size depending upon the insertion and deletion that

occurs in the list.

Insertion and deletion at any place in a list can be handed easily and efficiently.

A linked list does not waste any memory space.

32. Mention the applications of list.(AUT NOV 2011)

1.Polynomial ADT

2. Radix Sort

3. Multilist

Part B:

1. Explain with example the insertion & deletion in doubly linked list

Doubly Linked List

A Doubly linked list is a linked list in which each node has three fields namely data field,

forward link (FLINK) and Backward Link (BLINK). FLINK points to the successor node in the

list whereas BLINK points to the predecessor node.

STRUCTURE DECLARATION : Struct Node

{

int Element;

Struct Node *FLINK;

Struct Node *BLINK

};

ROUTINE TO INSERT AN ELEMENT IN A DOUBLY LINKED LIST

void Insert (int X, list L, position P)

{

Struct Node * Newnode;

Newnode = malloc (size of (Struct Node));

If (Newnode ! = NULL)

{

Newnode ->Element = X;

Newnode ->Flink = P Flink;

P ->Flink ->Blink = Newnode;

P ->Flink = Newnode ;

Newnode ->Blink = P;

}

}

ROUTINE TO DELETE AN ELEMENT

void Delete (int X, List L)

{

position P;

P = Find (X, L);

If ( IsLast (P, L))

{

Temp = P;

P ->Blink ->Flink = NULL;

5

free (Temp);

}

else

{

Temp = P;

P ->Blink ->Flink = P->Flink;

P ->Flink ->Blink = P->Blink;

free (Temp);

}

}

Advantage

* Deletion operation is easier.

* Finding the predecessor & Successor of a node is easier.

Disadvantage

* More Memory Space is required since it has two pointers.

2. Explain with example the insertion & deletion in singly linked list

Singly Linked List Implementation

Linked list consists of series of nodes. Each node contains the element and a pointer to its

successor node. The pointer of the last node points to NULL.

Insertion and deletion operations are easily performed using linked list.

A singly linked list is a linked list in which each node contains only one link field pointing to the

next node in the list.

DECLARATION FOR LINKED LIST

Struct node ;

typedef struct Node *List ;

typedef struct Node *Position ;

int IsLast (List L) ;

int IsEmpty (List L) ;

position Find(int X, List L) ;

void Delete(int X, List L) ;

position FindPrevious(int X, List L) ;

position FindNext(int X, List L) ;

void Insert(int X, List L, Position P) ;

void DeleteList(List L) ;

Struct Node

{

int element ;

position Next ;

};

ROUTINE TO INSERT AN ELEMENT IN THE LIST

void Insert (int X, List L, Position P)

/* Insert after the position P*/

6

{

position Newnode;

Newnode = malloc (size of (Struct Node));

If (Newnode! = NULL)

{

Newnode ->Element = X;

Newnode ->Next = P->Next;

P ->Next = Newnode;

}

}

ROUTINE TO CHECK WHETHER THE LIST IS EMPTY

int IsEmpty (List L) /*Returns 1 if L is empty */

{

if (L ->Next = = NULL)

return (1);

}

ROUTINE TO CHECK WHETHER THE CURRENT POSITION IS LAST

int IsLast (position P, List L) /* Returns 1 is P is the last position in L */

{

if (P->Next = = NULL)

return(1);

}

FIND ROUTINE

position Find (int X, List L)

{

/*Returns the position of X in L; NULL if X is not found */

position P;

P = L ->Next;

while (P! = NULL && P->Element ! = X)

P = P->Next;

return P;

}

}

FIND PREVIOUS ROUTINE

position FindPrevious (int X, List L)

{

/* Returns the position of the predecessor */

position P;

P = L;

while (P ->Next ! = Null && P ->Next ->Element ! = X)

P = P ->Next;

return P;

}

FINDNEXT ROUTINE

position FindNext (int X, List L)

{

/*Returns the position of its successor */

7

P = L ->Next;

while (P->Next! = NULL && P->Element ! = X)

P = P->Next;

return P->Next;

}

ROUTINE TO DELETE AN ELEMENT FROM THE LIST

void Delete(int X, List L)

{

/* Delete the first occurence of X from the List */

position P, Temp;

P = Findprevious (X,L);

If (!IsLast(P,L))

{

Temp = P->Next;

P ->Next = Temp->Next;

Free (Temp);

}

}

ROUTINE TO DELETE THE LIST

void DeleteList (List L)

{

position P, Temp;

P = L ->Next;

L->Next = NULL;

while (P! = NULL)

{

Temp = P->Next

free (P);

P = Temp;

}

}

3. Explain with example the insertion & deletion in circularly linked list(Refer singly LL)

Circular Linked List

In circular linked list the pointer of the last node points to the first node. Circular linked list can

be implemented as Singly linked list and Doubly linked list with or without headers.

Singly Linked Circular List

A singly linked circular list is a linked list in which the last node of the list points to the first

node.

Doubly Linked Circular List

A doubly linked circular list is a Doubly linked list in which the forward link of the last node

points to the first node and backward link of the first node points to the last node of the list.

Advantages of Circular Linked List

• It allows to traverse the list starting at any point.

• It allows quick access to the first and last records.

• Circularly doubly linked list allows to traverse the list in either

8

4. Write a procedure to insert &delete a element in the array implementation of stack

Stack Model :

A stack is a linear data structure which follows Last In First Out (LIFO) principle, in which both

insertion and deletion occur at only one end of the list called the Top.

Pile of coins., a stack of trays in cafeteria.

Operations On Stack

The fundamental operations performed on a stack are

1. Push

2. Pop

PUSH :

The process of inserting a new element to the top of the stack. For every push operation the top is

incremented by 1.

POP :

The process of deleting an element from the top of stack is called pop operation. After every pop

operation the top pointer is decremented by 1.

EXCEPTIONAL CONDITIONS

OverFlow

Attempt to insert an element when the stack is full is said to be overflow.

UnderFlow

Attempt to delete an element, when the stack is empty is said to be underflow.

Array Implementation of stack

In this implementation each stack is associated with a pop pointer, which is -1 for an empty

stack.

• To push an element X onto the stack, Top Pointer is incremented and then set Stack [Top] = X.

• To pop an element, the stack [Top] value is returned and the top pointer is decremented.

• pop on an empty stack or push on a full stack will exceed the array bounds.

void push (int x, Stack S)

{

if (IsFull (S))

Error ("Full Stack");

else

{

Top = Top + 1;

S[Top] = X;

}

}

int IsFull (Stack S)

{

if (Top = = Arraysize)

return (1);

}

ROUTINE TO POP AN ELEMENT FROM THE STACK

void pop (Stack S)

{

if (IsEmpty (S))

Error ("Empty Stack");

else

9

{

X = S [Top];

Top = Top - 1;

}

}

int IsEmpty (Stack S)

{

if (S Top = = -1)

return (1);

}

ROUTINE TO RETURN TOP ELEMENT OF THE STACK

int TopElement (Stack S)

{

if (! IsEmpty (s))

return S[Top];

else

Error ("Empty Stack");

return 0;

}

5. Write a procedure to insert & delete a element in the linked list implementation of stack

LINKED LIST IMPLEMENTATION OF STACK

• Push operation is performed by inserting an element at the front of the list.

• Pop operation is performed by deleting at the front of the list.

• Top operation returns the element at the front of the list.

struct node

{

int data;

struct node *link;

};

struct node *cur,*first;

void create();

void push();

void pop();

void display();

void create()

{

printf("\nENTER THE FIRST ELEMENT: ");

cur=(struct node *)malloc(sizeof(struct node));

scanf("%d",&cur->data);

cur->link=NULL;

first=cur;

}

void display()

10

{

cur=first;

printf("\n");

while(cur!=NULL)

{

printf("%d\n",cur->data);

cur=cur->link;

}

}

void push()

{

printf("\nENTER THE NEXT ELEMENT: ");

cur=(struct node *)malloc(sizeof(struct node));

scanf("%d",&cur->data);

cur->link=first;

first=cur;

}

void pop()

{

if(first==NULL)

{

printf("\nSTACK IS EMPTY\n");

}

else

{

cur=first;

printf("\nDELETED ELEMENT IS %d\n",first->data);

first=first->link;

free(cur);

}

}

6. Write a procedure to insert & delete a element in the array implementation of queue

A Queue is a linear data structure which follows First In First Out (FIFO) principle, in which

insertion is performed at rear end and deletion is performed at front end.

Example : Waiting Line in Reservation Counter,

Operations on Queue

The fundamental operations performed on queue are

1. Enqueue

2. Dequeue

Enqueue :

The process of inserting an element in the queue.

Dequeue :

The process of deleting an element from the queue.

Exception Conditions

Overflow : Attempt to insert an element, when the queue is full is said to be overflow condition.

11

Underflow : Attempt to delete an element from the queue, when the queue is empty is said to be

underflow.

Implementation of Queue

Queue can be implemented using arrays and pointers.

Array Implementation

In this implementation queue Q is associated with two pointers namely rear pointer and front

pointer.

To insert an element X onto the Queue Q, the rear pointer is incremented by 1 and then set

Queue [Rear] = X

To delete an element, the Queue [Front] is returned and the Front Pointer is incremented by 1.

ROUTINE TO ENQUEUE

void Enqueue (int X)

{

if (rear > = max _ Arraysize)

print (" Queue overflow");

else

{

Rear = Rear + 1;

Queue [Rear] = X;

}

}

ROUTINE FOR DEQUEUE

void delete ( )

{

if (Front < 0)

print (" Queue Underflow");

else

{

X = Queue [Front];

if (Front = = Rear)

{

Front = 0;

Rear = -1;

}

else

Front = Front + 1 ;

}

}

7. Write a procedure to insert & delete a element in the linked list implementation of queue

Linked List Implementation of Queue

Enqueue operation is performed at the end of the list.

Dequeue operation is performed at the front of the list.

void create()

12

{

printf("\nENTER THE FIRST ELEMENT: ");

cur=(struct node *)malloc(sizeof(struct node));

scanf("%d",&cur->data);

cur->link=NULL;

first=cur;

}

void display()

{

cur=first;

printf("\n");

while(cur!=NULL)

{

printf("%d\n",cur->data);

cur=cur->link;

}

}

void push()

{

printf("\nENTER THE NEXT ELEMENT: ");

cur=(struct node *)malloc(sizeof(struct node));

scanf("%d",&cur->data);

cur->link=first;

first=cur;

}

void pop()

{

if(first==NULL)

{

printf("\nSTACK IS EMPTY\n");

}

else

{

cur=first;

printf("\nDELETED ELEMENT IS %d\n",first->data);

first=first->link;

free(cur);

}

}

8. Write short notes on cursor implementation with example.

Cursor implementation is very useful where linked list concept has to be implemented without

using pointers.

Comparison on Pointer Implementation and Curson Implementation of Linked List.

Pointer Implementation Cursor Implementation

13

1. Data are stored in a collection of structures. Data are stored in a global array .Each structure

contains a data and next structures. Here array index is pointer. considered as an address.

Pointer Implementation Cursor Implementation

2. Malloc function is used to create a node and It maintains a list of free cells called free function

is used to released the cell. cursors space in which slot 0 is considered as a header and Next is

equivalent to the pointer which points to the next slot.

ROUTINE TO DELETE AN ELEMENT

void Delete (int X, List L)

{

position P, temp;

P = Findprevious (X, L);

if (! IsLast (P, L))

{

temp = CursorSpace [P].Next;

CursorSpace [P].Next = CursorSpace [temp].Next;

CursorFree (temp);

}

}

ROUTINE FOR INSERTION

void Insert (int X, List L, position P)

{

position newnode;

newnode = CursorAlloc ( );

if (newnode ! = 0)

CursorSpace [newnode].Element = X;

CursorSpace [newnode]. Next = CursorSpace [P].Next;

CursorSpace [P].Next = newnode;

}

9. Evaluate the following postfix expression

abcd+e*+f+* where a=3 b=2 c=5 d=6 e= 8 f=2

Read the postfix expression one character at a time until it encounters the delimiter `#'.

Step 1 : - If the character is an operand, push its associated value onto the stack.

Step 2 : - If the character is an operator, POP two values from the stack, apply the

operator to them and push the result onto the stack.

Ans=186

10. What is a doubly linked list? Write an algorithm for inserting and deleting an element from

doubly linked list.(16)(AU NOV 2011)(Refer 1)

11. What is doubly linked list? Explain the algorithm in detail for inserting a node to the left

and deleting a node from a doubly linked list(16)(AU MAY 2011)(Refer 1)

12. Write an algorithm and explain how insertions and deletions are carried out in a circular

queue implemented using linked list. (16)(AU MAY 2011)(Refer 3)

13. Explain operations of Doubly Linked list in detail with routines of add, delete node from

DLL.(16)(AUT NOV 2011)(Refer 1)

14. Write an algorithm for convert infix expression to postfix expression with an example of

(A+(B*C-(D/E^F)*G)*H).(16)(AUT NOV 2011)

Read the infix expression one character at a time until it encounters the delimiter. "#"

Step 1 : If the character is an operand, place it on to the output.

14

Step 2 : If the character is an operator, push it onto the stack. If the stack operator has a

higher or equal priority than input operator then pop that operator from the stack and

place it onto the output.

Step 3 : If the character is a left paraenthesis, push it onto the stack.

Step 4 : If the character is a right paraenthesis, pop all the operators from the stack till it

encounters left parenthesis, discard both the parenthesis in the output.

Postfix Expression:ABC*DEF^/G*-H*+

15. (i)Explain in detail the linked stack and linked queue(8) .(AU NOV 2011)(Refer 5 and 7)

(ii)Give two sorted lists, L1 and L2 , write procedure to compute L1 U L2 and L1 using only

the basic list operations.(8)(AU NOV 2011)(Refer 2)

UNIT-II TREE STRUCTURES

1. Define tree?

A tree is a data structure, which represents hierarchical relationship between individual data

items.

2. Define leaf?

In a directed tree any node which has out degree o is called a terminal node or a leaf.

3. Define Forest . (AU NOV 2011)

A forest is collection on N(N>0) disjoint tree or group of trees are called forest.

4. Define Level.

The level of a node in a binary tree is defined as the root of the tree has level 0, and the level of

any other node in the tree is one more than the level of its parent.

5. Define height or depth.

The height or depth of a tree is defined as the maximum level of any node in the tree.

6. Define Binary tree.

A Binary tree is a finite set of elements that is either empty or its partitioned into three disjoint

subsets contains a single element called root node of the tree. The other two subsets contains are

themselves binary trees, called the left and right sub trees of the original tree. A left of right sub tree can

be empty. Each element of a binary tree is called a node of the tree.

7. State some properties of a binary tree.

The maximum number of nodes on level n of a binary tree is 2n-1, where n>=1.

The maximum number of nodes in a binary tree of height n is 2n-1, where n>=1.

8. Define strictly binary tree.

If every non-leaf node in a binary tree has nonempty left and right sub trees, the tree is termed a

strictly binary tree.

9. Define full binary tree.

A full binary tree is a binary tree in which all the leaves are on the same level and every non-leaf

node has exactly two children.

10. Define Traversal.

One of the most important operations performed on a binary tree is its traversal. Traversing a

binary tree means moving through all the nodes in the binary tree, visiting each node in the tree exactly

once.

11. Give the types of traversal.

There are three different traversal of binary tree.

1. In order traversal

Process the left sub tree

Process the root

Process the right sub tree

Ex: A + B

15

2. Post order traversal

Process the left sub tree

Process the right sub tree

Process the root

Ex: AB+

3. Pre order traversal

Process the root

Process the left sub tree

Process the right sub tree

Ex: +AB

12. Give the code for pre order traversal.

Pretrav (p)

NODEPTR P;

{

if (P!=NULL)

{

Printf (“%d\n”,P->info);

Pretrav (P->left);

Pretrav (P->right);

}

}

13. Give the code for post order traversal.

posttrav(p)

NODEPTR P;

{

if(P!=NULL)

{

posttrav(P->left);

posttrav(P->right);

printf(“%d\n”,P->info);

}

}

14. Give the code for in order traversal.

intrav(p)

NODEPTR P;

{

if(P!=NULL)

{

posttrav(P->left);

printf(“%d\n”,P->info);

posttrav(P->right);

}}

15. What is meant by directed tree?

Directed tree is an acyclic diagraph which has one node called its root with indegree o whille all

other nodes have indegree I.

16. What is a ordered tree?

In a directed tree if the ordering of the nodes at each level is prescribed then such a tree is called

ordered tree.

17. What are the applications of binary tree?

Binary tree is used in data processing.

a. File index schemes

16

b. Hierarchical database management system

18. What is meant by traversing?

Traversing a tree means processing it in such a way, that each node is visited only once.

19. What is internal node and external node?

By definition, leaf nodes have no sons. Nonleafs are called internal nodes and leaves are

called external nodes.

20. What are the different types of traversing?

The different types of traversing are

a. Pre-order traversal-yields prefix from of expression.

b. In-order traversal-yields infix form of expression.

c. Post-order traversal-yields postfix from of expression.

21. What are the two methods of binary tree implementation?

Two methods to implement a binary tree are,

a. Linear representation.

b. Linked representation

22. Define in -order traversal?

In-order traversal entails the following steps;

a. Process the left subtree

b. Process the root node

c.

Process the right subtree

23. Define expression trees?

The leaves of an expression tree are operands such as constants or variable names and the other

nodes contain operators.

24. Define Binary search Tree.

Binary tree is a binary search tree in that for every node X in the tree, the values of all the keys in

its left sub tree are smaller than X, and the values of all the keys in its right sub tree are larger than X.

25. List out basic operations of Binary search Tree.

1. Make empty – create or initialize empty binary search tree

2. Find – find whether an element present in BST

3. Findmin & Findmax – find minimum and maximum from BST

4. Insert - insert an element into a proper node

Delete – delete an element from BST

26. Define complete binary tree. (AU NOV 2011)

A complete binary tree of height h has between 2h and 2h+1 - 1 nodes. In the bottom level the elements

should be filled from left to right.

28.What is a forest? (AU NOV 2011)

A forest is collection on N(N>0) disjoint tree or group of trees are called forest.

29.State the properties of a binary tree. (AUT NOV 2011)

Structure property: The elements are filled from left to right.

Each node contains at most two child.

30. Draw a directed tree representation of the formula (a+b*c)+((d*e+f)*g). (AUT NOV

2011)(Refer Part B 2)

31. Define tree. List the tree traversal techniques.( AU MAY 2011)

A tree is a data structure, which represents hierarchical relationship between individual data

items.

In order, Pre order and post order

32.Differentiate binary tree from binary search tree.( AU MAY 2011)

Part B

1. What is traversal? Give the algorithm for traversal in the binary tree

Tree Traversals

17

Traversing means visiting each node only once. Tree traversal is a method for visiting all the

nodes in the tree exactly once. There are three types of tree traversal techniques, namely

1. Inorder Traversal

2. Preorder Traversal

3. Postorder Traversal

Inorder Traversal

The inorder traversal of a binary tree is performed as

* Traverse the left subtree in inorder

* Visit the root

* Traverse the right subtree in inorder.

RECURSIVE ROUTINE FOR INORDER TRAVERSAL

void Inorder (Tree T)

{

if (T ! = NULL)

{

Inorder (T ->left);

printElement (T ->Element);

Inorder (T ->right);

}

}

Preorder Traversal

The preorder traversal of a binary tree is performed as follows,

* Visit the root

* Traverse the left subtree in preorder

* Traverse the right subtree in preorder.

RECURSIVE ROUTINE FOR PREORDER TRAVERSAL

void Preorder (Tree T)

{

if (T ! = NULL)

{

printElement (T ->Element);

Preorder (T ->left);

Preorder (T ->right);

}

Postorder Traversal

The postorder traversal of a binary tree is performed by the following steps.

* Traverse the left subtree in postorder.

* Traverse the right subtree in postorder.

* Visit the root.

RECURSIVE ROUTINE FOR POSTORDER TRAVERSAL

void Postorder (Tree T)

{

if (T ! = NULL)

{

Postorder (T ->Left);

Postorder (T ->Right);

PrintElement (T ->Element);

18

}

2. Write a program that reads an expression in its infix form and builds the binary tree

corresponding to that expression. Also write procedures to print the infix and postfix

forms of the expression, using in order and post order traversals of this tree.

Step 2: Read an expression.

Step 3: Scan the expression from left to right and repeat steps 4 to 7 for each character in the

expression till the delimiter.

Step 4: If the character is an operand, place it on to the output.

Step 5: If the character is an operator, then check the Stack operator has a higher or equal priority

than the input operator then pop that operator from the stack and place it onto the output. Repeat

this till there is no higher priority operator on the top of the Stack and push the input operator

onto the Stack.

Step 6: If the character is a left parenthesis, push it onto the Stack.

Step 7: If the character is a right parenthesis, pop all the operators from the Stack till it

encounters left parenthesis. Discard both the parenthesis in the output.

Step 8: Terminate the execution.

RECURSIVE ROUTINE FOR INORDER TRAVERSAL

void Inorder (Tree T)

{

if (T ! = NULL)

{

Inorder (T ->left);

printElement (T ->Element);

Inorder (T ->right);

}

}

RECURSIVE ROUTINE FOR PREORDER TRAVERSAL

void Preorder (Tree T)

{

if (T ! = NULL)

{

printElement (T ->Element);

Preorder (T ->left);

Preorder (T ->right);

}

RECURSIVE ROUTINE FOR POSTORDER TRAVERSAL

void Postorder (Tree T)

{

if (T ! = NULL)

{

postorder (T ->Left);

19

postorder (T ->Right);

printElement (T ->Element);

}

#include<stdio.h>

#include<alloc.h>

#include<process.h>

#include<conio.h>

#define SIZE 20

char Expr[SIZE];

char Stack[SIZE];

int Top=-1;

void push(char ch);

void pop();

void infix_to_postfix();

int m,l;

void main()

{

char ch;

clrscr();

printf("Program to covert infix expression into postfix expression:\n");

printf("Enter your expression & to quit enter fullstop(.)\n");

while((ch=getc(stdin))!='\n')

{

Expr[m]=ch;

m++;

}

l=m;

infix_to_postfix();

getch();

}

void push(char ch)

{

if(Top+1 >= SIZE)

{

printf("\nStack is full");

}

else

{

Top=Top+1;

Stack[Top] = ch;

}

20

}

void pop()

{

if (Top < 0)

{

printf("\n Stack is empty");

}

else

{

if(Top >=0)

{

if(Stack[Top]!='(')

printf("%c",Stack[Top]);

Top=Top-1;

}

}

}

void infix_to_postfix()

{

m=0;

while(m<l)

{

switch(Expr[m])

{

case '+' :

case '-' :

while(Stack[Top] =='-' || Stack[Top] =='+' ||Stack[Top] =='*'

||Stack[Top] =='/' ||Stack[Top] =='^' && Stack[Top] !='(')

pop();

push(Expr[m]);

++m;

break;

case '/' :

case '*' :

while(Stack[Top] =='*' ||Stack[Top] =='/' ||Stack[Top] =='^' &&

Stack[Top] !='(')

pop();

push(Expr[m]);

++m;

break;

21

case '^':

push(Expr[m]);

++m;

break;

case '(':

push(Expr[m]);

++m;

break;

case ')':

while(Stack[Top]!='(')

pop();

pop();

++m;

break;

case '.' :

while (Top >= 0)

pop();

exit(0);

default :

if(isalpha(Expr[m]))

{

printf("%c",Expr[m]);

++m;

break;

}

else

{

printf("\n Some error");

exit(0);

}

}

}

}

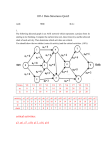

3. Draw a binary search tree for the following input 60, 25,75,15,50,66,33,44. Trace the

algorithm to delete the nodes 25, 75, 44 from the tree.

Binary Search tree:If the element is less than the root traverse to the left and if the element is

greater than the root traverse to the right and insert the element. Construct a binary search tree.

Insertion Routine for Binary search Tree

struct tree *insert(struct tree *t,int element)

{

if(t==NULL)

22

{

t=(struct tree *)malloc(sizeof(struct tree));

t->data=element;

t->lchild=NULL;

t->rchild=NULL;

return t;

}

else

{

if(element<t->data)

{

t->lchild=insert(t->lchild,element);

}

else

if(element>t->data)

{

t->rchild=insert(t->rchild,element);

}

else

if(element==t->data)

{

printf("element already present\n");

}

return t;

}

}

Deletion Routine for Binary search Tree

struct tree * del(struct tree *t, int element)

{

if(t==NULL)

printf("element not found\n");

else

if(element<t->data)

t->lchild=del(t->lchild,element);

else

if(element>t->data)

t->rchild=del(t->rchild,element);

else

if(t->lchild&&t->rchild)

{

temp=findmin(t->rchild);

t->data=temp->data;

t->rchild=del(t->rchild,t->data);

}

else

{

23

temp=t;

if(t->lchild==NULL)

t=t->rchild;

else

if(t->rchild==NULL)

t=t->lchild;

free(temp);

}

return t;

}

4. How do you represent binary tree in a list? Write an algorithm for finding K th element and

deleting an element. .(16)(AU NOV 2011)

REPRESENTATION OF A BINARY TREE

There are two ways for representing binary tree, they are

* Linear Representation

* Linked Representation

Linear Representation

The elements are represented using arrays. For any element in position i, the left child is in

position 2i, the right child is in position (2i + 1), and the parent is in position (i/2).

Linked Representation

The elements are represented using pointers. Each node in linked representation has three fields,

namely,

* Pointer to the left subtree

* Data field

* Pointer to the right subtree

In leaf nodes, both the pointer fields are assigned as NULL

5.

Construct an expression tree for the following expression (a+b*c)+(d*e+f)*g. .(16)(AU

NOV2011)(Refer 2)

6. Narrate the operations of Binary search tree on searching a node, Insertion node and

deletion node from binary tree with example.(16) (AUT NOV 2011)(Refer 3)

struct tree * find(struct tree *t, int element)

{

if(t==NULL)

return NULL;

if(element<t->data)

return(find(t->lchild,element));

else

if(element>t->data)

return(find(t->rchild,element));

else

return t;

}

struct tree *findmin(struct tree *t)

{

if(t==NULL)

return NULL;

24

else

if(t->lchild==NULL)

return t;

else

return(findmin(t->lchild));

}

struct tree *findmax(struct tree *t)

{

if(t!=NULL)

{

while(t->rchild!=NULL)

t=t->rchild;

}

return t;

}

7. i) Illustrate the construction of tree of a binary tree given its in order and post order

traversal.

Inorder:HDIJEKBALFMCNGO

Postorder:HIDJKEBLMFNPOGCA(10) (AUT NOV 2011)(Refer 4)

ii)To find inorder,preorder and postorder from a given tree.(6) (AUT NOV 2011)(Refer 2)

8. i)Derive the expression tree for the expression (a+b+c)+((d*e+f)*g). Briefly explain the

construction procedure for the above.(8) ( AU MAY 2011)(Refer 2)

ii)Write the routines to implement the basic binary search operations. ( AU MAY 2011)

9. i)Discuss how a node could be inserted in a binary tree?(8) .( AU MAY 2011)(Refer 4)

ii)Write the procedure in C to find the K th element in a binary tree(8) .( AU MAY

2011)(Refer 4)

UNIT III BALANCED SEARCH TREES AND INDEXING

1. What is a balance factor in AVL trees?

Balance factor of a node is defined to be the difference between the height of the node's left

subtree and the height of the node's right subtree.

2. What is meant by pivot node?

The node to be inserted travel down the appropriate branch track along the way of the deepest

level node on the branch that has a balance factor of +1 or -1 is called pivot node.

3. What is the length of the path in a tree?

The length of the path is the number of edges on the path. In a tree there is exactly one path form

the root to each node.

4. Define full binary tree.

A full binary tree is a binary tree in which all the leaves are on the same level and every non-leaf

node has exactly two children.

5. Explain AVL rotation.

Manipulation of tree pointers is centered at the pivot node to bring the tree back into height

balance. The visual effect of this pointer manipulation is to rotate the sub tree whose root is the pivot

node. This operation is referred as AVL rotation. Two types of rotation are single rotation and double

rotation.

6. What are the applications of tree?

Applications of tree are

a. Trees are used in the representation of sets

b. Decision making – ex Eight queens problem

25

c. Computer games like chess, tic-tac-toe, checkers etc

d. Manipulation of arithmetic expression

e. Symbol table construction

7. Define Maxheap and Minheap.

Maxheap: A heap in which the parent has a larger key than the child’s key values then it is called

Maxheap.

Minheap : A heap in which the parent has a smaller key than the child’s key values then it is called

Minheap.

8. What is the need for hashing?

Hashing is used to perform insertions, deletions and find in constant average time.

9. Define hash function?

Hash function takes an identifier and computes the address of that identifier in the hash table

using some function.

10. List out the different types of hashing functions?

The different types of hashing functions are,

a. The division method

b. The mind square method

c. The folding method

d. Multiplicative hashing

e. Digit analysis

11. What are the problems in hashing?

a. Collision

b. Overflow

12. What are the problems in hashing?

When two keys compute in to the same location or address in the hash table through any of the

hashing function then it is termed collision.

13. Define collision.

If when an element is inserted it hashes to the same value as an already inserted element, then we

have a collision and need to resolve it.

14. Write down the different collision resolution techniques.

Separate chaining

Open Addressing

Linear probing

Quadratic probing

Double hashing

15. Define separate chaining.

Separate chaining is a technique used to avoid collision, where a linked list is used to store the

keys which fall in to the same address in the hash table.

16. Define open addressing.

Open addressing is an alternative approach for collision resolution which does not requires

pointers for the implementation of hash table. If collision occurs, alternative cells are tried until an empty

cell is found.

17. Give the advantages and disadvantages of open addressing.

Advantages

All items are stored in the hash table itself. There is no need for another data structure.

Disadvantages

The keys of the objects to be hashed must be distinct.

Dependent on choosing a proper table size.

Requires the use of a three state (Occupied,Empty,Delete)flag in each cell.

18. Define Quadratic probing.

26

In the case of quadratic probing when we are looking for an empty location, instead of

incrementing the offset by 1 every time, we will increment the offset by 1, 4,9,16.. and so on.

19. Define cluster.

Placement of elements in the consecutive slots which forms a group called as cluster.

20. What is meant by primary clustering?

Whenever an insertion is made between two clusters that are separated by one un occupied

position in linear probing , the two clusters become one, thereby potentially increasing the cluster length

by an amount much greater than one.

21. What is meant by secondary clustering?

The phenomenon of primary clustering will not occur with quadratic probing. However if

multiple items all hash to the same initial bin, the same sequence of numbers will be followed. This is

called as secondary clustering. The effect is less significant than that of primary clustering.

22. Give some applications of hash table

Database system

Symbol table

Data dictionaries

Network processing algorithms

23. What are the characteristics of good hash function?

A good hash function avoids collision.

A good hash function tends to spread keys evenly in the array.

A good hash function is easy and fast

A good hash function uses all the information provided in the key.

24. Give the different types of hashing methods.

Division remainder method

Digit extraction method

Folding

Radix conversion

Random number generator

25. Define Linear probing

Linear probing algorithm works for inserting an element in to the slot as follows

If slot ‘I’ is empty,we occupy it

Otherwise check slot ‘i+1’,’i+2’..and so on, until empty slot is found.

If we reach the end of the hash table, we start at the front (slot 0).

26. Compare separate chaining and open addressing

Separate chaining has several advantages over open addressing:

Collision resolution is simple and efficient

The hash table can hold more elements without large performance deterioration of open

addressing

The performance of chaining declines much more slowing than open addressing

Deletion is easy

Table size need not be prime number.

The keys of the object s to be hashed need not be unique

Disadvantages of separate chaining:

It requires implementation of a separate data structure for chains, and code to manage it.

The main cost of chaining is the extra space required for the linked lists. For some

languages, creating new nodes is expensive and slows down the system.

27. Define AVL trees. (AU NOV 2011)

An AVL tree is a binary search tree except that for every node in the tree, the height of the left

and right subtrees can differ by atmost 1. The height of the empty tree is defined to be - 1. A

27

balance factor is the height of the left subtree minus height of the right subtree. For an AVL tree

all balance factor should be +1, 0, or -1. If the balance factor of any node in an AVL tree

becomes less than -1 or greater than 1, the tree has to be balanced by making either single or

double rotations.

28. In an AVL tree, at what condition the balancing is to be done?(AUT NOV 2011)

. A balance factor is the height of the left subtree minus height of the right subtree. For an AVL

tree all balance factor should be +1, 0, or -1. If the balance factor of any node in an AVL tree

becomes less than -1 or greater than 1, the tree has to be balanced by making either single or

double rotations.

29.What is collision hashing? (AUT NOV 2011)

If when an element is inserted it hashes to the same value as an already inserted element, then we

have a collision and need to resolve it.

30.What is meant by binary heaps?(AU MAY 2011)

The efficient way of implementing priority queue is Binary Heap. Binary heap is merely referred

as Heaps, Heap have two properties namely

* Structure property

* Heap order property.

31.What is linear hashing? Specify its merits and demerits.( AU MAY 2011)

In linear probing, for the ith probe the position to be tried is (h(k) + i) mod tablesize,

where F(i) = i, is the linear function. In linear probing, the position in which a key can be stored

is found by sequentially searching all position starting from the position calculated by the hash

function until an empty cell is found. If the end of the table is reached and no empty cells has

been found, then the search is continued from the beginning of the table. It has a tendency to

create clusters in the table.

Advantages

It doesn't requires pointers

Disadvantage

It forms clusters, which degrades the performance of the hash table for storing and retrieving

Part-B:

1. What are AVL tree? Explain in detail the AVL rotations.

AVL Tree : - (Adelson - Velskill and LANDIS)

An AVL tree is a binary search tree except that for every node in the tree, the height of the left

and right subtrees can differ by atmost 1. The height of the empty tree is defined to be - 1.

A balance factor is the height of the left subtree minus height of the right subtree. For an AVL

tree all balance factor should be +1, 0, or -1. If the balance factor of any node in an AVL tree

becomes less than -1 or greater than 1, the tree has to be balanced by making either single or

double rotations.

An AVL tree causes imbalance, when any one of the following conditions occur.

Case 1 : An insertion into the left subtree of the left child of node .

Case 2 : An insertion into the right subtree of the left child of node .

Case 3 : An insertion into the left subtree of the right child of node .

Case 4 : An insertion into the right subtree of the right child of node .

These imbalances can be overcome by

1. Single Rotation

2. Double Rotation.

Single Rotation

Single Rotation is performed to fix case 1 and case 4.

Case 1. An insertion into the left subtree of the left child of K2.

28

Single Rotation to fix Case 1.

ROUTINE TO PERFORM SINGLE ROTATION WITH LEFT

SingleRotatewithLeft (Position K2)

{

Position K1;

K1 = K2 ->Left ;

K2 ->left = K1 ->Right ;

K1 ->Right = K2 ;

K2 ->Height = Max (Height (K2 ->Left), Height (K2-> Right)) + 1 ;

K1 ->Height = Max (Height (K1 ->left), Height (K1 ->Right)) + 1;

return K1 ;

}

Single Rotation to fix Case 4 :Case 4 : - An insertion into the right subtree of the right child of K1.

ROUTINE TO PERFORM SINGLE ROTATION WITH RIGHT :Single Rotation With Right (Position K2)

{

Position K2 ;

K2 = K1-> Right;

K1 ->Right = K2 ->Left ;

K2 ->Left = K1 ;

K2 ->Height = Max (Height (K2 ->Left), Height (K2 ->Right)) +1 ;

K1 ->Height = Max (Height (K1 ->Left), Height (K1 ->Right)) +1 ;

Return K2 ;

}

Single Rotation with left (K3)

Double Rotation

Double Rotation is performed to fix case 2 and case 3.

Case 2 :

An insertion into the right subtree of the left child.

ROUTINE TO PERFORM DOUBLE ROTATION WITH LEFT :

Double Rotate with left (Position K3)

{ /* Rotation Between K1 & K2 */

K3 ->Left = Single Rotate with Right (K3 ->Left);

/* Rotation Between K3 & K2 */

Return Single Rotate With Left (K3);

}

Case 4 :

An Insertion into the left subtree of the right child of K1.

ROUTINE TO PERFORM DOUBLE ROTATION WITH RIGHT :

Double Rotate with Right (Position K1)

{

/* Rotation Between K2 & K3 */

K1-> Right = Single Rotate With Left (K1-> Right);

/* Rotation Between K1 & K2 */

return Single Rotate With Right (K1);

29

2. Write a ‘C’ program to create hash table and handle the collision using linear probing.

LINEAR PROBING

In linear probing, for the ith probe the position to be tried is (h(k) + i) mod tablesize, where F(i) =

i, is the linear function.

In linear probing, the position in which a key can be stored is found by sequentially searching all

position starting from the position calculated by the hash function until an empty cell is found.

If the end of the table is reached and no empty cell has been found, then the search is continued

from the beginning of the table. It has a tendency to create clusters in the table.

Advantage:

* It doesn't requires pointers

Disadvantage

* It forms clusters, which degrades the performance of the hash table for storing and retrieving

data.

3. How will you resolve the collisions, while inserting elements in to the hash table using

separate chaining and linear probing? Write the routines for inserting and searching

elements from the hash table using the above mentioned techniques.

SEPARATE CHAINING

Separate chaining is an open hashing technique. A pointer field is added to each record

location. When an overflow occurs this pointer is set to point to overflow blocks making a linked

list.

In this method, the table can never overflow, since the linked list are only extended upon the

arrival of new keys.

ROUTINE TO PERFORM INSERTION

void Insert (int key, Hashtable H)

{

Position Pos, Newcell;

List L;

/* Traverse the list to check whether the key is already present */

Pos = FIND (Key, H);

If (Pos = = NULL) /* Key is not found */

{

Newcell = malloc (size of (struct ListNode));

If (Newcell ! = NULL)

(

L = H ->Thelists [Hash (key, H->Tablesize)];

Newcell ->Next = L ->Next;

Newcell ->Element = key;

/* Insert the key at the front of the list */

L ->Next = Newcell;

}

}

}

FIND ROUTINE

Position Find (int key, Hashtable H)

{

Position P;

30

List L;

L = H Thelists [Hash (key, H->Tablesize)];

P = L Next;

while (P! = NULL && P->Element ! = key)

P = p->Next;

return p;

}

Advantage

More number of elements can be inserted as it uses array of linked lists.

Disadvantage of Separate Chaining

* It requires pointers, which occupies more memory space.

* It takes more effort to perform a search, since it takes time to evaluate the hash function and

also to traverse the list.

LINEAR PROBING

In linear probing, for the ith probe the position to be tried is (h(k) + i) mod tablesize, where F(i) =

i, is the linear function.

In linear probing, the position in which a key can be stored is found by sequentially searching all

position starting from the position calculated by the hash function until an empty cell is found.

If the end of the table is reached and no empty cell has been found, then the search is continued

from the beginning of the table. It has a tendency to create clusters in the table.

Advantage:

* It doesn't requires pointers

Disadvantage

* It forms clusters, which degrades the performance of the hash table for storing and retrieving

data.

4. Explain in detain B-Tree operation.

In computer science, a B-tree is a tree data structure that keeps data sorted and

allows searches, sequential access, insertions, and deletions in logarithmic time. The Btree is a generalization of a binary search tree in that a node can have more than two

children. (Comer 1979, p. 123) Unlike self-balancing binary search trees, the B-tree is

optimized for systems that read and write large blocks of data. It is commonly used in

databases and filesystems.

In B-trees, internal (non-leaf) nodes can have a variable number of child nodes

within some pre-defined range. When data is inserted or removed from a node, its number

of child nodes changes. In order to maintain the pre-defined range, internal nodes may be

joined or split. Because a range of child nodes is permitted, B-trees do not need rebalancing as frequently as other self-balancing search trees, but may waste some space,

since nodes are not entirely full. The lower and upper bounds on the number of child

nodes are typically fixed for a particular implementation. For example, in a 2-3 B-tree

(often simply referred to as a 2-3 tree), each internal node may have only 2 or 3 child

nodes.

According to Knuth's definition, a B-tree of order m (the maximum number of children for each

node) is a tree which satisfies the following properties:

1. Every node has at most m children.

2. Every node (except root) has at least ⌈m⁄2⌉ children.

31

3. The root has at least two children if it is not a leaf node.

4. A non-leaf node with k children contains k−1 keys.

5. All leaves appear in the same level, and carry information.

Each internal node’s elements act as separation values which divide its sub trees. For example, if

an internal node has 3 child nodes (or subtrees) then it must have 2 separation values or

elements: a1 and a2. All values in the leftmost subtree will be less than a1, all values in the

middle subtree will be between a1 and a2, and all values in the rightmost subtree will be greater

than a2.

Internal nodes

Internal nodes are all nodes except for leaf nodes and the root node. They are usually

represented as an ordered set of elements and child pointers. Every internal node contains

a maximum of U children and a minimum of L children. Thus, the number of elements

is always 1 less than the number of child pointers (the number of elements is between

L−1 and U−1). U must be either 2L or 2L−1; therefore each internal node is at least half

full. The relationship between U and L implies that two half-full nodes can be joined to

make a legal node, and one full node can be split into two legal nodes (if there’s room to

push one element up into the parent). These properties make it possible to delete and

insert new values into a B-tree and adjust the tree to preserve the B-tree properties.

The root node

The root node’s number of children has the same upper limit as internal nodes, but has no

lower limit. For example, when there are fewer than L−1 elements in the entire tree, the

root will be the only node in the tree, with no children at all.

Leaf nodes

Leaf nodes have the same restriction on the number of elements, but have no children,

and no child pointers.

A B-tree of depth n+1 can hold about U times as many items as a B-tree of depth n, but the cost

of search, insert, and delete operations grows with the depth of the tree. As with any balanced

tree, the cost grows much more slowly than the number of elements.

Some balanced trees store values only at leaf nodes, and use different kinds of nodes for leaf

nodes and internal nodes. B-trees keep values in every node in the tree, and may use the same

structure for all nodes. However, since leaf nodes never have children, the B-trees benefit from

improved performance if they use a specialized structure.

32

Insertion

A B Tree insertion example with each iteration. The nodes of this B tree have at most 3 children

(Knuth order 3).

All insertions start at a leaf node. To insert a new element, search the tree to find the leaf node

where the new element should be added. Insert the new element into that node with the following

steps:

1. If the node contains fewer than the maximum legal number of elements, then there is

room for the new element. Insert the new element in the node, keeping the node's

elements ordered.

2. Otherwise the node is full, evenly split it into two nodes so:

1. A single median is chosen from among the leaf's elements and the new element.

2. Values less than the median are put in the new left node and values greater than

the median are put in the new right node, with the median acting as a separation

value.

3. The separation value is inserted in the node's parent, which may cause it to be

split, and so on. If the node has no parent (i.e., the node was the root), create a

new root above this node (increasing the height of the tree).

33

Deletion

There are two popular strategies for deletion from a B-Tree.

1. Locate and delete the item, then restructure the tree to regain its invariants, OR

2. Do a single pass down the tree, but before entering (visiting) a node, restructure the tree

so that once the key to be deleted is encountered, it can be deleted without triggering the

need for any further restructuring

The algorithm below uses the former strategy.

There are two special cases to consider when deleting an element:

1. The element in an internal node may be a separator for its child nodes

2. Deleting an element may put its node under the minimum number of elements and

children

The procedures for these cases are in order below.

Deletion from a leaf node

1. Search for the value to delete

2. If the value's in a leaf node, simply delete it from the node

3. If underflow happens, check siblings, and either transfer a key or fuse the siblings

together

4. If deletion happened from right child, retrieve the max value of left child if it has no

underflow

5. In vice-versa situation, retrieve the min element from right

Deletion from an internal node

Each element in an internal node acts as a separation value for two subtrees, and when such an

element is deleted, two cases arise.

In the first case, both of the two child nodes to the left and right of the deleted element have the

minimum number of elements, namely L−1. They can then be joined into a single node with

2L−2 elements, a number which does not exceed U−1 and so is a legal node. Unless it is known

that this particular B-tree does not contain duplicate data, we must then also (recursively) delete

the element in question from the new node.

In the second case, one of the two child nodes contains more than the minimum number of

elements. Then a new separator for those subtrees must be found. Note that the largest element in

the left subtree is still less than the separator. Likewise, the smallest element in the right subtree

is the smallest element which is still greater than the separator. Both of those elements are in leaf

nodes, and either can be the new separator for the two subtrees.

34

1. If the value is in an internal node, choose a new separator (either the largest element in

the left subtree or the smallest element in the right subtree), remove it from the leaf node

it is in, and replace the element to be deleted with the new separator

2. This has deleted an element from a leaf node, and so is now equivalent to the previous

case

Rebalancing after deletion

If deleting an element from a leaf node has brought it under the minimum size, some elements

must be redistributed to bring all nodes up to the minimum. In some cases the rearrangement will

move the deficiency to the parent, and the redistribution must be applied iteratively up the tree,

perhaps even to the root. Since the minimum element count doesn't apply to the root, making the

root be the only deficient node is not a problem. The algorithm to rebalance the tree is as follows

1. If the right sibling has more than the minimum number of elements

1. Add the separator to the end of the deficient node

2. Replace the separator in the parent with the first element of the right sibling

3. Append the first child of the right sibling as the last child of the deficient node

2. Otherwise, if the left sibling has more than the minimum number of elements

1. Add the separator to the start of the deficient node

2. Replace the separator in the parent with the last element of the left sibling

3. Insert the last child of the left sibling as the first child of the deficient node

3. If both immediate siblings have only the minimum number of elements

1. Create a new node with all the elements from the deficient node, all the elements

from one of its siblings, and the separator in the parent between the two combined

sibling nodes

2. Remove the separator from the parent, and replace the two children it separated

with the combined node

3. If that brings the number of elements in the parent under the minimum, repeat

these steps with that deficient node, unless it is the root, since the root is permitted

to be deficient

The only other case to account for is when the root has no elements and one child. In this case it

is sufficient to replace it with its only child.

5. Explain with algorithm how insertion and deletion is performed in a AVL tree. Explain how

the tree is balanced after the operation.(16)(AU NOV 2011)

INSERTION ROUTINE

struct avltree *insertion(struct avltree *t,int x)

{

if(t==NULL)

{

t=(struct avltree*)malloc(sizeof(struct avltree));

t->data=x;

t->left=t->right=NULL;

t->height=0;

35

}

else if(x<t->data)

{

t->left=insertion(t->left,x);

if((height(t->left)-height(t->right))==2)

{

if(x<t->left->data)

t=singlerotationwithleft(t);

else

t=doublerotationwithleft(t);

}

}

else if(x>t->data)

{

t->right=insertion(t->right,x);

if((height(t->right)-height(t->left)) ==2)

{

if(x>t->right->data)

t=singlerotationwithright(t);

else

t=doublerotationwithright(t);

}

}

t->height=max(height(t->left),height(t->right))+1;

return t;

}

DELETION ROUTINE

Deletion is same as binary search tree .After deleting the element we have to check the balance factor of

the tree .If it is un balanced ,do suitable rotations to make the tree balanced.

6. i)Write the procedure in C that will traverse a linked B- tree , visiting all its entries in order

of keys(samller to larger). .(10)(AU NOV 2011)(Refer 4)

ii)What is meant by collision hashing?Explain the separate chaining resolution

strategy.(6)(AU NOV 2011)(Refer 3)

7. Write the functions to insert and delete elements from AVL tree. .(16)(AU NOV 2011)

(Refer 5)

8. What is meant by open addressing? Explain the collision resolution strategies. .(16)(AU NOV

2011)

Open addressing is also called closed Hashing, which is an alternative to resolve the collisions

with linked lists.

In this hashing system, if a collision occurs, alternative cells are tried until an empty cell is

found. (ie) cells h0(x), h1(x), h2(x).....are tried in succession.

There are three common collision resolution strategies. They are

(i) Linear Probing

(ii) Quadratic probing

(iii) Double Hashing.

36

LINEAR PROBING

In linear probing, for the ith probe the position to be tried is (h(k) + i) mod tablesize, where F(i) =

i, is the linear function.

In linear probing, the position in which a key can be stored is found by sequentially searching all

position starting from the position calculated by the hash function until an empty cell is found.

If the end of the table is reached and no empty cell has been found, then the search is continued

from the beginning of the table. It has a tendency to create clusters in the table.

Advantage:

* It doesn't requires pointers

Disadvantage

* It forms clusters, which degrades the performance of the hash table for storing and retrieving

data.

QUADRATIC PROBING

Quadratic probing is a collision resolution technique that eliminates the primary

clustering problem of linear probing.

The popular choice is F(i)=i2

Position Find(ElementType key,hashTable H)

{

Position CurrentPos;

int CollisionNum;

CollisionNum=0;

CurrentPos=Hash(Key,H->TableSize);

while(H->TheCells[CurrentPos].Info!=Empty&&H->TheCells[CurrentPos].Element!=key)

{

CurrentPos+=2*++CollisionNum-1;

if(CurrentPos>=H->TableSize)

CurrentPos-=H->TableSize;

}

Return CurrentPos;

}

Void Insert(ElementType Key,HashTable H)

{

Position Pos;

Pos=Fin

Disadvantage :

Secondary clustering

DOUBLE HASHING

Hash1(key)=key%tablesize

Hash2(key)=R-(key%R)

R is a prime number smaller than the table size.

9. Discuss about AVL Trees.(16)(AUT NOV 2011)(Refer 1)

37

10. i)Briefly explain the hash function in detail.(6) (AUT NOV 2011)

Hash Table

The hash table data structure is an array of some fixed size, containing the keys. A key is a value

associated with each record.

Hashing Function

A hashing function is a key - to - address transformation, which acts upon a given key to

compute the relative position of the key in an array.

A simple Hash function

HASH (KEYVALUE) = KEYVALUE MOD TABLESIZE

Example : - Hash (92)

Hash (92) = 92 mod 10 = 2

The keyvalue `92' is placed in the relative location `2'.

ROUTINE FOR SIMPLE HASH FUNCTION

Hash (Char *key, int Table Size)

{

int Hashvalue = 0;

while (* key ! = `\0')

Hashval + = * key ++;

return Hashval % Tablesize;

}

Some of the Methods of Hashing Function

1. Module Division

2. Mid - Square Method

3. Folding Method

4. PSEUDO Random Method

5. Digit or Character Extraction Method

6. Radix Transformation.

ii)Narrate B-Tree operations(10) (AUT NOV 2011)(Refer 4)

UNIT-IV GRAPHS

1 .Define a Graph

Graph is a non-linear data structure that represents less relationship between its adjacent

elements. There is no hierarchical relationship between the adjacent elements in case of graphs.

A Graph G={V,E} consists of two sets V & E, where V is a set of vertices and E is a set of Edges.

The set E is set of pair of vertices. So each is a pair (v,w) where v,w is an element of V.

2. Define adjacent nodes.

Any two nodes which are connected by an edge in a graph are called adjacent nodes. For example

if an edge x e E is associated with a pair of nodes (u,v), where u, v e V, then we say that the edge x

connect the nodes u and v.

3. Define undirected graph

If an edge between any two nodes in a graph is not directionally oriented, a graph is called as undirected

graph. It is also called as unqualified graph.

4. Define directed graph

If an edge between any two nodes in a graph is directionally oriented, a graph is called as directed

graph.It is also called as digraph.

5. Define a path in a graph.

A path in a graph is defined as a sequence of distinct vertices each adjacent to the next, except

possibly the first vertex and last vertex is different.

6. Define a cycle in a graph.

38

A cycle is a path containing at least thee vertices such that the starting and the ending vertices are

the same.

7. Define a strongly connected graph.

A directed graph is said to be a strongly connected graph if for every pair of distinct vertices there exists a

directed path from every vertex to ever other vertex. It is also called so Complete Graph.

8. Define a weakly connected graph.

A directed graph is said to be a weakly connected graph if any vertex doesn’t have a directed path

to any other vertices.

9. Define a weighted graph.

A graph is said to be weighted graph if every edge in the graph is assigned some weight or value.

The weight of an edge is a positive value that may be representing the distance between the vertices or the

weights of the edges along the path.

10. Define parallel edges

In some directed as well as undirected graph certain pair of nodes are joined by more than one

edge, such edges are called parallel edges.

11. Define Adjacency Matrix.

Adjacency Matrix is a representation used to represent a graph with zeros and ones.A graph

containing n vertices can be represented using n rows and n columns.

12. What is meant by traversing a graph? State the different ways of traversing a Graph.

It means visiting all the nodes in the graph.

Depth-first Traversal(or) Depth-first Search(DFS)

Breadth-first Traversal(or) Breadth-first Search(BFS)

13.Define out degree of a Graph

In a directed graph, for any node v, the numbers of outgoing edges from v are called out degree of a

node v.

14. Define in degree of a Graph

In a directed graph, for any node v, the number of incoming edges to v, are called in degree of a node v.

15. Define total degree of a node

The sum of the in degree and out degree of a node is called the total degree of the node

16. What is the use of Topological sort

A Topological sort is an ordering of vertices in a directed acyclic graph, such that there is a path from

vi to vj then vj appears after vi in the ordering. Topological ordering is linear ordering of vertices.

17. Define biconnected graph

A connected undirected graph G is said to be biconnected, if E remains connected after the

removal of any one vertex and the edges that are incident upon that vertex.

18. What is minimum spanning tree.

A minimum spanning tree of an undirected graph G is a tree formed graph edges that connects all

the vertices of G at the lowest total cost. The number of edges in a minimum spanning tree is | v | -1.

19. What is the running time for Prim’s algorithm

The running time for Prim’s algorithm withput using heaps = O ( | v | 2 ), which is optimal for dense

graph. The running time for Prim’s algorithm using binary heaps = O (| E | log | V |) which is good for

sparse graphs.

20. Define articulation point in a graph

The articulation point is the point at which the removal of the vertex will split the graph.

21.Define biconnectivity.(AU NOV 2011)

A connected undirected graph G is said to be biconnected, if E remains connected after the removal of

any one vertex and the edges that are incident upon that vertex.

22. What is meant by digraph? Define the terms in degree and out degree with respect to

digraph?(AU MAY 2011)

If an edge between any two nodes in a graph is directionally oriented, a graph is called as

directed graph.It is also called as digraph.

39

Out degree

In a directed graph, for any node v, the numbers of outgoing edges from v are called out degree of a

node v.

In degree

In a directed graph, for any node v, the number of incoming edges to v, are called in degree of a node

v.

23.Write the adjacency matrix for the graph.(AU MAY 2011)

Adjacency Matrix is a representation used to represent a graph with zeros and ones.A graph containing n