Inferring a Gaussian distribution, Thomas P. Minka. 1 Introduction

... p(V|X). It also derives p(X|Gaussian), the probability that the data came from any Gaussian whatsoever. From this we can get the posterior predictive density p(x|X), which has the most practical importance. The analysis is done for noninformative priors and for arbitrary conjugate priors. The presen ...

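The Minka excerpt mentions the posterior predictive density p(x|X). The sketch below is only a rough one-dimensional illustration. It assumes the common noninformative prior p(m, V) ∝ 1/V; the paper's exact prior and its multivariate treatment may differ. Under that assumption the predictive density is a Student-t with n − 1 degrees of freedom, centred at the sample mean x̄, with scale s·sqrt(1 + 1/n), where s is the sample standard deviation. The function names are hypothetical.

```python
import math
import statistics

def student_t_pdf(x, dof, loc, scale):
    """Density of a location-scale Student-t distribution."""
    z = (x - loc) / scale
    log_norm = (math.lgamma((dof + 1) / 2) - math.lgamma(dof / 2)
                - 0.5 * math.log(dof * math.pi) - math.log(scale))
    return math.exp(log_norm - (dof + 1) / 2 * math.log1p(z * z / dof))

def posterior_predictive_pdf(x, data):
    """p(x | X) for 1-D Gaussian data with unknown mean and variance.

    Assumption: noninformative prior p(m, V) proportional to 1/V.
    """
    n = len(data)
    if n < 2:
        raise ValueError("need at least two observations")
    xbar = statistics.mean(data)
    s = statistics.stdev(data)  # sample SD, n - 1 denominator
    return student_t_pdf(x, n - 1, xbar, s * math.sqrt(1 + 1 / n))

# Usage with a small hypothetical sample
sample = [1.0, 2.0, 3.0, 4.0]
print(posterior_predictive_pdf(2.5, sample))
```

The heavier-than-Gaussian tails of the t density reflect the uncertainty in both the mean and the variance. As n grows, the density approaches a Gaussian with mean x̄ and standard deviation s.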

Week1_Lecture 3_post

... From it, we can see: 1) The SD always equals zero if all the values in a particular dataset are the same (i.e., no spread in value). 2) The SD will be very large if the values in the dataset vary a lot from each other (i.e., a huge spread in value). Therefore, in this sense, we use SD as a measur ...

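The two observations about the SD can be checked directly. The datasets below are hypothetical and were chosen only for illustration. The sketch uses `statistics.pstdev`, which divides by n. `statistics.stdev`, which divides by n − 1, behaves the same way with respect to spread.

```python
import statistics

# Hypothetical datasets, chosen only to illustrate the two observations
no_spread = [5, 5, 5, 5]
small_spread = [4, 5, 5, 6]
large_spread = [0, 5, 5, 10]

for name, data in [("no spread", no_spread),
                   ("small spread", small_spread),
                   ("large spread", large_spread)]:
    # Population SD: square root of the mean squared deviation from the mean
    print(name, statistics.pstdev(data))
```

The first line prints 0.0. The SD grows as the values move further from their common mean.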

Probability Distributions - Department of Earth System Science

... P = the probability that a randomly selected value of a variable X falls between a and b. f(x) = the probability density function. The density function must be integrated between definite limits a and b to obtain a probability. For a continuous variable, the probability that X takes any single particular value is ZERO. Two importa ...

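The sketch below illustrates P(a ≤ X ≤ b) = ∫ f(x) dx from a to b. It uses a standard normal density as an assumed example and integrates it with the trapezoid rule. The result is checked against the closed-form CDF, written with `math.erf`. When a = b the interval has zero width, so the integral, and hence the probability, is zero. The helper names are hypothetical.

```python
import math

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Gaussian probability density f(x)."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def normal_cdf(x, mu=0.0, sigma=1.0):
    """Gaussian cumulative distribution function, P(X <= x)."""
    return 0.5 * (1 + math.erf((x - mu) / (sigma * math.sqrt(2))))

def prob_between(pdf, a, b, steps=10_000):
    """Approximate P(a <= X <= b) by integrating pdf from a to b (trapezoid rule).

    If a == b the step width h is 0, so the result is exactly 0.0.
    """
    h = (b - a) / steps
    total = 0.5 * (pdf(a) + pdf(b))
    for i in range(1, steps):
        total += pdf(a + i * h)
    return total * h

print(prob_between(normal_pdf, -1.0, 1.0))   # close to normal_cdf(1) - normal_cdf(-1)
print(prob_between(normal_pdf, 0.5, 0.5))    # 0.0: a single point has zero probability
```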

A Markov chain approach to quality control

... with many well-known run and scan statistics, as well as with analogous statistics not yet discussed in the literature that may be useful at a practical level. Moreover, using the algorithms provided here, it is possible to apply the approach in many real situations even when the limits concerning ...

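The sketch below is a generic illustration of how a Markov chain can handle a run statistic. It is not the algorithm from the article. Suppose a control rule signals when k consecutive points fall beyond a limit, and each point does so independently with probability p (both are assumptions). Then the chain's state is the length of the current run, capped at an absorbing state k. The function name is hypothetical.

```python
def prob_run_at_least(n, k, p):
    """P(at least one run of >= k consecutive 'hits' in n independent trials).

    State j (0..k-1) is the length of the current run of hits; state k is
    absorbing and means a run of length k has already occurred.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    dist = [0.0] * (k + 1)
    dist[0] = 1.0  # before any trial, the current run length is 0
    for _ in range(n):
        new = [0.0] * (k + 1)
        new[k] = dist[k]  # absorbed probability stays absorbed
        for j in range(k):
            new[j + 1] += dist[j] * p      # a hit extends the run
            new[0] += dist[j] * (1 - p)    # a miss resets the run
        dist = new
    return dist[k]

# Example: chance of 3 points in a row beyond a limit among 20 points,
# if each point is beyond the limit with probability 0.05 (assumed values)
print(prob_run_at_least(20, 3, 0.05))
```

Each trial is one multiplication by the chain's transition matrix, written out here as explicit loops.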

sampling - Routledge

... If the target population is 1,000 employees in nine organizations, then the sample size is 278 from the nine organizations. Write the name of each of the nine organizations on a separate card, give each organization a number, then place all the cards in a box. Draw out the first card and put a tally mark by t ...

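The excerpt does not say how the figure of 278 was obtained. One widely used formula that reproduces it is the Krejcie and Morgan finite-population formula. It takes χ² = 3.841 (1 degree of freedom, 95% confidence), an assumed population proportion P = 0.5, and a margin of error d = 0.05. Treat this as one plausible source of the number, not necessarily the method the text uses.

```python
import math

def krejcie_morgan_sample_size(N, chi2=3.841, P=0.5, d=0.05):
    """Required sample size s for a finite population of size N.

    s = chi2 * N * P * (1 - P) / (d**2 * (N - 1) + chi2 * P * (1 - P)),
    rounded up to the next whole person.
    """
    numerator = chi2 * N * P * (1 - P)
    denominator = d ** 2 * (N - 1) + chi2 * P * (1 - P)
    return math.ceil(numerator / denominator)

print(krejcie_morgan_sample_size(1000))  # 278
```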

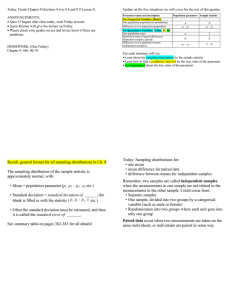

Recall, general format for all sampling distributions in Ch. 9: The

... differences, and for differences in means for independent samples. We need to learn to distinguish between these two situations. Notation for paired differences:
• d_i = difference in the two measurements for individual i = 1, 2, ..., n
• µ_d = mean for the population of differences, if all possible pairs ...

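For paired data, each individual contributes one difference d_i, and inference uses the single list of differences. The sketch below computes the d_i, their sample mean d̄ (the estimate of µ_d), the sample SD s_d, and the standard error s_d/√n. It assumes d_i = second measurement − first measurement; the excerpt does not fix the direction. The data and function name are hypothetical.

```python
import math
import statistics

def paired_summary(first, second):
    """Return (d, d_bar, s_d, se) for paired measurements.

    Assumption: d_i = second[i] - first[i] for the same individual i.
    """
    if len(first) != len(second):
        raise ValueError("paired data must have the same length")
    d = [b - a for a, b in zip(first, second)]
    n = len(d)
    d_bar = statistics.mean(d)       # estimates mu_d
    s_d = statistics.stdev(d)        # SD of the differences (n - 1 denominator)
    se = s_d / math.sqrt(n)          # standard error of d_bar
    return d, d_bar, s_d, se

# Hypothetical before/after scores for three individuals
print(paired_summary([10, 12, 9], [12, 15, 9]))
```

With independent samples there is no natural pairing, so the two groups would be summarized separately instead.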

Today: Finish Chapter 9 (Sections 9.6 to 9.8 and 9.9 Lesson 3

... blank is filled in with the statistic (p̂, p̂₁ − p̂₂, x̄, etc.). • Often the standard deviation must be estimated, and then it is called the standard error of _______. See the summary table on pages 382-383 for all details! ...

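As a sketch, here are the standard textbook standard errors for the three statistics named above. Each one replaces unknown population values with sample estimates:

- SE(p̂) = sqrt(p̂(1 − p̂)/n)
- SE(p̂₁ − p̂₂) = sqrt(p̂₁(1 − p̂₁)/n₁ + p̂₂(1 − p̂₂)/n₂) for independent samples
- SE(x̄) = s/√n

The function names are hypothetical. The summary table cited in the excerpt remains the authoritative reference.

```python
import math
import statistics

def se_proportion(p_hat, n):
    """Standard error of a sample proportion."""
    return math.sqrt(p_hat * (1 - p_hat) / n)

def se_diff_proportions(p1, n1, p2, n2):
    """Standard error of p1_hat - p2_hat for two independent samples."""
    return math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)

def se_mean(sample):
    """Standard error of the sample mean, using the sample SD s."""
    return statistics.stdev(sample) / math.sqrt(len(sample))

print(se_proportion(0.5, 100))               # about 0.05
print(se_diff_proportions(0.6, 200, 0.5, 150))
print(se_mean([2.0, 4.0, 6.0]))
```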

CRYSTAL BALL ARTICLES Simulation Techniques for Risk

... In order to describe the distribution types of the "uncertain" variables in our model, we need additional data about the uncertain variables. There are several sources for this additional data: Analysis of Historical Data: If an organization has historical data describing an uncertain variable, a si ...

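The sketch below shows one way to use historical data in a simulation. It fits a distribution to past observations of an uncertain variable, then draws Monte Carlo samples from the fit. It assumes a normal distribution, which is a simplification. It does not reproduce Crystal Ball's own distribution-fitting features. The data values and function name are hypothetical.

```python
import statistics
from statistics import NormalDist, quantiles

def simulate_uncertain_variable(history, trials=10_000, seed=42):
    """Fit a normal distribution to historical data and sample from it.

    Assumption: the uncertain variable is roughly normal. Returns the fitted
    distribution, the simulated draws, and the 9 decile cut points.
    """
    fitted = NormalDist.from_samples(history)   # mean and sample SD of history
    draws = fitted.samples(trials, seed=seed)   # Monte Carlo draws
    deciles = quantiles(draws, n=10)            # 10th, 20th, ..., 90th percentiles
    return fitted, draws, deciles

# Hypothetical historical costs for one uncertain input
historical_costs = [102.0, 98.5, 110.2, 95.0, 104.8, 99.9, 107.3]
fitted, draws, deciles = simulate_uncertain_variable(historical_costs)
print(fitted.mean, fitted.stdev)
print("10th / 50th / 90th percentile:", deciles[0], deciles[4], deciles[8])
```

In practice, you would compare several candidate distributions against the historical data before choosing one.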