Bayesian Statistics Problems 2

1. It is believed that the number of

... the posterior distribution of θ and find its mean and variance.

2. A coin is known to be biased. The probability that it lands heads when tossed is θ. The coin is tossed successively until the first tail is seen. Let x be the number of heads before the first tail.
(a) Show that the resulting geometr ...
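The setup of problem 2 already fixes the likelihood. Seeing x heads (each with probability θ) followed by one tail (probability 1 − θ) gives the geometric form P(X = x | θ) = θ^x (1 − θ), for x = 0, 1, 2, .... The prior for θ is cut off in this excerpt, so the sketch below *assumes* a Beta(a, b) prior. This prior is conjugate here: multiplying θ^(a−1)(1 − θ)^(b−1) by θ^x (1 − θ) gives a Beta(a + x, b + 1) posterior. Its mean is a/(a + b) and its variance is ab/((a + b)^2 (a + b + 1)), with the posterior parameters substituted. The function names and the numbers in the example (uniform prior, x = 3) are hypothetical and for illustration only.

```python
from fractions import Fraction


def geometric_likelihood(theta, x):
    """P(X = x | theta): x heads (prob. theta each), then one tail (prob. 1 - theta)."""
    return theta ** x * (1 - theta)


def beta_posterior(a, b, x):
    """Assumed Beta(a, b) prior times theta^x (1 - theta) -> Beta(a + x, b + 1)."""
    return a + x, b + 1


def beta_mean_var(a, b):
    """Exact mean and variance of a Beta(a, b) distribution, as Fractions."""
    a, b = Fraction(a), Fraction(b)
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, var


# Hypothetical example: uniform prior Beta(1, 1), x = 3 heads before the first tail.
a_post, b_post = beta_posterior(1, 1, 3)
mean, var = beta_mean_var(a_post, b_post)
print(a_post, b_post, mean, var)  # prints: 4 2 2/3 2/63

# Numerical cross-check: midpoint-rule posterior mean on a grid, uniform prior assumed.
n = 10_000
grid = [(i + 0.5) / n for i in range(n)]
weights = [geometric_likelihood(t, 3) for t in grid]
grid_mean = sum(t * w for t, w in zip(grid, weights)) / sum(weights)
print(round(grid_mean, 4))
```

The grid check is independent of the conjugacy algebra, so agreement between `grid_mean` and the exact 2/3 is a quick sanity test of the Beta(a + x, b + 1) update.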


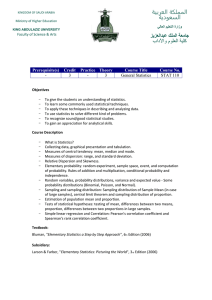

King Abdulaziz University

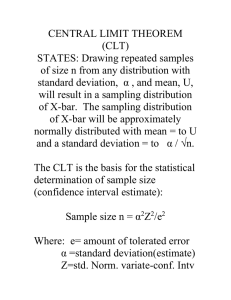

... Collecting data, graphical presentation and tabulation. Measures of central tendency: mean, median and mode. Measures of dispersion: range and standard deviation. Relative dispersion and skewness. Elementary probability: random experiment, sample space, event, and computation of probability. Rules ...
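The descriptive measures and the classical probability rule in this outline can be sketched with Python's standard `statistics` module. The data set is hypothetical. The outline does not say which relative-dispersion and skewness measures are meant, so this sketch *assumes* two common textbook choices: the coefficient of variation s / x̄, and Pearson's second skewness coefficient 3(x̄ − median) / s. For probability, it assumes equally likely outcomes, so P(E) = |E| / |S|. The example uses two fair dice.

```python
import statistics
from fractions import Fraction
from itertools import product

# Hypothetical sample, invented for illustration only.
data = [2, 3, 3, 5, 7, 10]

# Measures of central tendency.
xbar = statistics.mean(data)      # 30 / 6 = 5
median = statistics.median(data)  # (3 + 5) / 2 = 4.0 (even n: average of middle two)
mode = statistics.mode(data)      # 3 (most frequent value)

# Measures of dispersion.
data_range = max(data) - min(data)  # 10 - 2 = 8
s = statistics.stdev(data)          # sample standard deviation, divisor n - 1

# Relative dispersion and skewness (assumed definitions, see lead-in).
cv = s / xbar                          # coefficient of variation
pearson_skew = 3 * (xbar - median) / s  # > 0 suggests right (positive) skew

print(xbar, median, mode, data_range, round(s, 4), round(cv, 4), round(pearson_skew, 4))

# Elementary probability: sample space of two fair dice, event "sum is 7".
sample_space = list(product(range(1, 7), repeat=2))  # 36 equally likely outcomes
event = [o for o in sample_space if sum(o) == 7]     # 6 favourable outcomes
p_seven = Fraction(len(event), len(sample_space))
print(p_seven)  # prints: 1/6
```

Using `statistics.stdev` (divisor n − 1) rather than `statistics.pstdev` (divisor n) is itself a choice. Courses differ on which one "standard deviation" refers to.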
