Problem Set 2 - Massachusetts Institute of Technology

... (due in class, 23-Sep-10) 1. Density matrices. A density matrix (also sometimes known as a density operator) is a representation of statistical mixtures of quantum states. This exercise introduces some examples of density matrices, and explores some of their properties. (a) Let |ψ⟩ = a|0⟩ + b|1⟩ be ...
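As a rough illustration of the idea in this snippet, the sketch below builds the density matrix of a pure qubit state and of an equal statistical mixture, then compares Tr(ρ²). The amplitudes `a` and `b` and the helper `purity` are assumptions chosen for the example; they do not come from the problem set, whose part (a) is truncated here.

```python
# Illustrative amplitudes (an assumption, not from the problem set);
# they satisfy |a|^2 + |b|^2 = 1.
a, b = 0.6, 0.8j
psi = [a, b]  # |psi> = a|0> + b|1>

# Pure-state density matrix: rho[i][j] = psi[i] * conj(psi[j])
rho = [[psi[i] * psi[j].conjugate() for j in range(2)] for i in range(2)]

# Equal statistical mixture of |0> and |1>
mixed = [[0.5, 0.0], [0.0, 0.5]]

def purity(r):
    """Return Tr(r^2) for a 2x2 matrix given as nested lists."""
    sq = [[sum(r[i][k] * r[k][j] for k in range(2)) for j in range(2)]
          for i in range(2)]
    return (sq[0][0] + sq[1][1]).real

trace = (rho[0][0] + rho[1][1]).real
print(trace, purity(rho), purity(mixed))
```

Both matrices have unit trace, but only the pure state gives Tr(ρ²) = 1; the mixture gives a smaller value.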

LOYOLA COLLEGE (AUTONOMOUS), CHENNAI – 600 034 1

... 11. If A is an n × m matrix such that AB and BA are both defined, show that B is an m × n matrix. 12. If A is any square matrix, then show that A + A' is symmetric and A − A' is skew-symmetric. 13. Prove that every invertible matrix possesses a unique inverse. ...
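A quick numerical check of item 12 can make the claim concrete before proving it. The sketch below uses an arbitrary example matrix (an assumption for illustration) and plain nested lists; it checks one instance and is not a proof.

```python
def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*m)]

def combine(x, y, sign=1):
    """Return x + sign * y, entry by entry."""
    return [[p + sign * q for p, q in zip(rx, ry)] for rx, ry in zip(x, y)]

A = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]  # arbitrary square matrix
At = transpose(A)

S = combine(A, At)        # A + A'
K = combine(A, At, -1)    # A - A'

is_symmetric = transpose(S) == S
is_skew = transpose(K) == [[-v for v in row] for row in K]
print(is_symmetric, is_skew)
```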

(pdf)

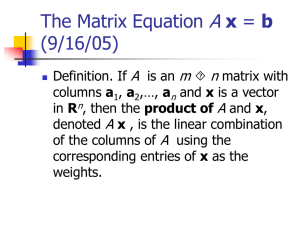

... List the free variables for the system Ax = b and find a basis for the vector space null(A). Find rank(A). 3. Explain why the rows of a 3 × 5 matrix have to be linearly dependent. 4. Let A be a matrix which is not the identity and assume that A^2 = A. By contradiction show that A is not invertible ...

Orbital measures and spline functions Jacques Faraut

... to Baryshnikov. More generally, the eigenvalues of the projection of X on the k × k upper left corner (1 ≤ k ≤ n − 1) are distributed according to a determinantal formula due to Olshanski. In particular (for k = 1) the entry X_11 is distributed according to a probability measure on R, the density of wh ...

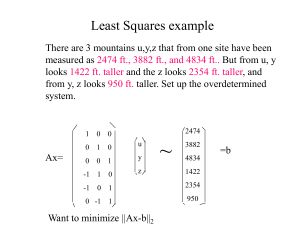

leastsquares

...
• Does not require decomposition of the matrix
• Good for large sparse problems like PET
• Iterative method that requires matrix-vector multiplication by A and A^T each iteration
• In exact arithmetic, for n variables, guaranteed to converge in n iterations, so 2 iterations for the exponential fit and 3 iterati ...
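The snippet does not name the method it describes. One algorithm that matches the bullets (no factorization, one product with A and one with A^T per iteration, termination in n steps in exact arithmetic) is conjugate gradient applied to the normal equations, often called CGLS. The sketch below assumes that is the intended method and uses `fractions.Fraction` so the arithmetic really is exact; the matrix and right-hand side are made-up data.

```python
from fractions import Fraction

def matvec(M, v):
    """Compute M v for a matrix stored as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in M]

def rmatvec(M, v):
    """Compute M^T v without forming the transpose."""
    return [sum(M[i][j] * v[i] for i in range(len(M)))
            for j in range(len(M[0]))]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cgls(A, b):
    """Least-squares solve of A x ~ b by CG on the normal equations.

    Assumes A has full column rank. Runs at most n iterations, where n is
    the number of unknowns.
    """
    n = len(A[0])
    x = [Fraction(0)] * n
    r = [Fraction(v) for v in b]   # residual b - A x
    s = rmatvec(A, r)              # normal-equation residual A^T r
    p = list(s)
    gamma = dot(s, s)
    iterations = 0
    while gamma != 0 and iterations < n:
        q = matvec(A, p)
        alpha = gamma / dot(q, q)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, q)]
        s = rmatvec(A, r)
        gamma_new = dot(s, s)
        p = [si + (gamma_new / gamma) * pi for si, pi in zip(s, p)]
        gamma = gamma_new
        iterations += 1
    return x, iterations

# Made-up example: fit a line c0 + c1*t through (0, 1), (1, 2), (2, 4).
A = [[1, 0], [1, 1], [1, 2]]
b = [1, 2, 4]
x, iterations = cgls(A, b)
print(x, iterations)
```

With two unknowns, the loop stops after two iterations with the exact least-squares solution. In floating point the same loop would normally use a tolerance on `gamma` instead of an exact zero test.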

Solution of Equation AX + XB = C by Inversion of an M × M or N × N Matrix

... for X, where X and C are M × N real matrices, A is an M × M real matrix, and B is an N × N real matrix. A familiar example occurs in the Lyapunov theory of stability [1], [2], [3] with B = A^T. It also arises in the theory of structures [4]. Using the notation P × Q to denote the Kronecker product ( ...
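For orientation, the sketch below shows the standard Kronecker-product formulation that the snippet begins to set up: stacking the entries of X row by row turns AX + XB = C into an MN × MN linear system. This is not the M × M or N × N inversion method the title refers to; the function names and the test matrices are assumptions for illustration, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction

def solve(K, rhs):
    """Gauss-Jordan elimination; raises StopIteration if K is singular."""
    n = len(K)
    aug = [row[:] + [r] for row, r in zip(K, rhs)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        aug[col] = [v / aug[col][col] for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [v - f * p for v, p in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]

def sylvester_kron(A, B, C):
    """Solve AX + XB = C via (A kron I_N + I_M kron B^T) vec(X) = vec(C)."""
    M, N = len(A), len(B)
    K = [[Fraction(0)] * (M * N) for _ in range(M * N)]
    for i in range(M):
        for j in range(N):
            row = i * N + j              # equation for entry (i, j)
            for k in range(M):
                K[row][k * N + j] += A[i][k]   # from (AX)[i][j]
            for l in range(N):
                K[row][i * N + l] += B[l][j]   # from (XB)[i][j]
    rhs = [Fraction(C[i][j]) for i in range(M) for j in range(N)]
    x = solve(K, rhs)
    return [x[i * N:(i + 1) * N] for i in range(M)]

A = [[1, 2], [0, 3]]
B = [[4, 0], [1, 5]]
C = [[9, -2], [13, -8]]   # built so that X = [[1, 0], [2, -1]]
X = sylvester_kron(A, B, C)
print(X)
```

The system has a unique solution only when A and −B share no eigenvalue, which holds for the example above. The cost of this route grows quickly with MN, which is the motivation for methods that work with M × M or N × N matrices instead.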

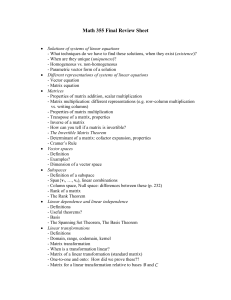

Review Sheet

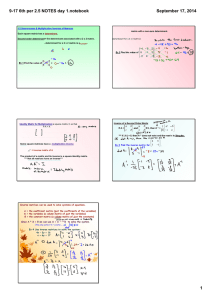

...
- What techniques do we have to find these solutions, when they exist (existence)?
- When are they unique (uniqueness)?
- Homogeneous vs. non-homogeneous
- Parametric vector form of a solution

Different representations of systems of linear equations
- Vector equation
- Matrix equation

Matrices
- Pro ...

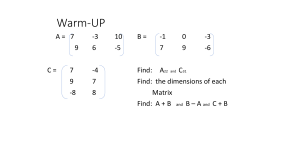

Multiplicative Inverses of Matrices and Matrix Equations 1. Find the

... 5. Find A^-1 by forming [A|I] and then using row operations to obtain [I|B], where A^-1 = B. Check that AA^-1 = I and A^-1A = I ...
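The procedure in item 5 can be sketched in a few lines. The version below is one possible implementation using exact fractions; the function name and the 2 × 2 example matrix are assumptions, not taken from the worksheet.

```python
from fractions import Fraction

def inverse_by_row_reduction(A):
    """Row-reduce [A | I] to [I | B] and return B = A^-1."""
    n = len(A)
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(A)]
    for col in range(n):
        # Find a row at or below the diagonal with a nonzero pivot.
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pv = aug[col][col]
        aug[col] = [v / pv for v in aug[col]]          # scale pivot row to 1
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)]
            for row in X]

A = [[2, 1], [5, 3]]
A_inv = inverse_by_row_reduction(A)
identity = [[1, 0], [0, 1]]
print(A_inv, matmul(A, A_inv) == identity, matmul(A_inv, A) == identity)
```

The last line performs the check the worksheet asks for: both products should equal the identity.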

Worksheet 9 - Midterm 1 Review Math 54, GSI

... 12. True or false: A^T A = A A^T for every n × n matrix A. Justify your answer. 13. True or false: Every subspace U ⊂ R^n is the null space (same as kernel) of a linear transformation T : R^n → R^k for some k. Justify your answer. 14. An n × n matrix is said to be symmetric if A^T = A and anti-symmetric if ...
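For a statement like item 12, trying a small matrix is a reasonable first step before writing a justification. The sketch below computes both products for one 2 × 2 matrix chosen for illustration (an assumption, not part of the worksheet).

```python
def transpose(m):
    return [list(row) for row in zip(*m)]

def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)]
            for row in X]

A = [[1, 1], [0, 1]]   # a small upper triangular test matrix
At = transpose(A)

AtA = matmul(At, A)
AAt = matmul(A, At)
print(AtA, AAt, AtA == AAt)
```

A single matrix for which the two products differ is enough to settle a "for every" claim.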

Matrices Basic Operations Notes Jan 25

... • When businesses deal with sales, there is a need to organize information. For instance, let's say Karadimos King is a fast-food restaurant that made the following number of sales: • On Monday, Karadimos King sold 35 hamburgers, 50 sodas, and 45 fries. On Tuesday, it sold 120 sodas, 56 fries, and 4 ...

Let v denote a column vector of the nilpotent matrix P_i(A)(A − λ_i I)^(n_i − 1)

... Let v denote a column vector of the nilpotent matrix P_i(A)(A − λ_i I)^(n_i − 1), where n_i is the so-called nilpotency. Theorem 3 in [1] shows that A P_i(A)(A − λ_i I)^(n_i − 1) = λ_i P_i(A)(A − λ_i I)^(n_i − 1), which means a column vector v of the matrix is an eigenvector corresponding to the eigenvalue λ_i. The symb ...
