Linear Algebra 2270 Homework 9 Problems:

... iv. By performing matrix-matrix multiplication find (B ⋅ C) and then A ⋅ (B ⋅ C). Compare it with 1(c)iii. ...
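
Matrix multiplication is associative, so A ⋅ (B ⋅ C) always equals (A ⋅ B) ⋅ C. The excerpt does not give the homework's matrices or say what part 1(c)iii computes, so the 2 × 2 integer matrices below are hypothetical stand-ins. `matmul` is a small helper written for this sketch, not a library function.

```python
def matmul(X, Y):
    """Return the product X·Y of matrices stored as lists of rows."""
    inner = len(Y)
    if any(len(row) != inner for row in X):
        raise ValueError("inner dimensions do not agree")
    cols = len(Y[0])
    return [[sum(X[i][k] * Y[k][j] for k in range(inner)) for j in range(cols)]
            for i in range(len(X))]

# Hypothetical stand-ins for the homework's A, B, C.
A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[2, 0], [1, 3]]

BC = matmul(B, C)                 # B·C
left = matmul(A, BC)              # A·(B·C)
right = matmul(matmul(A, B), C)   # (A·B)·C

print(BC)             # [[1, 3], [2, 0]]
print(left)           # [[5, 3], [11, 9]]
print(left == right)  # True
```

Integer entries keep the comparison exact, so `==` is safe here; with floating-point entries, compare within a tolerance instead.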

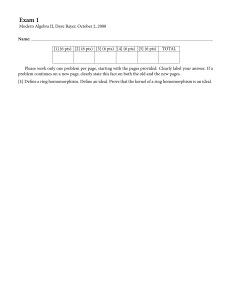

F08 Exam 1

... Please work only one problem per page, starting with the pages provided. Clearly label your answer. If a problem continues on a new page, clearly state this fact on both the old and the new pages. [1] Define a ring homomorphism. Define an ideal. Prove that the kernel of a ring homomorphism is an ide ...

USE OF LINEAR ALGEBRA I Math 21b, O. Knill

... could be used, for example, for cryptological purposes. Freely available quantum computer language (QCL) interpreters can simulate quantum computers with an arbitrary number of qubits. ...

test 2

... (a) If A is a 3 × 3 matrix and $\{\vec v_1, \vec v_2, \vec v_3\}$ is a linearly dependent set of vectors in $\mathbb{R}^3$, then $\{A\vec v_1, A\vec v_2, A\vec v_3\}$ is also a linearly dependent set. (b) If A is a 3 × 3 invertible matrix and $\{\vec v_1, \vec v_2, \vec v_3\}$ is a linearly independent set of vectors in $\mathbb{R}^3$, then $\{A\vec v_1, A\vec v_2, A\vec v_3\}$ is al ...
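
A sketch of why statement (a) holds: linearity of A carries a nontrivial dependence relation among the $\vec v_i$ to one among the $A\vec v_i$, with the same coefficients.

```latex
c_1\vec v_1 + c_2\vec v_2 + c_3\vec v_3 = \vec 0,\quad (c_1, c_2, c_3) \neq (0, 0, 0)
\;\Longrightarrow\;
c_1 A\vec v_1 + c_2 A\vec v_2 + c_3 A\vec v_3
  = A\,(c_1\vec v_1 + c_2\vec v_2 + c_3\vec v_3) = A\vec 0 = \vec 0.
```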

54 Quiz 3 Solutions GSI: Morgan Weiler Problem 0 (1 pt/ea). (a

... Solution: This problem is going to be taken off the quiz, because I did not specify the dimensions of A and B. (b). True or false: if A and B are n × n matrices and AB is invertible, A is invertible. Solution: True – this was a homework problem. (c). True or false: if A is invertible, then A is a pr ...
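
One short justification of statement (b) uses the multiplicativity of the determinant. The quiz solution only cites a homework problem, so this is not necessarily the intended argument. The square (n × n) hypothesis is what makes the determinant available.

```latex
\det(AB) = \det(A)\,\det(B) \neq 0
\;\Longrightarrow\; \det(A) \neq 0
\;\Longrightarrow\; A \text{ is invertible.}
```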

9.3. Infinite Series Of Matrices. Norms Of Matrices

... can construct a matrix-valued function $f(A)$ by replacing x with A in $f(x)$. Hopefully, useful characteristics of $f(x)$ would be carried over to $f(A)$. For example, we expect the exponential $e^{A}$ to possess the laws of exponents, namely, $e^{(t+s)A} = e^{tA}e^{sA}$ for all scalars s, t. ...
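
The law of exponents can be checked numerically by truncating the power series $e^{A} = \sum_k A^k/k!$. This is a minimal sketch in plain Python. The test matrix, the number of terms, and the helper names are assumptions chosen for illustration. For $A = \begin{pmatrix}0 & 1\\ -1 & 0\end{pmatrix}$ we have $A^2 = -I$, so $e^{\theta A}$ is the rotation-type matrix with entries $\cos\theta$ and $\pm\sin\theta$, which gives a second check.

```python
import math

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def scaled(c, A):
    return [[c * x for x in row] for row in A]

def expm_series(A, terms=40):
    """Partial sum I + A + A^2/2! + ... + A^(terms-1)/(terms-1)!."""
    n = len(A)
    result = identity(n)
    term = identity(n)  # current term A^k / k!, starting at k = 0
    for k in range(1, terms):
        term = [[x / k for x in row] for row in matmul(term, A)]
        result = [[r + t for r, t in zip(rr, tr)] for rr, tr in zip(result, term)]
    return result

A = [[0.0, 1.0], [-1.0, 0.0]]  # A^2 = -I
s, t = 0.3, 0.5

lhs = expm_series(scaled(t + s, A))                                 # e^{(t+s)A}
rhs = matmul(expm_series(scaled(t, A)), expm_series(scaled(s, A)))  # e^{tA} e^{sA}
gap = max(abs(a - b) for ra, rb in zip(lhs, rhs) for a, b in zip(ra, rb))

print(gap < 1e-12)                                 # True
print(abs(lhs[0][0] - math.cos(t + s)) < 1e-12)    # True
```

The identity works here because $tA$ and $sA$ commute. For two matrices B, C that do not commute, $e^{B+C} = e^{B}e^{C}$ can fail.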

Document

... An m × 1 vector p is said to be a normalized vector or a unit vector if $p^\top p = 1$. The m × 1 vectors $p_1, p_2, \ldots, p_n$, where n ≤ m, are said to be orthogonal if $p_i^\top p_j = 0$ for all $i \neq j$. If n orthogonal vectors are also normalized, they are said to be orthonormal ...
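
These definitions translate directly into a check on a list of vectors. This sketch compares against 0 and 1 within a tolerance because floating-point entries such as $1/\sqrt{2}$ are inexact. The helper names and the example vectors are assumptions made for illustration.

```python
import math

def dot(p, q):
    """p'q for vectors stored as lists."""
    return sum(a * b for a, b in zip(p, q))

def is_orthogonal(vectors, tol=1e-12):
    """True if p_i'p_j = 0 (within tol) for every pair i != j."""
    return all(abs(dot(vectors[i], vectors[j])) <= tol
               for i in range(len(vectors)) for j in range(i + 1, len(vectors)))

def is_orthonormal(vectors, tol=1e-12):
    """True if the vectors are orthogonal and each satisfies p'p = 1 (within tol)."""
    return is_orthogonal(vectors, tol) and all(abs(dot(p, p) - 1.0) <= tol for p in vectors)

r = 1 / math.sqrt(2)
P = [[r, r, 0.0], [r, -r, 0.0], [0.0, 0.0, 1.0]]  # three 3 x 1 vectors
Q = [[1.0, 1.0, 0.0], [1.0, -1.0, 0.0]]           # orthogonal but not normalized

print(is_orthonormal(P))                    # True
print(is_orthogonal(Q), is_orthonormal(Q))  # True False
```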

Multiplication of Matrices

... and $x = C_{\bullet,k}$ is a column vector. On the other hand, by the first definition of matrix multiplication, $((AB)C)_{ik} = ((AB)_{i,\bullet})(C_{\bullet,k})$. By the third definition of matrix multiplication, $(AB)_{i,\bullet} = (A_{i,\bullet})B$. So $((AB)C)_{ik} = ((A_{i,\bullet})B)(C_{\bullet,k}) = (pB)x$. However, in the previous section we proved that if p is a row v ...
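
For comparison, the same identity can be verified entry by entry with index sums. This is a standard alternative route, not the row-times-matrix argument used in the excerpt. The only step beyond the definition is swapping two finite sums.

```latex
\begin{aligned}
((AB)C)_{ik} &= \sum_{j} (AB)_{ij}\, C_{jk}
              = \sum_{j} \sum_{l} A_{il} B_{lj} C_{jk} \\
             &= \sum_{l} A_{il} \Bigl( \sum_{j} B_{lj} C_{jk} \Bigr)
              = \sum_{l} A_{il}\, (BC)_{lk}
              = (A(BC))_{ik}.
\end{aligned}
```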

A recursive parameterisation of unitary matrices

... where A(2) is as defined in Eq. (23). Evidently, depending on the application one has in mind, some choices may be more convenient than others. This is demonstrated in Refs. [2] and [3], which deal with the so-called quark and lepton mixing matrices. The essential point is that V(3) is described in a r ...

Non-negative matrix factorization

Non-negative matrix factorization (NMF), also called non-negative matrix approximation, is a group of algorithms in multivariate analysis and linear algebra in which a matrix V is factorized into (usually) two matrices W and H, with the property that all three matrices have no negative elements. This non-negativity makes the resulting matrices easier to inspect. Also, in applications such as processing of audio spectrograms, non-negativity is inherent to the data being considered. Since the problem is not exactly solvable in general, it is commonly approximated numerically. NMF finds applications in such fields as computer vision, document clustering, chemometrics, audio signal processing and recommender systems.
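
The text says NMF is approximated numerically but names no algorithm. One widely used choice is the multiplicative update rules of Lee and Seung for the Frobenius loss $\|V - WH\|_F$, sketched below in plain Python. This is an illustration, not the method of any particular library. The 3 × 3 matrix V, the rank, the iteration count, the `eps` guard, and all helper names are assumptions. Each update multiplies an entry by a ratio of non-negative quantities, so W and H stay non-negative.

```python
import random

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def transpose(X):
    return [list(col) for col in zip(*X)]

def frobenius_error(V, W, H):
    """||V - WH||_F."""
    WH = matmul(W, H)
    return sum((v - w) ** 2 for rv, rw in zip(V, WH) for v, w in zip(rv, rw)) ** 0.5

def nmf(V, rank, iterations=500, seed=0, eps=1e-12):
    """Approximate V (m x n, entries >= 0) by W (m x rank) times H (rank x n)."""
    rng = random.Random(seed)
    m, n = len(V), len(V[0])
    W = [[rng.random() for _ in range(rank)] for _ in range(m)]
    H = [[rng.random() for _ in range(n)] for _ in range(rank)]
    for _ in range(iterations):
        # H <- H * (W'V) / (W'WH), elementwise; eps avoids division by zero
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[H[a][j] * num[a][j] / (den[a][j] + eps) for j in range(n)] for a in range(rank)]
        # W <- W * (VH') / (WHH'), elementwise
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
        W = [[W[i][a] * num[i][a] / (den[i][a] + eps) for a in range(rank)] for i in range(m)]
    return W, H

# Hypothetical data: V = [[1,0],[0,1],[1,1]] times [[1,2,0],[0,1,3]], so rank 2.
V = [[1.0, 2.0, 0.0],
     [0.0, 1.0, 3.0],
     [1.0, 3.0, 3.0]]

W_init, H_init = nmf(V, rank=2, iterations=0)  # the random starting point
W, H = nmf(V, rank=2)
print(frobenius_error(V, W_init, H_init), frobenius_error(V, W, H))
```

The update rules do not increase the Frobenius error, but they can converge slowly or to a local minimum. That is one reason several starting points are often tried in practice.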