Chapter 1 Linear Equations and Graphs

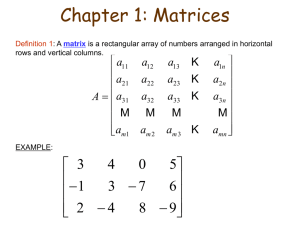

... • Two matrices are equal if they are the same size and their corresponding elements are equal. • The sum of two matrices of the same size is the matrix with elements which are the sum of the corresponding elements of the two given matrices. • The negative of a matrix is the matrix with elements that ...

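The three definitions in the excerpt above can be sketched in a few lines of Python. This is only an illustration: it assumes a matrix is stored as a list of rows, and the helper names `same_size`, `mat_equal`, `mat_add`, and `mat_neg` are hypothetical. Because the excerpt cuts off mid-definition, the negative is taken in the standard sense (every element negated).

```python
# Matrices are stored as lists of rows (an illustrative choice, not from the source).

def same_size(a, b):
    """True when a and b have the same number of rows and columns."""
    return len(a) == len(b) and all(len(ra) == len(rb) for ra, rb in zip(a, b))

def mat_equal(a, b):
    """Equal when the sizes match and all corresponding elements are equal."""
    return same_size(a, b) and all(ra == rb for ra, rb in zip(a, b))

def mat_add(a, b):
    """Element-wise sum; only defined for matrices of the same size."""
    if not same_size(a, b):
        raise ValueError("matrices must have the same size")
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def mat_neg(a):
    """Negative of a matrix: negate every element (standard definition)."""
    return [[-x for x in row] for row in a]

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
S = mat_add(A, B)            # element-wise sum of A and B
Z = mat_add(A, mat_neg(A))   # adding the negative gives the zero matrix
```

Raising an error on mismatched sizes mirrors the requirement that a sum is only defined for two matrices of the same size.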

1. (a) Solve the system: x1 + x2 − x3 − 2x4 + x5 = 1, 2x1 + x2 + x3 +

... 16. Suppose that T : V1 → V2 is a one-to-one linear transformation and suppose that H is a nonzero subspace of the vector space V1 . Then T (H), the set of all images of vectors in H under T , is a subspace of V2 . (a) Define what it means for a set B = {v1 , v2 , ..., vn } to be a basis for H. (b) ...


Eigenvectors

... 1. Reminder: Eigenvectors. A vector x that is invariant, up to a scaling by λ, under multiplication by a matrix A is called an eigenvector of A with eigenvalue λ: ...

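A small numerical check may make the eigenvector reminder concrete. The sketch below assumes the standard defining relation A x = λ x for a nonzero vector x. The matrix and the helper names `mat_vec` and `is_eigenpair` are illustrative and do not come from the excerpt.

```python
def mat_vec(a, x):
    """Multiply matrix a (list of rows) by vector x."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]

def is_eigenpair(a, x, lam, tol=1e-9):
    """Check A x == lam * x within tol; the zero vector is excluded."""
    if all(abs(xi) <= tol for xi in x):
        return False
    ax = mat_vec(a, x)
    return all(abs(axi - lam * xi) <= tol for axi, xi in zip(ax, x))

# Illustrative symmetric matrix (an assumption, not taken from the source).
A = [[2, 1],
     [1, 2]]

check_a = is_eigenpair(A, [1, 1], 3)    # A [1, 1]  = [3, 3]  = 3 * [1, 1]
check_b = is_eigenpair(A, [1, -1], 1)   # A [1, -1] = [1, -1] = 1 * [1, -1]
check_c = is_eigenpair(A, [1, 0], 2)    # A [1, 0]  = [2, 1], not a multiple of [1, 0]
```

The first two checks succeed because multiplying by A only rescales those vectors. The third fails because A changes the direction of [1, 0].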

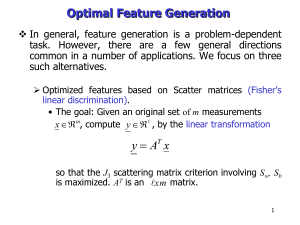

Feature Generation

... • For each class, estimate the autocorrelation matrix Ri and compute its m largest eigenvalues. Form Ai by using the respective eigenvectors as columns. • Classify x to the class ωi for which the norm of the subspace projection is maximum ...

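The classification step above can be sketched as follows. To stay short, the example skips estimating Ri and its eigenvectors: it assumes each class already has an orthonormal basis (the columns of Ai), and the bases shown are hand-picked, hypothetical values with m = 1. The names `projection_norm` and `classify` are likewise illustrative.

```python
import math

def projection_norm(basis, x):
    """Norm of the projection of x onto the span of `basis`.

    `basis` is a list of orthonormal vectors (the columns of A_i), so the
    projection norm equals the norm of the coefficient vector A_i^T x.
    """
    coeffs = [sum(bk * xk for bk, xk in zip(b, x)) for b in basis]
    return math.sqrt(sum(c * c for c in coeffs))

def classify(bases, x):
    """Return the class label whose subspace projection of x has maximum norm."""
    return max(bases, key=lambda label: projection_norm(bases[label], x))

s = 1.0 / math.sqrt(2.0)
# Hypothetical orthonormal bases in R^3 with m = 1 (not estimated from data).
bases = {
    "omega_1": [[1.0, 0.0, 0.0]],
    "omega_2": [[0.0, s, s]],
}

label_a = classify(bases, [0.2, 1.0, 0.9])    # lies mostly along [0, s, s]
label_b = classify(bases, [3.0, 0.1, -0.1])   # lies mostly along the first axis
```

In practice each Ai would be built from the eigenvectors of the estimated autocorrelation matrix, as the excerpt describes. Only the final decision rule is shown here.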

Non-negative matrix factorization

Non-negative matrix factorization (NMF), also called non-negative matrix approximation, is a group of algorithms in multivariate analysis and linear algebra in which a matrix V is factorized into (usually) two matrices W and H, with the property that all three matrices have no negative elements. This non-negativity makes the resulting matrices easier to inspect. In some applications, such as the processing of audio spectrograms, non-negativity is also inherent to the data. Since the problem is not exactly solvable in general, it is commonly approximated numerically. NMF finds applications in fields such as computer vision, document clustering, chemometrics, audio signal processing, and recommender systems.
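As a rough illustration of the numerical approximation mentioned above, the sketch below uses the Lee and Seung multiplicative update rules for the squared Frobenius error. This is one common choice and is not named in the text. The function names, the random initialization, the iteration count, and the example matrix are all assumptions made for the example.

```python
import random

def matmul(a, b):
    """Plain matrix product of two lists-of-rows matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(col) for col in zip(*a)]

def frob_error(v, w, h):
    """Squared Frobenius norm of V - W H."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2
               for i in range(len(v)) for j in range(len(v[0])))

def nmf(v, rank, iters=200, seed=0, eps=1e-12):
    """Approximate non-negative V (n x m) by W (n x rank) times H (rank x m)."""
    rng = random.Random(seed)
    n, m = len(v), len(v[0])
    # Strictly positive random start, so the updates keep W and H non-negative.
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(iters):
        # H <- H * (W^T V) / (W^T W H), element-wise
        wt = transpose(w)
        num = matmul(wt, v)
        den = matmul(matmul(wt, w), h)
        h = [[h[a][j] * num[a][j] / (den[a][j] + eps) for j in range(m)]
             for a in range(rank)]
        # W <- W * (V H^T) / (W H H^T), element-wise
        ht = transpose(h)
        num = matmul(v, ht)
        den = matmul(w, matmul(h, ht))
        w = [[w[i][a] * num[i][a] / (den[i][a] + eps) for a in range(rank)]
             for i in range(n)]
    return w, h

# Illustrative non-negative matrix (an assumption, not from the source).
V = [[1.0, 2.0, 3.0],
     [2.0, 4.0, 6.0],
     [1.0, 1.0, 1.0]]
W, H = nmf(V, rank=2)
final_error = frob_error(V, W, H)
```

Because every update multiplies a non-negative entry by a ratio of non-negative quantities, W and H stay non-negative throughout. The small `eps` only guards against division by zero.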

![1. Let A = 1 −1 1 1 0 −1 2 1 1 . a) [2 marks] Find the](http://s1.studyres.com/store/data/005284378_1-9abef9398f6a7d24059a09f56fe1ac13-300x300.png)