

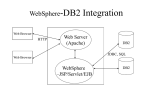

Improving high availability in WebSphere Commerce using DB2 HADR

Xiao Qing (Shawn) Wang, Software Engineer, IBM WebSphere Commerce
Ramiah Tin, Software Architect, IBM WebSphere Commerce

March 28, 2007

© Copyright International Business Machines Corporation 2007. All rights reserved.

Contents

Introduction
Overview
Prerequisites and configuration information
  Knowledge requirement
  Software configuration
  Hardware configuration
  Recommendation
Introduction to topology with HADR and TSA
  High Availability Disaster Recovery
  Tivoli System Automation
  Reliable Scalable Cluster Technology
  Automatic Client Reroute
  Connection pool purge policy in WebSphere Application Server
  Typical WebSphere Commerce environment with HADR and TSA
Setting up the topology
  Installing WebSphere Commerce
  Configuring HADR on a primary/standby database
  Enabling and configuring client reroute in a HADR environment
  Installing Tivoli System Automation
  Defining and administering a TSA cluster
  Configuring and registering instance and HADR with TSA for automatic management
Running WebSphere Commerce stress tests with HADR and TSA
  Normal operations with HADR enabled
  Testing process failure: Standby Instance Failure
  Testing physical failure: Standby system failure
  Testing process failure: Primary instance failure
  Testing physical failure: Primary system failure
  Switching the roles of primary database and standby database: Graceful takeover
  Performance overhead of HADR
  Impact of HADR with NEARSYNC mode
  Failure duration in different disaster situations
Restrictions and recommendations
  Restrictions in this topology
  Recommendations
    Appropriately set your synchronization modes for HADR
    Use the LOGINDEXBUILD database parameter
    Set appropriate BUFFPAGE size to eliminate enough database physical read
    Update C++ library version in AIX
    Keep the database logs in different devices
Conclusion
Resources
About the authors

Introduction

As Internet commerce becomes more popular and important to corporations, WebSphere® Commerce customers are investigating high availability and disaster recovery strategies. They want to ensure that their commerce site is available to users even in cases of disaster, and that valuable data can be recovered with minimal disruption to their site.
WebSphere Commerce runs on top of WebSphere Application Server (Network Deployment Edition), which provides application-level clustering through horizontal and vertical clustering topologies. With redundant Web servers and application servers, and with smart routing, a WebSphere Commerce application already provides high availability at those tiers. To complete the high availability and disaster recovery picture, you also need to consider the database tier. This article describes a feature of IBM® DB2® Universal Database (UDB) for Linux, UNIX, and Windows® v8.2 that achieves that goal.

DB2 UDB v8.2 provides a new feature called High Availability Disaster Recovery (HADR), which supports a configuration where an active primary database processes all user database requests. A standby database server, possibly in a remote location, is kept in sync with the changes that occur in the primary database. If the primary database server fails, the standby server takes over as the active primary database server. Cluster management tools monitor the health of the primary database server and automatically coordinate the takeover process if the primary database server fails.

Overview

This article focuses on an environment that includes:

• WebSphere Commerce
• WebSphere Application Server (Network Deployment Edition)
• Tivoli System Automation
• DB2 UDB v8.2 with the HADR feature

"Setting up the topology" describes the steps for configuring DB2 UDB v8.2 for HADR and Automatic Client Reroute (ACR) with WebSphere Commerce. It also describes the configuration required to use Tivoli System Automation (TSA) to monitor the health of the environment. It also covers how to integrate the automation scripts provided by DB2 v9.1 to coordinate and automate disaster recovery.
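The takeover coordination described above can be pictured as a small decision rule. The following is a minimal, hypothetical sketch. It is not the DB2-supplied TSA automation scripts used later in this article. The function name hadr_action, its "up"/"down" input, and the choice to raise an alert rather than force a takeover outside peer state are all illustrative assumptions. The script only prints the CLP command it would issue. A real cluster manager must also establish quorum, which is the tie-breaker role psvt03 plays in this topology, so that a network partition is not mistaken for a primary failure.

```shell
#!/bin/sh
# Hypothetical sketch of the takeover decision a cluster manager automates for
# an HADR pair. It is NOT the DB2-supplied TSA automation script: it only
# prints the command it would issue, so it is safe to run on any machine.

DB_NAME=${DB_NAME:-rmall}   # database alias used throughout this article

# hadr_action PRIMARY_STATUS STANDBY_STATE
#   PRIMARY_STATUS: "up" or "down", as observed by the (hypothetical) monitor
#   STANDBY_STATE:  HADR state reported on the standby, e.g. "Peer"
hadr_action() {
    primary_status=$1
    standby_state=$2
    if [ "$primary_status" = "up" ]; then
        # Primary is healthy: nothing to coordinate.
        echo "none"
    elif [ "$standby_state" = "Peer" ]; then
        # In peer state the standby has been receiving every log page the
        # primary flushed, so it can take over as the new primary.
        echo "db2 takeover hadr on db $DB_NAME by force"
    else
        # Outside peer state a forced takeover may lose transactions,
        # so this sketch leaves the case to an administrator.
        echo "alert: standby is in state '$standby_state'; manual decision required"
    fi
}

# Example: the primary stops responding while the pair is in peer state.
# With DB_NAME unset, this prints: db2 takeover hadr on db rmall by force
hadr_action down Peer
```

Keeping the decision separate from its execution makes the rule easy to test in isolation. In the topology described here, TSA performs both the failure detection and the execution.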
"Running WebSphere Commerce stress tests with HADR and TSA" describes performance tests that simulate a heavy-load environment. It illustrates the implications of using an HADR environment, and measures how long failover and the resulting errors last. The article also lists recommendations and suggestions for implementing a solution that uses DB2 UDB v8.2 with the HADR feature and ACR capabilities together with WebSphere Commerce.

Throughout this article, there are detailed procedures, sample scripts, and output examples so that you can apply similar techniques in your environment.

This article is intended for:

• Technical architects and software developers deploying WebSphere Commerce for high availability.
• Database administrators managing the database.

Prerequisites and configuration information

The environment described in this article includes the following products:

• WebSphere Commerce v6.0.0.1
• DB2 UDB v8.2.3
• Tivoli System Automation v2.1
• AIX v5.2

Knowledge requirement

This section covers the required background knowledge, as well as the hardware and software configuration. Read this section before installing and configuring the software described in this article.
• Basic knowledge of WebSphere Commerce: http://publib.boulder.ibm.com/infocenter/wchelp/v6r0m0/index.jsp
• Basic knowledge of DB2 UDB Version 8.2, HADR, and ACR:
  Information about DB2 UDB Version 8.2: http://publib.boulder.ibm.com/infocenter/db2luw/v8/index.jsp
  Information about HADR: http://publib.boulder.ibm.com/infocenter/db2luw/v8//index.jsp?topic=/com.ibm.db2.udb.doc/core/c0011585.htm
• Basic knowledge of TSA cluster manager software:
  Information about TSA V2.1: http://publib.boulder.ibm.com/tividd/td/IBMTivoliSystemAutomationforMultiplatforms2.1.html
  Information about RSCT: http://publib.boulder.ibm.com/epubs/pdf/bl5adm11.pdf
• Basic knowledge of the AIX operating system: http://publib.boulder.ibm.com/infocenter/pseries/v5r3/index.jsp

Software configuration

The specific versions of the software described in this article are:

• Operating system: AIX v5.2, maintenance level 5 (5200-05)
• WebSphere Commerce: WebSphere Commerce v6.0.0.1
• WebSphere Application Server: Network Deployment Edition v6.0.2.5
• DB2 UDB: DB2 UDB v8.2.3 (64-bit)
• Tivoli product: Tivoli System Automation v2.1
• RSCT: RSCT v2.3.7.1

Hardware configuration

The 2-tier hardware configuration described in this article uses two machines for the WebSphere Commerce database nodes (primary and standby). Each has the following configuration:

• Processors: IBM Power PC® 4 CPU 1.40 GHz
• Memory: 16 GB
• Hard disk: one 140 GB disk and one 70 GB disk

It also uses one machine for the WebSphere Commerce node and one machine for the TSA node. Each has the following configuration:

• Processors: IBM Power PC® 4 CPU 1.40 GHz
• Memory: 16 GB
• Hard disk: one 140 GB disk

Recommendation

To achieve optimal performance with HADR, ensure that your system meets the following requirements for hardware, operating systems, and DB2 UDB:

• Use the same hardware and software for the system where the primary database resides and for the system where the standby database resides. This ensures that the standby system can sustain the same workload when it takes over as primary.
• The operating systems on the primary and standby database servers should be the same version, including patches.
• A TCP/IP interface must be available between the HADR host machines, and a high-speed, high-capacity network is recommended.
• The database versions used by the primary and standby databases must be identical. During rolling upgrades, the database version of the standby database may be later than that of the primary database for a short time. The DB2 UDB version of the primary database can never be later than the standby database version.

Introduction to topology with HADR and TSA

This section describes the roles and responsibilities of each software product involved in this topology, and how the products work together.

High Availability Disaster Recovery

High availability (HA) strategies enable database solutions to remain available to process client application requests despite hardware or software failure. This ensures that:

• Transactions are processed efficiently, without appreciable performance degradation.
• Systems recover quickly when hardware or software failures occur, or when disaster strikes.
• The software that powers the enterprise databases is continuously running and available for transaction processing.

HADR is a database replication feature that protects against data loss in the event of a partial or complete site failure. It does so by replicating changes from a source database, called the primary database, to a target database, called the standby database. Figure 1 illustrates the architecture of HADR.

Figure 1. HADR architecture

Figure 1 illustrates the following key aspects of an HADR environment:

• Enabling HADR on a primary server starts a process called db2hadrp that communicates with the standby server.
At the same time, a process called db2hadrs starts on the standby server. It receives log records from the primary server, writes them to the log files on the standby server, and applies those transactions to the data and index pages.
• When an application is running on the primary server, normal insert, update, and delete activity results in log records being written to the log buffer. When the log buffer is full, or whenever a transaction commits, the log buffer is flushed to disk (to the log files). This happens before the application receives a successful return code for its commit request.
• When the primary database is in peer state, log pages are shipped to the standby database whenever the primary database flushes a log page to disk. The log pages are written to the local log files on the standby database, so that the primary and standby databases have identical log file sequences. The log pages can then be replayed on the standby database to keep it synchronized with the primary database.
• Because HADR ensures that the primary and standby databases have identical log file sequences, the standby database server can take over as the new primary database server if a disaster happens at the primary site. It then handles client application requests.

Tivoli System Automation

HADR does not automatically monitor the health of a primary database node (for example, psvt01). A database administrator (or some other mechanism) must monitor the HADR pair (for example, psvt01 and psvt05). That person or mechanism must also issue the appropriate takeover commands if the primary database fails. This is where TSA automation comes in.

TSA manages the availability of applications running in Linux systems or clusters on xSeries®, zSeries®, iSeries®, and pSeries®, and in AIX systems or clusters. It provides the following features:

• High availability and resource monitoring: TSA provides a high availability environment.
It offers mainframe-like high availability by using fast detection of outages and sophisticated knowledge about application components and their relationships.
• Policy-based automation: TSA configures high availability systems through policies that define the relationships among the various components.
• Automatic recovery: TSA quickly and consistently restarts failed resources or whole applications, either in place or on another system of a Linux or AIX cluster. This greatly reduces system outages.
• Automatic movement of applications: TSA manages the cluster-wide relationships among the resources for which it is responsible.
• Resource grouping: You can group resources together in TSA. Once resources are grouped, all relationships among the members of the group are established, such as location relationships and start and stop relationships.

In summary, TSA provides high availability by automating resources, such as processes, applications, and IP addresses, in Linux and AIX clusters. To automate an IT resource (for example, a DB2 database instance), you define the resource to TSA. Each resource must also be contained in at least one resource group. Resources that must always be hosted on the same machine are placed in the same resource group. For more information about TSA, see the Tivoli Software Information Center.

Reliable Scalable Cluster Technology

Reliable Scalable Cluster Technology (RSCT) is fully integrated into TSA. RSCT is a set of software products that provides a comprehensive clustering environment for AIX and Linux, with improved system availability, scalability, and ease of use. RSCT provides three basic components, or layers, of functionality:

1. Resource Monitoring and Control (RMC) provides global access for configuring, monitoring, and controlling resources in a peer domain.
2. High Availability Group Services (HAGS) provides distributed coordination, messaging, and synchronization services.
3. High Availability Topology Services (HATS) provides a scalable heartbeat for adapter and node failure detection, and a reliable messaging service in a peer domain.

For more information about RSCT, see the IBM Cluster Information Center.

Automatic Client Reroute

Automatic Client Reroute (ACR) is another feature first introduced in DB2 UDB v8.2. If a database application loses communication with a DB2 database server, ACR reroutes the client application to an alternate database server. The application can then continue its work with minimal interruption. Rerouting is only possible when an alternate database location has been specified at the primary database server. ACR is only supported with the TCP/IP protocol.

ACR is not tied to HADR. You can use it with HADR, clustering software, a partitioned database environment, replication, and so on. ACR automatically and transparently reconnects DB2 database client applications to an alternate server without exposing the application or end user to a communications error. The alternate server information is stored on the primary database server. It is loaded into the client's cache upon a successful connection to the primary database server. This means that for a client application to know the alternate database server, it must first successfully connect to the primary database server.

When ACR is configured, the built-in retry logic alternates between the original primary server and the alternate server for up to 10 minutes, or until a database connection is re-established. By default, the retry logic built into ACR will:

• Try to re-establish a connection to the original primary server, to rule out an "accidental" failure.
• Alternate connection attempts between the original primary database server and the alternate database server every 2 seconds for 30-60 seconds.
• Alternate connection attempts between the original primary database server and the alternate database server every 5 seconds for 1-2 minutes.
• Alternate connection attempts between the original primary database server and the alternate database server every 10 seconds for 2-5 minutes.
• Alternate connection attempts between the original primary database server and the alternate database server every 30 seconds for 5-10 minutes.
• If no connection can be made after all of these attempts, return the SQL30081N error code to the client application.

Two DB2 database registry variables, DB2_MAX_CLIENT_CONNRETRIES and DB2_CONNRETRIES_INTERVAL, let you tune the ACR retry logic:

• DB2_MAX_CLIENT_CONNRETRIES defines the maximum number of times a DB2 client retries the connection to the database server.
• DB2_CONNRETRIES_INTERVAL defines the interval, in seconds, between two consecutive reconnection attempts.

Note: The tests in this article do not cover these two DB2 database registry variables. For more information about ACR, see The IBM DB2 Version 8.2 Automatic Client Reroute Facility.

Connection pool purge policy in WebSphere Application Server

WebSphere Application Server (hereafter called Application Server) recognizes and handles DB2 client reroute error codes. When the database server changes, it cleans up the connections in the connection pool accordingly. You can set one of two purge policies on an Application Server connection pool. The policy specifies how to purge connections when the connection pool manager detects a stale connection or a fatal connection error:

1. EntirePool
All connections in the pool are marked stale. Any connection not in use is immediately closed.
Any connection in use is closed, and a stale connection exception is returned upon the next operation on that connection. Subsequent getConnection() requests from the application open new connections to the database. With this purge policy, there is a slight possibility that some connections in the pool are closed unnecessarily when they are not stale. However, this is a rare occurrence. In most cases, a purge policy of EntirePool is the best choice.
2. FailingConnectionOnly
Only the connection that caused the stale connection exception is closed. Although this setting eliminates the possibility that valid connections are closed unnecessarily, it complicates application recovery. Because only the current failing connection is closed, the next getConnection() request from the application may well return another stale connection from the pool. This results in more stale connection exceptions.

In the configuration described in this article, connections that are no longer valid might remain in the free pool when the primary server goes down. For this reason, set the Application Server purge policy to EntirePool for this configuration. With FailingConnectionOnly, only the failing connection is removed from the pool, and the remaining connections might also be invalid. Because end users keep sending requests, those invalid connections are likely to be used and to cause further exceptions.

For more details about the Application Server connection pool, see the WebSphere Application Server Information Center.

Typical WebSphere Commerce environment with HADR and TSA

A DBA can use TSA to monitor an HADR primary database server and, if it fails, automatically fail over to the standby database server. Figure 2 illustrates a common configuration for this case.

Figure 2. Typical WebSphere Commerce environment with HADR and TSA enabled

This is a four-node topology:

• psvt01 hosts the primary database.
• psvt05 hosts the standby database.
• psvt07 hosts the WebSphere Commerce instance deployed in Application Server.
• psvt03 provides quorum between the primary and standby nodes.

In this configuration, TSA can help to:

• Monitor the HADR pair for primary database failure (both process failure and system failure), and issue the appropriate takeover commands on the standby database if the primary database fails.
• Monitor the status of the standby database. For both process failure and system failure on the standby server, TSA issues the corresponding commands to restart the standby server.
• Provide quorum to avoid a "split-brain" situation. psvt03 acts as a heartbeat node and a tie breaker in the event of a communication failure between psvt01 and psvt05.

Setting up the topology

Once you understand the complete topology of the WebSphere Commerce environment with HADR and TSA enabled, and have prepared all of the hardware and software, you can start the setup procedures. The following steps set up the whole environment depicted in Figure 2.

Note: In this topology, all of the machines are co-located side by side, so network delay does not play a significant part in the results. The following prefixes indicate the machine on which each command is run:

(P): Primary database server - psvt01
(S): Standby database server - psvt05
(H): TSA heartbeat node - psvt03
(C): WebSphere Commerce node - psvt07

Installing WebSphere Commerce

Follow the installation procedure described in the WebSphere Commerce Information Center.
Review the logs carefully to make sure that the following components are installed and configured successfully:

• DB2 UDB v8.2.3
• WebSphere Application Server v6.0.2.5
• IBM HTTP Server v6.0.2.5
• WebSphere Commerce v6.0.0.1
• A WebSphere Commerce instance has been successfully created

After the installation, modify the Application Server connection pool configuration if it differs from the following:

1. Log on to the Application Server administration console: http://hostname:9061/ibm/console
2. Go to the connection pool configuration page by following these nested choices: Resources → JDBC providers → demo - WebSphere Commerce JDBC Provider → Data Source → WebSphere Commerce DB2 DataSource demo → Connection pools.
3. Set the Purge policy to "EntirePool".
4. Set the Maximum connections configuration parameter to an appropriate number, at least as large as the maximum number of concurrent users. For example, in this article, Maximum connections is set to 50 because the tests described simulate a workload of 50 concurrent users.
5. Save the configuration and exit.

Configuring HADR on a primary/standby database

You can configure HADR using the command line processor (CLP), the Set Up High Availability Disaster Recovery (HADR) wizard in the Control Center, or the corresponding application programming interfaces (APIs). Use the following procedure to configure the primary and standby databases for HADR using the CLP.

Steps:

1. Enable log archiving and configure other DB2 parameters on the primary database:

    (P) $ db2 update db configuration for rmall using LOGRETAIN RECOVERY
    (P) $ db2 update db configuration for rmall using LOGINDEXBUILD ON
    (P) $ db2 update db configuration for rmall using INDEXREC RESTART
    (P) $ db2 update db configuration for rmall using NEWLOGPATH /db2log/

Note: It is highly recommended that you use the NEWLOGPATH configuration parameter to put the database logs on a separate device from the database once the database is created.
This protects the database against media failure and improves the overall performance of the database system.

2. Make an offline backup:

    (P) $ db2 deactivate db rmall
    (P) $ db2 backup db rmall

3. Move the backup image to the standby database host and restore the database, which leaves it in roll-forward pending state:

    (S) $ db2 restore db rmall replace history file

4. Configure HADR and client reroute on the primary database. As the primary instance owner, set the HADR-related parameters for the primary database:

    (P) $ db2 update db configuration for rmall using HADR_LOCAL_HOST psvt01
    (P) $ db2 update db configuration for rmall using HADR_LOCAL_SVC 18819
    (P) $ db2 update db configuration for rmall using HADR_REMOTE_HOST psvt05
    (P) $ db2 update db configuration for rmall using HADR_REMOTE_SVC 18820
    (P) $ db2 update db configuration for rmall using HADR_REMOTE_INST db2inst1
    (P) $ db2 update db configuration for rmall using HADR_SYNCMODE NEARSYNC

Note: The configuration for this article uses the NEARSYNC synchronization mode. Transaction response time is shorter with NEARSYNC than with SYNC mode, but SYNC mode gives greater protection against data loss. ASYNC mode gives the shortest response time of the three modes, but the least protection.

5. Configure HADR and client reroute on the standby database. As the standby instance owner, set the HADR-related parameters for the standby database:

    (S) $ db2 update db configuration for rmall using HADR_LOCAL_HOST psvt05
    (S) $ db2 update db configuration for rmall using HADR_LOCAL_SVC 18820
    (S) $ db2 update db configuration for rmall using HADR_REMOTE_HOST psvt01
    (S) $ db2 update db configuration for rmall using HADR_REMOTE_SVC 18819
    (S) $ db2 update db configuration for rmall using HADR_REMOTE_INST db2inst1
    (S) $ db2 update db configuration for rmall using HADR_SYNCMODE NEARSYNC

Start HADR on the standby database:

    (S) $ db2 start hadr on db rmall as standby

Note: It is recommended to start HADR on the standby server before you start HADR on the primary database server.
When you start HADR on a primary database server, the database server waits up to HADR_TIMEOUT seconds for a standby database server to connect to it. If no standby database server has connected after HADR_TIMEOUT seconds, the HADR start on the primary database server fails.

6. Start HADR on the primary database:

    (P) $ db2 start hadr on db rmall as primary

7. Verify that HADR has been successfully configured:
   1. Review the db2diag.log file on both the primary and the standby database server to see whether HADR is configured correctly.
   2. Examine the HADR status from a snapshot of both the primary database and the standby database. Figure 3 and Figure 4 show the HADR section returned by the GET SNAPSHOT command.

Figure 3. Snapshot for the primary database server

Figure 4. Snapshot for the standby database server

Recommendation: By default, the log receive buffer size on the standby database is two times the value specified for the LOGBUFSZ configuration parameter on the primary database. There might be times when this size is not sufficient. Suppose the primary and standby databases are in HADR peer state and the primary database is experiencing a high transaction load. The log receive buffer on the standby database might then fill to capacity, and the log shipping operation from the primary database might stall. To manage these temporary peaks, increase the size of the log receive buffer on the standby database by setting the DB2_HADR_BUF_SIZE registry variable.

Enabling and configuring client reroute in a HADR environment

A client application can be transparently redirected to an alternate standby database server when communication with the primary database server is lost. For this to happen, specify the alternate server's location on the primary database server. To do this, use the UPDATE ALTERNATE SERVER FOR DATABASE command on the primary database server.
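A takeover reverses the roles of the two databases, so the alternate server is defined on both members of the pair, each pointing at its partner. Here is a minimal dry-run sketch. It assumes this article's host names, the rmall alias, and DB2 port 50000. The helper names alt_server_cmd and alt_server_pair are hypothetical, and the script only prints the commands rather than running them:

```shell
#!/bin/sh
# Hypothetical dry-run helper: prints the UPDATE ALTERNATE SERVER command for
# each member of an HADR pair, with each server naming its partner. It only
# echoes the commands; run each printed command on the host shown before the colon.

DB_NAME=${DB_NAME:-rmall}     # database alias used in this article
DB2_PORT=${DB2_PORT:-50000}   # DB2 instance port on the partner (assumed 50000)

# alt_server_cmd LOCAL_HOST PARTNER_HOST
alt_server_cmd() {
    echo "$1: db2 update alternate server for database $DB_NAME using hostname $2 port $DB2_PORT"
}

# alt_server_pair HOST_A HOST_B: one command per host, each pointing at the other
alt_server_pair() {
    alt_server_cmd "$1" "$2"
    alt_server_cmd "$2" "$1"
}

alt_server_pair psvt01 psvt05
```

Generating both commands from one host pair keeps the two settings mirror images of each other, which is easy to get wrong when they are typed by hand on each machine.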
Here is an example of the commands that specify the alternate database server on each side of the HADR pair:

    (P) $ db2 update alternate server for database rmall using hostname psvt05 port 50000
    (S) $ db2 update alternate server for database rmall using hostname psvt01 port 50000

Note: Port 50000 is the TCP/IP port used by the DB2 database client to communicate with the alternate DB2 database server.

Figure 5. Directory information in DB2 client

The alternate database server information is stored on the primary database server. It is loaded into the client's cache upon a successful connection to the primary database server (Figure 5). This means that for a client application to know the standby server, it must first successfully connect to the primary server.

Identifying an alternate database server on the primary database server is not enough. You must also configure DB2 database clients to detect when the primary database server has failed (stopped responding) and to redirect application connections to the alternate database server. How long the DB2 database client takes to realize that the primary has stopped responding depends on configuration parameters. There are three ways to configure a DB2 database client to wait for a timeout period before concluding that a database server has stopped responding:

• The DB2 database registry variable DB2TCP_CLIENT_RCVTIMEOUT specifies how many seconds the DB2 database client waits for a response from the primary database server before deciding that the database server has stopped responding. You can use the following command to set this registry variable:

    (C) $ db2set DB2TCP_CLIENT_RCVTIMEOUT=60

• The registry variable DB2TCP_CLIENT_CONTIMEOUT specifies how long the DB2 database client waits for a connection to be established before deciding that the database server has stopped responding.
  You can use the following command to set this registry variable:
   (C) $ db2set DB2TCP_CLIENT_CONTIMEOUT=60
• WebSphere Commerce uses the DB2 CLI-based JDBC driver to communicate with the DB2 database server. CLI has its own receive timeout configuration, which can interfere with the DB2TCP_CLIENT_RCVTIMEOUT setting. To avoid this, add a ReceiveTimeout entry to the DB2 CLI configuration file ~/sqllib/cfg/db2cli.ini and set it to 60. By default, this value is 0, which means no timeout.

Notes:
• If you set these variables too low, you cause unnecessary timeouts. Set these values based on an estimate of how long the longest-running query takes.
• Using DB2TCP_CLIENT_CONTIMEOUT and DB2TCP_CLIENT_RCVTIMEOUT to make the client wait for a timeout period before concluding that the primary database server has failed can delay detection of the outage at the client. This affects the timeliness of your failover mechanism.
• Setting DB2TCP_CLIENT_CONTIMEOUT limits the number of retries to 1, just like setting DB2TCP_CLIENT_RCVTIMEOUT.
• Setting either DB2_CONNRETRIES_INTERVAL or DB2_MAX_CLIENT_CONNRETRIES overrides the retry limit imposed by DB2TCP_CLIENT_CONTIMEOUT or DB2TCP_CLIENT_RCVTIMEOUT.

Installing Tivoli System Automation

The following steps briefly introduce how to implement the policy-based self-healing capability of Tivoli System Automation for Multiplatforms on AIX:
1. After you have purchased Tivoli System Automation v2.1, you can download a tar file for the AIX operating system. The archive for AIX platforms is named C85W5ML.tar.
2. Download the installation archive into your local directory, and use the tar xvf command to extract the archive.
   After you extract the files, the installation wizard is in the following directory: SAM2100Base/installSAM
3. TSA is contained in several packages that must be installed on every node in the cluster to be automated. TSA for Multiplatforms requires a certain RSCT level to be installed on the system before the installation. RSCT is part of AIX, although not all of the RSCT-related filesets are installed by default with the operating system. TSA pre-installation checking might require a more recent level of RSCT. In this case, for a TSA v2.1 installation on AIX v5.2, the required RSCT version is 2.3.7.1. After you install the base filesets, you can download the specific updated filesets from IBM Support Fix Central.
   Note: Some RSCT filesets (such as rsct.core.utils) have been updated to level 2.3.7.3, which is also supported by AIX maintenance level (ML--5.2.0.0). It is recommended that you download the latest filesets.
4. Install the product, including the automation adapter, with the installSAM script. The installation process finishes automatically.
5. Copy the automation scripts shipped with DB2 v9 from the DB2 installation package to a local directory on all of the cluster nodes. In this case, they are copied into /software/Auto_Script.
   Note: IBM has created scripts that enable TSA to work seamlessly with the DB2 database. You can download the latest TSA automation scripts from the DB2 for Linux site.
6. Set the environment variables. For example:
   export CT_MANAGEMENT_SCOPE=2
   export PATH=$PATH:/usr/sbin/rsct/bin:/usr/opt/IBM/db2_08_01/instance/:/software/Auto_Script/
7. Confirm that TSA has been installed successfully (Figure 6). If TSA has been installed and the level of RSCT is correct, you can start a TSA domain. Use the following commands to verify the installation and the RSCT level:
   1. lsrpdomain - should show the domain as Online with an RSCT level of 2.3.7.1.
   2. lsrpnode - should show all nodes in that domain with an RSCT level of 2.3.7.1.
   3. lssrc -ls IBM.RecoveryRM - should show an IVN and AVN of 2.1.0.0.

Figure 6. TSA post installation check

Defining and administering a TSA cluster

Before configuring your TSA cluster, verify that all of the TSA installations in your topology know about one another and can communicate with one another in what is referred to as a TSA cluster domain.

Many of the TSA commands that you use in the following steps require your cluster nodes to support Remote Shell (RSH). RSH allows a user on one node to run commands on another node in the cluster environment. To configure RSH, perform the following two steps:
1. To allow the root user to issue remote commands on each node, add the following lines to the file /root/.rhosts on (P), (S), and (H):
   psvt01 root
   psvt03 root
   psvt05 root
2. Verify that RSH is working by issuing the following commands. You should see the directories listed:
   (P)(S)(H) # rsh psvt01 ls
   (P)(S)(H) # rsh psvt03 ls
   (P)(S)(H) # rsh psvt05 ls

Working RSH is essential for managing HADR with TSA. The following steps show how to create a cluster, add nodes to it, and check the status of the Tivoli System Automation daemon (IBM.RecoveryRM).

Configuration steps:
1. Run the following command as root on each host to prepare the security environment that allows the TSA nodes to communicate with one another:
   (P)(S)(H) # preprpnode psvt01 psvt03 psvt05
2. Issue the following command to create the cluster domain:
   (P) # mkrpdomain HADR_Commerce psvt01 psvt03 psvt05
3. Issue the following command to bring the cluster online. Note: All subsequent TSA commands run relative to this active domain.
   (P) # startrpdomain HADR_Commerce
4. Within seconds the cluster starts, and you can look up the status of HADR_Commerce:
   (P) # lsrpdomain
   You will get output similar to Figure 7.
   Figure 7. TSA domain status
5. Ensure that all nodes in the domain are online:
   (P) # lsrpnode
   You will get output similar to Figure 8.
   Figure 8. TSA Cluster nodes status
6. On each online node in the cluster, an IBM Tivoli System Automation daemon (IBM.RecoveryRM) is running. You can check the status and process ID of the daemon with the lssrc command:
   lssrc -s IBM.RecoveryRM
   You will get output similar to Figure 9.
   Figure 9. TSA recovery daemon status

Configuring and registering instance and HADR with TSA for automatic management

As the basis for automation, the components involved must first be described as a set of RSCT-defined resources. Because resources have diverse characteristics, there are various RSCT resource classes to accommodate the differences. In a TSA cluster, a resource is any piece of hardware or software that has been defined to IBM Resource Monitoring and Control (RMC). In this case, the DB2 database instance and the HADR database pair are both resources in the cluster, and both are configured and registered with TSA for automated management.

Every application needs to be defined as a resource to be managed and automated with TSA. Application resources are usually defined in the generic resource class IBM.Application. This resource class has several attributes that define a resource, but at least three of them are application-specific:
• StartCommand
• StopCommand
• MonitorCommand

These commands can be scripts or binary executables. Ensure that the scripts are well tested and produce the desired effects within a reasonable period of time, because these commands are the only interface between TSA and the application.

The automation package shipped with DB2 v9 includes several scripts that control the behavior of the DB2 resources defined in the TSA cluster environment. Here is a description of the scripts.

For the DB2 database instance:
1. regdb2salin: This script registers the DB2 instance with the TSA cluster environment as a resource.
2. db2_start.ksh, db2_stop.ksh, db2_monitor: These three scripts are registered as part of the TSA resource automation policy. TSA refers to this policy when monitoring DB2 database instances and when responding to predefined events, such as restarting a DB2 database instance when TSA detects that it has terminated.

For the HADR database pair:
1. reghadrsalin: This script registers the DB2 HADR pair with the TSA environment.
2. hadr_start.ksh, hadr_stop.ksh, hadr_monitor.ksh: These three scripts are registered as part of the TSA automation policy so that TSA can monitor and control the behavior of the HADR database pair.

Notes:
• A resource is any piece of hardware or software that has been defined to RMC.
• A resource class is a set of resources of the same type. For example, while a resource might be a particular file system or a particular host machine, a resource class is a set of file systems or a set of host machines.
• A resource attribute describes some characteristics of a resource.

Steps:

First, register the DB2 instances with TSA for management:
1. Register the DB2 instances:
   (P) # regdb2salin -a db2inst1 -r -l psvt01
   (S) # regdb2salin -a db2inst1 -r -l psvt05
2. Verify that the resource groups (for example, db2_db2inst1_psvt01_0-rg and db2_db2inst1_psvt05_0-rg) are registered and online by issuing the following command:
   (P) # lsrg -g db2_db2inst1_psvt01_0-rg
   You will get output similar to Figure 10.
   Figure 10. Status of instance resource group

Second, register HADR with TSA for management:
1. Start HADR on both the primary and the standby database.
2. As the instance owner, ensure that the HADR pair is in "peer" state:
   (P)(S) $ db2 get snapshot for db on rmall
3. As root on the primary node, create a resource group for the HADR pair:
   (P) # reghadrsalin -a db2inst1 -b db2inst1 -d rmall
4. Check the status of the HADR resource group by issuing the following command:
   (P) # lsrg -g db2hadr_rmall-rg
   You will get output similar to Figure 11.
   Figure 11. Status of HADR resource group

Third, check the status of the whole cluster (Figure 12):
   (H) # getstatus

Figure 12. Status of the whole cluster

Running WebSphere Commerce stress tests with HADR and TSA

This article covers three types of stress tests to evaluate the behavior of this integrated environment. All of the tests use the same test configuration (browse/buy = 0.55/0.45, 50 concurrent users, no think time) and the same business model, B2C (WebSphere Commerce).
1. Run a normal stress test without HADR to establish the baseline.
2. Run a normal stress test with DB2 HADR in NEARSYNC mode to assess the performance overhead, if any, caused by HADR.
3. Run the test again in NEARSYNC mode under different failure scenarios (abnormal termination) and compare the performance impact with the baseline result.

Normal operations with HADR enabled

After the first successful connection to the primary database server, the alternate server location information (host name or IP address, and service name or port number) is transferred to the client. When your environment is enabled for Automatic Client Reroute, the DB2 client, after a communication failure, automatically retries the original database server location first and then the alternate database server location that you specified on the DB2 database server itself.

Testing process failure: Standby instance failure

Steps:
1. On the standby server, as the standby database instance owner, issue the following command to simulate a DB2 instance failure:
   (S) $ db2_kill
   Note: Record the time you issued the command.
2. On the heartbeat node, issue the getstatus command repeatedly until the instance comes back. Check in db2diag.log on the standby database server how long the standby instance took to recover.
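The "record the time, then check db2diag.log" procedure used in these failure tests amounts to subtracting two timestamps. The following Python sketch is a hypothetical helper, not an IBM tool. It assumes that each db2diag.log record header begins with a timestamp in the form 2007-03-28-10.15.30.123456-240 (date, dotted time with microseconds, then a UTC offset in minutes), and it ignores the offset because the recorded command time and the log come from the same server clock. The function names, the sample log lines, and the marker text are all invented for illustration.

```python
"""Hypothetical helper: seconds from a recorded failure time to a db2diag.log record.

Assumes record headers start with a timestamp like
2007-03-28-10.15.30.123456-240; the trailing UTC offset (minutes) is ignored
because the recorded time and the log come from the same server clock.
"""
import re
from datetime import datetime
from typing import Iterable, Optional

HEADER_TS = re.compile(r"^(\d{4}-\d{2}-\d{2}-\d{2}\.\d{2}\.\d{2}\.\d{6})[+-]\d+")


def parse_header_ts(line: str) -> Optional[datetime]:
    """Return the local timestamp if the line starts a db2diag.log record."""
    match = HEADER_TS.match(line)
    if match is None:
        return None
    return datetime.strptime(match.group(1), "%Y-%m-%d-%H.%M.%S.%f")


def seconds_until(failed_at: datetime, lines: Iterable[str], marker: str) -> Optional[float]:
    """Seconds from failed_at to the first later record containing marker."""
    record_ts: Optional[datetime] = None
    for line in lines:
        ts = parse_header_ts(line)
        if ts is not None:
            record_ts = ts          # message lines belong to the latest header
        if marker in line and record_ts is not None and record_ts >= failed_at:
            return (record_ts - failed_at).total_seconds()
    return None


if __name__ == "__main__":
    sample = [  # hypothetical log excerpt; real message text will differ
        "2007-03-28-10.15.30.000000-240 I100A200 LEVEL: Severe",
        "MESSAGE : hypothetical instance failure record",
        "2007-03-28-10.15.55.500000-240 I300A200 LEVEL: Info",
        "MESSAGE : hypothetical marker: instance recovered",
    ]
    killed = datetime(2007, 3, 28, 10, 15, 30)  # time noted when db2_kill ran
    print(seconds_until(killed, sample, "instance recovered"))  # 25.5
```

Because the marker is only a placeholder, you would pass the db2diag.log message text that marks the event you are timing, such as the completed instance restart or the end of a takeover. Records that precede the recorded failure time are skipped, so earlier occurrences of the same message do not distort the measurement.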
Testing physical failure: Standby system failure

Steps:
1. On the standby server, as the root user, issue the following command to simulate a system failure on the standby system:
   (S) # shutdown
   Note: Record the time you issued the command.
2. After the original standby system restarts, check how long it takes for the standby database server to restart and rejoin the pair as the standby database server.

Testing process failure: Primary instance failure

Steps:
1. On the primary server, as the primary database instance owner, issue the following command to simulate a DB2 instance failure:
   (P) $ db2_kill
   Note: Record the time you issued the command.
2. On the heartbeat node, issue the getstatus command repeatedly (Figure 13) until the instance comes back.
   Figure 13. Status of cluster after issuing db2_kill
3. Check in db2diag.log on the primary database server how long the instance took to restart. TSA detects the DB2 instance failure and restarts the instance according to the scripts shipped with DB2 v9.

Testing physical failure: Primary system failure

Steps:
1. On the primary server, as the root user, issue the following command to simulate a system failure on the primary system:
   (P) # shutdown
   Note: Record the time you issued the command.
2. On the heartbeat node, issue the getstatus command repeatedly until the status of the standby instance changes from "offline" to "online":
   (H) # getstatus
3. Check in db2diag.log on the standby database server when the standby database finished taking over as the new primary server.
4. After the original primary system restarts, check how long it takes for the original primary database server to restart and rejoin as the new standby database server, at which point the HADR pair is restored.

Switching the roles of primary database and standby database: Graceful takeover

To perform regular software or hardware maintenance on the primary node, you must take the primary node down and reroute the traffic to the standby server.
In HADR terms, this is known as a "graceful" takeover. You can perform this planned takeover whether or not TSA is enabled. In an environment where TSA is not enabled, issue the command "db2 takeover hadr on db rmall" on the standby database server. In an environment where TSA is enabled, such as the current setup, it is highly recommended that you use the command provided by the TSA cluster infrastructure, because the HADR pair and the DB2 instances are managed as TSA resource groups (Figure 14):

   (H) # rgreq -o move -n psvt05 db2hadr_rmall-rg

Figure 14. Status of cluster after initiating graceful takeover

Performance overhead of HADR

One of the key considerations before you implement HADR in a WebSphere Commerce production environment is its performance impact. This section discusses the analysis and methodology used to evaluate the performance overhead of HADR and the duration of a failover. Because these systems are not in a controlled environment, the performance data can vary from run to run. The results given below are averages of multiple repeated executions.

Impact of HADR with NEARSYNC mode

With HADR, you can specify one of three synchronization modes to choose your preferred level of protection from potential loss of data. The synchronization mode indicates how log writing is managed between the primary and standby databases. These modes apply only when the primary and standby databases are in peer state.
1. SYNC (synchronous)
2. NEARSYNC (near synchronous)
3. ASYNC (asynchronous)

This article covers only the NEARSYNC mode. While this mode has a shorter transaction response time than synchronous mode, it also provides slightly less protection against transaction loss.
In this mode, log writes are considered successful only when the log records have been written to the log files on the primary database and the primary database has received acknowledgement from the standby system that the logs have also been written to main memory on the standby system.

The first measurements of the performance impact compare throughput, number of errors, and CPU utilization on the database node with HADR enabled and with HADR disabled. Based on the results listed in Table 1, the performance impact of HADR NEARSYNC mode is minimal, with no significant performance degradation.

Notes:
• The throughput and overall response time are not absolute values; they are normalized to 1000 and 100 respectively for comparison.
• Because an automated test tool is used in this case to apply the workload to the database server, from the end users' (test tool) perspective, the errors in this measurement are Receive/Send timeouts or mismatched responses that the test tool cannot parse.

Table 1. Performance comparison table for baseline test

HADR setup              | Throughput | Errors   | CPU utilization | Overall response time
None (baseline test)    | 1000 /h    | 8        | 63%             | 100.0 time units
NEARSYNC mode           | 979.4 /h   | 12       | 63.5%           | 102.3 time units
Performance degradation | 2.06%      | +4 errors| 0.8%            | 2.3%

For more information about HADR synchronization modes, see IBM DB2 UDB Data Recovery and High Availability Guide and Reference, V8.2.

Failure duration in different disaster situations

The second measurement of performance impact is the duration of unavailability in both a simulated system failure and a simulated DB2 instance failure. If a system failure occurs on the primary database server, the overall time taken for failover can be roughly broken down into the following phases (Figure 15):
1. After the "shutdown" command is issued on the primary system (T0), it takes 5-10 seconds for TSA to detect the failure. This detection policy is defined in the TSA automation scripts.
   TSA then initiates a takeover on the standby server (T1) by issuing the HADR takeover command to the standby server.
2. The standby server finishes taking over and becomes the new primary database server (T2).
3. The DB2 client detects the communication failure with the primary server and initiates an ACR process (T3). This phase can start before phase 2, as shown in Figure 15. The duration of this phase depends on the timeout settings described earlier.
4. ACR fails to reconnect to the original primary database server (T4).
5. ACR connects successfully to the standby database server and returns SQL error code 30108 to the Application Server. The Application Server then initiates a purge process to refresh any stale connections and reestablishes the connections to the new primary database server. The time ACR needs to reroute successfully to the new primary database server depends on how long the HADR takeover takes and on when the new primary database server is ready to handle transactions (T5).

Figure 15. Time line for primary system failure scenario

If an instance failure occurs on the primary database server, the overall time taken for restart is composed of the following phases (Figure 16):
1. After the "db2_kill" command is issued on the primary system (T0), it takes 5-10 seconds for TSA to detect the failure. This detection policy is defined in the TSA automation scripts. TSA then tries to restart the failed primary instance (T1).
2. The failed instance finishes restarting and recovery (T2).
3. The DB2 client detects the communication outage with the database server and initiates an ACR process (T3). This phase can start before phase 2, as shown in Figure 16. The duration of this phase depends on the timeout settings described earlier.
4. ACR fails to connect to the standby database server (T4).
5. ACR finishes reconnecting to the original primary database server successfully and returns SQL error code 30108 to the Application Server.
   The Application Server then initiates a purge process to refresh any stale connections and reestablishes the connections to the restored database server. The time ACR needs to reconnect successfully to the original primary database server depends on how long the instance restart takes and on when the original primary database server is ready to handle transactions (T5).

Figure 16. Time line for primary instance failure scenario

ACR is initiated when either a primary instance failure or a primary system failure occurs. It first attempts to detect an available primary database server and then tries to connect to it. Whether the database server is the original one or a new one, the mechanism ACR uses to handle the abnormal termination is similar. In this article, the same measurements are used to determine the overall duration of unavailability:
1. From the database's perspective: the duration of unavailability is the time between when the primary failure occurs and when the database server comes back, whether through a standby takeover or a restart of the failed instance (T1 to T2 in the time lines).
2. From the end users' perspective: the average duration of unavailability between when the primary failure occurs and when the Application Server connection manager has purged all the inactive connections and reestablished new connections (T1 to T5 in Figure 16 represents a typical duration).

The results are listed in Table 2 below:

Table 2. Result table for primary system/instance failures

Scenario                 | Failover time from database's perspective | Failover time from end users' perspective
Primary system failure   | 22.33 seconds                             | 90.97 seconds
Primary instance failure | 79.39 seconds                             | 82.53 seconds

Notes:
• In the scenario of a primary system failure, the standby takes over as the new primary server.
  In the scenario of a primary instance failure, the failed instance is restarted by design instead of the standby taking over.
• In both scenarios, from the database's perspective, a takeover is quicker than an instance restart. However, the same timeout values (60 seconds) are used for the client timeout settings, and these are the limiting factor. This explains why the durations of unavailability from the end users' perspective are very close to each other.

Now look at the results when the standby database fails. Because all transactions are handled by the primary server and no real failover occurs, the recovery logic is simpler than in the other scenarios:
• After the failure is simulated on the standby system, it takes 5-10 seconds for TSA to detect it. This detection policy is defined in the TSA automation scripts.
• When TSA detects a failure (whether a system failure or an instance failure) on the standby server, it initiates a restore process on the standby server. The measured duration is the time until the standby node finishes restoring and rejoins the pair as a peer standby server.

The results are listed in Table 3 below. The duration represents the window in which HADR is not available to protect the primary database server. Data loss can occur if the primary database server fails during this period, so the shorter the duration, the better.

Table 3. Result table for standby system/instance failure

Scenario                 | Time until the standby server comes back and rejoins as the standby node
Standby system failure   | 47.552 seconds
Standby instance failure | 24.664 seconds

A graceful takeover is similar to the primary system failure and instance failure scenarios, and you can split it into the following steps (Figure 17):
1) TSA initiates a graceful takeover on the standby server, or the DBA starts the process manually (T1).
2) The standby server finishes taking over and becomes the new primary server (T2).
3) The DB2 client detects the communication outage with the database server and initiates the ACR process (T3). This phase can start before step 2, as shown in Figure 17.
4) ACR fails to connect to the original primary server (T4).
5) ACR connects successfully to the standby server and returns SQL error code 30108 to the Application Server. The Application Server then initiates a purge process to refresh any stale connections and reestablishes the connections to the new primary server. The time ACR needs to reroute to the new primary server depends on how long the HADR takeover takes and on when the new primary server is ready to handle transactions (T5).

Figure 17. Time line for a graceful takeover scenario

The results are listed in Table 4 below:

Table 4. Result table for a graceful takeover scenario

Scenario          | Failover time from database's perspective | Failover time from end users' perspective
Graceful takeover | 11.83 seconds                             | 21.16 seconds

Note: In the graceful takeover scenario, the DB2 client first tries to reconnect to the original primary database server, as defined in the ACR default rule, but the original primary database server can return an error code to the DB2 client quickly, without the client waiting for a connection or receive timeout (60 seconds). This explains why the failover duration is much shorter than in the "forced" scenarios.

Restrictions and recommendations

This section provides restrictions and recommendations.

Restrictions in this topology

Many products work together to form this complex topology, which leads to some limitations. The following are some key restrictions for HADR, ACR, and TSA:
• The primary and standby databases must have the same operating system version and the same DB2 UDB version. During rolling upgrades, the database version of the standby database may be later than that of the primary database for a short time.
• Read operations on the standby server are not supported, which means clients cannot connect to the standby database.
• Log archiving is performed by the current primary database.
• Normal backup operations are not supported on the standby database.
• ACR is supported only when the communications protocol used for the database is TCP/IP.

Recommendations

The following recommendations improve system performance and help the whole topology work more reliably and efficiently.

Appropriately set your synchronization modes for HADR

SYNC (synchronous) provides the greatest protection against transaction loss, while ASYNC (asynchronous) has the shortest transaction response time of the three modes but can lose data. For WebSphere Commerce, it is recommended not to use ASYNC mode, because its potential for data loss defeats the purpose of full disaster recovery. NEARSYNC (near synchronous) is a compromise between performance impact and data protection. However, performance depends on many other factors not covered in this article, such as network delay if the primary and standby servers are far apart. Evaluate your own environment and perform load testing to decide whether SYNC (synchronous) mode is acceptable. The general rule of thumb is to use the highest data protection mode that is acceptable.

Use the LOGINDEXBUILD database parameter

For HADR databases, set the LOGINDEXBUILD database configuration parameter to ON to ensure that complete information is logged for index creation, recreation, and reorganization. The indexes are then rebuilt on the standby system during HADR log replay and are available when a failover takes place. This shortens the time the standby server needs to take over.

Set an appropriate BUFFPAGE size to reduce physical database reads

It is faster to fetch data directly from memory than from hard disk.
For this reason, DB2 uses buffer pools to cache reads and writes in memory, which can significantly improve the performance of the whole database system. Buffer pool tuning is one of the most important aspects of database optimization. In this case, enlarge the buffer pool so that it caches most of the read operations, which improves the performance of the database system.

Update C++ library version in AIX

The C++ runtime library, xlC.rte, should be at version 7.0.0.1 or higher. If you are below that level, the software still runs and you can continue. However, RSCT lists this xlC.rte level as a prerequisite, so upgrade the package when time permits to avoid any potential issues.

Keep the database logs on different devices

Again, it is highly recommended that you use the newlogpath configuration parameter to put the database logs on a separate device once the database is created. This prevents a single media failure from destroying the database and improves the overall performance of the database system.

Conclusion

By using HADR and TSA, WebSphere Commerce supports an efficient high availability solution in the database tier, providing data protection with minimal performance impact on normal operations from the end users' perspective. This article showed a complete solution for setting up the topology, along with the measurements used and an analysis of the results. You can apply the same approach in your own setup to achieve high availability.

Resources
• IBM DB2 UDB Data Recovery and High Availability Guide and Reference, V8.2
• IBM DB2 Universal Database Administration Guide: Implementation, V8
• IBM DB2 Universal Database Administration Guide: Performance, V8
• IBM Tivoli System Automation for Multiplatforms Base Component User's Guide, Version 2.1
• IBM Cluster Information Center
• WebSphere Commerce Information Center

About the authors

Xiao Qing (Shawn) Wang is new to IBM.
He joined the IBM China Lab about a year ago and now works on the WebSphere Commerce System Verification Test team. His areas of interest include test automation, test tools, and unified process investigation. In his spare time, he enjoys sports, collecting stamps, and traveling. You can reach him at [email protected].

Ramiah Tin is the Business Continuity Architect for WebSphere Commerce in the IBM Software Group at the IBM Toronto Lab. He has a Master of Engineering degree from the University of Toronto, Canada. You can reach him at [email protected].