* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Another version - Scott Aaronson

Generalized linear model wikipedia , lookup

Uncertainty principle wikipedia , lookup

Quantum machine learning wikipedia , lookup

Computational electromagnetics wikipedia , lookup

Quantum computing wikipedia , lookup

Hardware random number generator wikipedia , lookup

Computational complexity theory wikipedia , lookup

Theoretical computer science wikipedia , lookup

Probability box wikipedia , lookup

Nyquist–Shannon sampling theorem wikipedia , lookup

Factorization of polynomials over finite fields wikipedia , lookup

Canonical quantization wikipedia , lookup

Density matrix wikipedia , lookup

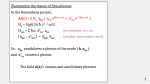

The Computational Complexity of Linear Optics vs Scott Aaronson and Alex Arkhipov MIT The Extended ChurchTuring Thesis (ECT) Everything feasibly computable in the physical world is feasibly computable by a (probabilistic) Turing machine Shor’s Theorem: QUANTUM SIMULATION has no efficient classical algorithm, unless FACTORING does also So the ECT is false … what more evidence could anyone want? Building a QC able to factor large numbers is damn hard! After 16 years, no fundamental obstacle has been found (or even seriously proposed), but who knows? Can’t we “meet the physicists halfway,” and show computational hardness for quantum systems closer to what they actually work with now? FACTORING might be in BPP! At any rate, it’s an extremely “special” problem Wouldn’t it be great to show that if BPP=BQP, then (say) the polynomial hierarchy collapses? Today: “A New Attack on the ECT” We define a model of computation based on linear optics: n identical photons traveling through a network of poly(n) mirrors, phase-shifters, etc., then a single measurement of where the photons ended up Crucial point: No entangling interactions between pairs of photons needed! Our model is contained in BQP, but seems unlikely to be BQP-complete. We don’t know if it solves any decision problems that are hard classically. But for sampling and relation problems, the situation is completely different… Theorem 1. Suppose that for every linear-optics network, the probability distribution over measurement outcomes can be sampled in classical polynomial time. Then P#P=BPPNP (so PH collapses) More generally, let O be any oracle that simulates a “OK, network but isn’tA, the realaquestion theof A and a linear-optics given description of approximate sampling?O randomhardness string r. Then #P NP After all, experiments are noisy, and P BPP not even the linear-optics network itselfoptics can sample exactly!” in BPPPH, that So even if linear can be simulated still collapses PH! (New evidence that QCs have capabilities beyond PH, complementing [A’10],[FU’10]) Theorem 2. Suppose two plausible conjectures are true: the permanent of a Gaussian random matrix is (1) #P-hard to approximate, and (2) not too concentrated around 0. Let O be any oracle takes as input a description of a linear-optics network A, a random string r, and 01/, and that samples from a distribution -close to A’s in variation distance. Then #P NP O P BPP In other words: if our conjectures hold, then even simulating noisy linear-optics experiments is classically intractable, unless PH collapses From Sampling to Relation Problems Let FBPP and FBQP be the classes of relation problems (like finding a Nash equilibrium) that are solvable by classical and quantum computers respectively, in poly(n,1/) time and with success probability 1- Then assuming our conjectures, even FBPP=FBQP would imply P#P=BPPNP Theorem (A., last week): FBPP=FBQP if and only if SampP=SampBQP. Proof Sketch: SampP=SampBQP FBPP=FBQP is immediate. For the other direction, use Kolmogorov complexity! Particle Physics In One Slide There are two basic types of particle in the universe… All I can say is, the bosons got the harder job Indeed, [Valiant 2002, Terhal-DiVincenzo 2002] BOSONS FERMIONS showed that noninteracting fermion systems can be simulated in BPP. But, confirming Avi’s joke, we’ll argue that the analogous problem for bosons Their transition amplitudes are given respectively (such as photons) is much much harder… by… Per A n a S n i 1 i, i Det A 1 sgn S n n a i, i 1 i Linear Optics for Dummies We’ll be considering a special kind of quantum computer, which is not based on qubits The basis states have the form |S=|s1,…,sm, where si is the number of photons in the ith “mode” We’ll never create or destroy photons. So if there are n photons, then s1,…,sm are nonnegative integers summing to n For us, m=poly(n) Initial state: |I=|1,…,1,0,……,0 You get to apply any mm unitary matrix U If n=1 (i.e., there’s only one photon, in a superposition over the m modes), U acts on that photon in the obvious way m n 1 M : n In general, there are ways to distribute n identical photons into m modes U induces an MM unitary (U) on the n-photon states as follows: Per U U S ,T S ,T s1! sm !t1! t m ! Here US,T is an nn submatrix of U (possibly with repeated rows and columns), obtained by taking si copies of the ith row of U and tj copies of the jth column for all i,j Example: The “Hong-Ou-Mandel Dip” 1 1 1 Suppose U . 2 1 1 U Then Pr[the two photons land in different modes] is Per U 0 2 Pr[they both land in the first mode] is 2 1 1 1 1 1 Per 2 2! 2 1 1 Beautiful Alternate Perspective The “state” of our computer, at any time, is a degree-n polynomial over the variables x=(x1,…,xm) (n<<m) Initial state: p(x) := x1xn We can apply any mm unitary transformation U to x, to obtain a new degree-n polynomial p' x pUx x S s1 ,, sm s1 S 1 sm m x s1 1 Then on “measuring,” we see the monomial x x 2 with probability S s1! sm ! sm m OK, so why is it hard to sample the distribution over photon numbers classically? Given any matrix ACnn, we can construct an mm unitary U (where m2n) as follows: A B U C D Suppose we start with |I=|1,…,1,0,…,0 (one photon in each of the first n modes), apply U, and measure. Then the probability of observing |I again is p : I U I 2 2n Per A 2 Claim 1: p is #P-complete to estimate (up to a constant factor) Idea: Valiant proved that the PERMANENT is #P-complete. Can use a classical reduction to go from a multiplicative approximation of |Per(A)|2 to Per(A) itself. Claim 2: Suppose we had a fast classical algorithm for linear-optics sampling. Then we could estimate p in BPPNP Idea: Let M be our classical sampling algorithm, and let r be its randomness. Use approximate counting to estimate Pr M r outputs I 2 r Conclusion: p : Suppose I U weI had a fast classical Per Aalgorithm for linear-optics sampling. Then P#P=BPPNP. 2n 2 As I said before, I find this result unsatisfying, since it only talks about the classical hardness of exactly sampling the distribution over photon numbers What about sampling a distribution that’s 1/poly(n)close in variation distance? Difficulty: The sampler might adversarially refuse to output the one submatrix whose permanent we care about! That changes the output distribution by only exp(-n), so we still have an excellent sampler … but we can no longer use it to estimate |Per(A)|2 in BPPNP To get around this difficulty, it seems we need to “smuggle in” the matrix A that we about as a random submatrix of U Main Result Consider applying a Haar-random mm unitary matrix U, to n photons in m=poly(n) modes: Distribution DU over photon numbers Main technical lemma used in proof: Suppose there’s a classical algorithm to sample a 6 Let mn . Then an nn submatrix of an mm distribution -close to DU in poly(n,1/) time. Then for Haar unitary matrix is Õ(1/n)-close in variation NP all ,1/poly(n), there’s also a BPP algorithm to distance to a matrix of independent Gaussians. 2 estimate |Per(X)| to within additive error n!, with probability 1- over a Gaussian random matrix U X ~ nn N 0 ,1 C So the question boils down to this: how hard is it to additively estimate |Per(X)|2, with high probability nn over a Gaussian random matrix C X ~ N 0 ,1 ? We conjecture that it’s #P-hard—in which case, even approximate classical simulation of our linear-optics experiment would imply P#P=BPPNP We can decompose this conjecture into two plausible sub-conjectures: that multiplicatively estimating Per(X) is #P-hard for Gaussian X, and that Per(X) is “not too concentrated around 0” The Permanent-of-Gaussians Conjecture (PGC) The following problem is #P-hard. Given a matrix XCnn of i.i.d. Gaussian entries, together with 01/ and 01/, output an approximation z such that Pr X ~ N 0 ,1Cnn z Per X Per X 1 . We can prove #P-hardness if =0 or =0. So what makes the PGC nontrivial is really the combination of average-case with approximation… The Permanent Anti-Concentration Conjecture (PACC) There exist constants C,D and >0 such that for all n and >0, Pr X ~ N 0,1Cnn PerX n! Cn D Empirically true! Also, we can prove it with determinant in place of permanent Experimental Prospects It seems well within current technology to do our experiment with (say) n=4 photons and m=20 modes (Current record: n=2 photons) Physicists we consulted: “Sounds hard! But If you can scale to n photons and error in variation not as hard as building a universal QC” distance, using “poly(n,1/) experimental effort,” then modulo our complexity conjectures, the ECT is false What Remark: would it take No point to scale in scaling to (say)this n=20 experiment photons and m=500 much modes? beyond 20 or 30 photons, since then a classical computer can’t(standard even verify thegood answers! - Reliable single-photon sources laser isn’t enough!) - Reliable photodetector arrays - Stable apparatus to ensure that w.h.p., all n photons arrive at photodetector arrays at exactly the same time Open Problems Prove the PGC ($200) and PACC ($100)! Can our linear-optics model solve classically-intractable decision problems? What about problems for which a classical computer can verify the answers? Do BPP=BQP or PromiseBPP=PromiseBQP have interesting structural complexity consequences? Similar hardness results for other natural quantum systems (besides linear optics)? Bremner, Jozsa, Shepherd 2010: Another system for which exact classical simulation would collapse PH