* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Inference about a Mean Vector

Sufficient statistic wikipedia , lookup

Degrees of freedom (statistics) wikipedia , lookup

Psychometrics wikipedia , lookup

Bootstrapping (statistics) wikipedia , lookup

Taylor's law wikipedia , lookup

Foundations of statistics wikipedia , lookup

Confidence interval wikipedia , lookup

Statistical hypothesis testing wikipedia , lookup

Omnibus test wikipedia , lookup

Resampling (statistics) wikipedia , lookup

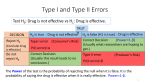

III. Inferences about a Mean Vector

A. Basic Inference about a Single Mean $\mu$

1. Hypothesis Testing – A scientific-method-based procedure for using sample data to evaluate conjectures about a population.

2. Null Hypothesis – Statement of the conjectured value(s) for the parameter that includes (but is not necessarily limited to) equality between the conjectured value and the tested parameter. Usually denoted $H_0$: parameter ($\le$, $=$, or $\ge$) hypothesized value. This is equivalent to a claim that the differences between the observations and the hypothesized value are due to random variation.

3. Alternative Hypothesis – Statement of the conjectured value(s) for the parameter that is mutually exclusive and collectively exhaustive with respect to the null hypothesis (and so includes the $<$, $>$, or $\ne$ relationship between the conjectured value and the tested parameter). Usually denoted $H_1$: parameter ($<$, $>$, or $\ne$) hypothesized value. This is equivalent to a claim that the differences between the observations and the hypothesized value are systematic (i.e., due to something other than random variation).

Note that our conclusion is stated with regard to the null hypothesis, which can either be (i) rejected or (ii) not rejected; that is, we never accept the null hypothesis.

4. Critical Region – Region containing all possible estimated values of the parameter that will result in rejection of the null hypothesis.

5. Critical Value(s) – The value(s) that divide the critical region(s) from the "do not reject" region.

6. Test Statistic – Sample-based value that will be compared to the critical region(s) to decide whether to reject or not reject the null hypothesis. The generic form of the test statistic is

$$\text{Test Statistic} = \frac{\text{Observed Value of the Test Statistic} - \text{Hypothesized Value of the Test Statistic}}{\text{Standard Error of the Test Statistic}}$$

7. Decision Rule – Statement that specifies the values of the test statistic that will result in rejection or nonrejection of the null hypothesis.

8. Two-Tailed Hypothesis Test – Evaluation of a conjecture for which sample results that are either sufficiently less than or sufficiently greater than the conjectured value of the parameter will result in rejection of the null hypothesis, i.e., for a null hypothesis that includes only equality between the conjectured value and the tested parameter.

9. One-Tailed Hypothesis Test – Evaluation of a conjecture for which only sample results that are sufficiently less than (or, alternatively, only sufficiently greater than) the conjectured value of the parameter will result in rejection of the null hypothesis, i.e., for a null hypothesis that includes an inequality between the conjectured value and the tested parameter.

10. Upper-Tailed Test – Hypothesis test for which only sample results that are sufficiently greater than the conjectured value of the parameter will result in rejection of the null hypothesis.

11. Lower-Tailed Test – Hypothesis test for which only sample results that are sufficiently less than the conjectured value of the parameter will result in rejection of the null hypothesis.

12. Type I Error – Rejection of a true null hypothesis. The probability of this occurrence (given that the null hypothesis is true) is denoted $\alpha$.

13. Type II Error – Nonrejection of a false null hypothesis. The probability of this occurrence (given that the null hypothesis is false) is denoted $\beta$.

| Conclusion | State of Nature: $H_0$ is True | State of Nature: $H_0$ is False |
|---|---|---|
| Do Not Reject $H_0$ | Correct Decision | Type II Error (probability $= \beta$) |
| Reject $H_0$ | Type I Error (probability $= \alpha$) | Correct Decision |

14. Level of Significance – The probability of rejecting the null hypothesis when it is actually true, i.e., $\alpha$.
For a two-tailed test:

[Figure: density $f(t)$ with a central $(1-\alpha)100\%$ do-not-reject region (area $0.5-\alpha/2$ on each side of 0) and reject regions of area $\alpha/2$ beyond $\pm t_{\alpha/2}$; on the $\bar{x}$ scale the cutoffs are $\mu_0 \pm t_{\alpha/2}s_{\bar{x}}$.]

Decision rule – do not reject $H_0$ if $-t_{\alpha/2} \le t \le t_{\alpha/2}$; otherwise reject $H_0$.

For an upper-tailed test:

[Figure: density $f(t)$ with a $(1-\alpha)100\%$ do-not-reject region and a reject region of area $\alpha$ above $t_{\alpha}$; on the $\bar{x}$ scale the cutoff is $\mu_0 + t_{\alpha}s_{\bar{x}}$.]

Decision rule – do not reject $H_0$ if $t \le t_{\alpha}$; otherwise reject $H_0$.

For a lower-tailed test:

[Figure: density $f(t)$ with a reject region of area $\alpha$ below $-t_{\alpha}$ and a $(1-\alpha)100\%$ do-not-reject region; on the $\bar{x}$ scale the cutoff is $\mu_0 - t_{\alpha}s_{\bar{x}}$.]

Decision rule – do not reject $H_0$ if $t \ge -t_{\alpha}$; otherwise reject $H_0$.

15. Steps in Hypothesis Testing
- State the Null and Alternative Hypotheses
- Select the Appropriate Test Statistic
- State the Desired Level of Significance $\alpha$, Find the Critical Value(s), and State the Decision Rule
- Calculate the Test Statistic
- Use the Decision Rule to Evaluate the Test Statistic and Decide Whether to Reject or Not Reject the Null Hypothesis. Interpret your results.

When testing a hypothesis about a single mean, the appropriate test statistic (when the parent population is normal or the sample is sufficiently large) is

$$t = \frac{\bar{X}-\mu_0}{s_{\bar{X}}} = \frac{\bar{X}-\mu_0}{s/\sqrt{n}},$$

where $t$ has $n-1$ degrees of freedom and

$$\bar{X} = \frac{1}{n}\sum_{j=1}^{n}X_j, \qquad s^2 = \frac{1}{n-1}\sum_{j=1}^{n}\left(X_j-\bar{X}\right)^2.$$

Example – suppose we had the following fifteen sample observations on some random variable $X_1$:

$x_{j1}$: 5.76, 6.68, 6.79, 7.88, 2.46, 2.48, 2.97, 4.47, 1.62, 1.43, 7.46, 8.92, 6.61, 4.03, 9.42

At a significance level of $\alpha = 0.10$, do these data support the assertion that they were drawn from a population with a mean of 4.0?
In other words, test the null hypothesis $H_0: \mu_1 = \mu_0$ vs. $H_1: \mu_1 \ne \mu_0$, or $H_0: \mu_1 = 4.0$ vs. $H_1: \mu_1 \ne 4.0$. Let's use the five steps of hypothesis testing to assess the potential validity of this conjecture:

- State the Null and Alternative Hypotheses: $H_0: \mu_1 = 4.0$, $H_1: \mu_1 \ne 4.0$.
- Select the Appropriate Test Statistic: $n = 15 < 30$, but the data appear normal, so use $t = \dfrac{\bar{X}-\mu_0}{s/\sqrt{n}}$.
- State the Desired Level of Significance $\alpha$, Find the Critical Value(s), and State the Decision Rule: $\alpha = 0.10$ and we have a two-tailed test with $n - 1 = 14$ degrees of freedom, so $t_{\alpha/2} = t_{0.05} = 1.761$.

  [Figure: $t$ density with the central 90% do-not-reject region between $-t_{\alpha/2} = -1.761$ and $t_{\alpha/2} = 1.761$ (area 0.45 on each side of 0) and reject regions of area $\alpha/2 = 0.05$ in each tail; on the $\bar{x}$ scale the cutoffs are $\mu_0 \pm t_{\alpha/2}s_{\bar{x}}$ with $\mu_0 = 4.0$.]

  Decision rule – do not reject $H_0$ if $-1.761 \le t \le 1.761$; otherwise reject $H_0$.
- Calculate the Test Statistic: we have $\bar{x}_1 = 5.26$, $\mu_0 = 4.0$, $s_1^2 = s_{11} = 7.12$, $s_1 = 2.669$, so

  $$t = \frac{\bar{x}_1-\mu_0}{s_1/\sqrt{n}} = \frac{5.26-4.0}{2.669/\sqrt{15}} = 1.84.$$
- Use the Decision Rule to Evaluate the Test Statistic and Decide Whether to Reject or Not Reject the Null Hypothesis: $t = 1.84 > 1.761$, so $t$ falls outside $[-1.761, 1.761]$ and we reject $H_0$. At $\alpha = 0.10$, the sample evidence does not support the claim that the mean of $X_1$ is 4.0.

Another example – suppose we had the following fifteen sample observations on some random variable $X_2$:

$x_{j2}$: -3.97, -3.24, -3.56, -1.87, -1.13, -5.20, -6.39, -7.88, -5.00, -0.69, 1.61, -6.60, 2.32, 2.87, -7.64

At a significance level of $\alpha = 0.10$, do these data support the assertion that they were drawn from a population with a mean of -1.5?
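The arithmetic in the five steps above can be checked with a short Python sketch that uses only the standard library. It is a minimal illustration, assuming the fifteen $x_{j1}$ values listed above; the helper name `one_sample_t` is ours, and the critical value $t_{0.05} = 1.761$ (14 df) is taken from the text rather than computed.

```python
import math
from statistics import mean, stdev  # stdev uses the n - 1 divisor, like s above


def one_sample_t(xs, mu0):
    """One-sample t statistic (x̄ - μ0) / (s / √n) for the data xs."""
    n = len(xs)
    return (mean(xs) - mu0) / (stdev(xs) / math.sqrt(n))


# The fifteen observations on X1 from the example
x1 = [5.76, 6.68, 6.79, 7.88, 2.46, 2.48, 2.97, 4.47,
      1.62, 1.43, 7.46, 8.92, 6.61, 4.03, 9.42]

t1 = one_sample_t(x1, 4.0)
t_crit = 1.761            # t_{alpha/2} for alpha = 0.10 and 14 df (from the text)
reject = abs(t1) > t_crit  # two-tailed decision rule
print(f"t = {t1:.3f}; reject H0 at alpha = 0.10: {reject}")
```

With unrounded summary statistics the sketch gives $t \approx 1.836$, which rounds to the 1.84 obtained by hand.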
In other words, test the null hypothesis $H_0: \mu_2 = \mu_0$ vs. $H_1: \mu_2 \ne \mu_0$, or $H_0: \mu_2 = -1.5$ vs. $H_1: \mu_2 \ne -1.5$. Let's use the five steps of hypothesis testing to assess the potential validity of this conjecture:

- State the Null and Alternative Hypotheses: $H_0: \mu_2 = -1.5$, $H_1: \mu_2 \ne -1.5$.
- Select the Appropriate Test Statistic: $n = 15 < 30$, but the data appear normal, so use $t = \dfrac{\bar{X}-\mu_0}{s/\sqrt{n}}$.
- State the Desired Level of Significance $\alpha$, Find the Critical Value(s), and State the Decision Rule: $\alpha = 0.10$ and we have a two-tailed test with 14 degrees of freedom, so $t_{\alpha/2} = 1.761$.

  [Figure: $t$ density with the central 90% do-not-reject region between $-1.761$ and $1.761$ and reject regions of area 0.05 in each tail; on the $\bar{x}$ scale the cutoffs are $\mu_0 \pm t_{\alpha/2}s_{\bar{x}}$ with $\mu_0 = -1.5$.]

  Decision rule – do not reject $H_0$ if $-1.761 \le t \le 1.761$; otherwise reject $H_0$.
- Calculate the Test Statistic: we have $\bar{x}_2 = -3.09$, $\mu_0 = -1.5$, $s_2^2 = s_{22} = 12.43$, $s_2 = 3.526$, so

  $$t = \frac{\bar{x}_2-\mu_0}{s_2/\sqrt{n}} = \frac{-3.09-(-1.5)}{3.526/\sqrt{15}} = -1.748.$$
- Use the Decision Rule to Evaluate the Test Statistic and Decide Whether to Reject or Not Reject the Null Hypothesis: $-1.761 \le -1.748 \le 1.761$, so do not reject $H_0$. At $\alpha = 0.10$, the sample evidence does not refute the claim that the mean of $X_2$ is -1.5.

Consider the two-tailed test: rejecting $H_0$ when $|t|$ is large is equivalent to rejecting $H_0$ when its square

$$t^2 = \frac{(\bar{X}-\mu_0)^2}{s^2/n} = n(\bar{X}-\mu_0)\left(s^2\right)^{-1}(\bar{X}-\mu_0)$$

is large! Note also that
- $t^2$ represents the squared distance from the sample mean $\bar{x}$ to the hypothesized population mean $\mu_0$, expressed in units of $s_{\bar{x}}$ (i.e., a squared statistical distance, which is the quantity that generalizes to several variables);
- if the null hypothesis is true, $t^2 \sim F_{1,n-1}$.

What if we wished to make simultaneous inferences about the two variables $X_1$ and $X_2$? We could take Bonferroni's approach: set the experimentwise probability of Type I error $\alpha$, then use $\alpha/m$ (where $m$ is the number of simultaneous inferences we wish to make) as the comparisonwise probability of Type I error.
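Both univariate tests and the Bonferroni adjustment described above can be sketched together. This is a minimal standard-library example; the critical values 1.761 ($\alpha = 0.10$ per test) and 2.145 ($\alpha/m = 0.10/2 = 0.05$ per test), both with 14 df, are taken from the text rather than computed, and the dictionary layout is just an illustrative choice.

```python
import math
from statistics import mean, stdev


def one_sample_t(xs, mu0):
    """One-sample t statistic (x̄ - μ0) / (s / √n)."""
    return (mean(xs) - mu0) / (stdev(xs) / math.sqrt(len(xs)))


x1 = [5.76, 6.68, 6.79, 7.88, 2.46, 2.48, 2.97, 4.47,
      1.62, 1.43, 7.46, 8.92, 6.61, 4.03, 9.42]
x2 = [-3.97, -3.24, -3.56, -1.87, -1.13, -5.20, -6.39, -7.88,
      -5.00, -0.69, 1.61, -6.60, 2.32, 2.87, -7.64]

T_SEPARATE = 1.761    # t_{0.05}, 14 df: each test run at alpha = 0.10
T_BONFERRONI = 2.145  # t_{0.025}, 14 df: each test run at alpha / m = 0.10 / 2

results = {}
for name, xs, mu0 in [("X1", x1, 4.0), ("X2", x2, -1.5)]:
    t = one_sample_t(xs, mu0)
    results[name] = {
        "t": t,
        "t_squared": t * t,  # squared distance of x̄ from μ0 in units of s_x̄
        "reject_separately": abs(t) > T_SEPARATE,
        "reject_bonferroni": abs(t) > T_BONFERRONI,
    }
    print(name, {k: round(v, 3) if isinstance(v, float) else v
                 for k, v in results[name].items()})
```

Run separately, only the $X_1$ test rejects; under the Bonferroni critical value neither does.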
For our previous examples (with experimentwise probability of Type I error $\alpha = 0.10$, $m = 2$ inferences, and $n - 1 = 14$ degrees of freedom), we have a comparisonwise probability of Type I error of $\alpha/m = 0.05$. This gives critical values $\pm t_{0.025} = \pm 2.145$, and we would reject neither of the previous two null hypotheses!

B. Inference about a Mean Vector $\mu$

A natural generalization of the squared univariate distance $t^2$ is the multivariate analog, Hotelling's $T^2$:

$$T^2 = (\bar{X}-\mu_0)'\left(\frac{1}{n}S\right)^{-1}(\bar{X}-\mu_0) = n(\bar{X}-\mu_0)'S^{-1}(\bar{X}-\mu_0),$$

where

$$\bar{X} = \frac{1}{n}\sum_{j=1}^{n}X_j = \frac{1}{n}X'\mathbf{1} = \begin{bmatrix}\bar{x}_1\\ \bar{x}_2\\ \vdots\\ \bar{x}_p\end{bmatrix}, \qquad \mu_0 = \begin{bmatrix}\mu_{10}\\ \mu_{20}\\ \vdots\\ \mu_{p0}\end{bmatrix},$$

$$S = \frac{1}{n-1}\sum_{j=1}^{n}(X_j-\bar{X})(X_j-\bar{X})' = \frac{1}{n-1}X'\left(I-\frac{1}{n}\mathbf{1}\mathbf{1}'\right)X = \begin{bmatrix}s_{11} & s_{12} & \cdots & s_{1p}\\ s_{12} & s_{22} & \cdots & s_{2p}\\ \vdots & \vdots & \ddots & \vdots\\ s_{1p} & s_{2p} & \cdots & s_{pp}\end{bmatrix}$$

is the estimated covariance matrix of $X$; note that here $(n-1)S \sim W_{p,n-1}(\Sigma)$.

This gives us a framework for testing hypotheses about a mean vector, where the null and alternative hypotheses are

$$H_0: \mu = \mu_0 \qquad H_1: \mu \ne \mu_0.$$

The $T^2$ statistic can be rewritten as

$$T^2 = \sqrt{n}(\bar{X}-\mu_0)'\left(\frac{\sum_{j=1}^{n}(X_j-\bar{X})(X_j-\bar{X})'}{n-1}\right)^{-1}\sqrt{n}(\bar{X}-\mu_0),$$

that is, (multivariate normal $N_p(0,\Sigma)$ random vector)$'$ (Wishart $W_{p,n-1}(\Sigma)$ random matrix / df)$^{-1}$ (multivariate normal $N_p(0,\Sigma)$ random vector).

So when the null hypothesis is true, $T^2$ is a quadratic form in the multivariate normal $N_p(0,\Sigma)$ vector $\sqrt{n}(\bar{X}-\mu_0)$, weighted by the inverse of a Wishart $W_{p,n-1}(\Sigma)$ matrix divided by its degrees of freedom. This is a complete generalization of the univariate case, where we could write the squared test statistic as

$$t^2 = \sqrt{n}(\bar{X}-\mu_0)\left(s^2\right)^{-1}\sqrt{n}(\bar{X}-\mu_0),$$

that is, (univariate normal $N(0,\sigma^2)$ random variable) ($\sigma^2\chi^2_{n-1}$ random variable / df)$^{-1}$ (univariate normal $N(0,\sigma^2)$ random variable).

We could use special tables to find critical values of $T^2$ for various combinations of $\alpha$ and degrees of freedom, but that is not necessary because

$$T^2 \sim \frac{(n-1)p}{n-p}F_{p,n-p}.$$

(What happens to this in the univariate case?)

Example – suppose we had the following fifteen sample observations on some random variables $X_1$ and $X_2$ (paired by position):

$x_{j1}$: 1.43, 1.62, 2.46, 2.48, 2.97, 4.03, 4.47, 5.76, 6.61, 6.68, 6.79, 7.46, 7.88, 8.92, 9.42
$x_{j2}$: -0.69, -5.00, -1.13, -5.20, -6.39, 2.87, -7.88, -3.97, 2.32, -3.24, -3.56, 1.61, -1.87, -6.60, -7.64

At a significance level of $\alpha = 0.10$, do these data support the assertion that they were drawn from a population with centroid (4.0, -1.5)? In other words, test the null hypothesis $H_0: \mu = \mu_0$ vs. $H_1: \mu \ne \mu_0$, or

$$H_0: \begin{bmatrix}\mu_1\\ \mu_2\end{bmatrix} = \begin{bmatrix}4.0\\ -1.5\end{bmatrix} \quad \text{vs.} \quad H_1: \begin{bmatrix}\mu_1\\ \mu_2\end{bmatrix} \ne \begin{bmatrix}4.0\\ -1.5\end{bmatrix}.$$

The scatter plot of the pairs $(x_1, x_2)$, the sample centroid $(\bar{x}_1, \bar{x}_2)$, and the hypothesized centroid $(\mu_1, \mu_2)_0$ is provided below:

[Figure: scatter plot of $X_2$ against $X_1$ marking the sample centroid $(\bar{x}_1, \bar{x}_2)$ and the hypothesized centroid $(\mu_1, \mu_2)_0$.]

Do these data appear to support our null hypothesis? Let's go through the five steps of hypothesis testing to assess the potential validity of our assertion.

- State the Null and Alternative Hypotheses: $H_0: \mu = [4.0,\ -1.5]'$, $H_1: \mu \ne [4.0,\ -1.5]'$.
- Select the Appropriate Test Statistic: $n - p = 15 - 2 = 13$ is not very large, but the data appear relatively bivariate normal, so use $T^2 = n(\bar{X}-\mu_0)'S^{-1}(\bar{X}-\mu_0)$.
- State the Desired Level of Significance $\alpha$, Find the Critical Value(s), and State the Decision Rule: $\alpha = 0.10$ with $\nu_1 = p = 2$ and $\nu_2 = n - p = 13$ degrees of freedom, so $F_{2,13}(0.10) = 2.76$.

  [Figure: $F_{2,13}$ density with the 90% do-not-reject region below $F_{2,13}(0.10) = 2.76$ and the reject region of area $\alpha = 0.10$ above it.]

  But we don't yet have a decision rule, since $T^2 \sim \frac{(n-1)p}{n-p}F_{p,n-p}$. Thus our critical value is

  $$\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha) = \frac{(15-1)2}{15-2}F_{2,15-2}(0.10) = 5.95.$$

  Decision rule – do not reject $H_0$ if $T^2 \le 5.95$; otherwise reject $H_0$.
- Calculate the Test Statistic: we have

  $$\bar{x} = \begin{bmatrix}5.26\\ -3.09\end{bmatrix}, \quad \mu_0 = \begin{bmatrix}4.0\\ -1.5\end{bmatrix}, \quad S = \begin{bmatrix}7.12 & -0.72\\ -0.72 & 12.43\end{bmatrix}, \quad S^{-1} = \begin{bmatrix}0.1412 & 0.0082\\ 0.0082 & 0.0809\end{bmatrix},$$

  so

  $$T^2 = 15\begin{bmatrix}5.26-4.0\\ -3.09+1.5\end{bmatrix}'\begin{bmatrix}7.12 & -0.72\\ -0.72 & 12.43\end{bmatrix}^{-1}\begin{bmatrix}5.26-4.0\\ -3.09+1.5\end{bmatrix} = 15\begin{bmatrix}1.26 & -1.59\end{bmatrix}\begin{bmatrix}0.1412 & 0.0082\\ 0.0082 & 0.0809\end{bmatrix}\begin{bmatrix}1.26\\ -1.59\end{bmatrix} = 5.970$$

  (computed from the unrounded summary statistics; because the decision is close, rounding $\bar{x}$ and $S^{-1}$ as displayed noticeably lowers the result).
- Use the Decision Rule to Evaluate the Test Statistic and Decide Whether to Reject or Not Reject the Null Hypothesis: $T^2 = 5.970 > 5.95$, so reject $H_0$. The sample evidence supports the claim that the mean vector differs from $\mu_0 = [4.0,\ -1.5]'$.

Why were the results of the original univariate tests and the bivariate test so consistent? Look at the data!

[Figure: the same scatter plot of $X_2$ against $X_1$ with the sample and hypothesized centroids.]

What do these data suggest about the relationship between $X_1$ and $X_2$?

What if we took the same values for variables $X_1$ and $X_2$ and changed their pairings?

$x_{j1}$: 4.03, 6.61, 7.46, 9.42, 6.79, 1.43, 8.92, 1.62, 2.48, 5.76, 7.88, 4.47, 2.46, 2.97, 6.68
$x_{j2}$: -1.87, -3.97, -6.39, -7.88, -5.20, 2.87, -7.64, 2.32, -0.69, -3.56, -6.60, -3.24, 1.61, -1.13, -5.00

Of course, the univariate test results would not change, but at a significance level of $\alpha = 0.10$, do these data now support the assertion that they were drawn from a population with centroid (4.0, -1.5)? In other words, retest the null hypothesis $H_0: \mu = [4.0,\ -1.5]'$ vs. $H_1: \mu \ne [4.0,\ -1.5]'$.

Since we are using the same significance level ($\alpha = 0.10$) and the degrees of freedom are unchanged, the critical value

$$\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha) = \frac{(15-1)2}{15-2}F_{2,13}(0.10) = 5.95$$

and the decision rule are unchanged as well:

Decision rule – do not reject $H_0$ if $T^2 \le 5.95$; otherwise reject $H_0$.

However, we will have to recalculate the test statistic (why?)
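Re-pairing leaves $\bar{x}$, $s_{11}$, and $s_{22}$ unchanged, but it changes the covariance $s_{12}$, and $T^2$ depends on $s_{12}$ through $S^{-1}$. A minimal standard-library Python sketch makes this concrete for $p = 2$ by using the closed-form inverse of a $2\times2$ matrix; the helper name `hotelling_t2_2d` is ours, and $F_{2,13}(0.10) = 2.76$ is taken from the text.

```python
def hotelling_t2_2d(x1, x2, mu0):
    """Return (T^2, (s11, s12, s22)) for bivariate data; S uses the n - 1 divisor."""
    n = len(x1)
    m1, m2 = sum(x1) / n, sum(x2) / n
    s11 = sum((a - m1) ** 2 for a in x1) / (n - 1)
    s22 = sum((b - m2) ** 2 for b in x2) / (n - 1)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2)) / (n - 1)
    d1, d2 = m1 - mu0[0], m2 - mu0[1]
    det = s11 * s22 - s12 ** 2
    # d' S^{-1} d with S^{-1} = [[s22, -s12], [-s12, s11]] / det
    quad = (s22 * d1 ** 2 - 2 * s12 * d1 * d2 + s11 * d2 ** 2) / det
    return n * quad, (s11, s12, s22)


mu0 = (4.0, -1.5)
n, p = 15, 2
F_CRIT = 2.76                              # F_{2,13}(0.10), from the text
t2_crit = (n - 1) * p / (n - p) * F_CRIT   # about 5.94; the text rounds to 5.95

# Original pairing
x1_a = [1.43, 1.62, 2.46, 2.48, 2.97, 4.03, 4.47, 5.76,
        6.61, 6.68, 6.79, 7.46, 7.88, 8.92, 9.42]
x2_a = [-0.69, -5.00, -1.13, -5.20, -6.39, 2.87, -7.88, -3.97,
        2.32, -3.24, -3.56, 1.61, -1.87, -6.60, -7.64]
# Same values, re-paired
x1_b = [4.03, 6.61, 7.46, 9.42, 6.79, 1.43, 8.92, 1.62,
        2.48, 5.76, 7.88, 4.47, 2.46, 2.97, 6.68]
x2_b = [-1.87, -3.97, -6.39, -7.88, -5.20, 2.87, -7.64, 2.32,
        -0.69, -3.56, -6.60, -3.24, 1.61, -1.13, -5.00]

t2_a, S_a = hotelling_t2_2d(x1_a, x2_a, mu0)
t2_b, S_b = hotelling_t2_2d(x1_b, x2_b, mu0)
f_a = (n - p) / ((n - 1) * p) * t2_a       # T^2 rescaled to an F_{2,13} value
for label, t2, S in [("original", t2_a, S_a), ("re-paired", t2_b, S_b)]:
    print(f"{label}: s12 = {S[1]:.2f}, T^2 = {t2:.3f}, reject H0: {t2 > t2_crit}")
```

With unrounded inputs the original pairing gives $T^2 \approx 5.970$ (equivalently $F \approx 2.77$), while the re-paired data give $T^2 \approx 3.42$. That is slightly above the 3.411 obtained by hand because the hand calculation uses a rounded $S^{-1}$; the decision is the same either way.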
$$\bar{x} = \begin{bmatrix}5.26\\ -3.09\end{bmatrix}, \quad \mu_0 = \begin{bmatrix}4.0\\ -1.5\end{bmatrix}, \quad S = \begin{bmatrix}7.12 & -9.19\\ -9.19 & 12.43\end{bmatrix}, \quad S^{-1} = \begin{bmatrix}3.0374 & 2.2452\\ 2.2452 & 1.7400\end{bmatrix},$$

so

$$T^2 = 15\begin{bmatrix}5.26-4.0\\ -3.09+1.5\end{bmatrix}'\begin{bmatrix}7.12 & -9.19\\ -9.19 & 12.43\end{bmatrix}^{-1}\begin{bmatrix}5.26-4.0\\ -3.09+1.5\end{bmatrix} = 15\begin{bmatrix}1.26 & -1.59\end{bmatrix}\begin{bmatrix}3.0374 & 2.2452\\ 2.2452 & 1.7400\end{bmatrix}\begin{bmatrix}1.26\\ -1.59\end{bmatrix} = 3.411.$$

- Use the Decision Rule to Evaluate the Test Statistic and Decide Whether to Reject or Not Reject the Null Hypothesis: $T^2 = 3.411 \le 5.95$, so do not reject $H_0$. The sample evidence does not support the claim that the mean vector differs from $\mu_0 = [4.0,\ -1.5]'$.

Why are we further from rejecting in the bivariate case? Again, look at the data!

[Figure: scatter plot of $X_2$ against $X_1$ for the re-paired data, marking the sample centroid $(\bar{x}_1, \bar{x}_2)$ and the hypothesized centroid $(\mu_1, \mu_2)_0$.]

What do these data suggest about the relationship between $X_1$ and $X_2$?

What if we took the same values for variables $X_1$ and $X_2$ and changed their pairings so that their correlation was positive?

$x_{j1}$: 2.46, 5.76, 1.62, 4.03, 7.46, 9.42, 8.92, 6.68, 7.88, 6.61, 1.43, 4.47, 6.79, 2.97, 2.48
$x_{j2}$: -6.60, -3.56, -7.64, -5.00, -0.69, 2.87, 2.32, -1.87, 1.61, -3.24, -7.88, -3.97, -1.13, -5.20, -6.39

Again, the univariate test results would not change, but at a significance level of $\alpha = 0.10$, do these data now support the assertion that they were drawn from a population with centroid (4.0, -1.5)? In other words, retest the null hypothesis $H_0: \mu = [4.0,\ -1.5]'$ vs. $H_1: \mu \ne [4.0,\ -1.5]'$.

Since we are using the same significance level ($\alpha = 0.10$) and the degrees of freedom are unchanged, the critical value $\frac{(15-1)2}{15-2}F_{2,13}(0.10) = 5.95$ and the decision rule are unchanged as well:

Decision rule – do not reject $H_0$ if $T^2 \le 5.95$; otherwise reject $H_0$.

However, we will again have to recalculate the test statistic:

$$\bar{x} = \begin{bmatrix}5.26\\ -3.09\end{bmatrix}, \quad \mu_0 = \begin{bmatrix}4.0\\ -1.5\end{bmatrix}, \quad S = \begin{bmatrix}7.12 & 9.16\\ 9.16 & 12.43\end{bmatrix}, \quad S^{-1} = \begin{bmatrix}2.7407 & -2.0206\\ -2.0206 & 1.5701\end{bmatrix},$$

so

$$T^2 = 15\begin{bmatrix}5.26-4.0\\ -3.09+1.5\end{bmatrix}'\begin{bmatrix}7.12 & 9.16\\ 9.16 & 12.43\end{bmatrix}^{-1}\begin{bmatrix}5.26-4.0\\ -3.09+1.5\end{bmatrix} = 15\begin{bmatrix}1.26 & -1.59\end{bmatrix}\begin{bmatrix}2.7407 & -2.0206\\ -2.0206 & 1.5701\end{bmatrix}\begin{bmatrix}1.26\\ -1.59\end{bmatrix} = 247.470.$$

- Use the Decision Rule to Evaluate the Test Statistic and Decide Whether to Reject or Not Reject the Null Hypothesis: $T^2 = 247.470 > 5.95$, so reject $H_0$. The sample evidence supports the claim that the mean vector differs from $\mu_0 = [4.0,\ -1.5]'$.

Why is our decision so radically different? Again, look at the data!

[Figure: scatter plot of $X_2$ against $X_1$ for the positively correlated pairing, marking the sample centroid $(\bar{x}_1, \bar{x}_2)$ and the hypothesized centroid $(\mu_1, \mu_2)_0$.]

What do these data suggest about the relationship between $X_1$ and $X_2$?

C. Hotelling's $T^2$ and Likelihood Ratio Tests

Likelihood Ratio Method – a general principle for constructing test procedures which
- have several optimal properties for large samples, and
- are particularly convenient for testing multivariate hypotheses.

Recall that the maximum of the multivariate normal likelihood (over possible values of $\mu$ and $\Sigma$) is given by

$$\max_{\mu,\Sigma}L(\mu,\Sigma) = \frac{1}{(2\pi)^{np/2}\,|\hat{\Sigma}|^{n/2}}\,e^{-np/2},$$

where

$$\hat{\mu} = \bar{X} = \frac{1}{n}\sum_{j=1}^{n}X_j \qquad \text{and} \qquad \hat{\Sigma} = \frac{1}{n}\sum_{j=1}^{n}(X_j-\bar{X})(X_j-\bar{X})' = \frac{n-1}{n}S.$$

Under $H_0: \mu = \mu_0$, the normal likelihood specializes to

$$L(\mu_0,\Sigma) = \frac{1}{(2\pi)^{np/2}\,|\Sigma|^{n/2}}\exp\left(-\frac{1}{2}\sum_{j=1}^{n}(x_j-\mu_0)'\Sigma^{-1}(x_j-\mu_0)\right).$$

By an earlier result (each quadratic form equals its own trace, and $\mathrm{tr}(AB) = \mathrm{tr}(BA)$), we can rewrite the exponent in $L(\mu_0,\Sigma)$, which yields

$$L(\mu_0,\Sigma) = \frac{1}{(2\pi)^{np/2}\,|\Sigma|^{n/2}}\exp\left(-\frac{1}{2}\,\mathrm{tr}\left[\Sigma^{-1}\sum_{j=1}^{n}(x_j-\mu_0)(x_j-\mu_0)'\right]\right).$$

Recall our earlier result: for a $p \times p$ symmetric positive definite matrix $B$ and scalar $b > 0$, it follows that

$$\frac{1}{|\Sigma|^{b}}\,e^{-\mathrm{tr}(\Sigma^{-1}B)/2} \le \frac{1}{|B|^{b}}\,(2b)^{pb}\,e^{-bp}$$

for all positive definite $\Sigma$ of dimension $p \times p$, with equality holding only for $\Sigma = \frac{1}{2b}B$.

If we apply this result with

$$B = \sum_{j=1}^{n}(x_j-\mu_0)(x_j-\mu_0)' \qquad \text{and} \qquad b = \frac{n}{2},$$

we have

$$\max_{\Sigma}L(\mu_0,\Sigma) = \frac{1}{(2\pi)^{np/2}\,|\hat{\Sigma}_0|^{n/2}}\,e^{-np/2}, \qquad \text{with} \qquad \hat{\Sigma}_0 = \frac{1}{n}\sum_{j=1}^{n}(x_j-\mu_0)(x_j-\mu_0)'.$$

Now we can compare the maximum of $L(\mu_0,\Sigma)$ with the unrestricted maximum of $L(\mu,\Sigma)$ to determine the plausibility of the null hypothesis. This ratio is called the Likelihood Ratio Statistic:

$$\text{Likelihood Ratio} = \Lambda = \frac{\max_{\Sigma}L(\mu_0,\Sigma)}{\max_{\mu,\Sigma}L(\mu,\Sigma)} = \frac{(2\pi)^{-np/2}\,|\hat{\Sigma}_0|^{-n/2}\,e^{-np/2}}{(2\pi)^{-np/2}\,|\hat{\Sigma}|^{-n/2}\,e^{-np/2}} = \left(\frac{|\hat{\Sigma}|}{|\hat{\Sigma}_0|}\right)^{n/2},$$

or equivalently

$$\Lambda^{2/n} = \frac{|\hat{\Sigma}|}{|\hat{\Sigma}_0|} = \frac{\left|\frac{1}{n}\sum_{j=1}^{n}(x_j-\bar{x})(x_j-\bar{x})'\right|}{\left|\frac{1}{n}\sum_{j=1}^{n}(x_j-\mu_0)(x_j-\mu_0)'\right|} = \frac{\left|\sum_{j=1}^{n}(x_j-\bar{x})(x_j-\bar{x})'\right|}{\left|\sum_{j=1}^{n}(x_j-\mu_0)(x_j-\mu_0)'\right|},$$

which is called Wilks' lambda.

Note that a small value of Wilks' lambda suggests that the null hypothesis $H_0: \mu = \mu_0$ is not likely to be true (and leads to rejection); that is, reject $H_0$ if

$$\Lambda^{2/n} = \frac{|\hat{\Sigma}|}{|\hat{\Sigma}_0|} = \frac{\left|\sum_{j=1}^{n}(x_j-\bar{x})(x_j-\bar{x})'\right|}{\left|\sum_{j=1}^{n}(x_j-\mu_0)(x_j-\mu_0)'\right|} < c_{\alpha},$$

where $c_{\alpha}$ is the lower $(100\alpha)$th percentile of the distribution of $\Lambda^{2/n}$.
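Because the $1/n$ factors cancel, $\Lambda^{2/n}$ can be computed directly from the two matrices of sums of squares and cross-products: one about $\bar{x}$ and one about $\mu_0$. A minimal standard-library Python sketch for the original bivariate example with $\mu_0 = (4.0, -1.5)$ follows; the helper names are ours, and the closed-form $2\times2$ determinant restricts it to $p = 2$.

```python
def scatter_2d(x1, x2, c1, c2):
    """Entries (a11, a12, a22) of sum_j (x_j - c)(x_j - c)' about the point c = (c1, c2)."""
    a11 = sum((a - c1) ** 2 for a in x1)
    a22 = sum((b - c2) ** 2 for b in x2)
    a12 = sum((a - c1) * (b - c2) for a, b in zip(x1, x2))
    return a11, a12, a22


def det_2d(a11, a12, a22):
    """Determinant of the symmetric 2x2 matrix [[a11, a12], [a12, a22]]."""
    return a11 * a22 - a12 * a12


def wilks_lambda_2d(x1, x2, mu0):
    """Lambda^(2/n) = |sum (x_j - x̄)(x_j - x̄)'| / |sum (x_j - μ0)(x_j - μ0)'|."""
    n = len(x1)
    xbar = (sum(x1) / n, sum(x2) / n)
    about_mean = scatter_2d(x1, x2, *xbar)
    about_mu0 = scatter_2d(x1, x2, *mu0)
    return det_2d(*about_mean) / det_2d(*about_mu0)


x1 = [1.43, 1.62, 2.46, 2.48, 2.97, 4.03, 4.47, 5.76,
      6.61, 6.68, 6.79, 7.46, 7.88, 8.92, 9.42]
x2 = [-0.69, -5.00, -1.13, -5.20, -6.39, 2.87, -7.88, -3.97,
      2.32, -3.24, -3.56, 1.61, -1.87, -6.60, -7.64]

lam = wilks_lambda_2d(x1, x2, (4.0, -1.5))
print(f"Lambda^(2/n) = {lam:.4f}")
```

For these data the ratio is about 0.7011. Since $\bar{x}$ minimizes the scatter about a point, the ratio always lies between 0 and 1.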
But what is the distribution of Wilks' lambda? There is a relationship between $T^2$ and $\Lambda$ that can help us here: suppose $X_1, \dots, X_n$ is a random sample from an $N_p(\mu, \Sigma)$ population. Then the test based on $T^2$ is equivalent to the likelihood ratio test of $H_0: \mu = \mu_0$ because

$$\Lambda^{2/n} = \frac{|\hat{\Sigma}|}{|\hat{\Sigma}_0|} = \left(1 + \frac{T^2}{n-1}\right)^{-1}.$$

We can substitute the appropriate critical value of $T^2$ into this expression to find the critical likelihood ratio value. The relationship also has the advantage of demonstrating that $T^2$ can be calculated as a ratio of two determinants (avoiding the need to calculate $S^{-1}$). Solving for $T^2$ yields

$$T^2 = \frac{(n-1)\,|\hat{\Sigma}_0|}{|\hat{\Sigma}|} - (n-1) = \frac{(n-1)\left|\sum_{j=1}^{n}(x_j-\mu_0)(x_j-\mu_0)'\right|}{\left|\sum_{j=1}^{n}(x_j-\bar{x})(x_j-\bar{x})'\right|} - (n-1).$$

Example: for our previous bivariate data, perform the likelihood ratio test of the hypothesis that the centroid is (4.0, -1.5):

$x_{j1}$: 1.43, 1.62, 2.46, 2.48, 2.97, 4.03, 4.47, 5.76, 6.61, 6.68, 6.79, 7.46, 7.88, 8.92, 9.42
$x_{j2}$: -0.69, -5.00, -1.13, -5.20, -6.39, 2.87, -7.88, -3.97, 2.32, -3.24, -3.56, 1.61, -1.87, -6.60, -7.64

In our previous test of the null hypothesis $H_0: \mu = [4.0,\ -1.5]'$ vs. $H_1: \mu \ne [4.0,\ -1.5]'$, we obtained a Hotelling's $T^2$ test statistic value of 5.970 and a critical value (at $\alpha = 0.10$) of 5.95. Let's go through the five steps of hypothesis testing to assess the potential validity of our assertion.
- State the Null and Alternative Hypotheses: $H_0: \mu = [4.0,\ -1.5]'$, $H_1: \mu \ne [4.0,\ -1.5]'$.
- Select the Appropriate Test Statistic: $n - p = 15 - 2 = 13$ is not very large, but the data appear relatively bivariate normal, so use

  $$\Lambda^{2/n} = \frac{|\hat{\Sigma}|}{|\hat{\Sigma}_0|} = \left(1 + \frac{T^2}{n-1}\right)^{-1}.$$
- State the Desired Level of Significance $\alpha$, Find the Critical Value(s), and State the Decision Rule: $\alpha = 0.10$ with $\nu_1 = p = 2$ and $\nu_2 = n - p = 13$ degrees of freedom, so $F_{2,13}(0.10) = 2.76$.

  [Figure: $F_{2,13}$ density with the 90% do-not-reject region below $F_{2,13}(0.10) = 2.76$ and the reject region of area $\alpha = 0.10$ above it.]

  But we don't yet have a decision rule, since $T^2 \sim \frac{(n-1)p}{n-p}F_{p,n-p}$. Thus our critical $T^2$ value is

  $$\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha) = \frac{(15-1)2}{15-2}F_{2,15-2}(0.10) = 5.95.$$

  This leads to a critical likelihood ratio value of

  $$\left(1 + \frac{T^2}{n-1}\right)^{-1} = \left(1 + \frac{5.95}{15-1}\right)^{-1} = 1.425^{-1} = 0.7018.$$

  Decision rule – do not reject $H_0$ if $\Lambda^{2/n} \ge 0.7018$; otherwise reject $H_0$.
- Calculate the Test Statistic: from our earlier results we have $T^2 = 5.970$, so the calculated value of the likelihood ratio test statistic for this sample is

  $$\Lambda^{2/n} = \left(1 + \frac{T^2}{n-1}\right)^{-1} = \left(1 + \frac{5.970}{15-1}\right)^{-1} = 1.426^{-1} = 0.7011.$$
- Use the Decision Rule to Evaluate the Test Statistic and Decide Whether to Reject or Not Reject the Null Hypothesis: $\Lambda^{2/n} = 0.7011 < 0.7018$, so reject $H_0$. The sample evidence supports the claim that the mean vector differs from $\mu_0 = [4.0,\ -1.5]'$.

SAS code for a Hypothesis Test of a Mean Vector:

```sas
OPTIONS LINESIZE = 72 NODATE PAGENO = 1;

/* Read the (x1, x2) pairs and form differences from the hypothesized mean vector (4.0, -1.5) */
DATA stuff;
  INPUT x1 x2;
  x1o = 4.0;
  x2o = -1.5;
  x1dif = x1 - x1o;
  x2dif = x2 - x2o;
  LABEL x1    = 'Observed Values of X1'
        x2    = 'Observed Values of X2'
        x1o   = 'Hypothesized Value of X1'
        x2o   = 'Hypothesized Value of X2'
        x1dif = 'Difference Between Observed and Hypothesized Values of X1'
        x2dif = 'Difference Between Observed and Hypothesized Values of X2';
  /* The data lines are the fifteen (x1, x2) pairs listed above; the middle rows are elided here */
CARDS;
1.43 -0.69
. . .
9.42 -7.64
;

/* Univariate t tests: x1dif and x2dif test H0: mu1 = 4.0 and H0: mu2 = -1.5 */
PROC MEANS DATA=stuff N MEAN STD T PRT;
  VAR x1 x2 x1dif x2dif;
  TITLE4 'Using PROC MEANS to generate univariate summary statistics';
RUN;

/* Sample covariance (COV) and correlation matrices */
PROC CORR DATA=stuff COV;
  VAR x1 x2;
  TITLE4 'Using PROC CORR to generate the sample covariance matrix';
RUN;

/* Intercept-only MANOVA on the differences tests H0: mu = mu0 */
PROC GLM DATA=stuff;
  MODEL x1dif x2dif = / NOUNI;
  MANOVA H=INTERCEPT;
  TITLE4 'Using PROC GLM to test a Hypothesis of a Mean Vector';
RUN;
QUIT;
```

SAS output for Univariate Hypothesis Tests of Means (The MEANS Procedure):

| Variable | Label | N | Mean | Std Dev | t Value | Pr > \|t\| |
|---|---|---|---|---|---|---|
| x1 | Observed Values of X1 | 15 | 5.2653333 | 2.6686403 | 7.64 | <.0001 |
| x2 | Observed Values of X2 | 15 | -3.0913333 | 3.5248543 | -3.40 | 0.0043 |
| x1dif | Difference Between Observed and Hypothesized Values of X1 | 15 | 1.2653333 | 2.6686403 | 1.84 | 0.0876 |
| x2dif | Difference Between Observed and Hypothesized Values of X2 | 15 | -1.5913333 | 3.5248543 | -1.75 | 0.1023 |

SAS output for the Sample Covariance and Correlation Matrices (The CORR Procedure; Variables: x1 x2):

Covariance Matrix, DF = 14

| | x1 | x2 |
|---|---|---|
| x1 (Observed Values of X1) | 7.12164095 | -0.72558524 |
| x2 (Observed Values of X2) | -0.72558524 | 12.42459810 |

Simple Statistics

| Variable | N | Mean | Std Dev | Sum | Minimum | Maximum | Label |
|---|---|---|---|---|---|---|---|
| x1 | 15 | 5.26533 | 2.66864 | 78.98000 | 1.43000 | 9.42000 | Observed Values of X1 |
| x2 | 15 | -3.09133 | 3.52485 | -46.37000 | -7.88000 | 2.87000 | Observed Values of X2 |

Pearson Correlation Coefficients, N = 15 (Prob > \|r\| under H0: Rho=0 in parentheses)

| | x1 | x2 |
|---|---|---|
| x1 (Observed Values of X1) | 1.00000 | -0.07714 (0.7847) |
| x2 (Observed Values of X2) | -0.07714 (0.7847) | 1.00000 |

SAS output for a Hypothesis Test of a Mean Vector (The GLM Procedure, Multivariate Analysis of Variance):

Characteristic Roots and Vectors of: E Inverse * H, where H = Type III SSCP Matrix for Intercept and E = Error SSCP Matrix

| Characteristic Root | Percent | Characteristic Vector (V'EV=1): x1dif | x2dif |
|---|---|---|---|
| 0.42640573 | 100.00 | 0.07016070 | -0.05016332 |
| 0.00000000 | 0.00 | 0.07188395 | 0.05715782 |

MANOVA Test Criteria and Exact F Statistics for the Hypothesis of No Overall Intercept Effect (H = Type III SSCP Matrix for Intercept, E = Error SSCP Matrix; S=1, M=0, N=5.5)

| Statistic | Value | F Value | Num DF | Den DF | Pr > F |
|---|---|---|---|---|---|
| Wilks' Lambda | 0.70106280 | 2.77 | 2 | 13 | 0.0994 |
| Pillai's Trace | 0.29893720 | 2.77 | 2 | 13 | 0.0994 |
| Hotelling-Lawley Trace | 0.42640573 | 2.77 | 2 | 13 | 0.0994 |
| Roy's Greatest Root | 0.42640573 | 2.77 | 2 | 13 | 0.0994 |

Note again that $T^2 = (n-1)\dfrac{1-\Lambda^{2/n}}{\Lambda^{2/n}}$, where the Wilks' Lambda reported by SAS is $\Lambda^{2/n}$; here $14(1-0.70106280)/0.70106280 = 5.970$.

The previous test was a specific example of the application of the General Likelihood Ratio Method. Let $\theta$ be a vector consisting of all the unknown parameters, taking values in some parameter set $\Theta$ (i.e., $\theta \in \Theta$). For example, in the $p$-dimensional multivariate normal case, $\theta = [\mu_1, \dots, \mu_p;\ \sigma_{11}, \dots, \sigma_{1p};\ \sigma_{21}, \dots, \sigma_{2p};\ \dots;\ \sigma_{p1}, \dots, \sigma_{pp}]$ (with $\sigma_{ik} = \sigma_{ki}$), and $\Theta$ consists of the $p$-dimensional space where $-\infty < \mu_i < \infty$, $i = 1, \dots, p$, combined with the $0.5p(p+1)$-dimensional space of variances and covariances such that $\Sigma$ is positive definite. Thus $\Theta$ has dimension $\nu = p + 0.5p(p+1)$.

Also let $L(\theta)$ be the likelihood function obtained by evaluating the joint density of $X_1, \dots, X_n$ at their observed values $x_1, \dots, x_n$.

Now under the null hypothesis $H_0: \theta \in \Theta_0$, $\theta$ is restricted to lie in some region $\Theta_0 \subset \Theta$. For example, in the $p$-dimensional multivariate normal case with $\mu = \mu_0$ and $\Sigma$ unspecified, we have

$$\Theta_0 = \left\{\mu_1 = \mu_{10}, \dots, \mu_p = \mu_{p0};\ \sigma_{11}, \dots, \sigma_{1p}, \dots, \sigma_{p1}, \dots, \sigma_{pp} : \Sigma \text{ is positive definite}\right\},$$

so $\Theta_0$ has dimension $\nu_0 = 0 + 0.5p(p+1) = 0.5p(p+1)$.

Now a likelihood ratio test rejects $H_0: \theta \in \Theta_0$ in favor of $H_1: \theta \notin \Theta_0$ when

$$\text{Likelihood Ratio} = \Lambda = \frac{\max_{\theta \in \Theta_0}L(\theta)}{\max_{\theta \in \Theta}L(\theta)} < c$$

for a suitably chosen constant $c$ (some percentile of the distribution of $\Lambda$).
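As a numerical illustration of the large-sample calibration of $-2\ln\Lambda$ stated next (a $\chi^2$ distribution with $\nu - \nu_0$ degrees of freedom), here is a minimal standard-library Python sketch for the bivariate example. For the mean-vector hypothesis $\nu - \nu_0 = p = 2$, and for exactly 2 degrees of freedom the upper tail has the closed form $P(\chi^2_2 > x) = e^{-x/2}$, so the critical value is $-2\ln\alpha$. This shortcut is specific to 2 df and is our choice to avoid third-party libraries. Since $\Lambda = (\Lambda^{2/n})^{n/2}$, we have $-2\ln\Lambda = -n\ln\Lambda^{2/n}$.

```python
import math


def wilks_lambda_2d(x1, x2, mu0):
    """Lambda^(2/n): ratio of scatter-matrix determinants about x̄ and about μ0 (p = 2)."""
    n = len(x1)
    m1, m2 = sum(x1) / n, sum(x2) / n

    def det_scatter(c1, c2):
        a11 = sum((a - c1) ** 2 for a in x1)
        a22 = sum((b - c2) ** 2 for b in x2)
        a12 = sum((a - c1) * (b - c2) for a, b in zip(x1, x2))
        return a11 * a22 - a12 * a12

    return det_scatter(m1, m2) / det_scatter(*mu0)


x1 = [1.43, 1.62, 2.46, 2.48, 2.97, 4.03, 4.47, 5.76,
      6.61, 6.68, 6.79, 7.46, 7.88, 8.92, 9.42]
x2 = [-0.69, -5.00, -1.13, -5.20, -6.39, 2.87, -7.88, -3.97,
      2.32, -3.24, -3.56, 1.61, -1.87, -6.60, -7.64]

n, p, alpha = len(x1), 2, 0.10
nu = p + p * (p + 1) // 2    # free parameters in the full model
nu0 = p * (p + 1) // 2       # free parameters once μ = μ0 is fixed
df = nu - nu0                # equals p

lam_2n = wilks_lambda_2d(x1, x2, (4.0, -1.5))
stat = -n * math.log(lam_2n)  # -2 ln Λ

assert df == 2                # the closed-form tail below is valid only for 2 df
chi2_crit = -2 * math.log(alpha)
p_value = math.exp(-stat / 2)
print(f"-2 ln Lambda = {stat:.3f}, critical = {chi2_crit:.3f}, approx p = {p_value:.4f}")
```

The approximation also rejects at $\alpha = 0.10$ (approximate $p \approx 0.070$), while the exact $F$-based test in the SAS output gives $p = 0.0994$. With $n = 15$, the $\chi^2$ approximation is somewhat liberal here.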
Conveniently, for a relatively large sample size $n$, under the null hypothesis $H_0: \theta \in \Theta_0$,

$$-2\ln\Lambda = -2\ln\left(\frac{\max_{\theta \in \Theta_0}L(\theta)}{\max_{\theta \in \Theta}L(\theta)}\right) \quad \text{is approximately distributed as} \quad \chi^2_{\nu-\nu_0}.$$

D. Confidence Regions and Simultaneous Comparisons of Component Means

To extend the concept of a univariate $100(1-\alpha)\%$ confidence interval for a parameter $\theta$,

$$P\left[\hat{\theta} - \varepsilon \le \theta \le \hat{\theta} + \varepsilon\right] = P\left[\,|\hat{\theta} - \theta| \le \varepsilon\,\right] = 1 - \alpha,$$

to a $p$-dimensional multivariate space, let $\theta$ be a vector of unknown population parameters such that $\theta \in \Theta$. The $100(1-\alpha)\%$ Confidence Region determined by the data $X = [X_1, X_2, \dots, X_n]'$, denoted $R(X)$, is the region satisfying

$$P\left[\theta \in R(X)\right] = 1 - \alpha.$$

For example, a univariate $100(1-\alpha)\%$ confidence interval for the mean may look like this:

$$P\left[\bar{x} - t_{\alpha/2}s_{\bar{x}} \le \mu \le \bar{x} + t_{\alpha/2}s_{\bar{x}}\right] = 1 - \alpha.$$

A corresponding confidence region in $p$-dimensional multivariate space is given by

$$P\left[n(\bar{x}-\mu)'S^{-1}(\bar{x}-\mu) \le \frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)\right] = 1 - \alpha.$$

Equivalently, the squared distance from $\bar{x}$ to $\mu$ is at most $\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)$ with probability $1-\alpha$, provided distance is measured with the matrix $nS^{-1}$.

More formally, a $100(1-\alpha)\%$ confidence region for the mean vector $\mu$ of a $p$-dimensional normal distribution is the ellipsoid determined by all possible points $\mu$ that satisfy

$$n(\bar{x}-\mu)'S^{-1}(\bar{x}-\mu) \le \frac{(n-1)p}{n-p}F_{p,n-p}(\alpha),$$

where

$$\bar{x} = \frac{1}{n}\sum_{j=1}^{n}x_j, \qquad S = \frac{1}{n-1}\sum_{j=1}^{n}(x_j-\bar{x})(x_j-\bar{x})',$$

and $X = [x_1, x_2, \dots, x_n]$ are the sample observations.

Example: Use our original bivariate sample to construct a two-dimensional 90% confidence region and determine whether the point (4.0, -1.5) lies in this region.
$x_{j1}$: 1.43, 1.62, 2.46, 2.48, 2.97, 4.03, 4.47, 5.76, 6.61, 6.68, 6.79, 7.46, 7.88, 8.92, 9.42
$x_{j2}$: -0.69, -5.00, -1.13, -5.20, -6.39, 2.87, -7.88, -3.97, 2.32, -3.24, -3.56, 1.61, -1.87, -6.60, -7.64

We have already calculated the summary statistics:

$$\bar{x} = \begin{bmatrix}5.26\\ -3.09\end{bmatrix}, \quad \mu_0 = \begin{bmatrix}4.0\\ -1.5\end{bmatrix}, \quad S = \begin{bmatrix}7.12 & -0.72\\ -0.72 & 12.43\end{bmatrix}, \quad S^{-1} = \begin{bmatrix}0.1412 & 0.0082\\ 0.0082 & 0.0809\end{bmatrix},$$

and $\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha) = 5.95$.

So a 90% confidence region for the mean vector $\mu$ is the ellipse determined by all possible points $\mu$ that satisfy $n(\bar{x}-\mu)'S^{-1}(\bar{x}-\mu) \le \frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)$, i.e.,

$$15\begin{bmatrix}5.26-\mu_1\\ -3.09-\mu_2\end{bmatrix}'\begin{bmatrix}0.1412 & 0.0082\\ 0.0082 & 0.0809\end{bmatrix}\begin{bmatrix}5.26-\mu_1\\ -3.09-\mu_2\end{bmatrix} \le 5.95.$$

So does $\mu = [4.0,\ -1.5]'$ fall inside this 90% confidence region? We have that

$$15\begin{bmatrix}5.26-4.0\\ -3.09-(-1.5)\end{bmatrix}'\begin{bmatrix}0.1412 & 0.0082\\ 0.0082 & 0.0809\end{bmatrix}\begin{bmatrix}5.26-4.0\\ -3.09-(-1.5)\end{bmatrix} = 5.970 > 5.95$$

(again using the unrounded summary statistics), so it does not fall inside the 90% confidence region!

Does the point (-1.5, 4.0) lie in this region? We have that

$$15\begin{bmatrix}5.26-(-1.5)\\ -3.09-4.0\end{bmatrix}'\begin{bmatrix}0.1412 & 0.0082\\ 0.0082 & 0.0809\end{bmatrix}\begin{bmatrix}5.26-(-1.5)\\ -3.09-4.0\end{bmatrix} = 146.201 > 5.95.$$

This point falls FAR outside the 90% confidence region!

We can draw the axes of the ellipsoid that represents the $100(1-\alpha)\%$ confidence region for the mean vector $\mu$, i.e., the ellipsoid determined by all possible points $\mu$ that satisfy

$$n(\bar{x}-\mu)'S^{-1}(\bar{x}-\mu) \le c^2 = \frac{(n-1)p}{n-p}F_{p,n-p}(\alpha).$$

For our ongoing problem at the 90% level of confidence, this is equivalent to

$$15\begin{bmatrix}5.26-\mu_1\\ -3.09-\mu_2\end{bmatrix}'\begin{bmatrix}0.1412 & 0.0082\\ 0.0082 & 0.0809\end{bmatrix}\begin{bmatrix}5.26-\mu_1\\ -3.09-\mu_2\end{bmatrix} \le 5.95.$$

Recall that the directions and relative lengths of the axes of this confidence ellipsoid are determined by going

$$\frac{\sqrt{\lambda_i}\,c}{\sqrt{n}} = \sqrt{\lambda_i}\sqrt{\frac{(n-1)p}{n(n-p)}F_{p,n-p}(\alpha)}$$

units along the corresponding eigenvectors $e_i$, where $(\lambda_i, e_i)$ are the eigenvalue-eigenvector pairs of $S$. Beginning at the centroid $\bar{x}$, the axes of the confidence ellipsoid are

$$\pm\sqrt{\lambda_i}\sqrt{\frac{(n-1)p}{n(n-p)}F_{p,n-p}(\alpha)}\;e_i.$$

Note that the ratios of the $\lambda_i$'s aid in identifying the relative amounts of elongation along pairs of axes.
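Both the membership check and the axes of the 90% ellipse can be reproduced with a minimal standard-library Python sketch. It assumes $F_{2,13}(0.10) = 2.76$ from the text (so $c^2 = 28/13 \times 2.76 \approx 5.94$) and uses the closed-form eigen-decomposition of a symmetric $2\times2$ matrix. That closed form is our choice for a dependency-free example; general $p$ would need a numerical eigensolver.

```python
import math

x1 = [1.43, 1.62, 2.46, 2.48, 2.97, 4.03, 4.47, 5.76,
      6.61, 6.68, 6.79, 7.46, 7.88, 8.92, 9.42]
x2 = [-0.69, -5.00, -1.13, -5.20, -6.39, 2.87, -7.88, -3.97,
      2.32, -3.24, -3.56, 1.61, -1.87, -6.60, -7.64]
n, p = len(x1), 2
c2 = (n - 1) * p / (n - p) * 2.76   # c^2 = (n-1)p/(n-p) F_{2,13}(0.10)

xbar = (sum(x1) / n, sum(x2) / n)
s11 = sum((a - xbar[0]) ** 2 for a in x1) / (n - 1)
s22 = sum((b - xbar[1]) ** 2 for b in x2) / (n - 1)
s12 = sum((a - xbar[0]) * (b - xbar[1]) for a, b in zip(x1, x2)) / (n - 1)
det = s11 * s22 - s12 ** 2


def distance2(mu):
    """n (x̄ - μ)' S^{-1} (x̄ - μ) for a candidate mean vector μ."""
    d1, d2 = xbar[0] - mu[0], xbar[1] - mu[1]
    return n * (s22 * d1 ** 2 - 2 * s12 * d1 * d2 + s11 * d2 ** 2) / det


for mu in [(4.0, -1.5), (-1.5, 4.0)]:
    inside = distance2(mu) <= c2
    print(mu, f"{distance2(mu):.3f}", "inside" if inside else "outside")

# Eigenvalues of the symmetric 2x2 matrix S, largest first
half_gap = math.sqrt(((s11 - s22) / 2) ** 2 + s12 ** 2)
lam1 = (s11 + s22) / 2 + half_gap
lam2 = (s11 + s22) / 2 - half_gap
# Unit eigenvector for lam1 (valid because s12 != 0); e2 is perpendicular to e1
v = (s12, lam1 - s11)
norm = math.hypot(*v)
e1 = (v[0] / norm, v[1] / norm)
e2 = (e1[1], -e1[0])
# Half-lengths of the axes: sqrt(lambda_i) * c / sqrt(n)
half1 = math.sqrt(lam1 * c2 / n)
half2 = math.sqrt(lam2 * c2 / n)
print(f"lambda = {lam1:.3f}, {lam2:.3f}; e1 = {e1}; e2 = {e2}")
print(f"half-lengths = {half1:.3f}, {half2:.3f}")
```

The sketch reports both points as outside the region. It recovers $\lambda_1 \approx 12.522$ and $\lambda_2 \approx 7.024$ with $e_1 \approx (-0.133, 0.991)$, and gives axis half-lengths of about 2.23 and 1.67.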
Example: We can draw the 90% confidence region for the mean vector $\mu$. The eigenvalue-eigenvector pairs $(\lambda_i, e_i)$ for the sample covariance matrix $S$ are

$$\lambda_1 = 12.522, \quad e_1 = \begin{bmatrix}-0.13316\\ 0.99109\end{bmatrix}; \qquad \lambda_2 = 7.024, \quad e_2 = \begin{bmatrix}0.99109\\ 0.13316\end{bmatrix},$$

so the half-lengths of the major and minor axes are given by

$$\sqrt{\lambda_1}\sqrt{\frac{(n-1)p}{n(n-p)}F_{p,n-p}(\alpha)} = \sqrt{12.522}\sqrt{\frac{5.95}{15}} \approx 2.23,$$

$$\sqrt{\lambda_2}\sqrt{\frac{(n-1)p}{n(n-p)}F_{p,n-p}(\alpha)} = \sqrt{7.024}\sqrt{\frac{5.95}{15}} \approx 1.67,$$

since $\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha) = \frac{(15-1)2}{15-2}F_{2,13}(0.10) = 5.95$.

The axes lie along the corresponding eigenvectors $e_1$ and $e_2$. Plot these vectors with the sample centroid $\bar{x}$ as the origin, then move 2.23 units along $e_1$ and 1.67 units along $e_2$.

[Figure: the eigenvectors $e_1$ and $e_2$ drawn from the sample centroid $(\bar{x}_1, \bar{x}_2)$, and the resulting 90% confidence ellipse.]

We can also project the ellipse onto the axes to form simultaneous confidence intervals.

[Figure: the 90% confidence ellipse with its shadows (projections) on the $X_1$ axis and on the $X_2$ axis.]

Simultaneous Confidence Statements – The joint confidence region $n(\bar{x}-\mu)'S^{-1}(\bar{x}-\mu) \le c^2$ for some constant $c$ correctly assesses the plausibility of various values of $\mu$. However, such regions are somewhat awkward to use. We may want confidence statements about individual components of the mean vector $\mu$ that hold simultaneously with a reasonably high level of confidence. Such intervals are referred to as Simultaneous Confidence Intervals. One approach to constructing these intervals is to relate them to the $T^2$-based confidence region.
To start, suppose $X \sim N_p(\mu, \Sigma)$ and form the linear combination

$$Z = a_1X_1 + \cdots + a_pX_p = a'X = \sum_{i=1}^{p}a_iX_i.$$

From earlier results we have that

$$\mu_Z = E(Z) = a_1\mu_1 + \cdots + a_p\mu_p = a'\mu = \sum_{i=1}^{p}a_i\mu_i \qquad \text{and} \qquad \sigma_Z^2 = \mathrm{Var}(Z) = a'\Sigma a,$$

so $Z \sim N(\mu_Z, \sigma_Z^2) = N(a'\mu,\ a'\Sigma a)$.

Now, if we take a sample $X_1, \dots, X_n$ from the $N_p(\mu, \Sigma)$ population and construct a corresponding sample of $Z$'s,

$$Z_j = a_1X_{j1} + \cdots + a_pX_{jp} = a'X_j = \sum_{i=1}^{p}a_iX_{ji},$$

the sample mean and variance of the observed values $z_1, \dots, z_n$ are

$$\bar{z} = a_1\bar{x}_1 + \cdots + a_p\bar{x}_p = a'\bar{x} = \sum_{i=1}^{p}a_i\bar{x}_i \qquad \text{and} \qquad s_z^2 = a'Sa.$$

We can now develop simultaneous confidence intervals by judicious choices of $a$. For a fixed $a$ and unknown $\sigma_Z^2$, a $100(1-\alpha)\%$ confidence interval for $\mu_Z = a'\mu$ is based on

$$t = \frac{\bar{z}-\mu_Z}{s_z/\sqrt{n}} = \frac{\sqrt{n}\,(a'\bar{x} - a'\mu)}{\sqrt{a'Sa}},$$

which leads to the statement

$$\bar{z} - t_{n-1}(\alpha/2)\frac{s_z}{\sqrt{n}} \le \mu_Z \le \bar{z} + t_{n-1}(\alpha/2)\frac{s_z}{\sqrt{n}},$$

which can be rewritten as

$$a'\bar{x} - t_{n-1}(\alpha/2)\frac{\sqrt{a'Sa}}{\sqrt{n}} \le a'\mu \le a'\bar{x} + t_{n-1}(\alpha/2)\frac{\sqrt{a'Sa}}{\sqrt{n}}.$$

Note that this inequality can be interpreted as a statement about the components of $\mu$. For example, taking $a$ to have a 1 in the $i$th position and zeros elsewhere yields the usual confidence interval for $\mu_i$. However, if we did so for $i = 1, \dots, p$, each at some fixed level of confidence $1-\alpha$, the confidence of all statements taken together (i.e., the experimentwise level of confidence) is not $1-\alpha$; it is generally lower!

Given a set of data $x_1, \dots, x_n$ and a particular $a$, the confidence interval is the set of all $a'\mu$ such that

$$|t| = \left|\frac{\sqrt{n}\,(a'\bar{x} - a'\mu)}{\sqrt{a'Sa}}\right| \le t_{n-1}(\alpha/2),$$

or equivalently

$$t^2 = \frac{n\,(a'\bar{x} - a'\mu)^2}{a'Sa} = \frac{n\,\left[a'(\bar{x}-\mu)\right]^2}{a'Sa} \le t_{n-1}^2(\alpha/2).$$

So a simultaneous confidence region is given by the set of all $a'\mu$ values such that $t^2$ is relatively small for all choices of $a$!
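The maximization result derived next can be checked numerically. It says $t^2$ is largest over all directions $a$ when $a \propto S^{-1}(\bar{x}-\mu)$, and that the maximum equals $T^2$. This minimal standard-library sketch uses the bivariate example with $\mu$ set to the hypothesized $(4.0, -1.5)$ purely for illustration. It sweeps unit vectors $a = (\cos\theta, \sin\theta)$ over a fine grid of angles and compares the largest $t^2$ found with the value at $a^* = S^{-1}(\bar{x}-\mu)$.

```python
import math

x1 = [1.43, 1.62, 2.46, 2.48, 2.97, 4.03, 4.47, 5.76,
      6.61, 6.68, 6.79, 7.46, 7.88, 8.92, 9.42]
x2 = [-0.69, -5.00, -1.13, -5.20, -6.39, 2.87, -7.88, -3.97,
      2.32, -3.24, -3.56, 1.61, -1.87, -6.60, -7.64]
n = len(x1)
mu = (4.0, -1.5)  # illustrative choice of μ

xbar = (sum(x1) / n, sum(x2) / n)
s11 = sum((a - xbar[0]) ** 2 for a in x1) / (n - 1)
s22 = sum((b - xbar[1]) ** 2 for b in x2) / (n - 1)
s12 = sum((a - xbar[0]) * (b - xbar[1]) for a, b in zip(x1, x2)) / (n - 1)
d = (xbar[0] - mu[0], xbar[1] - mu[1])


def t_squared(a):
    """t^2 = n (a'(x̄ - μ))^2 / (a'Sa) for the linear combination a'X."""
    num = (a[0] * d[0] + a[1] * d[1]) ** 2
    den = s11 * a[0] ** 2 + 2 * s12 * a[0] * a[1] + s22 * a[1] ** 2
    return n * num / den


det = s11 * s22 - s12 ** 2
a_star = ((s22 * d[0] - s12 * d[1]) / det,   # a* = S^{-1}(x̄ - μ)
          (-s12 * d[0] + s11 * d[1]) / det)
T2 = t_squared(a_star)                      # equals n (x̄ - μ)' S^{-1} (x̄ - μ)

# t^2(a) = t^2(-a), so angles in [0, π) cover every direction
grid = [math.pi * k / 20000 for k in range(20000)]
best = max(t_squared((math.cos(th), math.sin(th))) for th in grid)
print(f"T^2 = {T2:.4f}; largest t^2 on the grid = {best:.4f}")
```

No direction on the grid beats $a^*$, and the best grid direction comes within rounding of $T^2 \approx 5.970$.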
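Considering values of $\mathbf{a}$ for which $t^2 \le c^2$, it is natural to try to determine

$$\max_{\mathbf{a}} t^2 = \max_{\mathbf{a}} \frac{n\left[\mathbf{a}'(\bar{\mathbf{x}} - \boldsymbol{\mu})\right]^2}{\mathbf{a}'\mathbf{S}\mathbf{a}}.$$

Now by a previous maximization lemma: for a given $p \times 1$ vector $\mathbf{d}$, a $p \times p$ positive definite matrix $\mathbf{B}$, and an arbitrary nonzero vector $\mathbf{x}$,

$$\max_{\mathbf{x} \ne \mathbf{0}} \frac{(\mathbf{x}'\mathbf{d})^2}{\mathbf{x}'\mathbf{B}\mathbf{x}} = \mathbf{d}'\mathbf{B}^{-1}\mathbf{d},$$

with the maximum attained when $\mathbf{x} = c\mathbf{B}^{-1}\mathbf{d}$ for any constant $c \ne 0$. Now if we take $\mathbf{x} = \mathbf{a}$, $\mathbf{d} = (\bar{\mathbf{x}} - \boldsymbol{\mu})$, and $\mathbf{B} = \mathbf{S}$, we get

$$\max_{\mathbf{a}} t^2 = \max_{\mathbf{a}} \frac{n\left[\mathbf{a}'(\bar{\mathbf{x}} - \boldsymbol{\mu})\right]^2}{\mathbf{a}'\mathbf{S}\mathbf{a}} = n\max_{\mathbf{a}} \frac{\left[\mathbf{a}'(\bar{\mathbf{x}} - \boldsymbol{\mu})\right]^2}{\mathbf{a}'\mathbf{S}\mathbf{a}} = n(\bar{\mathbf{x}} - \boldsymbol{\mu})'\mathbf{S}^{-1}(\bar{\mathbf{x}} - \boldsymbol{\mu}) = T^2,$$

with the maximum occurring where $\mathbf{a} \propto \mathbf{S}^{-1}(\bar{\mathbf{x}} - \boldsymbol{\mu})$.

Thus, for a sample $\mathbf{X}_1, \ldots, \mathbf{X}_n$ from the $N_p(\boldsymbol{\mu}, \boldsymbol{\Sigma})$ population with positive definite $\boldsymbol{\Sigma}$, we have simultaneously for all $\mathbf{a}$ that the interval

$$\left(\mathbf{a}'\bar{\mathbf{X}} - \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{\mathbf{a}'\mathbf{S}\mathbf{a}}{n}},\ \ \mathbf{a}'\bar{\mathbf{X}} + \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{\mathbf{a}'\mathbf{S}\mathbf{a}}{n}}\right)$$

will contain $\mathbf{a}'\boldsymbol{\mu}$ with probability $1 - \alpha$. These are often referred to as $T^2$-intervals.

Note that the successive choices $\mathbf{a}' = [1\ 0\ 0 \cdots 0]$, $\mathbf{a}' = [0\ 1\ 0 \cdots 0]$, …, $\mathbf{a}' = [0\ 0\ 0 \cdots 1]$ for the $T^2$-intervals allow us to conclude that

$$\bar{x}_1 - \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{11}}{n}} \le \mu_1 \le \bar{x}_1 + \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{11}}{n}}$$

$$\bar{x}_2 - \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{22}}{n}} \le \mu_2 \le \bar{x}_2 + \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{22}}{n}}$$

$$\vdots$$

$$\bar{x}_p - \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{pp}}{n}} \le \mu_p \le \bar{x}_p + \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{pp}}{n}}$$

will all hold simultaneously with probability $1 - \alpha$.

…or that a judicious choice of $\mathbf{a}$ (such as $\mathbf{a}' = [1\ {-1}\ 0 \cdots 0]$) for the $T^2$-intervals allows us to examine special contrasts:

$$\bar{x}_1 - \bar{x}_2 - \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{11} - 2s_{12} + s_{22}}{n}} \le \mu_1 - \mu_2 \le \bar{x}_1 - \bar{x}_2 + \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{11} - 2s_{12} + s_{22}}{n}},$$

which again will hold simultaneously with probability $1 - \alpha$.

Example: Use our original bivariate sample to construct simultaneous 90% confidence intervals for the means of the two variables $X_1$ and $X_2$.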
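The maximization step can be checked numerically for $p = 2$. The sketch below assumes the summary statistics $\bar{\mathbf{x}}$, $\mathbf{S}$, and the hypothesized $\boldsymbol{\mu}_0$ from the worked bivariate example (with $\boldsymbol{\mu}_0$ in the role of $\boldsymbol{\mu}$). It scans unit vectors $\mathbf{a} = (\cos\theta, \sin\theta)'$, compares the largest $t^2$ found with $T^2 = n\mathbf{d}'\mathbf{S}^{-1}\mathbf{d}$ computed in closed form, and evaluates $t^2$ at $\mathbf{a} = \mathbf{S}^{-1}\mathbf{d}$. The grid search only illustrates the lemma; it is not a practical way to compute $T^2$, and the helper names are hypothetical.

```python
import math

# Summary statistics from the worked bivariate example (n = 15, p = 2).
n = 15
xbar = (5.26, -3.09)
mu0 = (4.0, -1.5)  # hypothesized mean vector, playing the role of mu
S = ((7.12, -0.72), (-0.72, 12.43))
d = (xbar[0] - mu0[0], xbar[1] - mu0[1])


def quad(u, M, v):
    """Bilinear form u' M v for 2-vectors and a 2 x 2 matrix."""
    return sum(u[i] * M[i][j] * v[j] for i in range(2) for j in range(2))


def t_squared(a):
    """Squared univariate t statistic for the linear combination a'X."""
    return n * (a[0] * d[0] + a[1] * d[1]) ** 2 / quad(a, S, a)


# Closed form T^2 = n d' S^{-1} d, using the explicit 2 x 2 inverse.
det = S[0][0] * S[1][1] - S[0][1] * S[1][0]
S_inv = ((S[1][1] / det, -S[0][1] / det),
         (-S[1][0] / det, S[0][0] / det))
T2 = n * quad(d, S_inv, d)

# Brute force over unit directions. t^2 does not depend on the length of a,
# and a and -a give the same value, so half a turn covers every direction.
steps = 20000
grid_max = max(t_squared((math.cos(k * math.pi / steps),
                          math.sin(k * math.pi / steps)))
               for k in range(steps))

# The lemma says the maximizer is proportional to S^{-1} d.
a_star = (S_inv[0][0] * d[0] + S_inv[0][1] * d[1],
          S_inv[1][0] * d[0] + S_inv[1][1] * d[1])

print(f"T^2 = {T2:.5f}, grid max = {grid_max:.5f}, t^2(a*) = {t_squared(a_star):.5f}")
```

No direction on the grid exceeds $T^2$, and the direction $\mathbf{S}^{-1}\mathbf{d}$ attains it, which is exactly what makes $T^2$ the right yardstick for "all choices of $\mathbf{a}$".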
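$x_{j1}$: 1.43, 1.62, 2.46, 2.48, 2.97, 4.03, 4.47, 5.76, 6.61, 6.68, 6.79, 7.46, 7.88, 8.92, 9.42

$x_{j2}$: −0.69, −5.00, −1.13, −5.20, −6.39, 2.87, −7.88, −3.97, 2.32, −3.24, −3.56, 1.61, −1.87, −6.60, −7.64

We have already calculated the summary statistics:

$$\bar{\mathbf{x}} = \begin{bmatrix} 5.26 \\ -3.09 \end{bmatrix}, \quad \boldsymbol{\mu}_0 = \begin{bmatrix} 4.0 \\ -1.5 \end{bmatrix}, \quad \mathbf{S} = \begin{bmatrix} 7.12 & -0.72 \\ -0.72 & 12.43 \end{bmatrix}, \quad \mathbf{S}^{-1} = \begin{bmatrix} 0.1412 & 0.0082 \\ 0.0082 & 0.0809 \end{bmatrix}$$

and $\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha) = 5.95$.

Our choices of $\mathbf{a}'$ are $[1\ 0]$ and $[0\ 1]$, which yield the $T^2$-intervals

$$\bar{x}_1 - \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{11}}{n}} \le \mu_1 \le \bar{x}_1 + \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{11}}{n}}$$

or

$$5.26 - \sqrt{5.95}\sqrt{\frac{7.12}{15}} \le \mu_1 \le 5.26 + \sqrt{5.95}\sqrt{\frac{7.12}{15}}$$

or $3.579 \le \mu_1 \le 6.941$, i.e., $\mu_1 = 5.26 \pm 1.681$, i.e., $\mu_1 \in (3.579, 6.941)$, and

$$\bar{x}_2 - \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{22}}{n}} \le \mu_2 \le \bar{x}_2 + \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{22}}{n}}$$

or

$$-3.09 - \sqrt{5.95}\sqrt{\frac{12.43}{15}} \le \mu_2 \le -3.09 + \sqrt{5.95}\sqrt{\frac{12.43}{15}}$$

or $-5.310 \le \mu_2 \le -0.870$, i.e., $\mu_2 = -3.09 \pm 2.220$, i.e., $\mu_2 \in (-5.310, -0.870)$, which will hold simultaneously with probability $1 - \alpha$.

Example: Use our original bivariate sample to construct simultaneous 90% confidence intervals for the sum of and the difference between the means of the two variables $X_1$ and $X_2$.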
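The arithmetic for the two component intervals can be reproduced with a short Python sketch. The helper `t2_interval` is hypothetical; it evaluates $\mathbf{a}'\bar{\mathbf{x}} \pm \sqrt{c^2}\sqrt{\mathbf{a}'\mathbf{S}\mathbf{a}/n}$ for any coefficient vector $\mathbf{a}$, with $c^2 = \frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)$ taken as the value 5.95 from the notes rather than computed from an F table.

```python
import math


def t2_interval(a, xbar, S, n, c2):
    """T^2-interval a'xbar +/- sqrt(c2) * sqrt(a'Sa / n) for the combination a'mu."""
    p = len(a)
    center = sum(a[i] * xbar[i] for i in range(p))
    aSa = sum(a[i] * S[i][j] * a[j] for i in range(p) for j in range(p))
    half = math.sqrt(c2) * math.sqrt(aSa / n)
    return center - half, center + half


xbar = [5.26, -3.09]
S = [[7.12, -0.72], [-0.72, 12.43]]
C2 = 5.95  # (n-1)p/(n-p) F_{2,13}(0.10), the value quoted in the notes

mu1_ci = t2_interval([1, 0], xbar, S, n=15, c2=C2)  # a' = [1 0]
mu2_ci = t2_interval([0, 1], xbar, S, n=15, c2=C2)  # a' = [0 1]
print(f"mu1: ({mu1_ci[0]:.3f}, {mu1_ci[1]:.3f})")
print(f"mu2: ({mu2_ci[0]:.3f}, {mu2_ci[1]:.3f})")
```

Passing other coefficient vectors gives $T^2$-intervals for contrasts with the same multiplier $\sqrt{5.95}$.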
Again, we will make use of our previously calculated summary statistics:

$$\bar{\mathbf{x}} = \begin{bmatrix} 5.26 \\ -3.09 \end{bmatrix}, \quad \mathbf{S} = \begin{bmatrix} 7.12 & -0.72 \\ -0.72 & 12.43 \end{bmatrix}, \quad \frac{(n-1)p}{n-p}F_{p,n-p}(\alpha) = 5.95.$$

Our choices of $\mathbf{a}'$ are $[1\ {-1}]$ and $[1\ 1]$. For $\mathbf{a}' = [1\ {-1}]$, $\mathbf{a}'\mathbf{S}\mathbf{a} = s_{11} - 2s_{12} + s_{22}$, so the $T^2$-interval is

$$\bar{x}_1 - \bar{x}_2 - \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{11} - 2s_{12} + s_{22}}{n}} \le \mu_1 - \mu_2 \le \bar{x}_1 - \bar{x}_2 + \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{11} - 2s_{12} + s_{22}}{n}}$$

or

$$5.26 - (-3.09) - \sqrt{5.95}\sqrt{\frac{7.12 - 2(-0.72) + 12.43}{15}} \le \mu_1 - \mu_2 \le 5.26 - (-3.09) + \sqrt{5.95}\sqrt{\frac{7.12 - 2(-0.72) + 12.43}{15}}$$

or $5.465 \le \mu_1 - \mu_2 \le 11.235$, i.e., $\mu_1 - \mu_2 = 8.35 \pm 2.885$, i.e., $\mu_1 - \mu_2 \in (5.465, 11.235)$.

For $\mathbf{a}' = [1\ 1]$, $\mathbf{a}'\mathbf{S}\mathbf{a} = s_{11} + 2s_{12} + s_{22}$, so

$$\bar{x}_1 + \bar{x}_2 - \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{11} + 2s_{12} + s_{22}}{n}} \le \mu_1 + \mu_2 \le \bar{x}_1 + \bar{x}_2 + \sqrt{\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha)}\sqrt{\frac{s_{11} + 2s_{12} + s_{22}}{n}}$$

or

$$5.26 + (-3.09) - \sqrt{5.95}\sqrt{\frac{7.12 + 2(-0.72) + 12.43}{15}} \le \mu_1 + \mu_2 \le 5.26 + (-3.09) + \sqrt{5.95}\sqrt{\frac{7.12 + 2(-0.72) + 12.43}{15}}$$

or $-0.510 \le \mu_1 + \mu_2 \le 4.850$, i.e., $\mu_1 + \mu_2 = 2.17 \pm 2.680$, i.e., $\mu_1 + \mu_2 \in (-0.510, 4.850)$,

which again will hold simultaneously with probability $1 - \alpha$.

[Figure: shadows of the 90% confidence region on the $X_1$ axis (3.6 to 6.9) and on the $X_2$ axis (−5.3 to −0.9)]

Note that the projections of the ellipse onto the axes do form the simultaneous confidence intervals.
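The same calculation for the difference and the sum is sketched below, assuming the rounded summary statistics and the constant 5.95 from the notes. It makes explicit that $\mathbf{a}'\mathbf{S}\mathbf{a}$ is $s_{11} - 2s_{12} + s_{22}$ for $\mathbf{a}' = [1\ {-1}]$ but $s_{11} + 2s_{12} + s_{22}$ for $\mathbf{a}' = [1\ 1]$. The function name `t2_interval` is illustrative.

```python
import math


def t2_interval(a, xbar, S, n, c2):
    """T^2-interval a'xbar +/- sqrt(c2) * sqrt(a'Sa / n) for the combination a'mu."""
    p = len(a)
    center = sum(a[i] * xbar[i] for i in range(p))
    aSa = sum(a[i] * S[i][j] * a[j] for i in range(p) for j in range(p))
    half = math.sqrt(c2 * aSa / n)
    return center - half, center + half


xbar = [5.26, -3.09]
S = [[7.12, -0.72], [-0.72, 12.43]]
C2 = 5.95  # (n-1)p/(n-p) F_{2,13}(0.10), as quoted in the notes

diff_ci = t2_interval([1, -1], xbar, S, n=15, c2=C2)  # a'Sa = 7.12 + 1.44 + 12.43
sum_ci = t2_interval([1, 1], xbar, S, n=15, c2=C2)    # a'Sa = 7.12 - 1.44 + 12.43
print(f"mu1 - mu2: ({diff_ci[0]:.3f}, {diff_ci[1]:.3f})")
print(f"mu1 + mu2: ({sum_ci[0]:.3f}, {sum_ci[1]:.3f})")
```

Because $s_{12}$ is negative here, the difference has the larger standard error and hence the wider interval.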
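Also note that, for this example, the one-at-a-time (univariate) confidence intervals would be

$$\bar{x}_1 - t_{n-1}(\alpha/2)\sqrt{\frac{s_{11}}{n}} \le \mu_1 \le \bar{x}_1 + t_{n-1}(\alpha/2)\sqrt{\frac{s_{11}}{n}}$$

or

$$5.26 - 1.761\sqrt{\frac{7.12}{15}} \le \mu_1 \le 5.26 + 1.761\sqrt{\frac{7.12}{15}}$$

or $4.047 \le \mu_1 \le 6.473$, i.e., $\mu_1 = 5.26 \pm 1.213$, i.e., $\mu_1 \in (4.047, 6.473)$, and

$$\bar{x}_2 - t_{n-1}(\alpha/2)\sqrt{\frac{s_{22}}{n}} \le \mu_2 \le \bar{x}_2 + t_{n-1}(\alpha/2)\sqrt{\frac{s_{22}}{n}}$$

or

$$-3.09 - 1.761\sqrt{\frac{12.43}{15}} \le \mu_2 \le -3.09 + 1.761\sqrt{\frac{12.43}{15}}$$

or $-4.693 \le \mu_2 \le -1.487$, i.e., $\mu_2 = -3.09 \pm 1.603$, i.e., $\mu_2 \in (-4.693, -1.487)$.

These intervals are not guaranteed to hold simultaneously with probability $1 - \alpha$. Why?

[Figure: univariate 90% confidence intervals for $\mu_1$ (4.0 to 6.5) and $\mu_2$ (−4.7 to −1.5) compared with the shadows of the 90% confidence region on the $X_1$ and $X_2$ axes]

Note that the univariate intervals are shorter: each controls the confidence of a single statement, and they do not account for the covariance between $X_1$ and $X_2$!

Even if we make a Bonferroni-type adjustment to the one-at-a-time (univariate) confidence intervals, using $t_{n-1}(\alpha/(2p)) = t_{14}(0.025) = 2.145$:

$$\bar{x}_1 - t_{n-1}\!\left(\frac{\alpha}{2p}\right)\sqrt{\frac{s_{11}}{n}} \le \mu_1 \le \bar{x}_1 + t_{n-1}\!\left(\frac{\alpha}{2p}\right)\sqrt{\frac{s_{11}}{n}}$$

or

$$5.26 - 2.145\sqrt{\frac{7.12}{15}} \le \mu_1 \le 5.26 + 2.145\sqrt{\frac{7.12}{15}}$$

or $3.782 \le \mu_1 \le 6.738$, i.e., $\mu_1 = 5.26 \pm 1.478$, i.e., $\mu_1 \in (3.782, 6.738)$, and

$$\bar{x}_2 - t_{n-1}\!\left(\frac{\alpha}{2p}\right)\sqrt{\frac{s_{22}}{n}} \le \mu_2 \le \bar{x}_2 + t_{n-1}\!\left(\frac{\alpha}{2p}\right)\sqrt{\frac{s_{22}}{n}}$$

or

$$-3.09 - 2.145\sqrt{\frac{12.43}{15}} \le \mu_2 \le -3.09 + 2.145\sqrt{\frac{12.43}{15}}$$

or $-5.043 \le \mu_2 \le -1.137$, i.e., $\mu_2 = -3.09 \pm 1.953$, i.e., $\mu_2 \in (-5.043, -1.137)$.

By the Bonferroni inequality, these two intervals hold simultaneously with probability of at least $1 - \alpha$; unlike the $T^2$-intervals, however, that guarantee covers only the $p$ statements about the individual components, not every linear combination $\mathbf{a}'\boldsymbol{\mu}$.

[Figure: Bonferroni-adjusted univariate 90% confidence intervals for $\mu_1$ (3.8 to 6.7) and $\mu_2$ (−5.0 to −1.1) compared with the shadows of the 90% confidence region on the $X_1$ and $X_2$ axes]

Note that the Bonferroni-adjusted univariate intervals are still shorter than the $T^2$-intervals: they do not account for the covariance between $X_1$ and $X_2$!

E.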
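To see the ordering of the three kinds of intervals at a glance, the sketch below computes the half-widths $c\sqrt{s_{ii}/n}$ for each component. The critical multipliers are hardcoded from the notes ($t_{14}(0.05) = 1.761$, $t_{14}(0.025) = 2.145$, and $\sqrt{5.95}$ for the $T^2$-intervals) rather than computed.

```python
import math

n = 15
s_diag = {"mu1": 7.12, "mu2": 12.43}  # s11 and s22 from the example

# Critical multipliers quoted in the notes (90% level, n = 15, p = 2).
multipliers = {
    "t": 1.761,             # one-at-a-time, t_14(0.05)
    "bonferroni": 2.145,    # t_14(0.10 / (2 * 2)) = t_14(0.025)
    "T2": math.sqrt(5.95),  # sqrt((n-1)p/(n-p) F_{2,13}(0.10))
}

half_widths = {
    name: {k: c * math.sqrt(s / n) for k, s in s_diag.items()}
    for name, c in multipliers.items()
}

for name, hw in half_widths.items():
    print(name, {k: round(v, 3) for k, v in hw.items()})
```

Since all three use the same $\sqrt{s_{ii}/n}$, the ordering is driven entirely by the multiplier: $1.761 < 2.145 < \sqrt{5.95} \approx 2.439$.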
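Large Sample Inferences About a Population Mean Vector

When the sample is large ($n \gg p$) we don't need to rely on the multivariate normality of the population to make inferences about the mean vector $\boldsymbol{\mu}$ (why not?). From an earlier result, we know that

$$n(\bar{\mathbf{X}} - \boldsymbol{\mu})'\mathbf{S}^{-1}(\bar{\mathbf{X}} - \boldsymbol{\mu}) \;\dot{\sim}\; \chi^2_p \quad\text{(approximately)},$$

so we can say that

$$P\left[n(\bar{\mathbf{X}} - \boldsymbol{\mu})'\mathbf{S}^{-1}(\bar{\mathbf{X}} - \boldsymbol{\mu}) \le \chi^2_p(\alpha)\right] \approx 1 - \alpha.$$

This result leads directly to large sample ($n \gg p$) simultaneous confidence intervals and hypothesis tests for the mean vector $\boldsymbol{\mu}$.

Let $\mathbf{X}_1, \ldots, \mathbf{X}_n$ be a random sample from a population with mean $\boldsymbol{\mu}$, positive definite covariance matrix $\boldsymbol{\Sigma}$, and some arbitrary distribution. When $n - p$ is large, the hypothesis $H_0: \boldsymbol{\mu} = \boldsymbol{\mu}_0$ is rejected in favor of $H_1: \boldsymbol{\mu} \ne \boldsymbol{\mu}_0$ at a level of significance of approximately $\alpha$ if

$$n(\bar{\mathbf{x}} - \boldsymbol{\mu}_0)'\mathbf{S}^{-1}(\bar{\mathbf{x}} - \boldsymbol{\mu}_0) > \chi^2_p(\alpha).$$

The difference between the normal theory (small sample) test and the large sample test is the critical value:

$$\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha) \quad\text{vs.}\quad \chi^2_p(\alpha).$$

In fact, as $n - p$ grows, the critical value of the normal theory (small sample) test converges to that of the large sample test:

$$\frac{(n-1)p}{n-p}F_{p,n-p}(\alpha) \longrightarrow \chi^2_p(\alpha) \quad\text{as } n - p \to \infty.$$

So it is never truly inappropriate to use the normal theory (small sample) test; it is just conservative.

We can also build large sample ($n \gg p$) simultaneous confidence intervals for the mean vector $\boldsymbol{\mu}$. Let $\mathbf{X}_1, \ldots, \mathbf{X}_n$ be a random sample from a population with mean $\boldsymbol{\mu}$, positive definite covariance matrix $\boldsymbol{\Sigma}$, and some arbitrary distribution. When $n - p$ is large,

$$\mathbf{a}'\bar{\mathbf{X}} \pm \sqrt{\chi^2_p(\alpha)}\sqrt{\frac{\mathbf{a}'\mathbf{S}\mathbf{a}}{n}}$$

will contain $\mathbf{a}'\boldsymbol{\mu}$, for every $\mathbf{a}$, with probability approximately $1 - \alpha$. Consequently, we can make the following 100(1 − α)% simultaneous confidence statements:

$$\bar{x}_i \pm \sqrt{\chi^2_p(\alpha)}\sqrt{\frac{s_{ii}}{n}}, \qquad i = 1, \ldots, p.$$

Furthermore, for all pairs $(\mu_i, \mu_k)$, $i, k = 1, \ldots, p$, the sample mean-centered ellipses

$$n\begin{bmatrix} \bar{x}_i - \mu_i & \bar{x}_k - \mu_k \end{bmatrix}\begin{bmatrix} s_{ii} & s_{ik} \\ s_{ik} & s_{kk} \end{bmatrix}^{-1}\begin{bmatrix} \bar{x}_i - \mu_i \\ \bar{x}_k - \mu_k \end{bmatrix} \le \chi^2_p(\alpha)$$

contain $(\mu_i, \mu_k)$ with probability approximately $1 - \alpha$.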
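The convergence of the small sample critical value to $\chi^2_p(\alpha)$ can be seen without statistical tables when $p = 2$, because both quantiles then have closed forms: $F_{2,\nu}(\alpha) = \frac{\nu}{2}\left(\alpha^{-2/\nu} - 1\right)$ and $\chi^2_2(\alpha) = -2\ln\alpha$. The sketch below relies on those two $p = 2$ identities only; they do not extend to other $p$, where one would instead use a statistics library (for example SciPy's `scipy.stats.f.ppf` and `scipy.stats.chi2.ppf` evaluated at $1 - \alpha$). At $n = 15$ it reproduces the constant 5.95 used in the bivariate examples.

```python
import math

ALPHA = 0.10
P = 2  # the closed-form quantiles below are valid only for p = 2


def f_upper_p2(nu, alpha):
    """Upper-alpha quantile of F(2, nu), from P(F > f) = (1 + 2f/nu) ** (-nu/2)."""
    return (nu / 2.0) * (alpha ** (-2.0 / nu) - 1.0)


def small_sample_crit(n, alpha=ALPHA):
    """Normal-theory critical value (n-1)p/(n-p) * F_{p,n-p}(alpha) with p = 2."""
    return (n - 1) * P / (n - P) * f_upper_p2(n - P, alpha)


# chi^2 with 2 degrees of freedom is exponential with mean 2: P(X > x) = exp(-x/2).
chi2_crit = -2.0 * math.log(ALPHA)

sizes = (15, 30, 100, 1000, 10000)
vals = [small_sample_crit(n) for n in sizes]
for n, v in zip(sizes, vals):
    print(f"n = {n:>5}: F-based {v:.4f}  vs  chi-square {chi2_crit:.4f}")
```

The F-based value decreases toward $\chi^2_2(0.10) \approx 4.605$ but stays above it, which is the sense in which the normal theory test is conservative.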
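Example: Suppose we have collected a random sample of $n = 107$ observations in $p = 5$ dimensions and calculated the following summary statistics:

| Variable | Sample Mean ($\bar{x}_i$) | Sample Standard Deviation ($\sqrt{s_{ii}}$) |
|---|---|---|
| $X_1$ | 58.6 | 4.44 |
| $X_2$ | 19.3 | 2.39 |
| $X_3$ | 101.5 | 8.87 |
| $X_4$ | 67.0 | 3.99 |
| $X_5$ | 42.5 | 4.69 |

Construct the five simultaneous 90% confidence intervals for the individual mean components $\mu_i$, $i = 1, \ldots, 5$.

We have a large sample ($n = 107 \gg p = 5$), so we will use the large sample approach to constructing the simultaneous 90% confidence intervals for the individual components of the mean vector $\boldsymbol{\mu}$, with $\chi^2_5(0.10) = 9.236$:

$$\mu_1:\ \bar{x}_1 \pm \sqrt{\chi^2_p(\alpha)}\sqrt{\frac{s_{11}}{n}} = 58.6 \pm \sqrt{9.236}\,\frac{4.44}{\sqrt{107}} = 58.6 \pm 1.304 = (57.296,\ 59.904)$$

$$\mu_2:\ \bar{x}_2 \pm \sqrt{\chi^2_p(\alpha)}\sqrt{\frac{s_{22}}{n}} = 19.3 \pm \sqrt{9.236}\,\frac{2.39}{\sqrt{107}} = 19.3 \pm 0.702 = (18.598,\ 20.002)$$

$$\mu_3:\ \bar{x}_3 \pm \sqrt{\chi^2_p(\alpha)}\sqrt{\frac{s_{33}}{n}} = 101.5 \pm \sqrt{9.236}\,\frac{8.87}{\sqrt{107}} = 101.5 \pm 2.606 = (98.894,\ 104.106)$$

$$\mu_4:\ \bar{x}_4 \pm \sqrt{\chi^2_p(\alpha)}\sqrt{\frac{s_{44}}{n}} = 67.0 \pm \sqrt{9.236}\,\frac{3.99}{\sqrt{107}} = 67.0 \pm 1.172 = (65.828,\ 68.172)$$

$$\mu_5:\ \bar{x}_5 \pm \sqrt{\chi^2_p(\alpha)}\sqrt{\frac{s_{55}}{n}} = 42.5 \pm \sqrt{9.236}\,\frac{4.69}{\sqrt{107}} = 42.5 \pm 1.378 = (41.122,\ 43.878)$$

If we used these intervals to test the null hypothesis

$$H_0: \boldsymbol{\mu} = \boldsymbol{\mu}_0 = \begin{bmatrix} 55 \\ 25 \\ 111 \\ 59 \\ 34 \end{bmatrix}$$

at the $\alpha = 1 - 0.90 = 0.10$ significance level, we would reject the null (why?).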
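The five large sample intervals can be reproduced with a few lines of Python. The sketch assumes the table's second column is $\sqrt{s_{ii}}$ and hardcodes $\chi^2_5(0.10) = 9.236$ from the notes; it also flags which components of $\boldsymbol{\mu}_0$ fall outside their intervals.

```python
import math

n = 107
chi2_crit = 9.236  # chi^2_5(0.10), the value used in the notes
xbar = [58.6, 19.3, 101.5, 67.0, 42.5]
sd = [4.44, 2.39, 8.87, 3.99, 4.69]  # sample standard deviations sqrt(s_ii)
mu0 = [55, 25, 111, 59, 34]          # hypothesized mean vector

# Half-width sqrt(chi^2_p(alpha)) * sqrt(s_ii / n) for each component.
half = [math.sqrt(chi2_crit) * s / math.sqrt(n) for s in sd]
intervals = [(m - h, m + h) for m, h in zip(xbar, half)]
outside = [not (lo <= m0 <= hi) for (lo, hi), m0 in zip(intervals, mu0)]

for i, ((lo, hi), out) in enumerate(zip(intervals, outside), start=1):
    print(f"mu{i}: ({lo:.3f}, {hi:.3f})  mu0 component outside: {out}")
```

Because these intervals are the projections of the $\chi^2$ confidence ellipsoid onto the coordinate axes, any component of $\boldsymbol{\mu}_0$ falling outside its interval implies $n(\bar{\mathbf{x}} - \boldsymbol{\mu}_0)'\mathbf{S}^{-1}(\bar{\mathbf{x}} - \boldsymbol{\mu}_0) > \chi^2_5(0.10)$.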