18.4 Regression Diagnostics - II

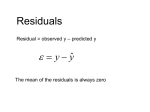

Econometric Problems
• Econometric problems: detection and fixes
• Hands-on problems

Regression Diagnostics
• The required conditions for the model assessment to apply must be checked.
– Is the error variance constant?
– Are the errors independent?
– Is the error variable normally distributed?
– Is multicollinearity a problem?

Econometric Problems
– Heteroskedasticity (standard errors not reliable)
– Non-normal distribution of the error term (t and F statistics not reliable)
– Autocorrelation or serial correlation (standard errors not reliable)
– Multicollinearity (t-stats may be biased downward)

• Is the error variance constant? (Homoskedasticity)
• When the requirement of a constant variance is met we have homoskedasticity.

[Figure: residuals plotted against the predicted value ŷ. The spread of the data points does not change much.]

• Heteroskedasticity
– When the requirement of a constant variance is violated we have heteroskedasticity. The plot of the residuals vs. the predicted value of Y will exhibit a cone shape.

[Figure: residuals plotted against the predicted value ŷ. The spread increases with ŷ.]

Heteroskedasticity
• When the variance of the error term is different for different values of X you have heteroskedasticity.
• Problem: The OLS estimators for the β's are no longer minimum variance. You can no longer be sure that the value you get for b_i lies close to the true β_i.

Detection/Fix for heteroskedasticity
• Detection: Plot of residuals vs. predicted Y exhibits a cone or megaphone shape.
• Advanced test: White test (uses a chi-square statistic)
• Fix: White correction (uses OLS but keeps heteroskedasticity from making the variance of the OLS estimators swell in size)

Heteroskedasticity EX1 (Xr19-06)
• Model:

$$\text{Univ GPA} = \beta_0 + \beta_1\,\text{HS GPA} + \beta_2\,\text{SAT} + \beta_3\,\text{Activities} + \varepsilon$$

SUMMARY OUTPUT

| Regression Statistics | |
|---|---|
| Multiple R | 0.5368707 |
| R Square | 0.2882302 |
| Adjusted R Square | 0.2659873 |
| Standard Error | 2.0302333 |
| Observations | 100 |

| ANOVA | df | SS | MS | F |
|---|---|---|---|---|
| Regression | 3 | 160.2370587 | 53.41235 | 12.95835312 |
| Residual | 96 | 395.6973413 | 4.121847 | |
| Total | 99 | 555.9344 | | |

| | Coefficients | Standard Error | t Stat |
|---|---|---|---|
| Intercept | 0.7211046 | 1.869815022 | 0.385656 |
| HS GPA | 0.610872 | 0.100749211 | 6.063293 |
| SAT | 0.0027085 | 0.002873196 | 0.942677 |
| Activities | 0.0462535 | 0.064049816 | 0.722149 |

Heteroskedasticity EX1

[Figure: residuals vs. predicted GPA at university. No megaphone pattern, so no heteroskedasticity.]

White Test
• H0: No heteroskedasticity. H1: Have heteroskedasticity.
• White's chi-square statistic is N × R-square from the regression of the squared residuals (e_i)² against X1, …, Xk, their squared terms, and their cross products. With two regressors:

$$e_t^2 = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_1 X_2 + \beta_4 X_1^2 + \beta_5 X_2^2$$

White Test
• White's test stat > chi-square from table (with d.f. = number of slope coefficients in the test regression) => reject homoskedasticity; you have a problem with heteroskedasticity.
• White's test stat < chi-square from table (with d.f. = number of slope coefficients in the test regression) => fail to reject homoskedasticity; you do not have a problem with heteroskedasticity.

White Test EX1
• 12.9 < 16.92 (chi-square, 95% confidence, 9 d.f.) => fail to reject homoskedasticity

White Heteroskedasticity Test:

| | Value |
|---|---|
| F-statistic | 1.485871 |
| Obs*R-squared | 12.93651 |

Test Equation: Dependent Variable: RESID^2. Method: Least Squares. Date: 09/10/03 Time: 15:21. Sample: 1 100. Included observations: 100.

| Variable | Coefficient | Std. Error | t-Statistic |
|---|---|---|---|
| C | 24.26312 | 30.8596 | 0.786242 |
| HSGPA | 3.928636 | 2.374181 | 1.654733 |
| HSGPA^2 | -0.07362 | 0.090684 | -0.81185 |
| HSGPA*SAT | -0.0049 | 0.003259 | -1.50251 |
| HSGPA*ACTIVITIES | -0.04933 | 0.072608 | -0.67935 |
| SAT | -0.13973 | 0.091374 | -1.52916 |
| SAT^2 | 0.000168 | 7.41E-05 | 2.269871 |
| SAT*ACTIVITIES | -0.00084 | 0.001889 | -0.44207 |
| ACTIVITIES | 0.597115 | 1.337251 | 0.446524 |
| ACTIVITIES^2 | 0.033446 | 0.050154 | 0.666867 |

Heteroskedasticity EX2 (Xr19-10)
• Model:

$$\text{Internet} = \beta_0 + \beta_1\,\text{AGE} + \beta_2\,\text{INCOME} + \varepsilon$$

SUMMARY OUTPUT

| Regression Statistics | |
|---|---|
| Multiple R | 0.445484192 |
| R Square | 0.198456165 |
| Adjusted R Square | 0.190318665 |
| Standard Error | 4.190258376 |
| Observations | 200 |

| ANOVA | df | SS | MS | F |
|---|---|---|---|---|
| Regression | 2 | 856.4167439 | 428.2083719 | 24.38785 |
| Residual | 197 | 3458.978256 | 17.55826526 | |
| Total | 199 | 4315.395 | | |

| | Coefficients | Standard Error | t Stat | P-value |
|---|---|---|---|---|
| Intercept | 13.02717915 | 2.542151536 | 5.124469946 | 7.09E-07 |
| Age | -0.279139752 | 0.044842815 | -6.224848997 | 2.85E-09 |
| Income | 0.093837098 | 0.031237341 | 3.004004037 | 0.00301 |

Heteroskedasticity EX2

[Figure: residuals vs. predicted Internet.]

White Test EX2
• 33.75 > 11.07 (chi-square, 95% confidence, d.f. = 5) => have heteroskedasticity

White Heteroskedasticity Test:

| | Value | Probability |
|---|---|---|
| F-statistic | 7.876243 | 0.000001 |
| Obs*R-squared | 33.7484 | 0.000003 |

Test Equation: Dependent Variable: RESID^2. Method: Least Squares. Date: 09/10/03 Time: 15:38. Sample: 1 200. Included observations: 200.

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | -67.6927 | 96.59632 | -0.70078 | 0.4843 |
| AGE | 4.211074 | 3.113861 | 1.352364 | 0.1778 |
| AGE^2 | -0.03078 | 0.03072 | -1.00181 | 0.3177 |
| AGE*INCOME | -0.049 | 0.028515 | -1.7185 | 0.0873 |
| INCOME | 0.10313 | 1.91587 | 0.053829 | 0.9571 |
| INCOME^2 | 0.021946 | 0.012718 | 1.725546 | 0.086 |

Non-normality of Error
• If the assumption that ε is distributed normally is called into question, we cannot use any of the t-tests, F-tests or R-square, because these tests are based on the assumption that ε is distributed normally. The results of these tests become meaningless.
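Returning to the White test used in EX1 and EX2: the decision rule can be sketched in a few lines of Python. This is only an illustration of the rule stated on the slides, not the software that produced the output. The function name `white_test_decision` is hypothetical, the auxiliary R-squared values are back-computed from the reported Obs*R-squared figures, and the critical values 16.92 and 11.07 are the ones quoted in the examples.

```python
def white_test_decision(n_obs, r2_aux, chi2_critical):
    """Apply White's rule: statistic = N * R-squared of the auxiliary regression.

    n_obs         -- number of observations in the auxiliary regression
    r2_aux        -- R-squared from regressing squared residuals on the X's,
                     their squares, and their cross products
    chi2_critical -- chi-square table value (d.f. = number of slope coefficients)
    Returns (statistic, reject_homoskedasticity).
    """
    statistic = n_obs * r2_aux
    return statistic, statistic > chi2_critical


# EX1: Obs*R-squared = 12.93651 with n = 100, so the auxiliary R-squared
# is 0.1293651 (back-computed, an assumption of this sketch). 9 d.f.
ex1_stat, ex1_reject = white_test_decision(100, 0.1293651, 16.92)

# EX2: Obs*R-squared = 33.7484 with n = 200, so the auxiliary R-squared
# is 0.168742 (back-computed). 5 d.f.
ex2_stat, ex2_reject = white_test_decision(200, 0.168742, 11.07)

print("EX1:", round(ex1_stat, 2), "reject homoskedasticity?", ex1_reject)
print("EX2:", round(ex2_stat, 2), "reject homoskedasticity?", ex2_reject)
```

The two calls reproduce the conclusions drawn on the slides: EX1 fails to reject homoskedasticity, EX2 rejects it.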
Non-normality of Error term
• Indication of non-normal distribution:
• Histogram of residuals does not look normal
• Formal test: Jarque-Bera test

$$JB = \frac{n}{6}\left[\text{Skew}^2 + \frac{(\text{Kurt} - 3)^2}{4}\right]$$

Non-normality of Error term
• If the JB stat is smaller than a chi-square with 2 degrees of freedom (5.99 at the 5% significance level) then you can relax: you cannot reject the hypothesis that your error term follows a normal distribution.
• If not, get more data or transform the dependent variable: log(Y), Y squared, square root of Y, 1/Y, etc.

Jarque-Bera Test for non-normality of error term
• JB stat = 45.88 > 5.99 (chi-square, 95% confidence, d.f. = 2) => error term not normally distributed

[Figure: histogram of the residuals.]

| Series: Residuals (Sample 1 200) | |
|---|---|
| Observations | 200 |
| Mean | 2.04E-16 |
| Median | 1.667127 |
| Maximum | 5.349932 |
| Minimum | -11.31068 |
| Std. Dev. | 4.169149 |
| Skewness | -1.169375 |
| Kurtosis | 3.190836 |
| Jarque-Bera | 45.88472 |
| Probability | 0.000000 |

Check against the formula: (200/6) × [(-1.169375)² + (3.190836 - 3)²/4] ≈ 45.88.

Additional Fixes for Normality
• Bootstrapping – ask your advisor if this approach is right for you
• Appeal to large-sample (asymptotic) theory. If your sample is large enough, the t and F tests are approximately valid.

SERIAL CORRELATION or AUTOCORRELATION
Patterns in the appearance of the residuals over time indicate that autocorrelation exists.

[Figure: residuals over time. Note the runs of positive residuals, replaced by runs of negative residuals.]

[Figure: residuals over time. Note the oscillating behavior of the residuals around zero.]

Positive first order autocorrelation occurs when consecutive residuals tend to be similar. Then, the value of d is small (less than 2).

[Figure: residuals over time under positive first order autocorrelation.]

Negative first order autocorrelation occurs when consecutive residuals tend to markedly differ. Then, the value of d is large (greater than 2).
[Figure: residuals over time under negative first order autocorrelation.]

Autocorrelation or Serial Correlation
• The Durbin-Watson Test
– This test detects first order autocorrelation between consecutive residuals in a time series.
– If autocorrelation exists the error variables are not independent.

$$d = \frac{\sum_{i=2}^{n} (e_i - e_{i-1})^2}{\sum_{i=1}^{n} e_i^2}$$

The range of d is 0 ≤ d ≤ 4.

• One-tail test for positive first order autocorrelation
– If d < dL there is enough evidence to show that positive first order correlation exists.
– If d > dU there is not enough evidence to show that positive first order correlation exists.
– If d is between dL and dU the test is inconclusive.
• One-tail test for negative first order autocorrelation
– If d > 4-dL, negative first order correlation exists.
– If d < 4-dU, negative first order correlation does not exist.
– If d falls between 4-dU and 4-dL the test is inconclusive.

• Two-tail test for first order autocorrelation
– If d < dL or d > 4-dL, first order autocorrelation exists.
– If d falls between dL and dU or between 4-dU and 4-dL the test is inconclusive.
– If d falls between dU and 4-dU there is no evidence for first order autocorrelation.

| Range of d | Conclusion |
|---|---|
| 0 to dL | First order correlation exists |
| dL to dU | Inconclusive test |
| dU to 2 | First order correlation does not exist |
| 2 to 4-dU | First order correlation does not exist |
| 4-dU to 4-dL | Inconclusive test |
| 4-dL to 4 | First order correlation exists |

• Example
– How does the weather affect the sales of lift tickets in a ski resort?
– Data on ticket sales for the past 20 years, along with the total snowfall and the average temperature during Christmas week in each year, were collected.
– The model hypothesized was

$$\text{TICKETS} = \beta_0 + \beta_1\,\text{SNOWFALL} + \beta_2\,\text{TEMPERATURE} + \varepsilon$$

– Regression analysis yielded the following results:

SUMMARY OUTPUT

| Regression Statistics | |
|---|---|
| Multiple R | 0.3464529 |
| R Square | 0.1200296 |
| Adjusted R Square | 0.0165037 |
| Standard Error | 1711.6764 |
| Observations | 20 |

| ANOVA | df | SS | MS | F | Signif. F |
|---|---|---|---|---|---|
| Regression | 2 | 6793798.2 | 3396899.1 | 1.1594 | 0.3372706 |
| Residual | 17 | 49807214 | 2929836.1 | | |
| Total | 19 | 56601012 | | | |

| | Coefficients | Standard Error | t Stat | P-value | Lower 95% | Upper 95% |
|---|---|---|---|---|---|---|
| Intercept | 8308.0114 | 903.7285 | 9.1930391 | 5E-08 | 6401.3083 | 10214.715 |
| Snowfall | 74.593249 | 51.574829 | 1.4463111 | 0.1663 | -34.22028 | 183.40678 |
| Temperature | -8.753738 | 19.704359 | -0.444254 | 0.6625 | -50.32636 | 32.818884 |

The model seems to be very poor:
• The fit is very low (R-square = 0.12).
• It is not valid (Signif. F = 0.33).
• No variable is linearly related to sales.

Diagnosis of the required conditions resulted in the following findings:

[Figure: residuals vs. predicted y. The error variance is constant.]

[Figure: histogram of the error distribution. The errors may be normally distributed.]

[Figure: residuals over time. The errors are not independent.]

Test for positive first order autocorrelation: n = 20, k = 2. From the Durbin-Watson table we have dL = 1.10, dU = 1.54. The statistic is d = 0.59.
Conclusion: Because d < dL, there is sufficient evidence to infer that positive first order autocorrelation exists.

Using the computer (Excel):
Tools > Data Analysis > Regression (check the residual option and then OK)
Tools > Data Analysis Plus > Durbin Watson Statistic > Highlight the range of the residuals from the regression run > OK

Durbin-Watson Statistic: d = 0.5931
The residuals: -2793.99, -1723.23, -2342.03, -956.955, -1963.73, ...
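The Durbin-Watson statistic for this example can also be reproduced outside Excel. The sketch below is a minimal Python illustration, not the Data Analysis Plus macro: the function names `durbin_watson` and `positive_autocorrelation_test` are hypothetical, and the twenty residuals are copied from the regression's residual output, rounded to three decimals.

```python
# Residuals of the ski-ticket regression, in time order (rounded to 3 decimals).
residuals = [
    -2793.992, -1723.231, -2342.030, -956.955, -1963.731,
    -1465.273, -1439.074, 414.068, 364.907, -102.817,
    49.963, 1823.374, 920.097, -660.403, 2515.571,
    2482.258, 1343.057, 1368.403, 334.650, 1831.157,
]


def durbin_watson(e):
    """d = sum_{i=2..n} (e_i - e_{i-1})^2 / sum_{i=1..n} e_i^2."""
    numerator = sum((e[i] - e[i - 1]) ** 2 for i in range(1, len(e)))
    denominator = sum(x * x for x in e)
    return numerator / denominator


def positive_autocorrelation_test(d, d_lower, d_upper):
    """One-tail Durbin-Watson rule for positive first order autocorrelation."""
    if d < d_lower:
        return "positive first order autocorrelation exists"
    if d > d_upper:
        return "no evidence of positive first order autocorrelation"
    return "inconclusive"


d = durbin_watson(residuals)
# dL = 1.10 and dU = 1.54 are the table values quoted for n = 20, k = 2.
verdict = positive_autocorrelation_test(d, 1.10, 1.54)
print("d =", round(d, 4), "->", verdict)
```

Because the statistic depends only on the ordered residuals, the same two functions can be reused for any time-series regression once dL and dU are looked up for its n and k.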
[Figure: residuals over time.]

MANUAL D-W CALCULATION

RESIDUAL OUTPUT and MANUAL DURBIN-WATSON

| Observation | Predicted Tickets | Residuals e_i | e_i - e_{i-1} | (e_i - e_{i-1})² | (e_i)² |
|---|---|---|---|---|---|
| 1 | 9628.992039 | -2793.992039 | | | 7806391.5 |
| 2 | 9593.231176 | -1723.231176 | 1070.761 | 1146528.8 | 2969525.7 |
| 3 | 8515.029601 | -2342.029601 | -618.798 | 382911.49 | 5485102.7 |
| 4 | 8935.954928 | -956.9549279 | 1385.075 | 1918431.9 | 915762.73 |
| 5 | 9602.730825 | -1963.730825 | -1006.78 | 1013597.7 | 3856238.8 |
| 6 | 8632.272672 | -1465.272672 | 498.4582 | 248460.53 | 2147024 |
| 7 | 9533.073879 | -1439.073879 | 26.19879 | 686.37677 | 2070933.6 |
| 8 | 9488.932234 | 414.0677664 | 1853.142 | 3434134 | 171452.12 |
| 9 | 9423.092722 | 364.907278 | -49.1605 | 2416.7536 | 133157.32 |
| 10 | 9659.816599 | -102.8165988 | -467.724 | 218765.62 | 10571.253 |
| 11 | 9734.036892 | 49.96310771 | 152.7797 | 23341.639 | 2496.3121 |
| 12 | 10251.62629 | 1823.373709 | 1773.411 | 3144985.2 | 3324691.7 |
| 13 | 8207.902866 | 920.0971341 | -903.277 | 815908.57 | 846578.74 |
| 14 | 9707.402723 | -660.402723 | -1580.5 | 2497979.8 | 436131.76 |
| 15 | 8115.429185 | 2515.570815 | 3175.974 | 10086808 | 6328096.5 |
| 16 | 10080.74193 | 2482.258074 | -33.3127 | 1109.7387 | 6161605.1 |
| 17 | 9668.943293 | 1343.056707 | -1139.2 | 1297779.8 | 1803801.3 |
| 18 | 8672.596882 | 1368.403118 | 25.34641 | 642.44054 | 1872527.1 |
| 19 | 9594.350043 | 334.6499568 | -1033.75 | 1068645.6 | 111990.59 |
| 20 | 9259.843227 | 1831.156773 | 1496.507 | 2239532.7 | 3353135.1 |
| SUM | | | | 29542666 | 49807214 |

D-W = 29542666 / 49807214 = 0.5931403

The modified regression model
The autocorrelation has occurred over time. Therefore, a time dependent variable added to the model may correct the problem:

$$\text{TICKETS} = \beta_0 + \beta_1\,\text{SNOWFALL} + \beta_2\,\text{TEMPERATURE} + \beta_3\,\text{YEARS} + \varepsilon$$

• All the required conditions are met for this model.
• The fit of this model is high: R² = 0.74.
• The model is useful: Significance F = 5.93E-5.
• SNOWFALL and YEARS are linearly related to ticket sales.
• TEMPERATURE is not linearly related to ticket sales.

Multicollinearity
• When two or more X's are correlated you have multicollinearity.
• Symptoms of multicollinearity include insignificant t-stats (due to inflated standard errors of the coefficients) together with a good R-square.
• Test: Run a correlation matrix of all X variables.
• Fix: More data, combine variables.

Multicollinearity Ex: Xm 19-02

SUMMARY OUTPUT

| Regression Statistics | |
|---|---|
| Multiple R | 0.748329963 |
| R Square | 0.559997733 |
| Adjusted R Square | 0.546247662 |
| Standard Error | 25022.70761 |
| Observations | 100 |

| ANOVA | df | SS | MS | F | Significance F |
|---|---|---|---|---|---|
| Regression | 3 | 76501718347 | 2.55E+10 | 40.7269 | 4.57E-17 |
| Residual | 96 | 60109046053 | 6.26E+08 | | |
| Total | 99 | 1.36611E+11 | | | |

| | Coefficients | Standard Error | t Stat | P-value | Lower 95% | Upper 95% |
|---|---|---|---|---|---|---|
| Intercept | 37717.59451 | 14176.74195 | 2.660526 | 0.009145 | 9576.963 | 65858.23 |
| Bedrooms | 2306.080821 | 6994.19244 | 0.329714 | 0.742335 | -11577.3 | 16189.45 |
| House Size | 74.29680602 | 52.97857934 | 1.402393 | 0.164023 | -30.8649 | 179.4585 |
| Lot Size | -4.36378288 | 17.0240013 | -0.25633 | 0.798244 | -38.1562 | 29.42862 |

Multicollinearity Ex: Xm 19-02
• Diagnostic: correlation matrix
• Correlation coefficient over 0.5 => problem with multicollinearity

| | Bedrooms | House Size | Lot Size |
|---|---|---|---|
| Bedrooms | 1 | | |
| House Size | 0.846453504 | 1 | |
| Lot Size | 0.837429579 | 0.993615 | 1 |
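The correlation-matrix check for Xm 19-02 can be sketched in Python as follows. This is an illustrative screen only: the helper `flag_correlated_pairs` is a hypothetical name, the 0.5 cutoff is the rule of thumb stated on the slide, and the matrix entries are the reported correlations filled in symmetrically.

```python
names = ["Bedrooms", "House Size", "Lot Size"]

# Correlation matrix of the X variables, as reported in the example
# (upper triangle mirrored from the lower triangle).
corr = [
    [1.0, 0.846453504, 0.837429579],
    [0.846453504, 1.0, 0.993615],
    [0.837429579, 0.993615, 1.0],
]


def flag_correlated_pairs(names, corr, threshold=0.5):
    """Return (name_a, name_b, r) for every pair of X's with |r| above threshold."""
    flagged = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(corr[i][j]) > threshold:
                flagged.append((names[i], names[j], corr[i][j]))
    return flagged


flagged = flag_correlated_pairs(names, corr)
for a, b, r in flagged:
    print(f"{a} vs {b}: r = {r:.3f} (above 0.5)")
```

All three pairs exceed the cutoff here, which is consistent with the regression output: a significant F statistic alongside individually insignificant t-stats for Bedrooms, House Size, and Lot Size.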