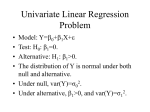

Linear regression model

• We assume that two quantitative variables, $x$ and $y$, are linearly related; that is, the population of $(x, y)$ pairs is described by an ideal population regression line

$$y = \alpha + \beta x + e$$

where $\alpha$ and $\beta$ are the y-intercept and slope coefficients, and the quantity $e$ represents the fact that the relation is subject to random errors in measurement.

• $e$ can be interpreted as either
- the deviation of a given value of $y$ from its mean on the population regression line, or
- the error in using the line to predict a value of $y$ from the corresponding given $x$

• We assume that $e$ is a normally distributed random variable with mean $\mu_e = 0$ and standard deviation $\sigma_e$, which is large when prediction errors are large and small when prediction errors are small.

• Note that $e$ is a different random variable for each value of $x$; all such $e$ are assumed to be independent of each other and identically distributed (so there is no harm in giving them the same name).

• For a fixed value $x^*$ of $x$, the quantity $\alpha + \beta x^*$ is the (fixed) height of the regression line at $x = x^*$, so $y = \alpha + \beta x^* + e$ is subject to the same kind of variability as $e$: namely, $y$ is normally distributed with mean $\mu_y = \alpha + \beta x^*$ and standard deviation $\sigma_y = \sigma_e$.

• $\beta$, being the slope of the line, represents the change in $\mu_y$ associated with a unit change in $x$; that is, $\beta$ is the average change in $y$ associated with a unit change in $x$.

• The parameters of interest for the regression model are $\sigma_e$, which measures the typical size of the errors made in using the line to predict $y$ values, and $\beta$, which measures the average change in $y$ associated with a unit change in $x$.

Estimating regression parameters

• Estimating $\sigma_e$: the standard deviation about the regression line,

$$s_e = \sqrt{\frac{\text{SSResid}}{n-2}},$$

is not an unbiased estimator of $\sigma_e$ (although $s_e^2$ is an unbiased estimator of $\sigma_e^2$). [TI-83: STAT TESTS LinRegTTest (denoted s)]

• Estimating $\beta$: the slope of the regression line,

$$b = r\,\frac{s_y}{s_x},$$

is an unbiased estimator of $\beta$. [TI-83: STAT TESTS LinRegTTest, also STAT CALC LinReg(a+bx)]

The sampling distribution for b

• The sampling distribution of $b$ is studied to determine how estimates of $\beta$ behave from sample to sample.

• Assuming that the $n$ data points have independent, identically distributed normal errors $e$, all with mean 0 and standard deviation $\sigma_e$, we have that
- $\mu_b = \beta$
- $\sigma_b = \dfrac{\sigma_e}{s_x\sqrt{n-1}}$
- the sampling distribution of $b$ is normal, but since neither $\sigma_e$ nor $\sigma_b$ is known, we estimate $\sigma_e$ with the statistic $s_e$ and $\sigma_b$ with the statistic $s_b = \dfrac{s_e}{s_x\sqrt{n-1}}$, then standardize $b$ with the statistic $t = \dfrac{b - \beta}{s_b}$, which has a t distribution with df = $n - 2$

Confidence interval for β

Assuming that the $n$ data points have independent, identically distributed normal errors $e$, all with mean 0 and standard deviation $\sigma_e$, we obtain the following confidence interval for $\beta$:

$$b \pm (t\text{-crit}) \cdot s_b$$

where the t-critical value is based on df = $n - 2$.

Model utility test for linear regression

If the slope of the regression line is $\beta = 0$, then the line is horizontal and values of $y$ do not depend on $x$, so there is no point in trying to predict $y$ from knowledge of $x$. A test of whether $\beta = 0$ can determine whether it is appropriate to look for a linear regression between the variables $x$ and $y$.

Hypotheses: $H_0: \beta = 0$, $H_a: \beta \neq 0$

Test statistic: $t = \dfrac{b - 0}{s_b}$, with df = $n - 2$

Assumptions: independent normally distributed errors with mean 0 and equal standard deviations

[TI-83: STAT TESTS LinRegTTest]

Residual analysis

We can use a residual plot (a plot of residuals vs. $x$ values) to check whether it is reasonable to assume that the errors are independent, identically distributed normal variables. The z-scores of these residuals can be used to display a standardized residual plot:

$$z_{\text{resid}} = \frac{\text{resid} - 0}{s_{\text{resid}}}$$

but the standard deviation $s_{\text{resid}}$ varies from point to point and is not automatically calculated by the TI-83. Many statistical packages, however, do perform these calculations.
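As a rough illustration of what such a package computes, the sketch below standardizes each residual by its own estimated standard deviation. It assumes the standard result (not stated in these notes) that the $i$-th residual has estimated standard deviation $s_e\sqrt{1 - h_i}$, with leverage $h_i = 1/n + (x_i - \bar{x})^2 / \sum_j (x_j - \bar{x})^2$. The function name and the five data points are made up for the example.

```python
import math

def standardized_residuals(xs, ys):
    """Fit y = a + b*x by least squares and standardize each residual.

    Assumption (not from the notes): the i-th residual has estimated
    standard deviation s_e * sqrt(1 - h_i), where h_i is its leverage.
    """
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))

    b = sxy / sxx            # least-squares slope
    a = y_bar - b * x_bar    # least-squares intercept

    resid = [y - (a + b * x) for x, y in zip(xs, ys)]
    s_e = math.sqrt(sum(r ** 2 for r in resid) / (n - 2))

    z_resid = []
    for x, r in zip(xs, resid):
        h = 1 / n + (x - x_bar) ** 2 / sxx   # leverage of this point
        s_resid = s_e * math.sqrt(1 - h)     # varies from point to point
        z_resid.append((r - 0) / s_resid)
    return a, b, resid, z_resid

# Hypothetical data, chosen only to keep the arithmetic easy to follow.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]
a, b, resid, z_resid = standardized_residuals(xs, ys)

for x, r, z in zip(xs, resid, z_resid):
    print(f"x = {x:.0f}  resid = {r:+.3f}  z_resid = {z:+.3f}")
```

Plotting `z_resid` against `xs` gives the standardized residual plot; points at the extremes of the $x$ range have higher leverage and therefore a smaller residual standard deviation.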
What to look for:
• absence of patterns in the (standardized) residual plot
• very few large residuals (more than 2 standard deviations from the x-axis)
• no variation in the spread of the residuals (variation would indicate that $\sigma_e$ varies with $x$)
• influential points (residual points far removed from the bulk of the plot)

The sampling distribution for a + bx*

Assuming that the $n$ data points have independent, identically distributed normal errors $e$, all with mean 0 and standard deviation $\sigma_e$, we study the distribution of the prediction statistic $a + bx^*$ for some fixed choice of $x = x^*$.

• $a + bx^*$ is an unbiased estimate of the true regression value $\alpha + \beta x^*$, so $\mu_{a+bx^*} = \alpha + \beta x^*$

• $\sigma_{a+bx^*} = \sigma_e\sqrt{\dfrac{1}{n} + \dfrac{z_x^2}{n-1}}$, where $z_x = \dfrac{x^* - \bar{x}}{s_x}$ is the z-score of $x^*$; it is estimated by the statistic

$$s_{a+bx^*} = s_e\sqrt{\frac{1}{n} + \frac{z_x^2}{n-1}}$$

• $a + bx^*$ is normally distributed, but replacing $\sigma_{a+bx^*}$ with the estimate $s_{a+bx^*}$ produces a standardized t variable with df = $n - 2$

Confidence interval for α + βx*

With the same assumptions as above, the confidence interval for $\alpha + \beta x^*$, the mean value of $y$ when $x = x^*$, is

$$(a + bx^*) \pm (t\text{-crit}) \cdot s_{a+bx^*}$$

where t has df = $n - 2$.

Prediction intervals

With the same assumptions as above, the prediction interval for $y^*$, a single value of $y$ observed at $x = x^*$, is

$$(a + bx^*) \pm (t\text{-crit}) \cdot \sqrt{s_e^2 + s_{a+bx^*}^2}$$

where t has df = $n - 2$. (Variability comes not only from the size of the error $e$ but also from the extent to which the estimate $a + bx^*$ differs from the true mean value.)
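The formulas in these notes can be traced end to end with a short sketch. The version below uses only the Python standard library, so the t-critical value is passed in by hand from a t table rather than computed. The function names, the five data points, and the choice $x^* = 4$ are all hypothetical, picked only for illustration.

```python
import math

def fit_line(xs, ys):
    """Least-squares line plus the summary statistics used in the notes."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))

    s_x = math.sqrt(sxx / (n - 1))
    s_y = math.sqrt(syy / (n - 1))
    r = sxy / math.sqrt(sxx * syy)

    b = r * s_y / s_x        # slope estimate, b = r * s_y / s_x
    a = y_bar - b * x_bar    # intercept estimate

    ss_resid = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    s_e = math.sqrt(ss_resid / (n - 2))       # std. deviation about the line
    s_b = s_e / (s_x * math.sqrt(n - 1))      # estimated std. deviation of b
    return {"n": n, "x_bar": x_bar, "s_x": s_x,
            "a": a, "b": b, "s_e": s_e, "s_b": s_b}

def slope_inference(fit, t_crit):
    """Model utility t statistic and confidence interval for beta."""
    t_stat = (fit["b"] - 0) / fit["s_b"]
    half = t_crit * fit["s_b"]
    return t_stat, (fit["b"] - half, fit["b"] + half)

def intervals_at(fit, x_star, t_crit):
    """Confidence interval for the mean of y, and prediction interval, at x_star."""
    n = fit["n"]
    z_x = (x_star - fit["x_bar"]) / fit["s_x"]            # z-score of x_star
    s_fit = fit["s_e"] * math.sqrt(1 / n + z_x ** 2 / (n - 1))
    y_hat = fit["a"] + fit["b"] * x_star
    half_mean = t_crit * s_fit
    half_pred = t_crit * math.sqrt(fit["s_e"] ** 2 + s_fit ** 2)
    return ((y_hat - half_mean, y_hat + half_mean),
            (y_hat - half_pred, y_hat + half_pred))

# Hypothetical data; T_CRIT is the two-sided 95% table value for df = n - 2 = 3.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]
T_CRIT = 3.182

fit = fit_line(xs, ys)
t_stat, slope_ci = slope_inference(fit, T_CRIT)
mean_ci, pred_ci = intervals_at(fit, 4.0, T_CRIT)

print(f"b = {fit['b']:.3f}, s_e = {fit['s_e']:.3f}, s_b = {fit['s_b']:.3f}")
print(f"t = {t_stat:.3f}, CI for beta = ({slope_ci[0]:.3f}, {slope_ci[1]:.3f})")
print(f"CI for mean y at x* = 4: ({mean_ci[0]:.3f}, {mean_ci[1]:.3f})")
print(f"Prediction interval at x* = 4: ({pred_ci[0]:.3f}, {pred_ci[1]:.3f})")
```

For these made-up data the interval for $\beta$ contains 0 and $|t|$ is below the critical value, so the model utility test would not reject $H_0$ at the 5% level. The prediction interval is always wider than the confidence interval for the mean at the same $x^*$, because it adds $s_e^2$ under the square root.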