
Multilayer Perceptron & Backpropagation

based on <Data Mining: Practical Machine Learning Tools and Techniques>, 2nd ed., written by Ian H. Witten & Eibe Frank

Images and Materials are from the official lecture slides of the book.

30th November, 2009

Presented by Kwak, Nam-ju

Coverage

Nonlinear Classification

Multilayer Perceptron

Backpropagation

Radial Basis Function Network

Nonlinear Classification

A single-layer perceptron can classify a dataset correctly only if the dataset is linearly separable.

This condition significantly restricts the classification ability of the model.

Here, when we speak of an n-layer perceptron, the number of layers n does not include the input layer.

Logical operations AND, OR, and NOT can be implemented by single-layer perceptrons.

[Figure: single-layer perceptrons for AND, OR, and NOT]
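As a quick check of the claim above, here is a minimal sketch of single-layer perceptrons with a step activation. The weights and biases are hypothetical, hand-picked values chosen for illustration (many other choices work); the point is only that one weighted sum plus a threshold is enough for each of these three operations.

```python
def perceptron(weights, bias, inputs):
    """Single-layer perceptron with a step activation: outputs 1 if the
    weighted sum of the inputs plus the bias is positive, otherwise 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

# Hand-picked (hypothetical) weights; many other choices work as well.
def and_gate(a, b):
    return perceptron([1, 1], -1.5, [a, b])

def or_gate(a, b):
    return perceptron([1, 1], -0.5, [a, b])

def not_gate(a):
    return perceptron([-1], 0.5, [a])

for a in (0, 1):
    for b in (0, 1):
        print(f"{a} {b} AND={and_gate(a, b)} OR={or_gate(a, b)}")
print(f"NOT 0 = {not_gate(0)}, NOT 1 = {not_gate(1)}")
```

AND and OR share the same weights and differ only in the bias, i.e. in where the separating line sits.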

However, XOR cannot: no single straight line separates the inputs where XOR is 1, (0,1) and (1,0), from those where it is 0, (0,0) and (1,1).

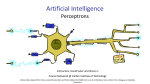
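One way to see the XOR limitation in practice is to run the classic perceptron learning rule on both truth tables. The sketch below (the function and table names are hypothetical) returns `True` once a full pass over the data makes no mistakes. For AND this is guaranteed to happen eventually because the data is linearly separable; for XOR it can never happen, because a mistake-free pass would mean the current weights classify all four inputs correctly, which no single line can do.

```python
def step(z):
    return 1 if z > 0 else 0

def train_perceptron(table, epochs=1000, lr=1.0):
    """Perceptron learning rule. Returns (converged, (w1, w2, b)), where
    converged is True once a full pass over the table makes no mistakes."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), y in table.items():
            pred = step(w1 * x1 + w2 * x2 + b)
            if pred != y:
                mistakes += 1
                delta = lr * (y - pred)  # +lr or -lr
                w1 += delta * x1
                w2 += delta * x2
                b += delta
        if mistakes == 0:
            return True, (w1, w2, b)
    return False, (w1, w2, b)

AND_TABLE = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

print(train_perceptron(AND_TABLE)[0])  # True: AND is linearly separable
print(train_perceptron(XOR_TABLE)[0])  # False: no line separates XOR
```

Raising `epochs` does not help for XOR; the weights just keep cycling.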

Multilayer Perceptron

As mentioned earlier, a perceptron can be regarded as an artificial neuron.

However, a single neuron is not powerful enough to solve complex problems.

How, then, can brain-like structures solve complex tasks such as image recognition?

Complex (or nonlinear) problems can be solved by a set of massively interconnected neurons, in such a way that the global problem is decomposed (or transformed) into several subproblems, each handled by some of the neurons.

A XOR B = (A OR B) AND (A NAND B)

OR, NAND, and AND are each linearly separable, so each can be computed by a single perceptron.

[Figure: a single-layer perceptron for NAND]

[Figure: a two-layer perceptron for XOR, with OR and NAND units in the hidden layer and an AND unit at the output]
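The decomposition A XOR B = (A OR B) AND (A NAND B) translates directly into a two-layer network. This is a minimal sketch with hypothetical hand-set weights (not learned): the hidden layer computes OR and NAND, and the output unit computes AND of the two hidden outputs.

```python
def perceptron(weights, bias, inputs):
    """Step-activation perceptron: 1 if weighted sum + bias > 0, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def xor(a, b):
    # Hidden layer: two linearly separable subproblems.
    h_or = perceptron([1, 1], -0.5, [a, b])     # A OR B
    h_nand = perceptron([-1, -1], 1.5, [a, b])  # A NAND B
    # Output layer: AND of the two hidden outputs.
    return perceptron([1, 1], -1.5, [h_or, h_nand])

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor(a, b))
```

No single unit in this network solves XOR; the nonlinearity comes from composing linearly separable pieces.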

Because AND, OR, and NOT suffice to express any propositional formula, a multilayer perceptron can represent any such formula; it has the same expressive power as a decision tree.

In fact, it turns out that a two-layer perceptron (not counting the input layer) is sufficient.

The hidden layer consists of units (perceptrons) and a bias unit that have no direct connection to the environment, i.e., to the input or the output.

[Figure: a multilayer perceptron with its hidden layer labeled; circle-like objects represent perceptrons]

So far, we’ve only talked about the “tool” for representing a classifier.

But our purpose is “learning”, so let’s move on to the learning issues.

How do we learn a multilayer perceptron?

The question divides into two parts: learning the structure of the network and learning the connection weights.

Learning the structure of the network: commonly done through experimentation

Learning the connection weights: backpropagation

Let’s focus on backpropagation this time!

Backpropagation

Backpropagation modifies the weights of the connections leading to the hidden units according to how strongly each unit contributes to the final prediction.

[Figure: weights adjusted based on how much each unit contributes to the final prediction, using gradient descent]

Gradient descent example: minimize f(w) = w² + 1, whose derivative is f’(w) = 2w.

The learning rate (r) is 0.1 and the start value is 4.

w(t+1) = w(t) - r*f’(w(t)) = w(t) - 0.1*2w(t) = 0.8*w(t)

4 -> 3.2 -> 2.56 -> 2.048 -> … -> 0 (the minimum)
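The update rule can be replayed in a few lines. This sketch assumes the example function is f(w) = w² + 1 (any function with derivative 2w gives the same trajectory); `gradient_descent` is a hypothetical helper name, not from the book.

```python
def gradient_descent(df, w0, lr, steps):
    """Repeatedly apply w(t+1) = w(t) - lr * f'(w(t)); return every iterate."""
    trajectory = [w0]
    w = w0
    for _ in range(steps):
        w = w - lr * df(w)
        trajectory.append(w)
    return trajectory

# Assumed example function: f(w) = w**2 + 1, so f'(w) = 2w.
path = gradient_descent(lambda w: 2 * w, w0=4.0, lr=0.1, steps=50)
print([round(w, 3) for w in path[:4]])  # [4.0, 3.2, 2.56, 2.048]
print(path[-1])  # close to 0, the minimizer of f
```

Each step multiplies w by 0.8, so w approaches 0 geometrically but never reaches it exactly. A learning rate above 1.0 would make this particular example diverge.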

Since gradient descent requires taking derivatives, the step function must be replaced by a differentiable function, the sigmoid function f(x) = 1/(1 + e^(-x)).

Each perceptron computes its output using this function.
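A short sketch of the sigmoid f(x) = 1/(1 + e^(-x)), assumed here as the differentiable replacement for the step function. Its derivative has the convenient closed form f’(x) = f(x)(1 - f(x)), which the code checks against a numerical finite difference.

```python
import math

def sigmoid(x):
    """Smooth, differentiable stand-in for the step function."""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_derivative(x):
    # f'(x) = f(x) * (1 - f(x))
    fx = sigmoid(x)
    return fx * (1.0 - fx)

# Compare the closed form with a central finite difference.
h = 1e-6
for x in (-2.0, 0.0, 1.5):
    numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2 * h)
    print(x, sigmoid_derivative(x), numeric)
```

The closed form matters for backpropagation because f’(x) can be computed from the unit’s output alone, with no extra exponentials.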

Error function: squared-error loss function

E = ½(y - f(x))²

x: the input the (output) perceptron receives, i.e., the weighted sum of its inputs

f(x): the output value the perceptron produces when x is given

y: the ACTUAL class label

We need to find the set of weights that minimizes this function.

An example multilayer perceptron for illustration

[Figure: example multilayer perceptron]
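For a network like this, the derivatives follow from the chain rule. The notation is assumed for this sketch: a_j are the inputs, w_ij is the weight from input j to hidden unit i, x_i = Σ_j w_ij a_j, f(x_i) is hidden unit i’s output, w_i is the weight from hidden unit i to the single output unit, x = Σ_i w_i f(x_i), and f is the sigmoid.

```latex
\begin{aligned}
E &= \tfrac{1}{2}\bigl(y - f(x)\bigr)^2, \qquad f'(x) = f(x)\bigl(1 - f(x)\bigr) \\
\frac{\partial E}{\partial w_i} &= \bigl(f(x) - y\bigr)\, f'(x)\, f(x_i) \\
\frac{\partial E}{\partial w_{ij}} &= \bigl(f(x) - y\bigr)\, f'(x)\, w_i\, f'(x_i)\, a_j
\end{aligned}
```

The common factor (f(x) - y) f’(x) is computed once at the output and passed back through w_i to each hidden unit. This backward flow of the error term gives the method its name.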

For each w ∈ {w_i’s, w_ij’s}, compute dE/dw for every training instance and add up these values.

Multiply the sum by the learning rate and subtract the result from the current value of w.

new w = w - (learning rate) * (dE/dw for training instance 1 + dE/dw for training instance 2 + … + dE/dw for training instance k)
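The batch procedure (sum dE/dw over all training instances, multiply by the learning rate, subtract) can be sketched in plain Python for a network with one hidden layer and one sigmoid output. Assumptions for this sketch: each input vector carries a trailing 1.0 as a bias input, the hidden layer has a bias unit with constant output 1, weights start uniformly in [-1, 1], and all function names are hypothetical. The per-instance gradients use (f(x) - y) f’(x) f(x_i) for hidden-to-output weights and (f(x) - y) f’(x) w_i f’(x_i) a_j for input-to-hidden weights.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(W_in, w_out, a):
    """W_in[i][j]: weight from input j to hidden unit i; w_out[i]: weight from
    hidden unit i to the output. `a` ends with 1.0, acting as a bias input."""
    hidden = [sigmoid(sum(w * aj for w, aj in zip(row, a))) for row in W_in]
    hidden.append(1.0)  # bias unit of the hidden layer
    out = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, out

def instance_gradients(W_in, w_out, a, y):
    """dE/dw for E = 1/2 * (y - f(x))**2 on a single training instance."""
    hidden, out = forward(W_in, w_out, a)
    delta_out = (out - y) * out * (1.0 - out)           # (f(x) - y) * f'(x)
    g_out = [delta_out * h for h in hidden]             # ... * f(x_i)
    g_in = []
    for i, row in enumerate(W_in):
        h = hidden[i]
        delta_i = delta_out * w_out[i] * h * (1.0 - h)  # ... * w_i * f'(x_i)
        g_in.append([delta_i * aj for aj in a])         # ... * a_j
    return g_in, g_out

def total_error(W_in, w_out, data):
    return sum(0.5 * (y - forward(W_in, w_out, a)[1]) ** 2 for a, y in data)

def train_batch(data, n_hidden=3, lr=0.5, epochs=5000, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W_in = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    for _ in range(epochs):
        sum_in = [[0.0] * n_in for _ in range(n_hidden)]
        sum_out = [0.0] * (n_hidden + 1)
        for a, y in data:  # add up dE/dw over all training instances
            g_in, g_out = instance_gradients(W_in, w_out, a, y)
            for i in range(n_hidden):
                for j in range(n_in):
                    sum_in[i][j] += g_in[i][j]
            for i in range(n_hidden + 1):
                sum_out[i] += g_out[i]
        # multiply the sums by the learning rate and subtract from the weights
        for i in range(n_hidden):
            for j in range(n_in):
                W_in[i][j] -= lr * sum_in[i][j]
        for i in range(n_hidden + 1):
            w_out[i] -= lr * sum_out[i]
    return W_in, w_out

# XOR; the trailing 1.0 in each input vector is the bias input.
XOR_DATA = [([0, 0, 1.0], 0), ([0, 1, 1.0], 1), ([1, 0, 1.0], 1), ([1, 1, 1.0], 0)]
W_in, w_out = train_batch(XOR_DATA)
for a, y in XOR_DATA:
    print(a[:2], y, round(forward(W_in, w_out, a)[1], 3))
```

Weights are changed only once per pass over the data. Depending on the random start, training can settle in a local minimum, so the printed outputs are not guaranteed to match XOR exactly.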

Batch learning: the procedure above, in which the weights are updated once after all training instances have been processed.

Stochastic backpropagation: update the weights incrementally after each training instance has been processed. (This suits online learning, in which new data arrives in a continuous stream and every training instance is processed just once.)
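In the stochastic variant, the gradient of a single instance is applied immediately. A minimal sketch under the same assumptions as a typical one-hidden-layer sigmoid network (trailing 1.0 bias input, hidden bias unit, hypothetical function names). The "stream" is simulated by drawing XOR instances at random, and each drawn instance is used for exactly one update.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(W_in, w_out, a):
    hidden = [sigmoid(sum(w * aj for w, aj in zip(row, a))) for row in W_in]
    hidden.append(1.0)  # bias unit of the hidden layer
    return hidden, sigmoid(sum(w * h for w, h in zip(w_out, hidden)))

def sgd_update(W_in, w_out, a, y, lr):
    """Backpropagate the error of ONE instance and update the weights in place
    right away. `a` ends with 1.0, acting as a bias input."""
    hidden, out = predict(W_in, w_out, a)
    delta_out = (out - y) * out * (1.0 - out)  # (f(x) - y) * f'(x)
    for i, row in enumerate(W_in):             # uses w_out before it changes
        delta_i = delta_out * w_out[i] * hidden[i] * (1.0 - hidden[i])
        for j in range(len(row)):
            row[j] -= lr * delta_i * a[j]
    for i in range(len(w_out)):
        w_out[i] -= lr * delta_out * hidden[i]

def error(W_in, w_out, data):
    return sum(0.5 * (y - predict(W_in, w_out, a)[1]) ** 2 for a, y in data)

rng = random.Random(1)
W_in = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
w_out = [rng.uniform(-1, 1) for _ in range(4)]
XOR_DATA = [([0, 0, 1.0], 0), ([0, 1, 1.0], 1), ([1, 0, 1.0], 1), ([1, 1, 1.0], 0)]

before = error(W_in, w_out, XOR_DATA)
# Simulated stream: each drawn instance is used for one update, then discarded.
for _ in range(20000):
    a, y = rng.choice(XOR_DATA)
    sgd_update(W_in, w_out, a, y, lr=0.5)
after = error(W_in, w_out, XOR_DATA)
print(f"error before: {before:.4f}, after: {after:.4f}")
```

Compared with batch learning, no gradient sums are stored, which is what makes this form usable when data arrives one instance at a time.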

Overfitting: the training instances cannot fully represent the underlying population, so a network that fits them too closely may generalize poorly.

Early stopping: when the error on a holdout set starts to increase, terminate the backpropagation iterations.

Weight decay: add to the error function a penalty term, which is the sum of the squares of all weights in the network multiplied by a decay factor.

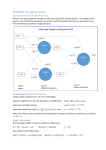
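Both countermeasures are small additions to the training loop. The sketch below is illustrative and uses hypothetical helper names: the penalty is decay * Σ w², so its derivative adds 2 * decay * w to each weight’s gradient. The early-stopping loop takes two caller-supplied functions, one that trains for an epoch and one that measures holdout error. The usage lines feed it made-up holdout errors instead of training a real network.

```python
def weight_decay_penalty(weights, decay):
    """Penalty term added to the error: decay * (sum of squared weights)."""
    return decay * sum(w * w for w in weights)

def decayed_gradient(grad, weights, decay):
    """Gradient of (error + penalty): each dE/dw gains 2 * decay * w, which
    pulls every weight toward zero on each update."""
    return [g + 2.0 * decay * w for g, w in zip(grad, weights)]

def early_stopping(train_one_epoch, holdout_error, max_epochs=1000):
    """Train until the holdout error starts to increase; return how many epochs
    to keep. In practice one also saves a copy of the weights after each
    epoch so the best ones can be restored."""
    previous = holdout_error()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        current = holdout_error()
        if current > previous:
            return epoch - 1  # the previous epoch had the lower holdout error
        previous = current
    return max_epochs

# Illustration with made-up holdout errors (no real network is trained here).
fake_errors = iter([1.0, 0.8, 0.6, 0.7, 0.5])
stop = early_stopping(lambda: None, lambda: next(fake_errors))
print(stop)  # 2
```

This simple rule stops at the first increase. Noisy holdout curves (like the later drop to 0.5 above) are one reason practical implementations often wait a few epochs before giving up.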

Radial Basis Function Network

It differs from a multilayer perceptron in the way the hidden units perform computations.

Each hidden unit represents a particular point in input space, and its output for a given instance depends on the distance between that point and the instance.

The closer the two points, the stronger the output.

A bell-shaped Gaussian function is used.
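A single RBF hidden unit can be sketched as a Gaussian of the squared Euclidean distance between the instance and the unit’s center. The parameterization exp(-d² / (2·width²)) and the name `rbf_unit` are assumptions for this sketch; other scalings of the width are also used.

```python
import math

def rbf_unit(x, center, width):
    """Output of one RBF hidden unit: a Gaussian of the squared Euclidean
    distance between the instance x and the unit's center."""
    sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-sq_dist / (2.0 * width ** 2))

center = (0.0, 0.0)
for point in [(0.0, 0.0), (0.5, 0.0), (1.0, 1.0)]:
    print(point, round(rbf_unit(point, center, width=0.5), 4))
```

The output is 1 at the center and falls toward 0 with distance; `width` controls how quickly.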

Things to learn: the centers and widths of the RBFs, and the weights used to form the linear combination of the outputs obtained from the hidden layer

Picture from http://documents.wolfram.com/applications/neuralnetworks/NeuralNetworkTheory/2.5.2.html
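To keep the example short, this sketch learns only the last of those three things. The centers and width are fixed by hand (hypothetical values; in practice they are often chosen by clustering, e.g. k-means). The linear-combination weights are then fitted by batch gradient descent on squared error. A constant bias unit is added to the hidden layer as an assumption. On XOR, two Gaussian units centered at (0,0) and (1,1) make the problem solvable by a linear output.

```python
import math

def rbf_features(x, centers, width):
    # Hidden layer: one Gaussian unit per center, plus a constant bias unit.
    feats = [math.exp(-sum((xi - ci) ** 2 for xi, ci in zip(x, c)) / (2.0 * width ** 2))
             for c in centers]
    return feats + [1.0]

def train_output_weights(data, centers, width, lr=0.1, epochs=2000):
    """Learn the linear-combination weights by batch gradient descent on
    E = sum of 1/2 * (y - output)**2, with centers and width held fixed."""
    phis = [(rbf_features(x, centers, width), y) for x, y in data]
    w = [0.0] * (len(centers) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for phi, y in phis:
            out = sum(wk * pk for wk, pk in zip(w, phi))  # linear output unit
            for k, pk in enumerate(phi):
                grad[k] += (out - y) * pk
        w = [wk - lr * gk for wk, gk in zip(w, grad)]
    return w

def predict(x, w, centers, width):
    return sum(wk * pk for wk, pk in zip(w, rbf_features(x, centers, width)))

XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
CENTERS = [(0, 0), (1, 1)]  # hypothetical, hand-picked centers
WIDTH = 0.35                # hypothetical, hand-picked width
weights = train_output_weights(XOR, CENTERS, WIDTH)
for x, y in XOR:
    print(x, y, round(predict(x, weights, CENTERS, WIDTH), 3))
```

Because the output is linear in the weights once the centers and widths are fixed, this last stage is an ordinary least-squares problem. That is why RBF networks are often trained stage by stage rather than end to end.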

Question

Any questions?