On the Decision Boundaries of Hyperbolic Neurons

... which is analytic and bounded in the hyperbolic plane unlike the non-split (fully) complex-valued sigmoid activation function [4]. Using this function is a significant extension of the model in [1]. As the result of this study, it was learned that one of the advantages of hyperbolic neurons is that t ...

GEO 428: Slope

... identifying 'run' distance; identifying 'run' direction. Raster notation: let E be the cell for which to calculate slope; Za is the elevation for cell A, etc. A common algorithm: Horn (1981), implemented in A/I Grid, averages the east-west and north-south gradients: dz/dx = ((Za + 2*Zd + Zg) - (Zc + 2*Zf + Zi)) / 8* ...
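The gradient averaging described in this excerpt can be sketched as follows. This is a minimal illustration, not the implementation the excerpt refers to. The excerpt is truncated after `8*`, so the divisor `8 * cell_size`, the north-south term `dz_dy`, the row-major window layout (A to I, top-left to bottom-right), and the function name `horn_slope_degrees` are all assumptions.

```python
import math


def horn_slope_degrees(window, cell_size):
    """Slope in degrees at the centre cell E of a 3x3 elevation window.

    window is [[Za, Zb, Zc], [Zd, Ze, Zf], [Zg, Zh, Zi]] (assumed layout).
    cell_size is the ground distance between cell centres (assumed divisor).
    """
    (za, zb, zc), (zd, _ze, zf), (zg, zh, zi) = window

    # East-west gradient, with the sign convention used in the excerpt.
    dz_dx = ((za + 2 * zd + zg) - (zc + 2 * zf + zi)) / (8 * cell_size)

    # North-south gradient: assumed by symmetry, not shown in the excerpt.
    dz_dy = ((zg + 2 * zh + zi) - (za + 2 * zb + zc)) / (8 * cell_size)

    # Slope is the angle whose tangent is the magnitude of the gradient.
    return math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
```

The sign of each gradient affects aspect but not slope, because only the gradient magnitude enters the final step.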

Combination of LSTM and CNN for recognizing mathematical symbols

... gradient features is deployed to improve the recognition accuracy of LSTM. We applied the dropout technique mentioned in [1] to the first two fully connected layers of the CNN. These approaches proved effective in our experiments with 5 trials: Using LSTM, local gradient features increased ...

Biologically Plausible Error-driven Learning using Local Activation

... This equation can be iteratively applied until the network settles into a stable equilibrium state (i.e., until the change in activation state goes below a small threshold value), which it will provably do if the weights are symmetric (Hopfield, 1984), and often even if they are not (Galland & Hinto ...

Document

... A hidden layer “hides” its desired output. Neurons in the hidden layer cannot be observed through the input/output behaviour of the network. There is no obvious way to know what the desired output of the hidden layer should be. Commercial ANNs incorporate three and sometimes four layers, including o ...

artificial neural networks

... Information is stored and processed in a neural network simultaneously throughout the whole network, rather than at specific locations. In other words, in neural networks, both data and its processing are global rather than local. Learning is a fundamental and essential characteristic of biological ...

Neural Networks

... shared by different parts of the brain • Evidence from neuro‐rewiring experiments • Cut the wire from ear to auditory cortex • Route signal from eyes to the auditory cortex • Auditory cortex learns to see • animals will eventually learn to perform a variety of object ...

Classification of Clustered Microcalcifications using Resilient

... In this database, expert radiologists marked areas where MCCs are present. Such areas are called Regions of Interest (ROIs). ...

ARTIFICIAL NEURAL NETWORKS AND COMPLEXITY: AN

... Complexity can therefore be summarised by mixing the following factors: high number of dimensions (or descriptive variables), non-linearity in description of differential equation systems, some noise, which may come naturally from environment, from exclusion of any marginal aspect of the system desc ...

Artificial Neural Network for the Diagnosis of Thyroid Disease using

... Using a small value for momentum will lead to prolonged training. The number of training epochs is the number of times the training data has been presented to the network. The BP algorithm guarantees that total error in the training set will continue to decrease as the number of training ...

Modeling Estuarine Salinity Using Artificial Neural Networks

... common learning type, supervised learning, involves presenting the network with a set of expected outputs, and each predicted output is compared with the respective expected output to obtain an error. Using the error, each weight is iteratively updated using gradient descent to minimize the error. T ...
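The compare-then-update loop described in this excerpt can be sketched with a single linear unit. This is an illustrative sketch under stated assumptions, not the model used in the excerpted study: the squared-error objective, the per-sample update, and the names `train_linear_unit`, `learning_rate`, and `epochs` are all hypothetical.

```python
def train_linear_unit(samples, learning_rate=0.1, epochs=200):
    """Fit predicted = weight * x + bias to (x, expected) pairs by gradient descent."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        for x, expected in samples:
            predicted = weight * x + bias
            # Error between the expected and the predicted output.
            error = expected - predicted
            # Step each parameter against the gradient of 0.5 * error**2.
            weight += learning_rate * error * x
            bias += learning_rate * error
    return weight, bias
```

For example, `train_linear_unit([(0.0, 0.0), (1.0, 2.0), (2.0, 4.0)])` moves the weight toward 2 and the bias toward 0, since those values reproduce every expected output exactly.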

A Project on Gesture Recognition with Neural Networks for

... answer questions that require computational thinking. Overall, the neural network project is versatile since it allows for theoretical questions and for implementations. We list a variety of possible project choices, including easy and difficult questions. Teachers need to select among them since we ...

chapter two neural networks

... The history of artificial neural networks can be traced back to the early 1940s. The first important paper on neural networks was published by the physiologist Warren McCulloch and Walter Pitts in 1943. They proposed a simple model of a neuron with an electronic circuit; this model consists of two input and o ...

X t

... The figures below are such examples. This type of trees is known as Ordinary Binary Classification Trees (OBCT). The decision hyperplanes, splitting the space into regions, are parallel to the axis of the spaces. Other types of partition are also possible, yet less popular. ...

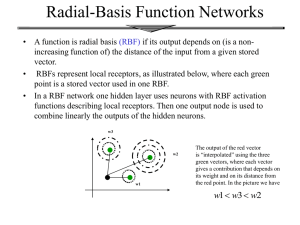

Radial-Basis Function Networks

... – RBF NN using Gaussian functions construct local approximations to non-linear I/O mapping. – FF NN construct global approximations to non-linear I/O mapping. ...

This paper a local linear radial basis function neural network

... Burke et al. [7] compared the accuracy of TNM staging system with the accuracy of a multilayer back propagation Artificial Neural Network (ANN) for predicting the 5-year survival of patients with breast carcinoma. ANN increased the prediction capacity by 10 % obtaining the final result of 54 %. They ...

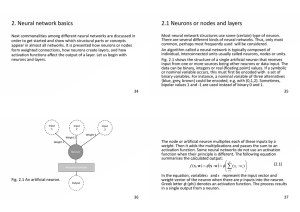

2. Neural network basics 2.1 Neurons or nodes and layers

... appear in almost all networks. It is presented how neurons or nodes form weighted connections, how neurons create layers, and how activation functions affect the output of a layer. Let us begin with neurons and layers. ...

application of an expert system for assessment of the short time

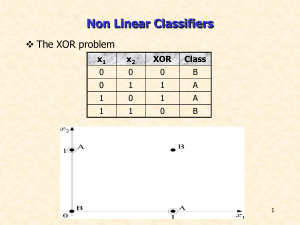

... The aim of the perceptron is to classify inputs, x1, x2, . . ., xn, into one of two classes, say A1 and A2. In the case of an elementary perceptron, the n-dimensional space is divided by a hyperplane into two decision regions. The hyperplane is defined by the linearly separable function: ...
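The two decision regions can be illustrated with a short sketch. The excerpt is cut off before the function itself, so the form used here (weighted sum of inputs minus a threshold, with the hyperplane where that value is zero) is the common textbook form and an assumption, as are the names `perceptron_class`, `weights`, and `threshold`.

```python
def perceptron_class(inputs, weights, threshold):
    """Assign inputs x1..xn to class "A1" or "A2" by which side of the hyperplane they fall on."""
    # Assumed hyperplane: sum(x_i * w_i) - threshold = 0.
    activation = sum(x * w for x, w in zip(inputs, weights)) - threshold
    # Points on or above the hyperplane go to A1, the rest to A2 (assumed convention).
    return "A1" if activation >= 0 else "A2"
```

With `weights=[1.0, 1.0]` and `threshold=1.5`, the input `[1, 1]` falls in A1 and `[0, 1]` in A2, which is the logical AND of two binary inputs.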

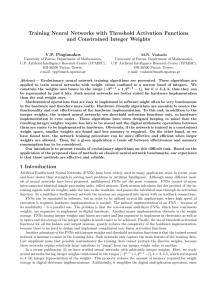

Training Neural Networks with Threshold Activation Functions and Constrained Integer Weights

... for testing the functionality, and computer simulations have been developed to study the performance of the DE training algorithms for various values of k. We call DE1 the algorithm that uses relation (1) as mutation operator, DE2 the algorithm that uses relation (2), and so on. Table 1 summarizes t ...

PERFORMANCE OF MEE OVER TDNN IN A TIME SERIES PREDICTION

... This project deals with the prediction of a non-linear time series, like sunspot time series, using a non-linear system. For this the time-delay neural network with back propagation learning is used to predict the time series. The primary objective is to implement the MEE cost function over TDNN and ...

... Here the input vector p is represented by the solid dark vertical bar at the left. The dimensions of p are shown below the symbol p in the figure as Rx1. (Note that a capital letter, such as R in the previous sentence, is used when referring to the size of a vector.) Thus, p is a vector of R input e ...

2016 prephd course work study material on development of BPN

... with its target $t_k$ to determine the associated error for that pattern with the unit. Based on the error, the factor $\delta_k$ ($k = 1, \dots, m$) is computed and is used to distribute the error at output unit $y_k$ back to all units in the previous layer. Similarly, the factor $\delta_j$ ($j = 1, \dots, p$) is computed fo ...
