Reasoning of significance tests

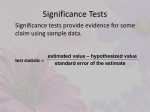

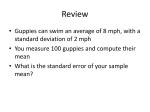

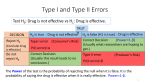

T-tests
• Computing a t-test: the t statistic, the t distribution
• Measures of effect size: confidence intervals, Cohen’s d

Part I • Computing a t-test: the t statistic, the t distribution

Z as test statistic
• Use a Z-statistic only if you know the population standard deviation (σ).
• The Z-statistic converts a sample mean into a z-score from the null distribution:
$$Z_{test} = \frac{\bar{X} - \mu_{H_0}}{SE} = \frac{\bar{X} - \mu_{H_0}}{\sigma/\sqrt{n}}$$
• The p-value is the probability of getting a $Z_{test}$ as extreme as yours under the null distribution.

[Figure: one-tailed test, α = .05 in one tail, $Z_{crit}$ = −1.65; two-tailed test, .025 in each tail, $Z_{crit}$ = ±1.96. Beyond $Z_{crit}$: reject H0; otherwise: fail to reject H0.]

t as a test statistic
[Figure: z-test: population and sample ($\bar{X}$); t-test: population and sample ($\bar{X}$, s).]
• t-test: uses sample data to evaluate a hypothesis about a population mean when the population standard deviation (σ) is unknown.
• We use the sample standard deviation (s) to estimate the standard error:
Standard error: $\sigma_{\bar{X}} = \frac{\sigma}{\sqrt{n}}$, giving $z = \frac{\bar{X} - \mu_{H_0}}{\sigma_{\bar{X}}}$
Estimated standard error: $s_{\bar{X}} = \frac{s}{\sqrt{n}}$, giving $t = \frac{\bar{X} - \mu_{H_0}}{s_{\bar{X}}}$

t as a test statistic
• Use a t-statistic when you don’t know the population standard deviation (σ).
• The t-statistic converts a sample mean into a t-score (using the null hypothesis):
$$t_{test} = \frac{\bar{X} - \mu_{H_0}}{SE} = \frac{\bar{X} - \mu_{H_0}}{s/\sqrt{n}}$$
• The p-value is the probability of getting a $t_{test}$ as extreme as yours under the null distribution.

t distribution
• You can use s to approximate σ, but then the sampling distribution is a t distribution instead of a normal distribution.
• Why are Z-scores normally distributed, but t-scores are not?
In $z_{test} = \frac{\bar{X} - \mu_{H_0}}{\sigma_{\bar{X}}}$, the numerator is a normal random variable ($\mu_{H_0}$ is a constant) and the denominator $\sigma_{\bar{X}}$ is a constant; a normal variable divided by a constant is still normal.
In $t_{test} = \frac{\bar{X} - \mu_{H_0}}{s_{\bar{X}}}$, the numerator is the same normal random variable, but the denominator $s_{\bar{X}}$ is itself a (non-normal) random variable that changes from sample to sample, so the ratio is not normal.

t distribution
• With a very large sample, the estimated standard error will be very near the true standard error, and thus t will be almost exactly the same as Z.
• Unlike the standard normal (z) distribution, t is a family of curves. As n gets bigger, t becomes more normal.
• For smaller n, the t distribution has a lower peak and fatter tails than the normal curve (it is leptokurtic).
• We use “degrees of freedom” to identify which t curve to use. For a basic t-test, df = n − 1.

[Figure: comparing a t distribution (df = 5) with the standard normal distribution; two-tailed α = .05 critical values are $Z_{crit}$ = 1.96 and $t_{crit}$ = 2.57.]

Degrees of freedom
• The number of scores in a sample that are free to vary.
• E.g., for one sample, the sample mean restricts one value, so df = n − 1. (With n = 3 and $\bar{X} = 5$, the scores must sum to 15, so once two scores are chosen the third is fixed.)
• As df approaches infinity, the t distribution approaches a normal curve.
• At low df, the t distribution has “fat tails”, which means $t_{crit}$ is larger than $Z_{crit}$. Thus the sample evidence must be more extreme before we get to reject the null (a tougher test).

t distribution
• There are too many different curves to put each one in a table. Table E.6 shows just the critical values for one tail at various degrees of freedom and various levels of alpha.

Table E.6 (excerpt): critical values of t by level of significance for a one-tailed test

| df | .05 | .025 | .01 | .005 |
|---|---|---|---|---|
| 1 | 6.314 | 12.706 | 31.821 | 63.657 |
| 2 | 2.920 | 4.303 | 6.965 | 9.925 |
| 3 | 2.353 | 3.182 | 4.541 | 5.841 |
| 4 | 2.132 | 2.776 | 3.747 | 4.604 |

• Because only a few alpha levels are tabled, it is hard to get exact p-values. You have to estimate them from the tabled values your t falls between.

Practice with Table E.6
• With a sample of size 6, what is the degrees of freedom? For a one-tailed test, what is the critical value of t for an alpha of .05? For an alpha of .01?
Answer: df = 5; $t_{crit}$ = 2.015 (α = .05); $t_{crit}$ = 3.365 (α = .01).
• For a sample of size 25, doing a two-tailed test, what are the degrees of freedom and the critical value of t for an alpha of .05 and for an alpha of .01?
Answer: df = 24; $t_{crit}$ = 2.064 (α = .05); $t_{crit}$ = 2.797 (α = .01). For a two-tailed test, read the one-tailed column for α/2 (.025 and .005).
• You have a sample of size 13 and you are doing a one-tailed test. Your $t_{calc}$ = 2. What do you approximate the p-value to be?
Answer: with df = 12, t = 2 falls between $t_{crit}$ = 1.782 (.05) and $t_{crit}$ = 2.179 (.025), so the p-value is between .025 and .05.
• What if you had the same data, but were doing a two-tailed test?
Answer: both tails count, so the p-value doubles: between .05 and .10.

Illustration
In a study of families of cancer patients, Compas et al. (1994) observed that very young children report few symptoms of anxiety on the CMAS. Contained within the CMAS are nine items that make up a “social desirability scale” (SDS).
Compas wanted to know whether young children have unusually high social desirability scores.

Illustration
He got a sample of 36 children from families with a parent who has cancer. The mean SDS score was 4.39, with a standard deviation of 2.61. Previous studies indicated that a population of elementary school children (all ages) typically has a mean of 3.87 on the SDS. Is there evidence that Compas’s sample of very young children was significantly different from the general child population?
$$t_{calc} = \frac{4.39 - 3.87}{2.61/\sqrt{36}} = \frac{0.52}{0.435} \approx 1.195, \qquad df = 35$$
Two-tailed p-value: between .20 and .30.
• What should he conclude? What can he do now?

Factors that affect the magnitude of t and the decision
• the actual obtained difference, $\bar{X} - \mu_{H_0}$
• the magnitude of the sample variance ($s^2$)
• the sample size (n)
• the significance level (alpha)
• whether the test is one-tailed or two-tailed

How could you increase your chances of rejecting the null?

Part II • Measures of effect size: confidence intervals, Cohen’s d

Hypothesis Tests vs Effect Size
• Hypothesis tests
  • Set up a null hypothesis about µ. Reject (or fail to reject) it.
  • Only indicates the direction of the effect (e.g., $\mu > \mu_{H_0}$).
  • Says nothing about effect size except “large enough to be significant”.
• Effect size
  • Tells you about the magnitude of the phenomenon.
  • Helpful in deciding “importance”.
  • Not just “which direction” but “how far”.

P-value: Bad Measure of Effect Size
• “Significant” does not mean important or large.
• Significance depends on sample size.
• “The null hypothesis is never true in fact. Give me a large enough sample and I can guarantee a significant result.” (Abelson)

Confidence Interval
• We could estimate effect size with our observed sample deviation, $\bar{X} - \mu_{H_0}$.
• But we want a window of uncertainty around that estimate.
• So we provide a “confidence interval” for our observed deviation.
• We say we are xx% confident that the true effect size lies somewhere in that window.

Finding the Window
[Figures: the observed sample mean $\bar{X}$ with candidate null distributions placed around it; the true mean could be…]
• If we want alpha = .05, what is the lowest $\mu_{H_0}$ we would accept? $\bar{X} - 1.96(SE)$
• If we want alpha = .05, what is the highest $\mu_{H_0}$ we would accept? $\bar{X} + 1.96(SE)$

Let’s generalize.

Our Window!
For any particular level of alpha, the confidence interval runs from
$$\bar{X} - t_{crit}(SE) \quad \text{to} \quad \bar{X} + t_{crit}(SE)$$
The 1.96 used in the window above is the z-based critical value for alpha = .05; when s replaces σ, it becomes $t_{crit}$.

Confidence intervals
• Confidence level = 1 − α. If alpha is .05, then the confidence level is 95%.
• 95% confidence means that, across repeated samples, 95% of the intervals this procedure produces will capture the true mean (or the true effect).

Constructing confidence intervals
1. Choose the level of confidence (90%, 95%, 99%, …).
2. Find the critical t-value (use the two-tailed alphas).
3. Find the standard error.
4. Get the confidence interval:
   • C.I. for the mean: $\bar{X} \pm t_{crit}(s_{\bar{X}})$
   • C.I. for the effect: $(\bar{X} - \mu_{H_0}) \pm t_{crit}(s_{\bar{X}})$

Exercise in constructing CI
We have a sample of 10 girls who, on average, went on their first dates at 15.5 years, with a standard deviation of 4.2 years. What range of values can we assert with 95% confidence contains the true population mean?
With df = 9, $t_{crit}$ = 2.262 and $s_{\bar{X}} = 4.2/\sqrt{10} \approx 1.328$:
Margin = 2.262 × 1.328 ≈ 3 years; CI = (12.50, 18.50)
• Using alpha = .05, would we reject the null hypothesis that µ = 10? Yes: 10 lies outside the interval.
• What about µ = 17? No: 17 lies inside the interval.

Exercise in constructing CI (continued)
Using the same sample, let’s say we were comparing these girls (from New York) to the general American population, μ = 13 years. What is our C.I. estimate of the effect size for being from New York?
Effect = 15.5 − 13 = 2.5 years; Margin ≈ 3 years; CI = (−0.50, 5.50)

Factors affecting a CI
1. Level of confidence (higher confidence → wider interval)
2. Sample size (larger n → narrower interval)

Confidence Intervals
Pros
• Gives a range of likely values for the effect in original units
• Has all the information of a significance test and more
• Builds in the level of certainty
Cons
• Units are specific to the sample
• Hard to compare across studies
• No reference point (is this a big effect?)

Cohen’s d
• A standardized way to estimate effect size
• Compares the size of the effect to the size of the standard deviation:
$$d = \frac{\bar{X} - \mu_{H_0}}{s}$$

Exercise in constructing d
Using the same sample of 10 girls (mean first date at 15.5 years, standard deviation 4.2 years) and the general American population μ = 13 years, what is our d estimate of the effect size for being from New York?
$$d = \frac{15.5 - 13}{4.2} \approx .595$$
Is this big?
• .2: small
• .5: moderate
• .8: large
• > 1: a very big deal
At about .6, this is a moderate effect.

Cohen’s d
Pros
• Uses an important reference point (s)
• Is standardized
• Can be compared across studies
Cons
• Loses raw units
• Provides no estimate of certainty

Review
• Hypothesis tests: t-test, $t_{test} = \frac{\bar{X} - \mu_{H_0}}{s_{\bar{X}}}$
• Confidence interval: t interval, $\bar{X} \pm t_{crit}\,s_{\bar{X}}$ or $(\bar{X} - \mu_{H_0}) \pm t_{crit}\,s_{\bar{X}}$
• Effect size: Cohen’s d, $d = \frac{\bar{X} - \mu_{H_0}}{s}$
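The one-sample t-test, the Table E.6 lookups, and the Compas illustration can be checked numerically. The sketch below is one way to do it with only the Python standard library, under stated assumptions: the helper names (`t_density`, `t_tail_area`, `t_critical`, `one_sample_t`, `p_value`) are hypothetical, the tail area of t is approximated by integrating the density with Simpson's rule, and critical values are found by bisection. In everyday work a statistics library (for example SciPy's `scipy.stats.t`) would normally be used instead; this version only makes the table logic explicit.

```python
import math
from statistics import NormalDist


def t_density(df: int):
    """Return the probability density function of Student's t with df degrees of freedom."""
    # Normalizing constant, computed in log space to avoid overflow in gamma().
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    c = math.exp(log_c)
    return lambda x: c * (1 + x * x / df) ** (-(df + 1) / 2)


def t_tail_area(t: float, df: int, steps: int = 2000) -> float:
    """One-tailed area beyond |t|, i.e. P(T >= |t|).

    Integrates the density over [0, |t|] with Simpson's rule (steps must be
    even) and subtracts the result from 0.5, using symmetry about 0.
    """
    pdf = t_density(df)
    b = abs(t)
    h = b / steps
    total = pdf(0.0) + pdf(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    return max(0.0, 0.5 - total * h / 3)


def t_critical(alpha: float, df: int, tails: int = 1) -> float:
    """Critical t for a one- or two-tailed test at level alpha (bisection search)."""
    one_tail = alpha / tails  # a two-tailed test puts alpha/2 in each tail
    lo, hi = 0.0, 1000.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_tail_area(mid, df) > one_tail:
            lo = mid  # too much area left in the tail: move outward
        else:
            hi = mid
    return (lo + hi) / 2


def one_sample_t(mean: float, sd: float, n: int, mu0: float):
    """Return (t statistic, df) for testing H0: mu = mu0 from summary data."""
    se = sd / math.sqrt(n)  # estimated standard error s / sqrt(n)
    return (mean - mu0) / se, n - 1


def p_value(t: float, df: int, tails: int = 2) -> float:
    """p-value for an observed t; tails=2 doubles the one-tailed area."""
    return min(1.0, tails * t_tail_area(t, df))


if __name__ == "__main__":
    # Table E.6 practice
    print(round(t_critical(0.05, 5), 3))            # 2.015
    print(round(t_critical(0.01, 5), 3))            # 3.365
    print(round(t_critical(0.05, 24, tails=2), 3))  # 2.064
    print(round(t_critical(0.01, 24, tails=2), 3))  # 2.797
    # n = 13, t_calc = 2: one-tailed p falls between .025 and .05
    print(p_value(2.0, 12, tails=1), p_value(2.0, 12, tails=2))
    # Compas illustration: t is about 1.195 with df = 35
    t, df = one_sample_t(4.39, 2.61, 36, 3.87)
    print(round(t, 3), df, round(p_value(t, df), 3))
    # Two-tailed t_crit at alpha = .05 shrinks toward Z_crit as df grows
    z_crit = NormalDist().inv_cdf(0.975)
    for d in (5, 30, 1000):
        print(d, round(t_critical(0.05, d, tails=2), 3), round(z_crit, 3))
```

Because `p_value` returns a number rather than a bracket, it shows what the table can only approximate: the Compas result lands somewhere inside the ".20 to .30" range read from the table.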
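The confidence-interval and Cohen’s d exercises can also be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the helper names (`confidence_interval`, `rejects`, `cohens_d`, `describe_d`) are hypothetical, and t_crit = 2.262 (two-tailed .05, df = 9) is taken from a standard t table rather than computed, since that row is not among the Table E.6 values reproduced in these slides. The `rejects` helper relies on the usual duality between a (1 − α) confidence interval and a two-tailed test at level α.

```python
import math

# Assumed input: two-tailed critical t for alpha = .05 with df = 9, read from a
# standard t table (one-tailed .025 column). Not computed here.
T_CRIT_DF9_ALPHA05 = 2.262


def confidence_interval(center: float, sd: float, n: int, t_crit: float):
    """Return center +/- t_crit * s / sqrt(n).

    Pass center = sample mean for a CI on the mean, or
    center = sample mean - mu_H0 for a CI on the effect.
    """
    margin = t_crit * sd / math.sqrt(n)
    return center - margin, center + margin


def rejects(mu0: float, interval) -> bool:
    """A two-tailed test at the same alpha rejects mu0 iff mu0 lies outside the CI."""
    lo, hi = interval
    return not lo <= mu0 <= hi


def cohens_d(mean: float, mu0: float, sd: float) -> float:
    """Standardized effect size d = (mean - mu_H0) / s."""
    return (mean - mu0) / sd


def describe_d(d: float) -> str:
    """Rough benchmarks from the slides; the label below .2 is an assumption."""
    size = abs(d)
    if size > 1:
        return "a very big deal"
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "moderate"
    if size >= 0.2:
        return "small"
    return "below small"


if __name__ == "__main__":
    mean, sd, n, mu_us = 15.5, 4.2, 10, 13.0  # first-date example
    ci_mean = confidence_interval(mean, sd, n, T_CRIT_DF9_ALPHA05)
    print("CI for mean: (%.2f, %.2f)" % ci_mean)      # (12.50, 18.50)
    print(rejects(10, ci_mean), rejects(17, ci_mean))  # True False
    ci_effect = confidence_interval(mean - mu_us, sd, n, T_CRIT_DF9_ALPHA05)
    print("CI for effect: (%.2f, %.2f)" % ci_effect)  # (-0.50, 5.50)
    d = cohens_d(mean, mu_us, sd)
    print(round(d, 3), describe_d(d))                  # 0.595 moderate
```

Keeping the critical value as an explicit argument mirrors the slides’ workflow of looking t_crit up in a table; for another confidence level or sample size, only that argument changes.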