Estimation/Confidence Intervals for Population Mean

Foundations of statistics wikipedia , lookup

History of statistics wikipedia , lookup

Degrees of freedom (statistics) wikipedia , lookup

Confidence interval wikipedia , lookup

Bootstrapping (statistics) wikipedia , lookup

Taylor's law wikipedia , lookup

German tank problem wikipedia , lookup

Misuse of statistics wikipedia , lookup

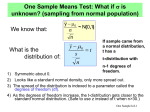

One Sample Means Test: What if $\sigma$ is unknown? (sampling from normal population)

We know that:

$$\frac{\bar{y} - \mu_0}{\sigma/\sqrt{n}} \sim N(0,1)$$

What is the distribution of:

$$t = \frac{\bar{y} - \mu_0}{s/\sqrt{n}}$$

If the sample came from a normal distribution, t has a t-distribution with n-1 degrees of freedom.

1) Symmetric about 0.

2) Looks like a standard normal density, only more spread out.

3) The spread of the distribution is indexed by a parameter called the degrees of freedom (df).

4) As the degrees of freedom increase, the t-distribution gets closer to the standard normal distribution. (As a rule of thumb, it is safe to use z instead of t when n > 30.)

One Sample Inf-1

See Table 3 Ott & Longnecker

Tail probabilities of the t-distribution

| df | .1 | .05 | .025 | .01 | .005 | .001 |
|---|---|---|---|---|---|---|
| 1 | 3.078 | 6.314 | 12.706 | 31.821 | 63.657 | 318.309 |
| 5 | 1.476 | 2.015 | 2.571 | 3.365 | 4.032 | 5.893 |
| 10 | 1.372 | 1.812 | 2.228 | 2.764 | 3.169 | 4.144 |
| 15 | 1.341 | 1.753 | 2.131 | 2.602 | 2.947 | 3.733 |
| 20 | 1.325 | 1.725 | 2.086 | 2.528 | 2.845 | 3.552 |
| 25 | 1.316 | 1.708 | 2.060 | 2.485 | 2.787 | 3.450 |
| 30 | 1.310 | 1.697 | 2.042 | 2.457 | 2.750 | 3.385 |
| 40 | 1.303 | 1.684 | 2.021 | 2.423 | 2.704 | 3.307 |
| NORMAL (0,1) | 1.282 | 1.645 | 1.960 | 2.326 | 2.576 | 3.090 |
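The table shows the t critical values shrinking toward the normal ones as the degrees of freedom grow. A minimal sketch of that comparison follows. It hard-codes the .025 column from the table and takes the normal quantile from Python's standard library; the names `T_025`, `z_025` and `gaps` are illustrative and not part of the original slides.

```python
from statistics import NormalDist

# Upper-tail .025 critical values copied from the table (df -> t).
T_025 = {1: 12.706, 5: 2.571, 10: 2.228, 15: 2.131,
         20: 2.086, 25: 2.060, 30: 2.042, 40: 2.021}

# Standard normal value with upper-tail area .025 (the NORMAL row).
z_025 = NormalDist().inv_cdf(1 - 0.025)

# How far each t critical value sits above the normal one.
gaps = {df: t - z_025 for df, t in T_025.items()}

for df, gap in gaps.items():
    print(f"df={df:>2}  t={T_025[df]:.3f}  t - z = {gap:.3f}")
print(f"z = {z_025:.3f}")
```

The gap is already below 0.1 at df = 30, which is the usual motivation for the n > 30 rule of thumb.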

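Rejection Regions for hypothesis tests using t-distribution critical values

H0: $\mu = \mu_0$

For Pr(Type I error) = $\alpha$, df = n - 1

T.S.: $t = \dfrac{\bar{y} - \mu_0}{s/\sqrt{n}}$

HA:

1. $\mu > \mu_0$: Reject H0 if $t > t_{\alpha,n-1}$

2. $\mu < \mu_0$: Reject H0 if $t < -t_{\alpha,n-1}$

3. $\mu \neq \mu_0$: Reject H0 if $|t| > t_{\alpha/2,n-1}$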
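The three rejection regions can be written as one small decision function. This is only a sketch: `reject_h0` and its argument names are hypothetical, and the critical value is assumed to be looked up by the caller (here from the df = 10 row of the tail-probability table).

```python
def reject_h0(t_stat, t_crit, alternative):
    """Apply the rejection regions for a one-sample t test.

    t_crit is the tabled critical value: t_{alpha, n-1} for the one-sided
    alternatives and t_{alpha/2, n-1} for the two-sided one.
    """
    if alternative == "greater":      # HA: mu > mu0
        return t_stat > t_crit
    if alternative == "less":         # HA: mu < mu0
        return t_stat < -t_crit
    if alternative == "two-sided":    # HA: mu != mu0
        return abs(t_stat) > t_crit
    raise ValueError(f"unknown alternative: {alternative!r}")


# Illustrative numbers only: n = 11 (df = 10), alpha = .05, observed t = 2.0.
print(reject_h0(2.0, 1.812, "greater"))    # compares 2.0 with t_{.05,10}
print(reject_h0(2.0, 1.812, "less"))       # compares 2.0 with -t_{.05,10}
print(reject_h0(2.0, 2.228, "two-sided"))  # compares |2.0| with t_{.025,10}
```

The same observed t can be significant against a one-sided alternative and not against the two-sided one, because the two-sided test splits $\alpha$ across both tails.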

Degrees of Freedom

Why are the degrees of freedom only n - 1 and not n?

We start with n independent pieces of information with which we compute the sample mean:

$$\bar{y} = \frac{1}{n}\sum_{i=1}^{n} y_i$$

Now consider the sample variance:

$$s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (y_i - \bar{y})^2$$

Because the sum of the deviations $y_i - \bar{y}$ is equal to zero, if we know n-1 of these deviations, we can figure out the nth deviation. Hence there are only n-1 independent deviations available to estimate the variance (and standard deviation). That is, there are only n-1 pieces of information available to estimate the standard deviation after we "spend" one to estimate the mean. The t-distribution can be thought of as a normal distribution adjusted for an unknown standard deviation, so it is logical that it has to accommodate the fact that only n-1 pieces of information are available.
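The argument about deviations can be checked numerically. The sketch below uses a made-up sample of four values (not data from the slides) to show that the deviations sum to zero, that the last deviation is fixed by the other n-1, and that dividing by n-1 reproduces the sample variance from Python's `statistics` module.

```python
from statistics import mean, variance

# Hypothetical sample, chosen only for illustration.
y = [4.0, 7.0, 1.0, 8.0]
n = len(y)
y_bar = mean(y)

deviations = [yi - y_bar for yi in y]

# The deviations always sum to zero (up to floating-point rounding) ...
total = sum(deviations)

# ... so the last one is determined by the first n-1.
recovered_last = -sum(deviations[:-1])

# Sample variance: sum of squared deviations divided by n-1, not n.
s2 = sum(d ** 2 for d in deviations) / (n - 1)

print(total, recovered_last, deviations[-1])
print(s2, variance(y))  # statistics.variance also divides by n-1
```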

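Confidence Interval for $\mu$ when $\sigma$ unknown (samples are assumed to come from a normal population)

$$\bar{y} \pm t_{\alpha/2,\,n-1}\,\frac{s}{\sqrt{n}}$$

with df = n - 1 and confidence coefficient $(1 - \alpha)$. (Can use $z_{\alpha/2}$ if n > 30.)

Example: Compute 95% CI for $\mu$ given $\bar{y} = 40.1$, $s = 5.6$, $n = 9$.

Here df = n - 1 = 8, $\alpha = .05$, $\alpha/2 = .025$, $t_{.025,8} = 2.306$ and $z_{.025} = 1.960$.

$$\bar{y} \pm t_{.025,8}\,\frac{s}{\sqrt{n}} = 40.1 \pm 2.306\,\frac{5.6}{\sqrt{9}} = 40.1 \pm 4.304$$

Using $z_{.025}$ in place of $t_{.025,8}$ would give the narrower interval $40.1 \pm 3.659$.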
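The arithmetic of this example can be reproduced in a few lines. This is a sketch under stated assumptions: `mean_ci` is a hypothetical helper, and the t critical value 2.306 is typed in from the example rather than computed, since Python's standard library has no t quantile function.

```python
from math import sqrt
from statistics import NormalDist


def mean_ci(y_bar, s, n, crit):
    """Return (lower, upper, margin) for y_bar +/- crit * s / sqrt(n)."""
    margin = crit * s / sqrt(n)
    return y_bar - margin, y_bar + margin, margin


y_bar, s, n = 40.1, 5.6, 9

# t_{.025, 8} = 2.306 is taken from the example, not computed here.
t_lo, t_hi, t_margin = mean_ci(y_bar, s, n, crit=2.306)

# The normal critical value is available from the standard library.
z_crit = NormalDist().inv_cdf(0.975)
z_lo, z_hi, z_margin = mean_ci(y_bar, s, n, crit=z_crit)

print(f"t interval: {y_bar} +/- {t_margin:.2f} -> ({t_lo:.2f}, {t_hi:.2f})")
print(f"z interval: {y_bar} +/- {z_margin:.2f} -> ({z_lo:.2f}, {z_hi:.2f})")
```

With only 9 observations the z interval is noticeably too narrow, which is why the t critical value is used here.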

The Level of Significance of a Statistical Test (p-value)

• Suppose the result of a statistical test you carry out is to reject the Null.

• Someone reading your conclusions might ask: "How close were you to not rejecting?"

• Solution: Report a value that summarizes how compatible the data are with Ho, on a scale of 0 to 1. This is the p-value. The smaller the p-value, the stronger the evidence against Ho.

Formal Definition: The p-value of a test is the probability of observing a value of the test statistic that is as extreme or more extreme (toward Ha) than the actually observed value of the test statistic, under the assumption that Ho is true. (Equivalently, it is the smallest Type I error probability $\alpha$ at which the observed test statistic would lead to rejecting Ho.)

Rejection Rule: Having decided upon a Type I error probability $\alpha$, reject Ho if p-value < $\alpha$.
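For a t test the p-value is a tail area of the t density, so it can be approximated by numerical integration. The sketch below is one way to do that with only the standard library; the function names, the use of Simpson's rule and the step count are all choices made for illustration, and statistical software uses more refined methods.

```python
from math import exp, lgamma, log, pi


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_const = lgamma((df + 1) / 2) - lgamma(df / 2) - 0.5 * log(df * pi)
    return exp(log_const - (df + 1) / 2 * log(1 + x * x / df))


def t_upper_tail(t, df, steps=2000):
    """P(T > t) for t >= 0, using Simpson's rule on [0, t] (steps must be even)."""
    h = t / steps
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - total * h / 3


def p_value(t_stat, df, alternative):
    """p-value for HA 'less', 'greater' or 'two-sided'."""
    if alternative not in ("less", "greater", "two-sided"):
        raise ValueError(f"unknown alternative: {alternative!r}")
    tail = t_upper_tail(abs(t_stat), df)   # area beyond |t| in one tail
    if alternative == "two-sided":
        return 2 * tail
    toward_ha = (t_stat < 0) if alternative == "less" else (t_stat > 0)
    return tail if toward_ha else 1 - tail


# Sanity check against the table value t_{.025,8} = 2.306:
# the two-sided p-value should come out close to .05.
print(f"{p_value(2.306, 8, 'two-sided'):.4f}")

# Hypothetical observed statistic, tested against HA: mu < mu0 at alpha = .05.
alpha = 0.05
p = p_value(-2.5, 8, "less")
print(f"p = {p:.4f}, reject Ho: {p < alpha}")
```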

Equivalence between confidence intervals and hypothesis tests

Rejecting the two-sided null Ho: $\mu = \mu_0$ is equivalent to $\mu_0$ falling outside a $(1-\alpha)100\%$ C.I. for $\mu$.

Rejecting the one-sided null Ho: $\mu \geq \mu_0$ is equivalent to $\mu_0$ being greater than the upper endpoint of a $(1-2\alpha)100\%$ C.I. for $\mu$, or $\mu_0$ falling outside a one-sided $(1-\alpha)100\%$ C.I. for $\mu$ with $-\infty$ as lower bound.

Rejecting the one-sided null Ho: $\mu \leq \mu_0$ is equivalent to $\mu_0$ being smaller than the lower endpoint of a $(1-2\alpha)100\%$ C.I. for $\mu$, or $\mu_0$ falling outside a one-sided $(1-\alpha)100\%$ C.I. for $\mu$ with $+\infty$ as upper bound.
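The two-sided equivalence can be illustrated by running the test and the interval check side by side. The sketch below reuses the summary statistics from the 95% CI example ($\bar{y} = 40.1$, $s = 5.6$, $n = 9$, $t_{.025,8} = 2.306$); the function name and the candidate values of $\mu_0$ are hypothetical.

```python
from math import sqrt


def two_sided_test_and_ci(y_bar, s, n, mu0, t_crit):
    """Return (reject, mu0_outside_ci) for Ho: mu = mu0 vs HA: mu != mu0.

    t_crit is t_{alpha/2, n-1}, supplied by the caller from a table.
    """
    se = s / sqrt(n)
    t_stat = (y_bar - mu0) / se
    reject = abs(t_stat) > t_crit
    lower, upper = y_bar - t_crit * se, y_bar + t_crit * se
    outside = mu0 < lower or mu0 > upper
    return reject, outside


# The two answers agree for every candidate mu0.
for mu0 in (35.0, 38.0, 40.1, 44.0, 45.0):
    reject, outside = two_sided_test_and_ci(40.1, 5.6, 9, mu0, t_crit=2.306)
    print(f"mu0={mu0:>5}  reject Ho: {reject}  mu0 outside CI: {outside}")
```

The agreement is algebraic: $|t| > t_{\alpha/2,n-1}$ rearranges to $\mu_0$ lying more than $t_{\alpha/2,n-1}\, s/\sqrt{n}$ away from $\bar{y}$.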

Example: Practical Significance vs. Statistical Significance

Dr. Quick and Dr. Quack are both in the business of selling diets, and they have claims that appear contradictory. Dr. Quack studied 500 dieters and claims,

"A statistical analysis of my dieters shows a significant weight loss for my Quack diet. The Quick diet, by contrast, shows no significant weight loss by its dieters."

Dr. Quick followed the progress of 20 dieters and claims,

"A study shows that on average my dieters lose 3 times as much weight on the Quick diet as on the Quack diet."

So which claim is right? To decide which diets achieve a significant weight loss we should test:

Ho: $\mu \geq 0$ vs. Ha: $\mu < 0$

where $\mu$ is the mean weight change (after minus before) achieved by dieters on each of the two diets. (Note: since we don't know $\sigma$ we should do a t-test.)

MTB output for Quack diet analysis (Stat > Basic Stats > 1-Sample t)

    One-Sample T: Quack

    Test of mu = 0 vs mu < 0

    Variable    N     Mean   StDev  SE Mean
    Quack     500   -0.913   9.744    0.436

    Variable  95.0% Upper Bound      T      P
    Quack                -0.194  -2.09  0.018

    Difference  Size   Power
             1   500  0.6295

R output for Quick diet analysis (read the 20 values into the vector "quick")

    > t.test(quick,alternative=c("less"),mu=0,conf.level=0.95)
    One Sample t-test, data: quick
    t = -1.0915, df = 19, p-value = 0.1443
    alternative hypothesis: true mean is less than 0
    95 percent confidence interval: -Inf 1.594617
    sample estimate of mean of x: -2.73

    > power.t.test(n=20,delta=1,sd=11.185,type="one.sample",alternative="one.sided")
    n = 20, delta = 1, power = 0.104

Summary

1. Quick's average weight loss of 2.73 is about 3 times as much as the 0.91 weight loss reported by Quack.

2. However, Quack's small weight loss was significant, whereas Quick's larger weight loss was not! So Quack might not have a better diet, but he has more evidence: 500 cases compared to 20.

Remarks

1. Significance is about evidence, and having a large sample size can make up for having a small effect.

2. If you have a large enough sample size, even a small difference can be significant. If your sample size is small, even a large difference may not be significant.

3. Quick needs to collect more cases; if his larger average loss holds up in a bigger sample, he could then dominate the Quack diet (though it seems like even a 2.7 pound loss may not be enough of a practical difference to a dieter).

4. Both the Quick & Quack statements are somewhat empty. It's not enough to report an estimate without a measure of its variability. It's not enough to report a significance without an estimate of the difference. A confidence interval solves these problems.
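The role of sample size in these remarks can be seen directly in the test statistic, which grows with $\sqrt{n}$ when the mean and standard deviation stay fixed. The sketch below holds Quick's reported mean (-2.73) and standard deviation (11.185) constant while varying n. That is an idealization made for illustration, since a real larger sample would produce different estimates; `t_statistic` is a hypothetical helper.

```python
from math import sqrt


def t_statistic(y_bar, s, n, mu0=0.0):
    """One-sample t statistic (y_bar - mu0) / (s / sqrt(n))."""
    return (y_bar - mu0) / (s / sqrt(n))


# Quack as reported: small effect, large sample.
print(f"Quack  n=500  t={t_statistic(-0.913, 9.744, 500):.2f}")

# Quick's mean and sd held fixed (an assumption) while n grows.
for n in (20, 50, 100, 500):
    print(f"Quick  n={n:>3}  t={t_statistic(-2.73, 11.185, n):.2f}")
```

At n = 20 the statistic matches the R output; the larger values of n only show how the same estimated effect would look with more cases behind it.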

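A confidence interval shows both statistical and practical significance.

Quack two- and one-sided 95% CIs:

$$\bar{y} \pm z_{.025}\,\frac{s}{\sqrt{n}} = -0.913 \pm 1.96(0.436) = (-1.768,\ -0.058)$$

$$\left(-\infty,\ \bar{y} + z_{.05}\,\frac{s}{\sqrt{n}}\right) = (-\infty,\ -0.194)$$

One-sided CI says mean is sig. less than zero.

Quick two- and one-sided 95% CIs:

$$\bar{y} \pm t_{.025,19}\,\frac{s}{\sqrt{n}} = -2.73 \pm 2.093\,\frac{11.185}{\sqrt{20}} = (-7.965,\ 2.505)$$

$$\left(-\infty,\ \bar{y} + t_{.05,19}\,\frac{s}{\sqrt{n}}\right) = (-\infty,\ 1.595)$$

One-sided CI says mean is NOT sig. less than zero.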
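These four intervals can be recomputed from the summary statistics. The sketch below is hedged in two ways: the helper names are hypothetical, and the df = 19 critical values (2.093 and 1.729) are typed in from a standard t table rather than computed. With $z_{.05} \approx 1.645$ the Quack upper bound comes out near -0.196, slightly different from the -0.194 in the Minitab output, which presumably reflects a t critical value and unrounded inputs.

```python
from math import sqrt
from statistics import NormalDist


def two_sided_ci(y_bar, se, crit):
    """(lower, upper) for y_bar +/- crit * se."""
    return y_bar - crit * se, y_bar + crit * se


def upper_bound(y_bar, se, crit):
    """Upper endpoint of the one-sided interval (-inf, y_bar + crit * se)."""
    return y_bar + crit * se


z = NormalDist()

# Quack: SE Mean = 0.436 from the Minitab output; normal critical values.
quack_ci = two_sided_ci(-0.913, 0.436, z.inv_cdf(0.975))
quack_ub = upper_bound(-0.913, 0.436, z.inv_cdf(0.95))

# Quick: n = 20, s = 11.185; t critical values for df = 19 from a t table.
quick_se = 11.185 / sqrt(20)
quick_ci = two_sided_ci(-2.73, quick_se, 2.093)
quick_ub = upper_bound(-2.73, quick_se, 1.729)

for name, ci, ub in (("Quack", quack_ci, quack_ub), ("Quick", quick_ci, quick_ub)):
    verdict = "sig. less than zero" if ub < 0 else "NOT sig. less than zero"
    print(f"{name}: two-sided ({ci[0]:.3f}, {ci[1]:.3f}), "
          f"one-sided (-inf, {ub:.3f}) -> {verdict}")
```

Reading the intervals this way gives both the size of the effect and whether zero is excluded, which is the point of the slide.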
