CS342 Data Structures - William Paterson University

CS342 Data Structures

Complexity Analysis

Hu:

asymptotic:

Complexity Analysis: topics

• Introduction
• Simple examples
• The Big-O notation
• Properties of Big-O notation
• Ω and Θ notations
• More examples of complexity analysis
• Asymptotic complexity
• The best, average, and worst cases
• Amortized complexity

Definition of a good program

• Correctness: does it work?

• Ease of use: user friendly?

• Efficiency: does it run fast? Does it consume a lot of memory?

• Others

– easy to maintain

–…

Why it is important to study the complexity of algorithms

• It addresses the efficiency problem in both time and space. Complexity analysis allows one to estimate

– the time needed to execute an algorithm (time complexity)

– the amount of memory needed to execute the algorithm (space complexity).

Space complexity

• Instruction space: space needed to store compiled code.

• Data space: space needed to store all constants, variables, and dynamically allocated memory, plus the environment stack space (used to save information needed to resume execution of partially completed functions).

Although memory is inexpensive today, space can still be important for portable devices, where size and power consumption matter.

Time or computational complexity

• Simply measuring the total execution time of an algorithm is not an objective metric, because the execution time depends on many factors such as the processor architecture, the efficiency of the compiler, and the semiconductor technology used to make the processor.

• A more meaningful approach is to identify one or more key (primary) operations and determine the number of times these operations are performed. Key operations are those that take much more time to execute than other types of operations.

The time needed to execute an algorithm increases as the amount of data, n, increases. The big-O notation provides an asymptotic estimate (an upper bound) of the execution time when n becomes large.

Example on key instruction count: locating the minimum element in an array of n elements

• Assuming the comparison operation is the key operation (i.e., the assignment operation is not considered a key operation here), then: T(n) = n – 1
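The count T(n) = n – 1 can be checked with a short sketch. The names `MinResult` and `findMin` are hypothetical helpers introduced only for this illustration; the function scans the array once and counts element comparisons as the key operation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Result of a minimum search: the smallest value found and the number of
// element comparisons (the key operation) that were performed.
struct MinResult {
    int value;
    std::size_t comparisons;
};

// Hypothetical helper: scans the array once, counting comparisons.
MinResult findMin(const std::vector<int>& a) {
    assert(!a.empty());  // the minimum of an empty array is undefined
    MinResult r{a[0], 0};
    for (std::size_t i = 1; i < a.size(); ++i) {
        ++r.comparisons;  // one comparison for each remaining element
        if (a[i] < r.value) r.value = a[i];
    }
    return r;
}
```

For a 5-element array such as {7, 3, 9, 1, 4}, `findMin` reports the value 1 after 4 comparisons, i.e., n – 1.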

A more involved example: the selection sort of an n-element array a into ascending order

• Again, assuming that comparison is the key operation or instruction.

– The algorithm requires (n – 1) passes; each pass locates the minimum value in the unsorted sub-list and swaps it with a[i], where i = 0 for pass 1, i = 1 for pass 2, and so on. In pass 1, there are (n – 1) comparison operations. The number of comparisons decreases by 1 in each following pass, which leads to:

• T(n) = (n – 1) + (n – 2) + … + 3 + 2 + 1

= n(n – 1) / 2

= n^2 / 2 – n / 2
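A minimal sketch of the selection sort described above, instrumented to count comparisons. The function name `selectionSort` and its return value are assumptions made for this illustration, not code from the course.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Sorts a into ascending order and returns the number of element
// comparisons performed (the key operation).
std::size_t selectionSort(std::vector<int>& a) {
    std::size_t comparisons = 0;
    const std::size_t n = a.size();
    for (std::size_t i = 0; i + 1 < n; ++i) {       // n - 1 passes
        std::size_t minIndex = i;
        for (std::size_t j = i + 1; j < n; ++j) {   // n - 1 - i comparisons
            ++comparisons;
            if (a[j] < a[minIndex]) minIndex = j;
        }
        std::swap(a[i], a[minIndex]);               // place the minimum at a[i]
    }
    // The count depends only on n, never on the input order.
    assert(n == 0 || comparisons == n * (n - 1) / 2);
    return comparisons;
}
```

For n = 6 the function returns 15 = 6 · 5 / 2, whatever the initial order of the elements.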

The best, worst, and average case

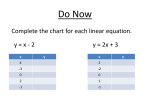

• Using linear search as an example: searching for an element in an n-element array.

– best case: T(n) = 1

– worst case: T(n) = n

– average case: T(n) ≈ n / 2

• The complexity analysis should take into account the best, worst, and average cases, although the worst case is the most important.
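The three cases can be observed with a small sketch. Both function names are hypothetical, and the average assumes distinct elements with every element equally likely to be the target; under that assumption the exact average is (n + 1) / 2, which is roughly n / 2 for large n.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the number of comparisons a linear search makes while looking
// for target (it stops at the first match, or after n comparisons).
std::size_t linearSearchComparisons(const std::vector<int>& a, int target) {
    std::size_t comparisons = 0;
    for (int x : a) {
        ++comparisons;
        if (x == target) break;
    }
    return comparisons;
}

// Average number of comparisons over one successful search per element.
// Assumes the elements are distinct and equally likely to be searched for.
double averageComparisons(const std::vector<int>& a) {
    assert(!a.empty());
    std::size_t total = 0;
    for (int x : a) total += linearSearchComparisons(a, x);
    return static_cast<double>(total) / static_cast<double>(a.size());
}
```

With the 8-element array {10, 20, …, 80}, searching for 10 takes 1 comparison (best case), searching for 80 or for a missing value takes 8 (worst case), and the average over all successful searches is 4.5.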

The big-O notation

• Big-O notation can be obtained from T(n) by taking the dominant term of T(n); the dominant term is the term that increases fastest as the value of n increases.

• Example

– T(n) = n – 1: n is the dominant term (why?), therefore T(n) is O(n), where O(n) reads "big-O of n".

– T(n) = n^2 / 2 – n / 2: n^2 / 2 is the dominant term (why?); after dropping the constant factor 1/2, we obtain O(n^2). We say the selection sort has a running time of O(n^2).

Orders of magnitude of Big-O functions

• In reality, a small set of big-O functions defines the running

time of most algorithms.

– Constant time: O(1), e.g., access the first element of an array, append an element to the end of a list, etc.

– Linear: O(n), linear search

– Quadratic: O(n^2), selection sort

– Cubic: O(n^3), matrix multiplication; slow

– Logarithmic: O(log2 n), binary search; very fast

– O(n log2 n), quick-sort (on average)

– Exponential: O(2^n); very slow
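To get a feel for how far apart these classes are, the sketch below evaluates each function at a given n. The struct `Growth` and the function `growthAt` are names invented for this illustration.

```cpp
#include <cassert>
#include <cmath>

// Values of the common complexity functions at one input size n.
struct Growth {
    double logarithmic;   // log2 n
    double linear;        // n
    double linearithmic;  // n log2 n
    double quadratic;     // n^2
    double cubic;         // n^3
    double exponential;   // 2^n
};

// Hypothetical helper: evaluates every class at n (n >= 1).
Growth growthAt(double n) {
    assert(n >= 1.0);
    const double lg = std::log2(n);
    return {lg, n, n * lg, n * n, n * n * n, std::pow(2.0, n)};
}
```

At n = 16 the values are 4, 16, 64, 256, 4096, and 65536, so even for a tiny input the exponential function is already far ahead of the others.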

Contributions from individual terms of f(n) = n^2 + 100n + log10 n + 1000 and how they vary with n
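The contributions can be recomputed directly from f(n). The sketch below is only an illustration (the names `TermShares` and `termShares` are assumptions); it returns each term's share of f(n) as a percentage.

```cpp
#include <cassert>
#include <cmath>

// Percentage of f(n) = n^2 + 100n + log10 n + 1000 contributed by each term.
struct TermShares {
    double quadratic;    // share of n^2
    double linear;       // share of 100n
    double logarithmic;  // share of log10 n
    double constant;     // share of 1000
};

// Hypothetical helper: computes the four shares for a given n >= 1.
TermShares termShares(double n) {
    assert(n >= 1.0);
    const double t1 = n * n;
    const double t2 = 100.0 * n;
    const double t3 = std::log10(n);
    const double t4 = 1000.0;
    const double f = t1 + t2 + t3 + t4;
    return {100.0 * t1 / f, 100.0 * t2 / f, 100.0 * t3 / f, 100.0 * t4 / f};
}
```

At n = 1 the constant 1000 accounts for over 90% of f(n) and n^2 for less than 0.1%; at n = 100000 the n^2 term accounts for about 99.9%, which is why it is the dominant term.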

Formal definition of Big-O notation

• Definition: f(n) is O(g(n)) if there exist two positive numbers c and N such that f(n) ≤ c·g(n) for all n ≥ N. The definition reads "f(n) is big-O of g", where g is an upper bound on the value of f(n), meaning f grows at most as fast as g as n increases.

Big-O notation: an example

• Let f(n) = 2n^2 + 3n + 1

• Based on the definition, we can say f(n) = O(n^2), where g(n) = n^2, if and only if we can find values of c and N such that 2n^2 + 3n + 1 ≤ cn^2 for all n ≥ N. Since n is positive, we can divide by n^2 and rewrite the inequality as 2 + 3 / n + 1 / n^2 ≤ c. There is an infinite set of solutions to this inequality. For example, it is satisfied if c ≥ 6 and N = 1, or c ≥ 3¾ and N = 2, etc.; i.e., the pair c = 6 and N = 1 is a solution, and so is c = 3¾ and N = 2.

• To find the best pair (c, N), we determine the smallest value of n for which the dominant term (i.e., 2n^2 in f(n)) becomes larger than all other terms. Setting 2n^2 > 3n and 2n^2 > 1, it is clear that both inequalities hold when n = 2. From 2 + 3 / n + 1 / n^2 ≤ c with n = 2, we obtain c ≥ 3¾.
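Candidate (c, N) pairs for f(n) = 2n^2 + 3n + 1 can be sanity-checked numerically. The sketch below is an assumption-laden illustration: `boundHolds` is a hypothetical helper, and it only tests a finite range of n, so it can refute a pair but cannot prove one.

```cpp
#include <cassert>

// f(n) = 2n^2 + 3n + 1, the function from the example.
long long f(long long n) { return 2 * n * n + 3 * n + 1; }

// Checks f(n) <= c * n^2 for every n in [N, limit].
// A finite check only: passing it is evidence, not a proof.
bool boundHolds(double c, long long N, long long limit) {
    assert(N >= 1 && limit >= N);
    for (long long n = N; n <= limit; ++n) {
        const double bound = c * static_cast<double>(n) * static_cast<double>(n);
        if (static_cast<double>(f(n)) > bound) return false;
    }
    return true;
}
```

The pairs (c = 6, N = 1) and (c = 3.75, N = 2) pass the check, while (c = 3.75, N = 1) fails at n = 1 because f(1) = 6 > 3.75, and (c = 3, N = 2) fails at n = 2 because f(2) = 15 > 12.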

Solutions to the inequality 2n^2 + 3n + 1 ≤ cn^2

Comparison of functions for different

values of c and n

Classes of algorithms and their execution

time (assuming processor speed =1 MIPS)

Typical function g(n) applied in big-O

estimates

Properties of big-O notation

• Transitivity: if f(n) is O(g(n)) and g(n) is O(h(n)), then f(n) is O(h(n)).

• If f(n) is O(h(n)) and g(n) is O(h(n)), then f(n) + g(n) is O(h(n)).

• The function a·n^k is O(n^k).

• The function n^k is O(n^(k + j)) for any positive j.

• If f(n) = c·g(n), then f(n) is O(g(n)).

• The function log_a n is O(log_b n) for any positive numbers a and b ≠ 1.

The proofs are rather straightforward and appear on

p57 of textbook.
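As an illustration of why the logarithm property holds, here is a short derivation based on the change-of-base identity. It is a sketch under the extra assumption that a > 1 and b > 1, so that the constant is positive; it is not copied from the textbook.

```latex
\log_a n \;=\; \frac{\log_b n}{\log_b a} \;=\; c \cdot \log_b n,
\qquad c = \frac{1}{\log_b a} > 0 \quad (a, b > 1).
% Hence \log_a n \le c \cdot \log_b n for all n \ge 1,
% which is the big-O definition with this c and N = 1.
```

Because logarithms of different bases differ only by a constant factor, the base is usually omitted and one simply writes O(log n).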

The Ω notation

• Definition: the function f(n) is Ω(g(n)) if there exist positive numbers c and N such that f(n) ≥ c·g(n) for all n ≥ N. It reads "f is big-Omega of g" and implies c·g(n) is a lower bound of f(n).

• The above definition implies: f(n) is Ω(g(n)) if and only if g(n) is O(f(n)).

• Similar to the big-O situation, there can be an infinite number of solutions for the pair c and N. For practical purposes, only the closest Ωs are of interest, which represent the largest lower bounds.

The Θ (theta) notation

• Definition: f(n) is Θ(g(n)) if there exist positive numbers c1, c2, and N such that c1·g(n) ≤ f(n) ≤ c2·g(n) for all n ≥ N. It reads "f has an order of magnitude of g", or "f is on the order of g", or "both f and g grow at the same rate in the long run" (i.e., when n continues to increase).
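A worked instance of the definition, using the function f(n) = 2n^2 + 3n + 1 from the earlier big-O example. The constants c1 = 2, c2 = 6, and N = 1 are one possible choice picked for this illustration; many others work.

```latex
% Lower bound: the extra terms 3n + 1 are positive.
2n^2 \;\le\; 2n^2 + 3n + 1 \qquad \text{for all } n \ge 1
% Upper bound: for n \ge 1 we have 3n \le 3n^2 and 1 \le n^2.
2n^2 + 3n + 1 \;\le\; 2n^2 + 3n^2 + n^2 \;=\; 6n^2 \qquad \text{for all } n \ge 1
% Together: 2 n^2 \le f(n) \le 6 n^2 for n \ge 1, so f(n) is \Theta(n^2).
```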

Some observations

• Comparing two algorithms, one O(n) and one O(n^2): which one is faster?

• But what about two algorithms that perform 10^8 n and 10 n^2 operations? The first is O(n) and the second is O(n^2), yet for smaller values of n (n < 10^7) the second is faster. So, sometimes it is desirable to consider constants which are very large.
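The effect of a large constant can be checked with a few lines of code. This sketch assumes the two operation counts are 10^8 n for the O(n) algorithm and 10 n^2 for the O(n^2) algorithm; the function names are hypothetical.

```cpp
#include <cassert>

// Operation counts for the two hypothetical algorithms.
double linearCost(double n) { return 1e8 * n; }          // O(n), large constant
double quadraticCost(double n) { return 10.0 * n * n; }  // O(n^2), small constant

// True when the O(n^2) algorithm performs fewer operations for this n.
bool quadraticIsCheaper(double n) {
    assert(n > 0.0);
    return quadraticCost(n) < linearCost(n);
}
```

The two counts are equal at n = 10^7, so the quadratic algorithm is cheaper for every n below that point and the linear one wins above it.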

Additional examples

• for (i = sum = 0; i < n; i++)
      sum += a[i];
  // There are (2 + 2n) assignments (key ops); O(n)

• for (i = 0; i < n; i++) {
      for (j = 1, sum = a[0]; j <= i; j++)
          sum += a[j];
      cout << "Sum for sub-array 0 thru " << i << " is " << sum << endl;
  }
  // There are 1 + 3n + 2(1 + 2 + … + n – 1) = 1 + 3n + n(n – 1)
  // = O(n) + O(n^2) = O(n^2) assignment operations before the program is completed.
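The assignment counts quoted in the comments can be reproduced by instrumenting the two loops. The sketch below is an illustration only: the function names are assumptions, and the output statement of the second loop is omitted because it does not affect the count.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Counts the assignments made by the single summation loop.
std::size_t singleLoopAssignments(const std::vector<int>& a) {
    const std::size_t n = a.size();
    std::size_t count = 2;  // i = 0 and sum = 0
    long long sum = 0;
    for (std::size_t i = 0; i < n; ++i) {
        sum += a[i];
        count += 2;         // sum += a[i], then i++
    }
    (void)sum;              // the sum itself is not needed here
    return count;           // 2 + 2n
}

// Counts the assignments made by the nested sub-array summation loop.
std::size_t nestedLoopAssignments(const std::vector<int>& a) {
    assert(!a.empty());     // each outer iteration reads a[0]
    const std::size_t n = a.size();
    std::size_t count = 1;  // i = 0
    for (std::size_t i = 0; i < n; ++i) {
        long long sum = a[0];
        count += 2;         // j = 1 and sum = a[0]
        for (std::size_t j = 1; j <= i; ++j) {
            sum += a[j];
            count += 2;     // sum += a[j], then j++
        }
        (void)sum;
        ++count;            // i++
    }
    return count;           // 1 + 3n + n(n - 1)
}
```

For n = 5 the first function returns 12 = 2 + 2 · 5 and the second returns 36 = 1 + 3 · 5 + 5 · 4.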

Rigorous analyses of best,

average, and worst cases

• Best case: smallest number of steps.

• Worst case: maximum number of steps.

• Average case: the analysis may sometimes

be quite involved. There are a couple of

examples on pages 65 and 66.

The amortized complexity

• Takes into consideration the interdependence between operations and their results. Will be discussed in more detail in conjunction with data structures.

as·ymp·tote (ăsʹĭm-tōt´, -ĭmp-) noun

Mathematics.

A line considered a limit to a curve in the

sense that the perpendicular distance from a

moving point on the curve to the line

approaches zero as the point moves an

infinite distance from the origin.

am·or·tize (ămʹər-tīz´, ə-môrʹ-) verb, transitive

am·or·tized, am·or·tiz·ing, am·or·tiz·es

1. To liquidate (a debt, such as a mortgage) by installment payments or payment into a sinking fund.

2. To write off an expenditure for (office equipment, for example) by prorating over a certain period.

Computational complexity

• The complexity of an algorithm is measured in terms of space and time.

– Space: main memory (RAM) needed to run the program (the implementation of the algorithm); less important today because of advances in VLSI technology.

– Time: the total execution time of an algorithm is not a meaningful metric because it depends on the hardware (architecture or instruction set), on the language in which the algorithm is coded, and on the efficiency of the compiler (optimizer). A more meaningful metric is the number of operations as a function of the size n, which can be the number of elements in an array or a data file.

The asymptotic behavior of math

functions

Examples of functions with asymptotes: tan(x), e^(–x), etc.

The asymptotic complexity: an example

• A simple loop that sums n elements in array a:

for (int i = sum = 0; i < n; i++)

    sum += a[i];
