* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Document

Georgian grammar wikipedia , lookup

Word-sense disambiguation wikipedia , lookup

Kannada grammar wikipedia , lookup

Navajo grammar wikipedia , lookup

Preposition and postposition wikipedia , lookup

Morphology (linguistics) wikipedia , lookup

Lithuanian grammar wikipedia , lookup

Ukrainian grammar wikipedia , lookup

Lexical semantics wikipedia , lookup

Chinese grammar wikipedia , lookup

Macedonian grammar wikipedia , lookup

Old Irish grammar wikipedia , lookup

Ojibwe grammar wikipedia , lookup

Arabic grammar wikipedia , lookup

Old Norse morphology wikipedia , lookup

Modern Hebrew grammar wikipedia , lookup

Japanese grammar wikipedia , lookup

Compound (linguistics) wikipedia , lookup

Zulu grammar wikipedia , lookup

Portuguese grammar wikipedia , lookup

Swedish grammar wikipedia , lookup

Vietnamese grammar wikipedia , lookup

Romanian nouns wikipedia , lookup

Modern Greek grammar wikipedia , lookup

Russian grammar wikipedia , lookup

Spanish grammar wikipedia , lookup

Latin syntax wikipedia , lookup

Icelandic grammar wikipedia , lookup

Old English grammar wikipedia , lookup

Ancient Greek grammar wikipedia , lookup

Italian grammar wikipedia , lookup

Sotho parts of speech wikipedia , lookup

Esperanto grammar wikipedia , lookup

Yiddish grammar wikipedia , lookup

French grammar wikipedia , lookup

Malay grammar wikipedia , lookup

Scottish Gaelic grammar wikipedia , lookup

Polish grammar wikipedia , lookup

Serbo-Croatian grammar wikipedia , lookup

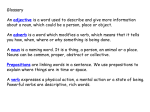

8. Word Classes and Part-of-Speech Tagging
May 26, 2007, Artificial Intelligence Lab, 이경택
Text: Speech and Language Processing, pp. 287-303

Origin of POS
- Techne: a grammatical sketch of Greek written by Dionysius Thrax of Alexandria (c. 100 B.C.), or possibly by someone else
- Eight parts of speech: noun, verb, pronoun, preposition, adverb, conjunction, participle, article
- The basis for practically all subsequent part-of-speech descriptions of Greek, Latin, and most European languages for the next 2000 years

Recent Lists of POS
- Recent POS lists are much larger than before
  - Penn Treebank (Marcus et al., 1993): 45
  - Brown corpus (Francis, 1979; Francis and Kučera, 1982): 87
  - C7 tagset (Garside et al., 1997): 146

Synonyms of POS
- word classes, morphological classes, lexical tags

POS can be used in
- Recognizing or producing the pronunciation of words
  - CONtent (noun), conTENT (adjective)
  - OBject (noun), obJECT (verb)
  - ……
- Information retrieval
  - Stemming
  - Selecting out nouns or other important words
- ASR language models, such as class-based N-grams
- Partial parsing

8.1 (Mostly) English Word Classes
- Closed class types: have relatively fixed membership
  - Ex. Prepositions: new prepositions are rarely coined
  - Generally function words (ex. of, it, and, or, ……)
    - Very short
    - Occur frequently
    - Play an important role in grammar
- Open class types: have membership that is continually updated
  - Ex. Nouns and verbs: new words are continually coined or borrowed from other languages
  - Four major open classes (but not all human languages have all of these)
    - Nouns
    - Verbs
    - Adjectives
    - Adverbs

Open Classes: Noun, Verb, Adjective, Adverb

Definition of Noun
- A semantic definition is not sufficient
  - "The name given to the lexical class in which the words for most people, places, or things occur"
  - But what about bandwidth? relationship? pacing? These are nouns too
- Functional (morphological and syntactic) definition of noun
  - Things like its ability to occur with determiners (a goat, its bandwidth, Plato’s Republic), to take possessives (IBM’s annual revenue), and, for most but not all nouns, to occur in the plural form (goats, abaci)
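As a small illustration of the pronunciation use of POS listed above: once a tagger has chosen a tag, a text-to-speech front end can pick the stress pattern by looking up the (word, tag) pair. The sketch below assumes a hypothetical hand-built lexicon (`STRESS_LEXICON`) using Penn Treebank tags (NN noun, JJ adjective, VB verb) and capital letters for the stressed syllable; it is not taken from any real pronunciation dictionary.

```python
# Hypothetical stress lexicon keyed by (word, POS tag).
# Capitals mark the stressed syllable; entries follow the slide's examples.
# Tags are Penn Treebank: NN = noun, JJ = adjective, VB = verb.
STRESS_LEXICON: dict[tuple[str, str], str] = {
    ("content", "NN"): "CONtent",
    ("content", "JJ"): "conTENT",
    ("object", "NN"): "OBject",
    ("object", "VB"): "obJECT",
}


def pronounce(word: str, tag: str) -> str:
    """Return the stress pattern for a word given its POS tag.

    Falls back to the lowercased word when the (word, tag) pair is not listed.
    """
    return STRESS_LEXICON.get((word.lower(), tag), word.lower())


if __name__ == "__main__":
    tagged = [("The", "DT"), ("content", "NN"), ("is", "VBZ"), ("new", "JJ")]
    print([pronounce(w, t) for w, t in tagged])
    print(pronounce("object", "VB"))
```

Keying on the pair rather than on the word alone is the point: `content` has two entries, and only the tag selects between them.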
Grouping Nouns
- By uniqueness
  - Proper nouns: Regina, Colorado, IBM, ……
  - Common nouns: book, stair, apple, ……
- By countability
  - Count nouns
    - Can occur in both the singular and plural: goat(s), relationship(s), ……
    - Can be counted: (one, two, ……) goat(s)
  - Mass nouns
    - Cannot be counted: two snows (x), two communisms (x)
    - Can appear without articles where singular count nouns cannot: Snow is white (o), Goat is white (x)

Verbs
- Verbs have a number of morphological forms
  - Non-3rd-person-sg: eat
  - 3rd-person-sg: eats
  - Progressive: eating
  - Past participle: eaten
- Auxiliaries: a subclass of English verbs

Adjectives
- Terms that describe properties or qualities
  - Concepts of color, age, value, ……
- There are languages without adjectives (ex. Chinese)

Adverbs
- Directional adverbs, locative adverbs: specify the direction or location of some action
  - Ex. home, here, downhill
- Degree adverbs: specify the extent of some action, process, or property
  - Ex. extremely, very, somewhat
- Manner adverbs: describe the manner of some action or process
  - Ex. slowly, delicately
- Temporal adverbs: describe the time that some action or event took place
  - Ex. yesterday, Monday
- Some adverbs (ex. Monday) are tagged as nouns in some tagging schemes

Closed Classes
- Prepositions: on, under, over, near, by, at, from, to, with
- Determiners: a, an, the
- Pronouns: she, who, I, others
- Conjunctions: and, but, or, as, if, when
- Auxiliary verbs: can, may, should, are
- Particles: up, down, on, off, in, out, at, by
- Numerals: one, two, three, first, second, third

Prepositions
- Occur before noun phrases
- Often indicate spatial or temporal relations
  - Literal (ex. on it, before then, by the house)
  - Metaphorical (ex. on time, with gusto, beside herself)
- Often indicate other relations as well
  - Ex. Hamlet was written by Shakespeare; and, from Shakespeare, "And I did laugh sans intermission an hour by his dial"
Figure 8.1 Prepositions (and particles) of English from the CELEX on-line dictionary.
Frequency counts are from the COBUILD 16 million word corpus.

Particles
- Often combine with a verb to form a larger unit called a phrasal verb
  - come in: adjective
  - come with: preposition
  - come on: particle
Figure 8.2 English single-word particles from Quirk et al. (1985).

Determiners (articles)
- a, an: mark a noun phrase as indefinite
- the: marks it as definite
- this?, that?
- COBUILD statistics out of 16 million words
  - the: 1,071,676
  - a: 413,887
  - an: 59,359

Conjunctions
- Used to join two phrases, clauses, or sentences
- Coordinating conjunctions: equal status
  - and, or, but
- Subordinating conjunctions: embedded status
  - that (ex. I thought that you might like some milk)
  - Complementizers: subordinating conjunctions (like that) which link a verb to its argument in this way (more in Chapters 9 and 11)
Figure 8.3 Coordinating and subordinating conjunctions of English from the CELEX on-line dictionary. Frequency counts are from the COBUILD 16 million word corpus.

Pronouns
- A kind of shorthand for referring to some noun phrase, entity, or event
- Personal pronouns: you, she, I, it, me, ……
- Possessive pronouns: my, your, his, her, its, one’s, our, their, ……
- Wh-pronouns: what, who, whom, whoever
Figure 8.4 Pronouns of English from the CELEX on-line dictionary. Frequency counts are from the COBUILD 16 million word corpus.

Auxiliary Verbs
- Words that mark certain semantic features of a main verb (ex. tense, aspect, polarity, mood)
- be: copula verb
- do
- have: perfect tenses
- Modal verbs
  - can: ability, possibility
  - may: permission, possibility
  - ……
Figure 8.5 English modal verbs from the CELEX on-line dictionary. Frequency counts are from the COBUILD 16 million word corpus.

Other Closed Classes
- Interjections: oh, ah, hey, man, alas, ……
- Negatives: no, not, ……
- Politeness markers: please, thank you, ……
- Greetings: hello, goodbye, ……
- Existential there: There are two on the table

8.2 Tagsets for English
- There are various tagsets for English.
- Brown corpus (Francis, 1979; Francis and Kučera, 1982): 87 tags
- Penn Treebank (Marcus et al., 1993): 45 tags
- British National Corpus (Garside et al., 1997): 61 tags (C5 tagset)
- C7 tagset: 146 tags
- Which tagset to use for a particular application depends on how much information the application needs
Figure 8.6 Penn Treebank part-of-speech tags (including punctuation)

8.3 Part-of-Speech Tagging
- Definition: the process of assigning a POS or other lexical class marker to each word in a corpus
- Input: a string of words and a tagset (ex. Book that flight, Penn Treebank tagset)
- Output: a single best tag for each word (ex. Book/VB that/DT flight/NN ./.)
- Problem: resolving ambiguity → disambiguation
  - Ex. book (Hand me that book / Book that flight)
Figure 8.7 The number of word types in the Brown corpus by degree of ambiguity (after DeRose (1988))

Taggers
- Rule-based taggers
  - Generally involve a large database of hand-written disambiguation rules
  - Ex. ENGTWOL (based on the Constraint Grammar architecture of Karlsson et al. (1995))
- Stochastic taggers
  - Generally resolve tagging ambiguities by using a training corpus to compute the probability of a given word having a given tag in a given context
  - Ex. HMM tagger (= Maximum Likelihood tagger = Markov model tagger, based on the Hidden Markov Model)
- Transformation-based tagger, Brill tagger (after Brill (1995))
  - Shares features of both rule-based and stochastic taggers
  - The rules are automatically induced from a previously tagged training corpus
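The word/TAG output format and the notion of ambiguity above can be made concrete with a few lines of counting. The sketch below assumes a tiny hypothetical three-sentence corpus (`CORPUS`); it tabulates how many word types occur with one tag versus two (the kind of count shown in Figure 8.7) and computes the relative-frequency estimate of P(tag | word). That estimate is only the lexical part of a stochastic tagger; an HMM tagger additionally conditions on the surrounding tags, which this sketch does not do.

```python
from collections import Counter

# Tiny hypothetical corpus in the word/TAG output format shown above
# (Penn Treebank tags); a real estimate would use a large tagged corpus.
CORPUS = [
    "Book/VB that/DT flight/NN ./.",
    "Hand/VB me/PRP that/DT book/NN ./.",
    "I/PRP read/VBD the/DT book/NN ./.",
]


def parse(line: str) -> list[tuple[str, str]]:
    """Split 'word/TAG' tokens; rsplit keeps any '/' inside the word itself."""
    pairs = []
    for token in line.split():
        word, tag = token.rsplit("/", 1)
        pairs.append((word, tag))
    return pairs


# Count how often each lowercased word type occurs with each tag.
tag_counts: dict[str, Counter] = {}
for line in CORPUS:
    for word, tag in parse(line):
        tag_counts.setdefault(word.lower(), Counter())[tag] += 1

# Degree of ambiguity = number of distinct tags seen for a word type,
# the quantity Figure 8.7 tabulates for the Brown corpus (toy data here).
ambiguity = Counter(len(tags) for tags in tag_counts.values())


def p_tag_given_word(tag: str, word: str) -> float:
    """Relative-frequency (maximum-likelihood) estimate of P(tag | word).

    It ignores context: an HMM tagger also conditions on neighboring tags.
    """
    counts = tag_counts.get(word.lower(), Counter())
    total = sum(counts.values())
    return counts[tag] / total if total else 0.0


if __name__ == "__main__":
    print(sorted(ambiguity.items()))
    print(p_tag_given_word("NN", "book"), p_tag_given_word("VB", "book"))
```

On this toy data, `book` is the only ambiguous type (seen once as VB and twice as NN), so `p_tag_given_word("NN", "book")` is 2/3.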
8.4 Rule-Based Part-of-Speech Tagging
- The earliest algorithms
- Based on a two-stage architecture
  - First stage: use a dictionary to assign each word a list of potential POS
  - Second stage: winnow down the lists using hand-written disambiguation rules

ENGTWOL (Voutilainen, 1995) lexicon
- Based on two-level morphology
- Uses 56,000 entries for English word stems (Heikkilä, 1995)
- Counts a word with multiple POS as separate entries
Figure 8.8 Sample lexical entries from the ENGTWOL lexicon described in Voutilainen (1995) and Heikkilä (1995)

ENGTWOL – Process 1
- First stage: each word is run through the two-level lexicon transducer, and all possible POS are returned
- Ex.
    Pavlov        PAVLOV N NOM SG PROPER
    had           HAVE V PAST VFIN SVO
                  HAVE PCP2 SVO
    shown         SHOW PCP2 SVOO SVO SV
    that          ADV
                  PRON DEM SG
                  DET CENTRAL DEM SG
                  CS
    salivation    N NOM SG

ENGTWOL – Process 2
- Second stage
  - Eliminate tags that are inconsistent with the context, using a set of about 1,100 constraints applied in a negative way (each constraint rules tags out rather than selecting them)
  - Ex. Adverbial-that rule
      Given input: "that"
      if
        (+1 A/ADV/QUANT);  /* if next word is adj, adverb, or quantifier */
        (+2 SENT-LIM);     /* and following which is a sentence boundary, */
        (NOT -1 SVOC/A);   /* and the previous word is not a verb like */
                           /* 'consider' which allows adjs as object complements */
      then eliminate non-ADV tags
      else eliminate ADV tag
- Also uses
  - Probabilistic constraints
  - Other syntactic information
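The two-stage architecture can be sketched in a few dozen lines. Stage one below looks words up in a toy lexicon whose first five entries copy the ENGTWOL readings shown above; the other entries (`it`, `isn't`, `odd`, `I`, `consider`, `.`) and their feature strings are hypothetical, added only so the adverbial-that rule has something to fire on. Stage two implements that single rule, reading `(+1 A/ADV/QUANT)` as "some reading of the next word carries A, ADV, or QUANT" and treating the end of input as a sentence limit; both are simplifying assumptions, not ENGTWOL's actual matching semantics.

```python
# Stage-1 lexicon: each word maps to all of its possible readings.
# The first five entries copy the ENGTWOL example; the rest are hypothetical.
LEXICON: dict[str, list[str]] = {
    "pavlov": ["PAVLOV N NOM SG PROPER"],
    "had": ["HAVE V PAST VFIN SVO", "HAVE PCP2 SVO"],
    "shown": ["SHOW PCP2 SVOO SVO SV"],
    "that": ["ADV", "PRON DEM SG", "DET CENTRAL DEM SG", "CS"],
    "salivation": ["N NOM SG"],
    "it": ["IT PRON NOM SG"],                 # hypothetical
    "isn't": ["BE V PRES NEG"],               # hypothetical
    "odd": ["ODD A ABS"],                     # hypothetical; A = adjective
    "i": ["I PRON NOM SG"],                   # hypothetical
    "consider": ["CONSIDER V PRES SVOC/A"],   # hypothetical; takes adj object complement
    ".": ["SENT-LIM"],                        # hypothetical sentence-limit marker
}


def stage1(words: list[str]) -> list[list[str]]:
    """First stage: return every possible reading for each word."""
    return [list(LEXICON.get(w.lower(), ["UNKNOWN"])) for w in words]


def has_feature(readings: list[str], features: set[str]) -> bool:
    """True if any reading contains at least one of the given feature tokens."""
    return any(features & set(r.split()) for r in readings)


def adverbial_that_rule(words: list[str], cohorts: list[list[str]]) -> list[list[str]]:
    """Second stage, one constraint: a simplified adverbial-that rule."""
    for i, word in enumerate(words):
        if word.lower() != "that":
            continue
        nxt = cohorts[i + 1] if i + 1 < len(words) else []
        # Assumption: running off the end of the input counts as a sentence limit.
        after = cohorts[i + 2] if i + 2 < len(words) else ["SENT-LIM"]
        prev = cohorts[i - 1] if i > 0 else []
        if (has_feature(nxt, {"A", "ADV", "QUANT"})
                and has_feature(after, {"SENT-LIM"})
                and not has_feature(prev, {"SVOC/A"})):
            # then: eliminate non-ADV readings
            cohorts[i] = [r for r in cohorts[i] if "ADV" in r.split()]
        else:
            # else: eliminate the ADV reading
            cohorts[i] = [r for r in cohorts[i] if "ADV" not in r.split()]
    return cohorts


if __name__ == "__main__":
    for sentence in ["it isn't that odd .", "I consider that odd .",
                     "Pavlov had shown that salivation ."]:
        words = sentence.split()
        cohorts = adverbial_that_rule(words, stage1(words))
        print(sentence, "->", cohorts[words.index("that")])
```

For "it isn't that odd ." the rule keeps only ADV for that, while for "I consider that odd ." the previous word carries SVOC/A, so the ADV reading is removed instead. The remaining constraints would be further functions of the same shape, each deleting readings rather than choosing one.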