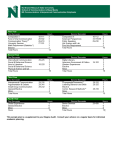

Week 11: Improving Database Performance
Semester 1 2005

So far, we have looked at many aspects of designing, creating, populating and querying a database. We have (briefly) explored ‘optimisation’, which is used to ensure that query execution time is minimised.

In this lecture we are going to look at some techniques which are used to improve performance and availability.

WHY ? Because databases are required to be available, in many installations and applications, 24 hours a day, 7 days a week, 52 weeks every year - think of the ‘user’ demands in e-business.

There are many ‘solutions’, including
– parallel processors
– faster processors
– higher speed communications
– more memory
– faster disks
– more disk units on line
– higher capacity disks
– any others ?

We are going to look at a technique called ‘clustering’ - an architecture for improving ‘power’ and availability.

What are the ‘dangers’ to non-stop availability ? Try these :
– System outages (planned)
  » maintenance, tuning
– System outages (unplanned)
  » hardware failure, bugs, virus attacks

E-business is not the only focus. Businesses are tending to be ‘global organisations’ - remember one of the early lectures ?

So what is one of the ‘solutions’ ? In a single word - clustering.

Clustering is based on the premise that multiple processors can provide better, faster and more reliable processing than a single computer.

However, as in most ‘simple’ solutions in Information Technology, the problem is in the details.
– How can clustering be achieved ?
– Which technologies and architectures offer the best approach to clustering ?
– and, what is the measure, or metric, of ‘best’ ?

What are some of the advantages of clustering ?
– Improved availability of services
– Scalability

Clustering involves multiple, independent computing systems which work together as one. When one of the independent systems fails, the cluster software can distribute work from the failing system to the remaining systems in the cluster.

‘Users’ normally would not notice the difference.
– They interact with a cluster as if it were a single server and, importantly, the resources they require will still be available

Clustering can provide high levels of availability.

What about ‘scalability’ ? Loads will (sometimes) exceed the capabilities of the systems which make up the cluster. Additional facilities can be incrementally added to increase the cluster’s computing power and ensure processing requirements are met. As transaction and processing loads become established, the cluster (or parts of it) can be increased in size or number.

Clustering is NOT a ‘new’ concept. A company named DEC introduced clusters for VMS systems in the early 1980s - about 20 years ago.

Which firms offer clustering packages now ? IBM, Microsoft and Sun Microsystems.

What are the different types of clustering ?
There are 2 architectures:
– Shared nothing, and
– Shared disk

In a shared nothing architecture, each system has its own private memory and one or more disks. Each server in the cluster has its own independent subset of the data, which it can work on independently without meeting resource contention from other servers.

This might explain better:

[Figure: A Shared Nothing Architecture - CPU 1 ... CPU n, each with its own memory (Memory 1 ... Memory n), joined by an interconnection network]

As you saw on the previous overhead, in a shared nothing environment each system has its own ‘private memory’ and one or more disks, and each server in the cluster has its own independent subset of the data, which it can work on without meeting resource conflicts from other servers.

The clustered processors communicate by passing messages through a network which interconnects the computers.

Client requests are automatically directed to the system which owns the particular resource. Only one of the clustered systems can ‘own’ and access a particular resource at a time.
When a failure occurs, resource ownership can be dynamically transferred to another system in the cluster.

Theoretically, a shared nothing multiprocessor could scale up to thousands of processors - the processors don’t interfere with one another because no resources are shared.

[Figure: A Shared All Environment - CPU 1 ... CPU n, each with its own memory (Memory 1 ... Memory n), joined by an interconnecting network]

In a ‘shared all’ environment, you will have noticed that all of the connected systems share the same disk devices. Each processor has its own private memory, but all the processors can directly access all the disks. In addition, each server has access to all the data.

In this arrangement, ‘shared all’ clustering doesn’t scale as effectively as shared-nothing clustering for small machines. All the nodes have access to the same data, so a controlling facility must be used to direct processing and make sure that all nodes have a consistent view of the data as it changes.

Attempts by more than one node to update the same data at the same time need to be prohibited. This can cause performance and scalability problems (similar to the concurrency aspect).

Shared-all architectures are well suited to the large scale processing found in mainframe environments. Mainframes are large processors capable of high work loads. The number of clustered PCs and midrange processors, even with the newer, faster processors, which would equal the computing power of a few clustered mainframes would be high - about 250 nodes.
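The shared nothing ownership rule (one owner per resource, requests routed to the owner, ownership transferred on failure) can be sketched in a few lines of Python. This is only a toy model under stated assumptions: the class name `SharedNothingCluster`, the node and resource names, and the round-robin hand-over policy are all invented for illustration and do not come from any real cluster product.

```python
class SharedNothingCluster:
    """Toy model: every resource is owned by exactly one live node."""

    def __init__(self, nodes):
        self.live = list(nodes)   # nodes that are currently up
        self.owner = {}           # resource name -> owning node

    def assign(self, resource, node):
        if node not in self.live:
            raise ValueError(f"{node} is not a live node")
        self.owner[resource] = node   # a resource has one owner at a time

    def route(self, resource):
        """Direct a client request to the node which owns the resource."""
        return self.owner[resource]

    def fail(self, node):
        """Mark a node as failed and hand its resources to the survivors."""
        self.live.remove(node)
        if not self.live:
            raise RuntimeError("no surviving nodes in the cluster")
        orphans = sorted(r for r, n in self.owner.items() if n == node)
        for i, resource in enumerate(orphans):
            # Round-robin is an arbitrary policy chosen for this sketch.
            self.owner[resource] = self.live[i % len(self.live)]


# Hypothetical three-node cluster with three data partitions.
cluster = SharedNothingCluster(["node1", "node2", "node3"])
cluster.assign("customers", "node1")
cluster.assign("orders", "node2")
cluster.assign("invoices", "node2")

before = cluster.route("orders")
cluster.fail("node2")             # node2 goes down
after = cluster.route("orders")
print(before, "->", after)
```

The client only ever calls `route`, so it does not need to know that ownership moved, which is the sense in which ‘users’ would not notice the failure.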
This chart might help :

Shared Disk                                Shared Nothing
Quick adaptability to changing workloads   High possibility of simpler, cheaper hardware
High availability                          Almost unlimited scalability
Data need not be partitioned               Data may need to be partitioned across the cluster

There is another technique - InfiniBand architecture - which can reduce bottlenecks at the Input/Output level, and which has the further appeal of reducing the cabling, connector and administrative overheads of the database infrastructure.

It is an ‘intelligent’ agent - meaning software. Its main attraction is that it can change the way information is exchanged in applications. It removes unnecessary overheads from a system.

Peripheral Component Interconnect (PCI) remains a bus-based system - this allows the transfer of data between 2 (yes, 2!) of the members at a time.

Many PCI buses cause bottlenecks in the bridge to the memory subsystems. Newer versions of PCI allowed only a minor improvement - only 2 64-bit 66 MHz adapters on the bus.

A bus allows only a small number of devices to be interconnected, is limited in its electrical paths, and cannot adapt to meet high availability demands.

A newer device, called a fabric, can scale to thousands of devices with parallel communications between each node.

A group of vendors (including Intel, Microsoft, IBM, Compaq and Sun) came together in 1999, merging earlier efforts such as Future I/O, to form the InfiniBand Trade Association. Their objective was to develop and ensure one standard for communication interconnects and system I/O.
One of their early findings was that replacing a bus architecture with a fabric was not the full story - or solution.

Their early solution needed to be synchronised with software changes. If not, a very high speed network could be developed, but actual application demands would not be met.

InfiniBand comprises
1. Host Channel Adapters (HCA)
2. Target Channel Adapters (TCA)
3. Switches
4. Routers
1 and 2 define end nodes in the fabric; 3 and 4 are interconnecting devices.

– The HCA (host channel adapter) manages a connection and interfaces with a fabric
– The TCA (target channel adapter) delivers required data, such as a disk interface which replaces the existing SCSI interface
– An InfiniBand switch links HCAs and TCAs into a network
– The router allows the interface to other networks AND the translation of legacy components and networks. It can be used for MAN and WAN interfaces

InfiniBand link speeds are identified in multiples of the base 1x (0.5 GB per second full duplex - 0.25 GB per second in each direction). Other defined sizes are 4x (2 GB per second full duplex) and 12x (6 GB per second full duplex).

Just for size :
– A fast SCSI adapter could accommodate a throughput rate of 160 MB per second
– A single InfiniBand 4x adapter can deliver between 300 and 500 MB per second

[Figure: an InfiniBand fabric - end nodes, routers and a switch]

So far we have looked at improving database performance by
1. The use of ‘shared-all’ or ‘shared-nothing’ architectures
2. Implementing an InfiniBand communications interface and network facility

Now we are going to look at another option. It’s known as the ‘Federated Database’ environment.

So, what is a ‘Federated Database’ ? Try this: it is a collection of data stored on multiple autonomous computing systems connected to a network. The user (or users) is presented with what appears to be one integrated database.

A federated database presents ‘views’ to users which look exactly the same as views of data from a centralised database. This is very similar to the use of the Internet, where many sites have multiple sources - but the user doesn’t see them.

In a federated database approach, each data resource is defined (as you have done) by means of table schemas, and the user is able to access and manipulate data. The ‘queries’ actually access data from a number of databases at a number of locations.

One of the interesting aspects of a federated database is that the individual databases may consist of any DBMS (IBM, Oracle, SQL Server, possibly MS Access), run on any operating system (Unix, VMS, MS-XP) and on different hardware (Hewlett-Packard servers, Unisys, IBM, Sun Microsystems ...).

However, there are some reservations : acceptable performance requires the inclusion of a smart optimiser using the cost-based technique, which has intelligence about both the distribution (perhaps a global data dictionary) and also the different hardware and DBMS at each accessed site.
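To make the ‘one integrated database’ idea concrete, here is a minimal sketch in Python. It assumes two invented sites, each simulated by its own in-memory SQLite database, and a hypothetical helper `federated_query` which sends the same query to every site and merges the answers. A real federation would consult a global data dictionary and a cost-based optimiser to decide which sites to visit; this sketch simply visits them all.

```python
import sqlite3


def make_site(rows):
    """Create one autonomous 'site' (simulated by an in-memory database)."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE customer (id INTEGER, name TEXT, city TEXT)")
    db.executemany("INSERT INTO customer VALUES (?, ?, ?)", rows)
    return db


# Hypothetical sites; in practice these could be different DBMSs on
# different operating systems and hardware.
sites = {
    "melbourne": make_site([(1, "Ang", "Melbourne"), (2, "Brown", "Geelong")]),
    "sydney": make_site([(3, "Chen", "Sydney")]),
}


def federated_query(sql, params=()):
    """Run the query at every site and present a single merged result."""
    merged = []
    for name in sorted(sites):
        merged.extend(sites[name].execute(sql, params).fetchall())
    return sorted(merged)


result = federated_query("SELECT id, name FROM customer WHERE id >= ?", (2,))
print(result)
```

The caller sees one result set and never learns which site supplied which row, which is the behaviour the federated ‘views’ are meant to give.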
Another attractive aspect of the federated arrangement is that additional database servers can be added to the federation at any time - and servers can also be removed.

As a general comment, any multisource database can be implemented in either a centralised or a federated architecture. In the next few overheads, there are some comments on this.

The centralised approach has some disadvantages, the major one being that the investment is large, and the return on investment may take many months, or years. The process includes these steps:
1. Concept development and data model for collecting data needed to support business decisions and processes
2. Identification of useful data sources (accurate, timely, comprehensive, available ...)
3. Obtain a database server platform to support the database (and probably lead to data warehousing)
4. Capture data, or extract data, from the source(s)
5. Clean, format, and transform data to the quality and formats required
6. Design an integrated database to hold this data
7. Load the database (and review quality)
8. Develop systems to ensure that content is current (probably transaction systems)
From this point, the database becomes ‘usable’.

So, what is different with the Federated Database approach ?
1. Firstly, the economics are different - the investment in the large, high speed processor is not necessary
2. Data is not centralised - it remains with and on the systems used to maintain it
3. The database server can be a mid-range server, or several servers
4. It is most unlikely that a query would regularly need access to all of the individual databases - but with the centralised approach all of the data needs to be ‘central’
5. Local databases support local queries - that’s probably why the local databases were introduced

The Internet offers the capability of large federations of content servers. Distributed application architectures built around Web servers and many co-operating databases are (slowly) becoming common both
– within and
– between enterprises (companies).

Users are normally unaware of the interfacing and supporting software necessary for federated databases to be accessible.

Finally, there is another aspect which is used to improve the availability and performance of a database. This occurs at the ‘configuration stage’, which is when the database and its requirements are being ‘created’ - quite different from the ‘create table’ which you have used. It is the responsibility of System Administration and Database Management (and of course Senior / Executive Management).

Physical Layouts

The physical layout very much influences
– How much data a database can hold
– The number of concurrent database users
– How many concurrent processes can execute
– Recovery capability
– Performance (response time)
– Nature of Database Administration
– Cost
– Expansion

Oracle Architecture

Oracle8i and 9i are object-relational database management systems.
They contain the capabilities of relational and object-oriented database systems. They utilise database servers for many types of business applications, including
– On Line Transaction Processing (OLTP)
– Decision Support Systems
– Data Warehousing

In perspective, Oracle is NOT a ‘high end’ application DBMS. A high end system has one or more of these characteristics:
– Management of a very large database (VLDB) - probably hundreds of gigabytes or terabytes
– Provides access to many concurrent users - in the thousands, or tens of thousands
– Gives a guarantee of constant database availability for mission critical applications - 24 hours a day, 7 days a week

High end application environments are not normally controlled by Relational Database Management Systems. High end database environments are controlled by mainframe computers and non-relational DBMSs. Current RDBMSs cannot manage very large amounts of data, or perform well under demanding transaction loads.

There are some guidelines for designing a database with files distributed so that optimum performance, from a specific configuration, can be achieved. The primary aspect which needs to be clearly understood is the nature of the database
– Is it transaction oriented ?
– Is it read-intensive ?
The key items which need to be understood are
– Identifying Input/Output contention among datafiles
– Identifying Input/Output bottlenecks among all database files
– Identifying concurrent Input/Output among background processes
– Defining the security and performance goals for the database
– Defining the system hardware and mirroring architecture
– Identifying disks which can be dedicated to the database

Let’s look at tablespaces. These ones will be present in some combination :

Tablespace   Contents
System       Data dictionary
Data         Standard-operation tables
Data_2       Static tables used during standard operation
Indexes      Indexes for the standard-operation tables
Indexes_2    Indexes for the static tables
RBS          Standard-operation rollback segments
RBS_2        Special rollback segments used for data loads
Temp         Standard-operation temporary segments
Temp_user    Temporary segments created by a temporary user
Tools        RDBMS tools tables
Tools_1      Indexes for the RDBMS tools tables
Users        User objects in development tables
Agg_data     Aggregation data and materialised views
Partitions   Partitions of a table or index segments; create multiple tablespaces for them
Temp_Work    Temporary tables used during data load processing

A materialised view stores replicated data based on an underlying query; the data is replicated from within the current database. A Snapshot stores data from a remote database. The system optimiser may choose to use a materialised view instead of a query against a larger table if the materialised view will return the same data and thus improve response time.
A materialised view does, however, incur an overhead of additional space usage and maintenance.

Each of the tablespaces will require a separate datafile. Monitoring of I/O performance among datafiles is done after the database has been created, so at the planning stage the DBA must estimate the I/O load for each datafile (based on what information ?).

The physical layout planning is commenced by estimating the relative I/O among the datafiles, with the most active tablespace given a weight of 100. Estimate the I/O from the other datafiles relative to the most active datafile.

Assign a weight of 35 to the System tablespace files, and give the index tablespaces a value of 1/3 of their data tablespaces. RBS may go as high as 70 (depending on the database activity) - between 30 and 50 is ‘normal’. In production, Temp will be used by large sorts. Tools will be used rarely in production - as will the Tools_1 tablespace.

So, what do we have ? Something like this :

Tablespace   Weight   % of Total
Data         100      45
Rbs          40       18
System       35       16
Indexes      33       15
Temp         5        2
Data_2       4        2
Indexes_2    2        1
Tools        1        1
Total        220

94% of the Input/Output is associated with the top four tablespaces (208 of the 220 weight units). This indicates that, in order to properly distribute the datafile activity, 5 disks would be needed, AND that NO other database files should be put on the disks which are accommodating the top 4 tablespaces.

There are some rules which apply :
1. Data tablespaces should be stored separately from their Index tablespaces
2. RBS tablespaces should be stored separately from their Index tablespaces
3. The System tablespace should be stored separately from the other tablespaces in the database

In my example, there is only 1 Data tablespace. In production databases there will probably be many Data tablespaces (which will happen if Partitions are used). If/when this occurs, weightings will need to be estimated for each of the Data tablespaces (but for my efforts, 1 Data tablespace will be used).

As you have probably guessed, there are other files which need to be considered - many used by the many and various ‘processes’ of Oracle.

One of these considerations is the online redo log files (you remember these and their purpose ?). They store the records of each transaction. Each database must have at least 2 online redo log files available to it - the database will write to one log in sequential mode until the redo log file is filled, then it will start writing to the second redo log file.

Redo log files (cont’d)

The online redo log files maintain data about current transactions, and they cannot be recovered from a backup unless the database is/was shut down prior to backup - this is a requirement of the ‘Offline Backup’ procedure (if we have time we will look at this).

Online redo log files need to be ‘mirrored’. A method of doing this is to employ redo log groups - which dynamically maintain multiple sets of the online redo logs. The operating system is also a good ally for mirroring files.

Redo log files should be placed away from datafiles because of the performance implications, and this means knowing how the 2 types of files are used.
– Every transaction (unless it is tagged with the nologging parameter) is recorded in the redo log files
– The entries are written by the LogWriter (LGWR) process
– The data in the transaction is concurrently written to a number of tablespaces (the RBS rollback segments and the Data tablespace come to mind) via the DataBase Writer (DBWR), and this raises possible contention issues if a datafile is located on the same disk as a redo log file

Redo log files are written sequentially. Datafiles are written in ‘random’ order - it is a good move to have these 2 different demands separated.

If a datafile must be stored on the same disk as a redo log file, then it should not belong to the System tablespace, the RBS tablespace, or a very active Data or Index tablespace.

So what about Control Files ? There is much less traffic here, and they can be internally mirrored (config.ora or init.ora file). The database will maintain the control files as identical copies of each other. There should be 3 copies, across 3 disks.

The LGWR background process writes to the online redo files in a cyclical manner. When the 1st redo file is full, it directs writing to the 2nd file .... When the ‘last’ file is full, LGWR starts overwriting the contents of the 1st file .. and so on.

When ARCHIVELOG mode is used, the contents of the ‘about to be overwritten’ file are written to a redo file on a disk device.

There will be contention on the online redo logs, as LGWR will be attempting to write to one redo log file while the Archiver (ARCH) will be trying to read another. The solution is to distribute the redo log files across multiple disks.

The archived redo log files are high I/O and therefore should NOT be on the same device as the System, Rbs, Data, or Indexes tablespaces. Neither should they be stored on the same device as any of the online redo log files.
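The cyclical use of the online redo log files can be traced with a small simulation. This is only a sketch of the description given in this lecture, not of Oracle’s internals: the function name `simulate_redo_cycle` and its parameters are invented, and the archive step is modelled as happening just before a file is overwritten (a real database normally archives a filled log soon after the log switch).

```python
def simulate_redo_cycle(num_logs, fills, archivelog=True):
    """List the events as LGWR fills `fills` online redo log files in turn."""
    if num_logs < 2:
        raise ValueError("a database needs at least 2 online redo log files")
    filled = [False] * num_logs   # does this file already hold redo entries?
    events = []
    for n in range(fills):
        current = n % num_logs    # cyclical choice of the next file
        if filled[current] and archivelog:
            # ARCH reads the old contents before LGWR overwrites them.
            events.append(("ARCH", current + 1))
        events.append(("LGWR", current + 1))
        filled[current] = True
    return events


# Hypothetical run: 3 online redo log files, 5 files' worth of redo written.
events = simulate_redo_cycle(num_logs=3, fills=5)
for process, log_file in events:
    print(process, "uses redo log", log_file)
```

In the trace, every ARCH event sits next to an LGWR event on the same set of files, which is why placing all the online redo logs on one disk invites contention in ARCHIVELOG mode. Passing `archivelog=False` removes the ARCH events altogether.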
The database will stall if there is not enough disk space for the archived redo log files, so the archived files should be directed to a disk which contains small and preferably static files.

Concurrent I/O

A commendable goal, and one which needs careful planning to achieve. Placing two random access files which are never accessed at the same time on the same disk will quite happily avoid contention for I/O capability.

What we have just covered is known as
1. Concurrent I/O - when concurrent processes are being performed against the same device (disk). This is overcome by isolating data tables from their Indexes, for instance
2. Interference - when sequential writing is interfered with by reads or writes to other files on the same disk

At the risk of labouring this a bit, the 3 background processes to watch are
1. DBWR, which writes in a random manner
2. LGWR, which writes sequentially
3. ARCH, which reads and writes sequentially

LGWR and ARCH write to 1 file at a time, but DBWR may be attempting to write to multiple files at once (can you think of an example ?). Multiple DBWR processes for each instance, or multiple I/O slaves for each DBWR, is a solution.

What are the disk layout goals ? Are they (1) recoverability or (2) performance ?

Recoverability must address all processes which impact disks (the storage area for archived redo logs and for Export dump files - which so far we haven’t mentioned - come to mind). Performance calls for attention to file I/O performance and the relative speeds of the disk drives.

What are some recoverability issues ?
All critical database files should be placed on mirrored drives, and the database run in ARCHIVELOG mode. The online redo files must also be mirrored (by the operating system or by mirrored redo log groups).

Recoverability issues involve a few disks, and this is where we start to look at hardware specification.

Mirroring architecture leads to specifying
– the number of disks required
– the models of disks (capacity and speed)
– the strategy
– If the hardware system is heterogeneous, the faster drives should be dedicated to Oracle database files
– RAID systems should be carefully analysed as to their capability and the optimum benefit sought - RAID-1, RAID-3 and RAID-5 have different processes relating to parity

The disks chosen for mirroring architecture must be dedicated to the database. This guarantees that non-database load on these disks will not interfere with database processes.

Goals for disk layout :
– The database must be recoverable
– The online redo log files must be mirrored via the system or the database
– The database file I/O weights must be estimated
– Contention between DBWR, LGWR and ARCH must be minimised
– Contention between disks for DBWR must be minimised
– The performance goals must be defined
– The disk hardware options must be known
– The disk mirroring architecture must be known
– Disks must be dedicated to the database

So where does that leave us ?
We’re going to look at ‘solutions’ from Optimal to Practical, and we’ll assume that :
– the disks are dedicated to the database
– the online redo log files are being mirrored by the Operating System
– the disks are of identical size
– the disks have identical performance characteristics
(obviously the best case scenario !)

So, with that optimistic outlook let’s proceed.

Case 1 - The Optimum Physical Layout

Disk   Contents
1      Oracle software
2      SYSTEM tablespace
3      RBS tablespace
4      DATA tablespace
5      INDEXES tablespace
6      TEMP tablespace
7      TOOLS tablespace
8      Online redo log 1
9      Online redo log 2
10     Online redo log 3
11     Control file 1
12     Control file 2
13     Control file 3
14     Application software
15     RBS_2
16     DATA_2
17     INDEXES_2
18     TEMP_USER
19     TOOLS_1
20     USERS
21     Archived redo log destination disk
22     Export dump file destination disk

Hardware Configurations

– The 22 disk solution is an optimal solution
– It may not be feasible for a number of reasons, including hardware costs
– In the following overheads there will be efforts to reduce the number of disks, commensurate with preserving performance

This leads to a 17 disk configuration :

Disk   Contents
1      Oracle software
2      SYSTEM tablespace
3      RBS tablespace
4      DATA tablespace
5      INDEXES tablespace
6      TEMP tablespace
7      TOOLS tablespace
8      Online redo log 1, Control file 1
9      Online redo log 2, Control file 2
10     Online redo log 3, Control file 3
11     Application software
12     RBS_2
13     DATA_2
14     INDEXES_2
15     TEMP_USER
16     Archived redo log destination disk
17     Export dump destination disk

The Control Files are candidates for placement onto the three redo log disks. The altered arrangement reflects this.
The Control files will interfere with the online redo log files, but only at log switch points and during recovery.

The TOOLS_1 tablespace will be merged with the TOOLS tablespace.

In a production environment, users will not have resource privileges, and the USERS tablespace can be ignored. However, what will be the case if users require development and test access ? Create another database ? (test ?)

The RBS_2 tablespace holds the special rollback segments used during data loading. Data loads should not occur during production usage, and so if the 17 disk option is not practical, we can look at combining RBS and RBS_2 - there should be no contention.

TEMP and TEMP_USER can be placed on the same disk. The TEMP tablespace weighting (5 in the previous table) can vary, but it should be possible to store these 2 tablespaces on the same disk. TEMP_USER is dedicated to a specific user (such as Oracle Financials, which has temporary segment requirements greater than those of the system’s other users).

The revised solution is now (15 disks) :

Disk   Contents
1      Oracle software
2      SYSTEM tablespace
3      RBS, RBS_2 tablespaces
4      DATA tablespace
5      INDEXES tablespace
6      TEMP, TEMP_USER tablespaces
7      TOOLS tablespace
8      Online redo log 1, Control file 1
9      Online redo log 2, Control file 2
10     Online redo log 3, Control file 3
11     Application software
12     DATA_2
13     INDEXES_2
14     Archived redo log destination disk
15     Export dump file destination disk

What if there aren’t 15 disks ? -->> Move to attempt 3

Here the online Redo Logs will be placed onto the same disk.
Where there are ARCHIVELOG backups, this will cause concurrent I/O and contention between LGWR and ARCH on that disk.
What we can deduce from this is that the combination about to be proposed is NOT appropriate for a high transaction system or for systems running in ARCHIVELOG mode (why is this so - Prof. Julius Sumner Miller?)

The ‘new’ solution:

Disk 1: Oracle software
Disk 2: SYSTEM tablespace, Control file 1
Disk 3: RBS, RBS_2 tablespaces, Control file 2
Disk 4: DATA tablespace, Control file 3
Disk 5: INDEXES tablespace
Disk 6: TEMP, TEMP_USER tablespaces
Disk 7: TOOLS, INDEXES_2 tablespaces
Disk 8: Online redo logs 1, 2 and 3
Disk 9: Application software
Disk 10: DATA_2
Disk 11: Archived redo log destination disk
Disk 12: Export dump file destination disk
(12 disks)

Notice that the Control Files have been moved to Disks 2, 3 and 4. The Control Files are not I/O demanding, and can safely coexist with SYSTEM, RBS and DATA.
What we have done so far is to ‘move’ the contents of the high numbered disks onto the ‘low’ numbered disks - the low numbered disks are the most critical in the database.
The next attempt to ‘rationalise’ the disk arrangement is to look carefully at the high numbered disks.

– DATA_2 can be combined with the TEMP tablespaces (this disk has 4% of the I/O load).
– This should be safe, as the static tables (which ones are those?) are not as likely to have group operations performed on them as the ones in the DATA tablespace.
– The Export dump files have been moved to the online redo log disk (the redo log files are about 100 MB and don’t increase in size - is that correct?). Exporting causes minor transaction activity.
– The other change is the combination of the application software with the archived redo log file destination area.
This leaves ARCH space to write log files, and avoids conflicts with DBWR.

Disk 1: Oracle software
Disk 2: SYSTEM tablespace, Control file 1
Disk 3: RBS tablespace, RBS_2 tablespace, Control file 2
Disk 4: DATA tablespace, Control file 3
Disk 5: INDEXES tablespace
Disk 6: TEMP, TEMP_USER, DATA_2 tablespaces
Disk 7: TOOLS, INDEXES_2 tablespaces
Disk 8: Online redo logs 1, 2 and 3, Export dump file
Disk 9: Application software, Archived redo log destination disk
(9 disks)

Can the number of required disks be further reduced?
Remember that the performance characteristics will deteriorate.
It’s now important to look closely at the weights set during the I/O estimation process.

Estimated weightings for the previous (9 disk) solution are:

Disk 1 (no weight listed): Oracle software
Disk 2 (weight 35): SYSTEM tablespace, Control file 1
Disk 3 (weight 40): RBS, RBS_2 tablespaces, Control file 2
Disk 4 (weight 100): DATA tablespace, Control file 3
Disk 5 (weight 33): INDEXES tablespace
Disk 6 (weight 9): TEMP, TEMP_USER, DATA_2 tablespaces
Disk 7 (weight 3): TOOLS, INDEXES_2 tablespaces
Disk 8 (weight 40+): Online redo logs 1, 2 and 3, Export dump file destination disk
Disk 9 (weight 40+): Application software, Archived redo log destination disk

A further compromise distribution could be:

Disk 1 (no weight listed): Oracle software
Disk 2 (weight 38): SYSTEM, TOOLS, INDEXES_2 tablespaces, Control file 1
Disk 3 (weight 40): RBS, RBS_2 tablespaces, Control file 2
Disk 4 (weight 100): DATA tablespace, Control file 3
Disk 5 (weight 42): INDEXES, TEMP, TEMP_USER, DATA_2 tablespaces
Disk 6 (weight 40+): Online redo logs 1, 2 and 3, Export dump file destination disk
Disk 7 (weight 40+): Application software, Archived redo log destination disk

A few thoughts for a small database system - 3 disks

1. Suitable for an OLTP application.
This assumes that the transactions are small in size, large in number and variety, and randomly scattered among the available tables. The application should be as index intensive as possible, and full table scans must be kept to the minimum possible.

2. Isolate the SYSTEM tablespace. This stores the data dictionary, which is accessed many times for every query.
In a ‘typical case’, query execution requires checking:
– the column names in the CODES_TABLE table
– the user’s privilege to access the CODES_TABLE table
– the user’s privilege to access the Code column of the CODES_TABLE table
– the user’s role definition(s)
– the indexes defined on the CODES_TABLE table
– the columns of the indexes defined on the CODES_TABLE table

3. Isolate the INDEXES tablespace. This probably accounts for 35 to 40% of the I/O.

4. Separate the rollback segments and DATA tablespaces.
There is a point to watch here - with 3 disks there are 4 tablespaces: SYSTEM, INDEXES, DATA and RBS. The placement of RBS is determined by the volume of transactions. If high, RBS and DATA should be kept apart.
If low, RBS and DATA should work together without causing contention.

The 3 disk layout would be one of these:

Disk 1: SYSTEM tablespace, control file, redo log
Disk 2: INDEXES tablespace, control file, redo log, RBS tablespace
Disk 3: DATA tablespace, control file, redo log

OR

Disk 1: SYSTEM tablespace, control file, redo log
Disk 2: INDEXES tablespace, control file, redo log
Disk 3: DATA tablespace, control file, redo log, RBS tablespace

Summary: tablespaces by database type

Small development database: SYSTEM, DATA, INDEXES, RBS, TEMP, USERS, TOOLS
Production OLTP database: SYSTEM, DATA, DATA_2, INDEXES, INDEXES_2, RBS, RBS_2, TEMP, TEMP_USER, TOOLS
Production OLTP with historical data: SYSTEM, DATA, DATA_2, DATA_ARCHIVE, INDEXES, INDEXES_2, INDEXES_ARCHIVE, RBS, RBS_2, TEMP, TEMP_USER, TOOLS
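
The weightings quoted for the 9 disk and 7 disk layouts can be checked with a little arithmetic. The Python sketch below takes the 9 disk weights from the lecture and adds them up according to which disks are merged in the 7 disk compromise. The names nine_disk_weights, merge_plan and merge_disks are illustrative only and are not part of any Oracle tool. Two assumptions are made: Disk 1 (Oracle software), which has no weight listed, is treated as 0, and the "40+" entries are treated as 40, i.e. as a lower bound.

```python
# Estimated I/O weights for the 9 disk layout, keyed by disk number.
# Assumption: Disk 1 has no weight in the lecture table, so 0 is used.
# Assumption: the "40+" entries for disks 8 and 9 are taken as 40 (a lower bound).
nine_disk_weights = {1: 0, 2: 35, 3: 40, 4: 100, 5: 33, 6: 9, 7: 3, 8: 40, 9: 40}

# For each disk in the 7 disk compromise, the 9 disk layout disks it absorbs.
merge_plan = {
    1: [1],      # Oracle software
    2: [2, 7],   # SYSTEM + TOOLS, INDEXES_2
    3: [3],      # RBS, RBS_2
    4: [4],      # DATA
    5: [5, 6],   # INDEXES + TEMP, TEMP_USER, DATA_2
    6: [8],      # online redo logs, export dump files
    7: [9],      # application software, archived redo logs
}


def merge_disks(weights, plan):
    """Return the summed I/O weight for each disk in the merged layout."""
    return {new: sum(weights[old] for old in olds) for new, olds in plan.items()}


seven_disk_weights = merge_disks(nine_disk_weights, merge_plan)

for disk, weight in seven_disk_weights.items():
    print(f"Disk {disk}: weight {weight}")
```

Under these assumptions Disk 2 comes out at 38 and Disk 5 at 42, which agrees with the 7 disk table. The DATA disk stays at 100, by far the busiest, which is consistent with the lecture leaving it alone apart from a control file.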