* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Normality distribution testing for levelling data obtained

Pattern recognition wikipedia , lookup

Theoretical computer science wikipedia , lookup

Corecursion wikipedia , lookup

Error detection and correction wikipedia , lookup

Data analysis wikipedia , lookup

K-nearest neighbors algorithm wikipedia , lookup

Probability box wikipedia , lookup

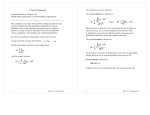

Geonauka Vol. 3, No. 1 (2015)

UDC: 519.246
DOI: 10.14438/gn.2015.08
Typology: 1.04 Professional Article
Article info: Received 2015-03-01, Accepted 2014-04-06, Published 2015-04-10

Normality distribution testing for levelling data obtained by geodetic control measurements

Milan TRIFKOVIĆ¹*
¹ University of Novi Sad, Faculty of Civil Engineering, Subotica, Serbia
* Milan Trifković, [email protected]

Abstract. Normally distributed data are of crucial importance for data processing and hypothesis testing in geodesy. Models for the adjustment of geodetic measurements assume that the data are normally distributed. However, geodetic data are obtained under external conditions and under constraints such as deadlines or other processes on the construction site, so the measurement results can be affected by various influences. Deviations from the normal distribution can therefore appear in geodetic data, propagate through the mathematical and stochastic models, and invalidate conclusions based on those data. To avoid these risks it is important to test the normality of geodetic data obtained from production measurements. In this paper one set of levelling data obtained from production measurements is examined with respect to its normal distribution.

Keywords: Shapiro-Wilk test, Pearson Chi-square test, skewness, kurtosis

1 Introduction

The basic assumption in geodetic data processing, especially in adjustment by the least squares method, is that the data are normally distributed. Models of data distribution are well researched and their importance for the least squares method is explained in the literature [1]. Methods for testing the hypothesis that geodetic data are normally distributed are also given in the literature [2]. The most common normality tests for geodetic data are the Shapiro-Wilk test, Pearson's Chi-square test and the Kolmogorov-Smirnov test, but it is suggested that any conclusion about the distribution be checked against other characteristics of normally distributed data, such as skewness and kurtosis [2]. Some authors [3] have found, on the basis of simulated results, that the Shapiro-Wilk test is the most powerful normality test, followed by the Anderson-Darling test, the Lilliefors test and the Kolmogorov-Smirnov test, and that the power of all four tests is still low for small sample sizes.

In this paper frequency histograms (graphical methods), skewness and kurtosis (numerical methods) and the Shapiro-Wilk and Pearson's Chi-square tests (formal methods) are used for normality testing, as suggested in the literature [2]. The case study analyses a set of n = 1324 differences of heights obtained by levelling for the deformation monitoring of one object. The levelling was performed with a digital level and bar-coded levelling rods.

2 Background

Testing the normality of geodetic data is of considerable importance because normality is the basis for the correct use of the least squares method in the adjustment of measured data. If geodetic data are not normally distributed, errors may arise in hypothesis testing and lead to wrong decisions about accepting or rejecting hypotheses. To avoid this, it is recommended that the normality of the data be tested before adjustment. Before the normality tests are started, the data should be grouped and tabulated. For large samples the optimal number of intervals is determined as [2]

$$k \le 5 \log n \qquad (1)$$

where $k$ is the optimal number of intervals and $n$ is the number of data.

According to [3] there are nearly 40 tests of normality available in the statistical literature, but the most powerful of the tests studied is the Shapiro-Wilk test. Shapiro and Wilk introduced the test in 1965 [4]. The Shapiro-Wilk statistic is defined as

$$W = \frac{\left(\sum_{i=1}^{n} a_i x_{(i)}\right)^2}{\sum_{i=1}^{n} (x_i - \bar{x})^2} \qquad (2)$$

where:
- $x_{(i)}$: ith order statistic,
- $\bar{x}$: sample mean and
- $a_i$: coefficients from [4].

The null hypothesis is accepted if the statistic fulfils the condition $W > W_{n;\alpha}$, where $n$ is the number of observations and $\alpha$ is the significance level. In that case "there is no evidence, from the test, of non-normality of this sample" [4].

Pearson's Chi-square test of distributions is given as follows [2]:

$$\chi^2 = \sum_{j=1}^{k} \frac{(n_j^* - n p_j)^2}{n p_j} \sim \chi^2_f, \qquad f = k - 1 \qquad (3)$$

where $n_j^*$ is the number of data in the jth interval, $n_j = n p_j$ is the theoretical number of data in the jth interval, $p_j$ is the probability of the jth interval, and $\chi^2_f$ is the chi-square statistic with $f$ degrees of freedom.

The probabilities follow from the interval ends as

$$p_j = F(x_j'') - F(x_j') = F(t_j'') - F(t_j') \qquad (4)$$

where (for the normal distribution)

$$t_j' = \frac{x_j' - \bar{x}}{\sigma} = \frac{\Delta x_j'}{\sigma}, \qquad t_j'' = \frac{x_j'' - \bar{x}}{\sigma} = \frac{\Delta x_j''}{\sigma} \qquad (5)$$

Regardless of the conclusions reached by the formal tests (2) and (3), the literature [2] recommends a careful approach and suggests using skewness and kurtosis measures, as well as the theoretical relationship between the mean square error, the average error and the probable error, as further checks of the normality of a data sample. Skewness and kurtosis are given by the formulae:

$$\hat{\gamma} = \frac{1}{n\sigma^3} \sum_{i=1}^{n} (x_i - \bar{x})^3 \qquad (6)$$

$$\hat{\beta} = \frac{1}{n\sigma^4} \sum_{i=1}^{n} (x_i - \bar{x})^4 \qquad (7)$$

where

$$\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i \qquad (8)$$

$$\sigma^2 = \frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})^2 \qquad (9)$$

Even though some modifications of formulae (6) and (7) for skewness and kurtosis exist in the contemporary literature, only the forms shown here are used in this paper. The average error $\theta$ and the probable error $\rho$ are defined as follows [2]:

$$\theta = \frac{1}{n} \sum_{i=1}^{n} |\Delta_i| \qquad (10)$$

$$P(|\Delta| < \rho) = \frac{1}{2} \qquad (11)$$

The theoretical relationship between the errors is given as

$$\sigma : \theta : \rho = 1 : 1.25 : 1.48 \qquad (12)$$

that is, $\sigma = 1.25\,\theta = 1.48\,\rho$.

The research in this paper mostly follows the models given by formulae (1) to (12).

3 Normality testing of levelling data obtained from production measurements - Case Study

In this paper the normality of a set of levelling data is tested. The data were obtained with a digital level and bar-coded rods, and were collected for the deformation monitoring of an object.

3.1 Description of measurement

The levelling method was "back"-"for"-"for"-"back" at each station. The differences between the two height differences obtained at each station are the object of the analysis. The height differences at each station were calculated by the formulae

$$\Delta h_1 = b_1 - f_1 \qquad (13)$$

$$\Delta h_2 = b_2 - f_2 \qquad (14)$$

where $b$ and $f$ denote the back and fore readings of the first and the second pair of sights. The difference of the height differences for each station is

$$d = \Delta h_1 - \Delta h_2 \qquad (15)$$

The data set for analysis consists of n = 1324 values of $d$, i.e. of 1324 measured height differences.

3.2 Obtained Results and Discussion

The optimal number of intervals according to (1) is 15 because

$$k \le 5 \cdot \log 1324 \approx 15.6, \qquad k = 15$$

Table 1 shows the ends of intervals, empirical frequencies and averages for each group of data. The averages of the data in the intervals were tested for normality by the Shapiro-Wilk test, and there was no reason to reject the null hypothesis because

$$W = 0.95 > W_{n;\,0.95} = 0.874$$

Table 1. Ends of intervals, empirical frequencies and averages for each interval

| $x_j$ | $n_j^*$ | Average |
|---|---|---|
| -∞ | | |
| -0.198 | 1 | -0.21 |
| -0.173 | 0 | |
| -0.148 | 1 | -0.14 |
| -0.124 | 2 | -0.12 |
| -0.099 | 30 | -0.10 |
| -0.074 | 48 | -0.07 |
| -0.050 | 142 | -0.05 |
| -0.025 | 206 | -0.02 |
| 0.000 | 367 | 0.00 |
| 0.024 | 225 | 0.02 |
| 0.049 | 212 | 0.05 |
| 0.074 | 54 | 0.07 |
| 0.098 | 30 | 0.10 |
| 0.123 | 4 | 0.12 |
| 0.148 | 2 | 0.14 |
| +∞ | | |

Consequently, according to the Shapiro-Wilk test it is possible to state that the analysed data follow the normal distribution. However, bearing in mind that the power of normality tests is low for small sample sizes [3], Pearson's Chi-square test is applied as the next step. The table for the normality test of the levelling data was formed according to the optimal number of intervals and the condition $n_j^* \ge 5$. The span of the intervals was calculated as follows:

$$\Delta = \frac{d_{max} - d_{min}}{k} = \frac{0.16 - (-0.21)}{15} = 0.024667 \approx 0.025\ \text{mm}$$

Table 2 shows the data for normality testing by Pearson's Chi-square test.

Table 2. Data tabled for Pearson's Chi-square test

| $x_j$ | $t_j$ | $n_j^*$ | $F(t_j)$ | $p_j$ | $n p_j$ | $n_j^* - n p_j$ | $(n_j^* - n p_j)^2 / n p_j$ |
|---|---|---|---|---|---|---|---|
| -∞ | -∞ | | -1.00000 | | | | |
| -0.099 | -2.328 | 34 | -0.98005 | 0.00997 | 13.205 | 20.795 | 32.748 |
| -0.074 | -1.758 | 48 | -0.92117 | 0.02944 | 38.983 | 9.017 | 2.086 |
| -0.050 | -1.188 | 142 | -0.76520 | 0.07798 | 103.250 | 38.750 | 14.543 |
| -0.025 | -0.619 | 206 | -0.46438 | 0.15041 | 199.146 | 6.854 | 0.236 |
| 0.000 | -0.049 | 367 | -0.03917 | 0.21260 | 281.488 | 85.512 | 25.977 |
| 0.024 | 0.521 | 225 | 0.39786 | 0.21851 | 289.310 | -64.310 | 14.295 |
| 0.049 | 1.090 | 212 | 0.72435 | 0.16325 | 216.137 | -4.137 | 0.079 |
| 0.074 | 1.660 | 54 | 0.90300 | 0.08933 | 118.268 | -64.268 | 34.924 |
| 0.098 | 2.230 | 30 | 0.97420 | 0.03560 | 47.134 | -17.134 | 6.229 |
| +∞ | +∞ | 6 | 1.00000 | 0.01290 | 17.080 | -11.080 | 7.187 |
| Sum | | 1324 | | 1.00000 | 1324 | 0.000 | $\chi^2$ = 138.30 |

According to the results of Pearson's Chi-square test we shall reject the null hypothesis and accept the alternative one, because

$$\chi^2 = 138.30 > \chi^2_{f;\,0.999} = 31.26$$

These conflicting results call for further analysis, consisting of skewness, kurtosis and the theoretical relationship between the characteristic errors. Diagrams 1 and 2 show the polygon of frequencies and the histogram of frequencies, respectively, related to the theoretical frequencies of the normal distribution.

Diagram 1. Polygon of empirical frequencies related to theoretical frequencies

Diagram 2. Histogram of empirical frequencies related to theoretical frequencies

The literature [2] contains detailed analytical models for the skewness and kurtosis analysis, which is performed next. Quantiles $\gamma_p$ of the distribution of the statistic $\hat{\gamma}$, where $P(|\hat{\gamma}| < \gamma_p) = p$, are tabulated, and the value for n = 1324 and p = 0.95 is ±0.111.
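The interval count from formula (1) and the Pearson statistic of Table 2 can be retraced with a short script. The following is a minimal sketch, not the author's computation: the function names are illustrative, the logarithm in (1) is assumed to be base 10 (the only base that yields 15 for n = 1324), and the expected frequencies are recomputed from the rounded $t_j$ values printed in Table 2, so the result should agree with the tabulated 138.30 only approximately.

```python
import math

# Standardised upper class limits t_j and observed counts n_j* copied from Table 2.
T_UPPER = [-2.328, -1.758, -1.188, -0.619, -0.049,
           0.521, 1.090, 1.660, 2.230, math.inf]
OBSERVED = [34, 48, 142, 206, 367, 225, 212, 54, 30, 6]


def optimal_intervals(n):
    """Largest integer k with k <= 5*log10(n); base 10 is an assumption."""
    return math.floor(5 * math.log10(n))


def std_normal_cdf(t):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


def pearson_chi_square(t_upper, observed):
    """Sum of (n_j* - n*p_j)^2 / (n*p_j) over all classes, as in formula (3)."""
    n = sum(observed)
    chi2 = 0.0
    lower_cdf = 0.0  # the first class starts at minus infinity
    for t, n_obs in zip(t_upper, observed):
        upper_cdf = std_normal_cdf(t)
        expected = n * (upper_cdf - lower_cdf)  # theoretical frequency n*p_j
        chi2 += (n_obs - expected) ** 2 / expected
        lower_cdf = upper_cdf
    return chi2


if __name__ == "__main__":
    print("k =", optimal_intervals(1324))
    print("chi-square = %.2f" % pearson_chi_square(T_UPPER, OBSERVED))
```

The class probabilities here come from the ordinary normal cumulative distribution function, whereas Table 2 lists a function $F(t_j)$ ranging from -1 to 1; the two give the same $p_j$ once the difference of $F$ values is halved.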
According to formulae (6) and (7) the estimates of skewness and kurtosis are

$$\hat{\gamma} = -0.141, \qquad \hat{\beta} = 3.372, \qquad \hat{\varepsilon} = 3.372 - 3 = +0.372$$

Because $\hat{\gamma} = -0.141 \notin (-0.111, +0.111)$, we reject the null hypothesis of symmetry of the empirical distribution of the analysed data at the significance level $\alpha = 0.05$. However, for p = 0.99 we have $\hat{\gamma} = -0.141 \in (-0.158, +0.158)$, meaning that at the significance level $\alpha = 0.01$ there is no reason to reject the null hypothesis of symmetry of the analysed data.

The criterion for accepting the null hypothesis about kurtosis is

$$|\hat{\varepsilon}| < t \sqrt{\frac{24}{n}} = 0.264$$

For our set of data

$$|\hat{\varepsilon}| = 0.372 > 0.264$$

meaning that the null hypothesis shall be rejected.

Comparing the mean square error, the average error and the probable error gives

$$\sigma = 0.0433, \qquad \theta = 0.0303, \qquad \rho = 0.0300$$

$$\sigma : \theta : \rho = 1 : 1.43 : 1.44$$

meaning that the theoretical relationship (12) is not satisfied.

According to [5] the Jarque-Bera (Skewness-Kurtosis) test is given by the formula

$$n \left( \frac{\text{skewness}^2}{6} + \frac{(\text{kurtosis} - 3)^2}{24} \right) \sim \chi^2_2 \qquad (16)$$

Substituting the obtained values $\hat{\gamma} = -0.141$ and $|\hat{\varepsilon}| = 0.372$ into formula (16) gives

$$1324 \left( \frac{(-0.141)^2}{6} + \frac{0.372^2}{24} \right) = 12.05 > \chi^2_{2;\,0.99} = 11.34$$

which means that the null hypothesis cannot be accepted even at the significance level $\alpha = 0.01$.

Summarizing the obtained results, only the Shapiro-Wilk test confirmed the normal distribution of the analysed data, while Pearson's Chi-square test, the skewness and kurtosis analyses, the Jarque-Bera test and the theoretical relationship between the errors could not confirm it. This implies that different methods can lead to opposite conclusions about the distribution of the same data. In the literature [6] it is stated: "Knowledge of the probability density function of the observables is not needed to routinely apply a least-squares algorithm and compute estimates for the parameters of interest. For the interpretation of the outcomes, and in particular for the statements on the quality of the estimator, the probability density has to be known." Bearing in mind that geodetic control measurements are the basis for conclusions about the state of an object or engineering structure, knowledge of the probability density is significant.

4 Conclusions

The case study showed that opposite hypotheses about the distribution of a single data set can be accepted. Because the set of measured data was quite large, all the tests can be considered reliable. This raises questions about the results of production measurements. For example, some influences may have disturbed the normal distribution of the data during the measurements, or gross errors may have contaminated the results. However, production measurements are almost impossible to repeat, even when gross errors or disturbing influences have been detected. The reasons may be the limited time available for the measurements, technological processes on site, or changes of the measured object over time. Production measurements are also the basis for decisions which, if not based on reliable data, could lead to unacceptable losses. One possible solution under these conditions (limited possibility of repeating measurements and acceptance of opposite hypotheses) is to analyse, for every measurement, the influence of deviations from the normal distribution on the reliability of the final results.

References

[1] Perović, G.: Least Squares. University of Belgrade, Faculty of Civil Engineering, Belgrade. 2005.
[2] Perović, G.: Adjustment calculus, theory of measurements error (in SRB: Рачун изравнања, теорија грешака мерења). University of Belgrade, Faculty of Civil Engineering, Belgrade. 1989.
[3] Razali, N. M., Wah, Y. B.: Power comparisons of Shapiro-Wilk, Kolmogorov-Smirnov, Lilliefors and Anderson-Darling tests. Journal of Statistical Modeling and Analytics, Vol. 2, No. 1, pp 21-33. 2011.
[4] Shapiro, S.S., Wilk, M.B.: An Analysis of Variance Test for Normality (Complete Samples). Biometrika, Vol. 52, No. 3/4, pp 591-611. 1965.
[5] Park, Hun Myoung: Univariate Analysis and Normality Test Using SAS, Stata and SPSS. Technical Working Paper. The University Information Technology Services (UITS) Center for Statistical and Mathematical Computing, Indiana University. 2008.
[6] Tiberius, C.C.J.M., Borre, K.: Are GPS data normally distributed? (http://link.springer.com/chapter/10.1007%2F978-3642-59742-8_40#page-2)
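The skewness, kurtosis, error-ratio and Jarque-Bera figures of Section 3.2 can be retraced from the reported estimates. The sketch below is illustrative only: the function names are hypothetical, and the limits are computed from the large-sample standard deviations $\sqrt{6/n}$ and $\sqrt{24/n}$ with normal quantiles 1.645, 2.326 and 1.960, which is an assumption chosen because it comes close to the tabulated limits quoted in the paper (±0.111, ±0.158 and 0.264), not the tables of [2] themselves. Small differences from the printed values are to be expected because the inputs are rounded.

```python
import math

# Values reported in Section 3.2 of the paper.
N = 1324
SKEWNESS = -0.141
EXCESS_KURTOSIS = 0.372
SIGMA, THETA, RHO = 0.0433, 0.0303, 0.0300


def skewness_limit(n, z):
    """Approximate limit for skewness, assuming standard deviation sqrt(6/n)."""
    return z * math.sqrt(6.0 / n)


def kurtosis_limit(n, z):
    """Approximate limit for excess kurtosis, assuming standard deviation sqrt(24/n)."""
    return z * math.sqrt(24.0 / n)


def jarque_bera(n, skewness, excess_kurtosis):
    """Jarque-Bera statistic as written in formula (16)."""
    return n * (skewness ** 2 / 6.0 + excess_kurtosis ** 2 / 24.0)


if __name__ == "__main__":
    # z-values are assumptions: one-sided normal quantiles for 0.95 and 0.99,
    # and the two-sided 0.95 quantile for the kurtosis criterion.
    print("skewness limit (p = 0.95): %.3f" % skewness_limit(N, 1.645))
    print("skewness limit (p = 0.99): %.3f" % skewness_limit(N, 2.326))
    print("kurtosis limit:            %.3f" % kurtosis_limit(N, 1.960))
    print("Jarque-Bera statistic:     %.2f" % jarque_bera(N, SKEWNESS, EXCESS_KURTOSIS))
    # Empirical error ratios against the theoretical ratios for a normal distribution.
    print("sigma/theta: %.2f (theory %.2f)" % (SIGMA / THETA, math.sqrt(math.pi / 2.0)))
    print("sigma/rho:   %.2f (theory %.2f)" % (SIGMA / RHO, 1.0 / 0.6745))
```

The theoretical ratios follow from $\theta = \sigma\sqrt{2/\pi}$ and $\rho \approx 0.6745\,\sigma$ for a normal distribution, which is where the 1.25 and 1.48 in formula (12) come from.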