* Your assessment is very important for improving the workof artificial intelligence, which forms the content of this project

Download Goodness of Fit

Survey

Document related concepts

Transcript

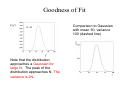

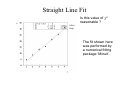

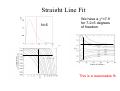

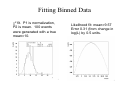

Goodness of Fit How do we decide if the fit is good ? Common quantitative criterion (for Gaussian distributed errors) – chi-square (χ2) test. We derive the distribution of χ2. Assume µi is the true mean of xi. The normalized probability of observing x1 in dx1, x2 in dx2, …, for Gaussian distributed variables is ⎡ 1 ⎤ 1 2 P(x1, x 2 ,..., x N )dx1dx 2 Ldx N = ∏ exp⎢− 2 (x i − µi ) ⎥ dx i ⎣ 2σ i ⎦ i=1 2πσ i 1 qi = (x i − µi ) Define: N σi r r Q(q )dq1 LdqN = P( x )dx1 Ldx N N /2 N /2 N ⎡ ⎤ ⎞ ⎞ ⎛ ⎛ ⎡ 1 2⎤ 1 1 1 r 2 Q(q ) = ⎜ ⎟ exp⎢− ∑ qi ⎥ = ⎜ ⎟ exp⎢− χ ⎥ ⎣ 2 ⎦ ⎝ 2π ⎠ ⎣ 2 i=1 ⎦ ⎝ 2π ⎠ Goodness of Fit χ = 2 Represents the square of a radius in Ndimensional q space. N ∑ qi2 i=1 We calculate the probability distribution for χ2 F( χ 2 )dχ 2 = F( χ 2 )2 χdχ For a given value of χ, we find the total probability within a shell of thickness dχ F( χ )dχ = f ( χ )dχ = 2 2 r ∫ Q(q )dq1 LdqN shell χ ,dχ Note: all solutions with same χ2 are equally probable. We therefore integrate the joint pdf from one χ2 surface to the next. Goodness of Fit F( χ )dχ = f ( χ )dχ = 2 2 r ∫ Q(q )dq1 LdqN shell χ ,dχ ⎛ 1 ⎞N / 2 =⎜ ⎟ ∫ e− χ ⎝ 2π ⎠ shell χ ,dχ ⎛ 1 ⎞N / 2 − χ =⎜ ⎟ e ⎝ 2π ⎠ ⎛ 1 ⎞N / 2 − χ =⎜ ⎟ e ⎝ 2π ⎠ = Ce −χ 2 / 2 2 /2 2 /2 dq1 LdqN ∫ dq1 LdqN shell χ ,dχ 2 /2 Aχ N −1dχ χ N −2 2 χdχ Goodness of Fit So F( χ ) = Ce 2 −χ 2 / 2 χ N −2 We can figure out the constant C by normalization ∞ ∞ ∫ F( χ ) dχ = 1 = C ∫ e−z / 2 z(N / 2)−1dz 2 2 0 0 [ C= 2 N /2 ] Γ(N /2) −1 1 F( χ )dχ = N / 2 e− χ 2 Γ(N /2) 2 2 2 /2 ( χ 2 )(N / 2)−1 dχ 2 This is the famous chi-square distribution for N degrees of freedom. It can be used to check whether a fit is reasonable. Goodness of Fit For a given (least-squares) fit to a set of data, a certain χ2 value will be obtained. One can then look up in tables whether this value is reasonable by calculating, e.g., PN ( χ > 2 χ 02 ) ∞ = ∫ F( χ 2 )dχ 2 χ 02 or χ 02 PN ( χ 2 < χ 02 ) = ∫ F( χ 2 )dχ 2 0 These are sometimes called p-numbers Goodness of Fit Comparison to Gaussian with mean 50, variance 100 (dashed line) F(χ2) N=50 χ2 Note that the distribution approaches a Gaussian for large N. The peak of the distribution approaches N. The variance is 2N. Goodness of Fit χ2 1 − ∫ F ( χ ′ 2 ) dχ ′ 2 0 Here n is the number of ‘degrees of freedom’ Reduced chi-square, χ2/ndf as a function of the number of degrees of freedom. Use this to determine if fit bad, or too good (errors overestimated). Goodness of Fit Note that the previous derivation assumed we knew the true values of the parameters. Of course we don’t, or we would not have performed the experiment. We use the measured best estimate as our mean. This produces a too small chi-squared. Look again at our starting point: ⎡ 1 ⎤ 1 2 exp⎢− 2 (x i − µi ) ⎥ dx i P(x1, x 2 ,..., x N )dx1dx 2 Ldx N = ∏ ⎣ 2σ i ⎦ i=1 2πσ i N Where do the µI come from ? Degrees of Freedom Suppose the theory prediction depends on n parameters ai and we fix these from the observations µi* = µ(a1,L,an ;i) N χ 2 = ∑ qi2 with q i = i=1 F( χ )dχ = f ( χ )dχ = 2 2 ∫ shell χ ,dχ (x i − µi* ) σi r n r Q(q ) ∏ δ ( f i (q ) − ai ) dq1 LdqN i=1 The δ-functions indicate that the parameters used to determine the qi themselves depend on the data. We therefore have reduced dimensionality of the integral. Degrees of Freedom F( χ )dχ = f ( χ )dχ = 2 2 ∫ shell χ ,dχ = Ae = Ce r n r Q(q ) ∏ δ ( f i (q ) − ai ) dq1 LdqN −χ 2 / 2 −χ 2 / 2 i=1 ∫ dq1 LdqN −n shell χ ,dχ χ N −n−2 2 χdχ So the χ2 distribution has N-n effective data points – ‘degrees of freedom’. Imagine fitting a line to 2 points. The (2) parameters of the line are fixed by the data, and the χ2 is obviously 0 since any line goes through two points. Straight Line Fit Is this value of χ2 reasonable ? Offset Slope The fit shown here was performed by a numerical fitting package ‘Minuit’. Straight Line Fit N=5 We have a χ2=7.9 for 7-2=5 degrees of freedom This is a reasonable fit. Straight Line Fit Example of good fit Errors too small ? Errors too big ? Fitting and standard errors How do we define the standard error on the parameters ? For the line fit, they came out analytically. In general, we can get the standard error by recalling the definition: Recall: −1/ 2 ⎛ d ln L ⎞ ∆α = ⎜− 2 ⎟ ⎝ dα ⎠ 2 Generalize: ( ) 2 −1 σa ij ∂2 ln L =− ∂ai∂a j Assuming a Gaussian likelihood, we write, for one parameter * 2 α − α ) ( log(L) = log(L* ) − 2σ 2 For |α-α*|=σ, we have 2 σ ) ( log(L) = log(L* ) − 2 = log(L* ) − 0.5 2σ Fitting and standard errors-cont. This is a prescription which can be used by the numerical fitting programs – the likelihood is evaluated, and the best fit values correspond to the maximum of the likelihood (or minimum chi-squared). The covariance matrix is then evaluated by determining where the log of the likelihood drops by 0.5 (for one standard deviation). This allows for asymmetric errors (as opposed to evaluating the second derivative, which yields a symmetric error). For chi-squared, the prescription for one standard deviation is the value of the parameters where chi-squared has increased by one unit. Recall 2 2 (U ) −1 ij = 1 ∂ χ 2 ∂ai ∂a j General Case We discussed in detail the case where the function to be compared to the data is linear in the fit parameters. For this case, we found: n Summary (linear form, Gaussian errors): ξ i = ∑ Cim am m=1 The parameters are given by: a = M −1 X The covariance of the parameters is σ ij2 = (M −1 )ij Where: N Xm = ∑ i =1 C im σ 2 i N xi M ml = ∑ i =1 C im C il σ 2 i = M lm General Case What if the function to be fit is not linear in the parameters ? In this case, we have to use an iterative procedure (numerical fitting program). This implies making a first guess on the value of the parameters, calculating χ2, then determining how χ2 varies with the value of the parameters, and finding a minimum. r 2 ∂χ 2 r r ∂f (x i ; a0 ) = gr ( a0 ) = ∑ − 2 [y i − f (x i ; a0 )] ∂ar ar = ar ∂ ar i σi 0 To find an extremum, we want to solve r r ∂χ 2 gr ( a0 + δa) = =0 ∂ar ar = ar +δar 0 For all r General Case Expanding in a Taylor series ∂ gr ∂ 2χ 2 r r r r δ as gr ( a0 + δa) ≈ gr ( a0 ) + ∑ δas = gr ( a0 ) + ∑ s ∂ as s ∂ar∂as then r r δa = −Gg where ⎛ ∂ 2 f i ⎞⎤ ⎛ −2 ⎞⎡ ⎛ ∂f i ⎞⎛ ∂f i ⎞ ∂ 2χ 2 Grs = = ∑ ⎜ 2 ⎟⎢−⎜ ⎟⎜ ⎟ + (y i − f i )⎜ ⎟⎥ ∂as∂ar i ⎝ σ i ⎠⎣ ⎝∂ar ⎠⎝∂as ⎠ ⎝∂ar∂as ⎠⎦ and r f i = f (x i ; a0 ) We then iterate until the changes become small. Starting guess is very important. Scanning possible, … Uses and misuses of χ2 The χ2 approach to fitting is the most widely used because of its simplicity. In addition, the χ2 value is a valuable indicator of how well the theory represents the data. However, you should always remember that it relies on Gaussian distributed errors. A standard problem is the attempt to fit such a histogram with a χ2 approach: Assume the parent distribution is a Poisson distribution. We know how to extract the Poisson mean using the Bayes method or a maximum likelihood approach, but it can be cumbersome. How do we perform a χ2 fit ? Fitting Binned Data The probability for an event to be in a bin is P(x|a), and we have measured a total of N events. Suppose we have M bins, labeled by the index j, and bin j is centered at xj, and has a width wj. The predicted number of events in bin j is therefore: f j = NP(x j | a)w j Note that wj=1 for a Poisson distribution. For a continuous distribution, we should integrate: x max f j = N ∫ P(x | a)dx x min Where xmin and xmax are the bin boundaries. The actual number of evenst in a bin follows a Poisson distribution, and we set σ2j=fj Fitting Binned Data Then we set: χ2 = ∑ j (n j − f j ) 2 fj But fj depends on the unknown parameter a, so this formula is replaced by: 2 − f ) (n j χ2 = ∑ j nj j You will see this used very often. Remember that the χ2 approach was derived for Gaussian uncertainties. I.e., fj should be large, and nj should be reasonably close to fj. In the histogram shown, what do we take for bins with 0,1,.. entries ? Usually empty bins ignored, althought they contain information. Should use Bayes of max likelihood if possible. Fitting Binned Data χ2 fit. P1 is normalization, P2 is mean. 100 events were generated with a true mean=10. Likelihood fit: mean=9.57 Error 0.31 (from change in log(L) by 0.5 units. Kolmogorov Test Another test that a one-dimensional data sample is compatible with being a random sampling from a given distribution is called the Kolmogorov (also called Kolmogorov-Smirnov) test. It is also used to test whether two data samples are compatible with being random samplings of the same, unknown distribution. First arrange your (N) data in order of increasing x, and calculate the cumulative distribution as a function of x, giving a weight of 1/N to each data point. This is then compared to the cumulative distribution of the pdf (or second data sample). The maximum deviation is calculated: DN = max[SN (x) − F(x)] This is multiplied by √N and compared to expectations Kolmogorov Test Example: Poisson distribution with mean 7, 100 events ND 1.63 1.36 1.22 1.07 Signific ance 1% 5% 10% 20%