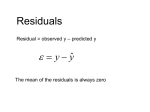

Chapter 8 Linear regression
Math2200

Scatterplot
• One Double Whopper (a Burger King sandwich) contains 53 grams of protein, 65 grams of fat and 1020 calories, so two Double Whoppers would supply about a day's worth of calories.
• Fat versus protein for 30 items on the Burger King menu

The linear model
• Parameters
  – Intercept
  – Slope
• The model is NOT perfect!
• Predicted value: $\hat{y}$
• Residual = observed – predicted = $y - \hat{y}$
  – The model overestimates when the residual < 0
  – The model underestimates when the residual > 0

The linear model (cont.)
• We write our model as $\hat{y} = b_0 + b_1 x$
• This model says that our predictions follow a straight line.
• If the model is a good one, the data values will scatter closely around it.

How did I get the line?
• "Best fit" line
  – Minimize the residuals overall
  – The line of best fit is the line for which the sum of the squared residuals is smallest.
  – Least squares: choose $b_0$ and $b_1$ to minimize $\sum (y - \hat{y})^2$

How did I get the line? (cont.)
• The regression line
  – Slope: $b_1 = r \frac{s_y}{s_x}$, in units of y per unit of x
  – Intercept: $b_0 = \bar{y} - b_1 \bar{x}$, in units of y

TI-83
• Enter the data as lists first
• Press STAT
• Move the cursor to CALC
• Press 4 (LinReg(ax+b)) or press 8 (LinReg(a+bx))
• Enter the names of the lists you want to regress, e.g., L1, L2
• Press ENTER
• To see $r$ and $r^2$:
  – Set DIAGNOSTICS ON
  – Press 2ND + 0 (CATALOG) and move the cursor down to DiagnosticsOn
  – Press ENTER (you will see 'DONE')
  – Repeat the linear regression steps above; you will now see the correlation coefficient $r$ and $r^2$

TI-83
• How to make the residual plot?
  – Same as making a scatterplot
  – Set XLIST to the explanatory variable
  – Set YLIST to 'RESID'

Interpreting the regression line
• Slope: increasing x by 1 unit changes the predicted value $\hat{y}$ by $b_1$ units
• Intercept: the predicted value of y when x = 0
• Predicted value at x: $\hat{y} = b_0 + b_1 x$

Fat Versus Protein: An Example
• The regression line for the Burger King data fits the data well:
  – The equation is $\widehat{fat} = 6.8 + 0.97 \cdot protein$
  – The predicted fat content for a BK Broiler chicken sandwich (30 grams of protein) is 6.8 + 0.97(30) = 35.9 grams of fat.
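The slope and intercept of the regression line, and the BK Broiler prediction, can be sketched in a few lines of Python. This is only an illustration, not how the slides computed the line (they use a TI-83): the function names `fit_line` and `predict` and the toy data are assumptions made up for this sketch, and only the coefficients 6.8 and 0.97 come from the Burger King example.

```python
import math

def fit_line(x, y):
    """Return (b0, b1, r) for the least-squares line y-hat = b0 + b1 * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Sample standard deviations (divide by n - 1).
    s_x = math.sqrt(sum((xi - x_bar) ** 2 for xi in x) / (n - 1))
    s_y = math.sqrt(sum((yi - y_bar) ** 2 for yi in y) / (n - 1))
    # Correlation coefficient r.
    r = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / ((n - 1) * s_x * s_y)
    b1 = r * s_y / s_x        # slope, in units of y per unit of x
    b0 = y_bar - b1 * x_bar   # intercept, in units of y
    return b0, b1, r

def predict(b0, b1, x):
    """Predicted value y-hat at x."""
    return b0 + b1 * x

# Hypothetical toy data (NOT the Burger King menu): points lying exactly on y = 1 + 2x.
toy_x = [1.0, 2.0, 3.0, 4.0, 5.0]
toy_y = [3.0, 5.0, 7.0, 9.0, 11.0]
toy_b0, toy_b1, toy_r = fit_line(toy_x, toy_y)

# Prediction from the slide's fitted equation: fat-hat = 6.8 + 0.97 * protein.
bk_fat = predict(6.8, 0.97, 30)
print(round(bk_fat, 1))
```

Computing the slope as r times s_y / s_x mirrors the formula on the slide; a direct least-squares solve would give the same line.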
Correlation and the Line
• Moving one standard deviation away from the mean in x moves us r standard deviations away from the mean in y.

How Big Can Predicted Values Get?
• r cannot be bigger than 1 (in absolute value), so each predicted y tends to be closer to its mean (in standard deviations) than its corresponding x was.
• This property of the linear model is called regression to the mean; the line is called the regression line.
• The term goes back to Sir Francis Galton.

Residuals Revisited
• The residuals are the part of the data that hasn't been modeled.
  Data = Model + Residual
  or (equivalently)
  Residual = Data – Model
  or, in symbols, $e = y - \hat{y}$

Residuals Revisited (cont.)
• When a regression model is appropriate, nothing interesting should be left behind.
  – The residual plot should have no pattern, no bend, and no outliers.
    • Residuals against x
    • Residuals against the predicted value $\hat{y}$
  – The spread of the residuals should be the same throughout the plot.

Residuals Revisited (cont.)
• If the residuals show no interesting pattern in the residual plot, we use the standard deviation of the residuals to measure how much the points spread around the regression line. The standard deviation of the residuals is given by $s_e = \sqrt{\frac{\sum e^2}{n - 2}}$
• We need the Equal Variance Assumption for the standard deviation of the residuals. The associated condition to check is the Does the Plot Thicken? Condition.

Residual plot
[Figure: "Residuals vs Fitted" plot for lm(cars$dist ~ cars$speed); x-axis: Fitted values, y-axis: Residuals]

How well does the linear model fit?
• The variation in the residuals is the key to assessing how well the model fits.
• Total fat (y): sd = 16.4 g
• Residuals: sd = 9.2 g (less variation)
• How much of the variation is accounted for by the model?
• How much is left in the residuals?

Variation
• The squared correlation, $r^2$, gives the fraction of the data's variation explained by the model.
• We can view $1 - r^2$ as the fraction of the variability in y that is NOT explained by the regression line, that is, the variability that has been left in the residuals.
• For the BK model, $r^2 = 0.83^2 = 0.69$, so 31% of the variability in total fat has been left in the residuals.

$R^2$: The Variation Accounted For
• 0: no variance explained
• 1: all variance explained by the model
• How big should $R^2$ be to conclude that the model fits the data well?
  – $R^2$ is always between 0% and 100%. What makes a "good" $R^2$ value depends on the kind of data you are analyzing and on what you want to do with it.

Check the following conditions
• The two variables are both quantitative
• The relationship is linear (straight enough)
  – Scatterplot
  – Residual plot
• No outliers: are there very large residuals?
  – Scatterplot
  – Residual plot
• Equal variance: all residuals should share the same spread
  – Residual plot: Does the Plot Thicken?

Summary
• Is the linear model appropriate?
  – Residual plot
• How well does the model fit?
  – $R^2$

Reality Check: Is the Regression Reasonable?
• Statistics don't come out of nowhere. They are based on data.
  – The results of a statistical analysis should reinforce your common sense, not fly in its face.
  – If the results are surprising, then either you've learned something new about the world or your analysis is wrong.
• When you perform a regression, think about the coefficients and ask yourself whether they make sense.

What Can Go Wrong?
• Don't fit a straight line to a nonlinear relationship.
• Beware extraordinary points (y-values that stand off from the linear pattern or extreme x-values).
• Don't extrapolate beyond the data; the linear model may no longer hold outside the range of the data.
• Don't infer that x causes y just because there is a good linear model for their relationship: association is not causation.
• Don't choose a model based on $R^2$ alone.

What have we learned?
• When the relationship between two quantitative variables is fairly straight, a linear model can help summarize that relationship.
  – The regression line doesn't pass through all the points, but it is the best compromise in the sense that it has the smallest sum of squared residuals.

What have we learned? (cont.)
• The correlation tells us several things about the regression:
  – The slope of the line is based on the correlation, adjusted for the units of x and y.
  – For each SD in x that we are away from the x mean, we expect to be r SDs in y away from the y mean.
  – Since r is always between -1 and +1, each predicted y is no more SDs away from its mean than the corresponding x was (regression to the mean).
  – $R^2$ gives us the fraction of the variation in the response accounted for by the regression model.

What have we learned? (cont.)
• The residuals also reveal how well the model works.
  – If a plot of the residuals against predicted values shows a pattern, we should reexamine the data to see why.
  – The standard deviation of the residuals quantifies the amount of scatter around the line.

What have we learned? (cont.)
• The linear model makes no sense unless the Linear Relationship Assumption is satisfied.
• Also, we need to check the Straight Enough Condition and the Outlier Condition with a scatterplot.
• For the standard deviation of the residuals, we must make the Equal Variance Assumption. We check it by looking at both the original scatterplot and the residual plot for the Does the Plot Thicken? Condition.

Summary for Chapters 7 and 8
• How to read a scatterplot?
  – Direction
  – Form
  – Strength
• Correlation coefficient
  – When can you use it?
  – How to calculate it?
  – How to interpret it?
• Linear regression
  – When can you use it?
  – How to calculate it?
  – How to interpret it?
  – How to make predictions?
  – How to read a residual plot?
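As a review aid, the residual quantities from this chapter (the residuals e = y − ŷ, their standard deviation, and R²) can be sketched in Python. This is a hedged illustration only: the helper names and the five-point toy data set are hypothetical, and the line ŷ = 2.2 + 0.6x is the least-squares line for that toy data, not anything from the Burger King menu. Only the value r = 0.83 is taken from the BK example.

```python
import math

def residuals(x, y, b0, b1):
    """Residual e = observed - predicted for each point."""
    return [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]

def residual_sd(e):
    """Standard deviation of the residuals, dividing by n - 2."""
    return math.sqrt(sum(ei ** 2 for ei in e) / (len(e) - 2))

def r_squared(y, e):
    """Fraction of the variation in y accounted for by the model."""
    y_bar = sum(y) / len(y)
    total = sum((yi - y_bar) ** 2 for yi in y)   # variation in y
    left_over = sum(ei ** 2 for ei in e)         # variation left in the residuals
    return 1 - left_over / total

# Hypothetical toy data; its least-squares line is y-hat = 2.2 + 0.6 * x.
toy_x = [1.0, 2.0, 3.0, 4.0, 5.0]
toy_y = [2.0, 4.0, 5.0, 4.0, 5.0]
e = residuals(toy_x, toy_y, 2.2, 0.6)
s_e = residual_sd(e)
toy_r2 = r_squared(toy_y, e)

# BK example: squaring the correlation r = 0.83 gives the fraction explained.
bk_r2 = 0.83 ** 2
print(round(toy_r2, 2), round(s_e, 2), round(bk_r2, 2), round(1 - bk_r2, 2))
```

Plotting `e` against `toy_x` or against the predicted values would give the residual plot used to check the Straight Enough and Does the Plot Thicken? conditions.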