* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Document

Degrees of freedom (statistics) wikipedia , lookup

Foundations of statistics wikipedia , lookup

Confidence interval wikipedia , lookup

Bootstrapping (statistics) wikipedia , lookup

History of statistics wikipedia , lookup

Taylor's law wikipedia , lookup

Misuse of statistics wikipedia , lookup

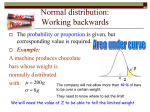

Università degli Studi di Milano
Master's Degree Programme in Pharmacy (Corso di Laurea Magistrale in Farmacia)
Economic and marketing aspects of medicines and generic medicines - Module: Generic medicines
Prof. Andrea Gazzaniga

Review of Statistics (Richiami di Statistica)
Dr. Matteo Cerea, PhD

Basic statistics

Why Statistics? Two Purposes
1. Descriptive: finding ways to summarize the important characteristics of a dataset.
2. Inferential: how (and when) to generalize from a sample dataset to the larger population.

Descriptive Statistics
Descriptive statistics provide graphical and numerical ways to organize, summarize, and characterize a dataset.

VARIABLE
A characteristic or property whose value can vary among the subjects of a sample or a population. Ex:
• The weight of tablets in a batch
• The concentration of drug in the plasma of patients after the administration of a fixed dose

Types of Variables
Predictor variable (x): the antecedent conditions used to predict the outcome of interest. In an experimental study it is called the "independent variable".
Outcome variable, y = f(x): the variable you want to be able to predict. In an experimental study it is called the "dependent variable".
Continuous variable: can assume an infinite number of possible values between any two observed values (e.g. between the lowest and the highest). Ex: the drug content of tablets in a batch, expressed in µg.
Ranked variable: treated like a continuous variable although it does not represent a physical measurement; its scale is a numerically ordered system. Ex. (stent encrustation score):
0 = no encrustation
1 = microscopic deposits on <50% of the stent
2 = microscopic deposits on >50% of the stent
3 = small macroscopic deposits on <50% of the stent
4 = small macroscopic deposits on >50% of the stent
5 = heavy macroscopic deposits
Discrete (discontinuous, meristic) variable: consists of separate, indivisible categories.
Discrete variables take integer values. Ex: number of asthma attacks, number of fatalities, number of colonies of microorganisms.
Nominal (categorical) variable: cannot be measured because of its qualitative nature. Ex: sex, gender, side effects associated with the treatment.
Nominal ranked (ordinal) variable. Ex: side effects associated with the treatment, if ordered.

How to present data?
1. Describing data with tables and graphs (quantitative or categorical variables)
2. Numerical descriptions of center, variability and position (quantitative variables)
3. Bivariate descriptions (in practice, most studies have several variables)

1. Tables and Graphs
Several types of graphs or plots are used to display scientific data:
• Graphs or plots that describe relationships between a fixed (independent) variable and a dependent variable
• Graphs that pictorially describe distributions of data

A frequency distribution lists the possible values of a variable (or intervals of values) and the number of times each occurs.

Example: Pharmaceutical Statistics, David Jones, Pharmaceutical Press
http://books.google.co.ve/books?id=oXZD1GPOJIcC&printsec=frontcover&hl=it&source=gbs_ge_summary_r&cad=0#v=onepage&q&f=false

Frequency Tables
Frequency Distribution Histogram: bar graph of frequencies, percentages or relative frequencies.
[Figure: frequency distribution of tablets in class intervals from 290.1-291.0 to 309.1-310.0]
[Figure: relative frequency distribution; y axis: proportion of tablets in interval, 0.000 to 0.180]
[Figure: cumulative frequency distribution (data and graph), "less than" and "more than" forms]

Qualitative or nominal data
Civil status X: unordered qualitative variable, measured on a nominal scale. Ex.
4 possible values: x1 = N, x2 = C, x3 = V, x4 = S
• xi: the k values of X
• ni: absolute frequency of xi
• n: total number of observations
• fi = ni/n: relative frequency; pi = fi · 100%: percent frequency

Frequency distribution of X:
xi | ni | fi = ni/n | pi = fi · 100%
N | 6 | 0.30 | 30
C | 7 | 0.35 | 35
V | 4 | 0.20 | 20
S | 3 | 0.15 | 15
Total | n = 20 | 1.00 | 100
[Figure: pie chart of the civil status distribution]

Ex. Annual income in thousands of euros
W: continuous quantitative variable. The data (n = 20) are divided into 4 classes.
• ai: width (amplitude) of each class
• li = ni/ai: frequency density
• Ni: cumulative frequency

Frequency table:
xi | ni | fi | Ni | ai | li
40 ⊣ 50 | 3 | 0.15 | 3 | 10 | 0.30
50 ⊣ 58 | 6 | 0.30 | 9 | 8 | 0.75
58 ⊣ 70 | 4 | 0.20 | 13 | 12 | 0.33
70 ⊣ 95 | 7 | 0.35 | 20 | 25 | 0.28
Total | 20 | 1.00 | | |

2. Descriptive Measures
Numerical descriptions: let X denote a quantitative variable, with observations X1, X2, X3, …, Xn.
• a. Central tendency measures: computed to give a "center" around which the measurements in the data are distributed.
• b. Variation or variability measures: describe the "data spread", i.e. how far the measurements are from the center.
• c. Relative standing measures: describe the relative position of specific measurements in the data.

a. Measures of Central Tendency
• Mean (average): the sum of all measurements divided by the number of measurements, $\bar{X} = \frac{\sum_{i=1}^{N} X_i}{N}$, where N is the number of observations.
• Weighted mean: used when the data points do not contribute equally, $\bar{X}_w = \frac{\sum_i w_i X_i}{\sum_i w_i}$, where w is the frequency (weight) of each value.
• Median: the central value of a set of data arranged in order of magnitude.
• Mode: the most frequent measurement in the data.
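The table columns above (fi, Ni, ai, li) and the central-tendency definitions can be reproduced with a short Python sketch. The class limits and counts are copied from the income and civil status tables; the helper names (`IncomeClass`, `frequency_table`, `grouped_mean`) are illustrative, and representing each class by its midpoint for the weighted mean is an assumption made here, not part of the original slides.

```python
from dataclasses import dataclass

@dataclass
class IncomeClass:
    lower: float  # open lower limit ("40 ⊣ 50" means 40 < x <= 50)
    upper: float  # closed upper limit
    n: int        # absolute frequency n_i

# Class limits and counts copied from the annual-income table above
classes = [
    IncomeClass(40, 50, 3),
    IncomeClass(50, 58, 6),
    IncomeClass(58, 70, 4),
    IncomeClass(70, 95, 7),
]

def frequency_table(classes):
    """Return one row per class: (label, n_i, f_i, N_i, a_i, l_i)."""
    total = sum(c.n for c in classes)
    rows, cumulative = [], 0
    for c in classes:
        cumulative += c.n              # N_i: cumulative frequency
        width = c.upper - c.lower      # a_i: class width (amplitude)
        rows.append((f"{c.lower}-{c.upper}", c.n, c.n / total,
                     cumulative, width, c.n / width))  # l_i = n_i / a_i
    return rows

def grouped_mean(classes):
    """Weighted mean, each class represented by its midpoint
    (assumes the values are spread evenly inside each class)."""
    total = sum(c.n for c in classes)
    return sum(c.n * (c.lower + c.upper) / 2 for c in classes) / total

# Nominal data: civil status counts from the table above
civil_status = {"N": 6, "C": 7, "V": 4, "S": 3}
n_obs = sum(civil_status.values())
relative = {k: v / n_obs for k, v in civil_status.items()}   # f_i
mode = max(civil_status, key=civil_status.get)               # most frequent category

for label, n_i, f_i, cum, a_i, l_i in frequency_table(classes):
    print(f"{label:>6}  n={n_i}  f={f_i:.2f}  N={cum:<2}  a={a_i:<2}  l={l_i:.2f}")
print("relative frequencies:", relative)
print("grouped mean:", grouped_mean(classes))
print("mode:", mode)
```

For nominal data such as civil status, only the mode is meaningful; the mean and median require a quantitative (or, for the median, at least ordinal) scale.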
Calculation of the mean: $\bar{X} = \frac{2+0+0+6+4+24+9+6+1+0}{10} = \frac{52}{10} = 5.2$
Calculation of the median: ordered data 0, 0, 0, 1, 2, 4, 6, 6, 9, 24. With an even number of observations, the median is the average of the two central values: (2 + 4)/2 = 3.

Properties of mean and median
• For symmetric distributions, mean = median.
• For skewed distributions, the mean is drawn in the direction of the longer tail, relative to the median.
• The mean is valid for continuous scales; the median for continuous or ordinal scales.
• The mean is sensitive to "outliers" (the median is often preferred for highly skewed distributions).
• When the distribution is symmetric or mildly skewed, or discrete with few values, the mean is preferred because it uses the numerical values of the observations.
Ex. in pharmacokinetics: tmax is reported as median (range); AUC as mean (standard deviation).

In other words…
• When the mean is greater than the median, the data distribution is skewed to the right.
• When the median is greater than the mean, the data distribution is skewed to the left.
• When mean and median are very close to each other, the data distribution is approximately symmetric.

b. Describing variability
Range: the difference between the largest and smallest observations (highly sensitive to outliers, insensitive to shape). Used for non-normally distributed data (Ex: tmax).

Mean deviation: the average distance from the mean. The deviation of observation j from the mean is $X_j - \bar{X}$, and
$MD = \frac{\sum_{j=1}^{N} |X_j - \bar{X}|}{N}$

# | Drug content | Absolute error $|X_j - \bar{X}|$
1 | 100.6 | 0.1
2 | 98.3 | 2.2
3 | 98.9 | 1.6
4 | 95.1 | 5.4
5 | 104.5 | 4.0
6 | 105.5 | 5.0
Mean = 100.5 | Sum = 18.3 | MD = 18.3/6 = 3.1

Variance: based on the "sum of squares" (SS) of the deviations from the mean,
$SS = \sum_{j} (Y_j - \bar{Y})^2$
• It is a measure of "spread": the larger the deviations (positive or negative), the larger the variance.

Population variance (mean sum of squares): $\sigma^2 = \frac{\sum_{j=1}^{N} (Y_j - \mu)^2}{N}$
Variance of a sample of a population: $s^2 = \frac{\sum_{j=1}^{n} (Y_j - \bar{Y})^2}{n - 1} = \frac{(Y_1 - \bar{Y})^2 + \dots + (Y_n - \bar{Y})^2}{n - 1}$

Standard deviation: the square root of the variance, $\sigma = \sqrt{\sigma^2}$ for the population and $s = \sqrt{s^2}$ for a sample.

Ex: sample of a population: 100 tablets (sample) are removed from a batch of 1,000,000 tablets (population) and tested.
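The calculations above can be reproduced with Python's standard `statistics` module, as a minimal sketch using the data of the two worked examples. `statistics.variance` and `statistics.stdev` divide the sum of squares by n - 1 (sample), while `statistics.pvariance` divides by N (population), matching the two formulas; `mean_deviation` is an illustrative helper written here, since the module has no function for it.

```python
import statistics

# Ten observations from the mean/median example above
values = [2, 0, 0, 6, 4, 24, 9, 6, 1, 0]
mean_value = statistics.mean(values)      # 52 / 10
median_value = statistics.median(values)  # average of the two central values

# Drug content of six tablets (mean-deviation table above)
drug_content = [100.6, 98.3, 98.9, 95.1, 104.5, 105.5]

def mean_deviation(data):
    """Average absolute distance from the mean: sum(|x - mean|) / N."""
    m = statistics.mean(data)
    return sum(abs(x - m) for x in data) / len(data)

md = mean_deviation(drug_content)
sample_var = statistics.variance(drug_content)   # SS / (n - 1)
pop_var = statistics.pvariance(drug_content)     # SS / N, data treated as the whole population
sample_sd = statistics.stdev(drug_content)       # square root of the sample variance
data_range = max(drug_content) - min(drug_content)

print(f"mean = {mean_value}, median = {median_value}")
print(f"MD = {md:.2f}, s^2 = {sample_var:.2f}, sigma^2 = {pop_var:.2f}, s = {sample_sd:.2f}")
print(f"range = {data_range:.1f}")
```

Both variances share the same sum of squares, so `sample_var * (n - 1)` equals `pop_var * N`; with only six tablets the two estimates differ noticeably, which is why the n - 1 divisor matters for small samples.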
The variance of a single random sample of measurements does not provide a good estimate of the variance of the population from which the sample was drawn. A good estimate of the population variance can be obtained from sample data by averaging the variances of several samples.

Concentration of a penicillin antibiotic in 5 bottles
Bottle # | Concentration of penicillin (mg/5 mL) | Contribution to the variance, $(Y_j - \bar{Y})^2/(n-1)$
1 | 125 | 134.56
2 | 124 | 123.21
3 | 121 | 92.16
4 | 123 | 112.36
5 | 16 | 1840.41
Mean (mg/5 mL): 101.8 | Total variance (sample): 2302.7 | Standard deviation: 48.0 | Median: 123
(The single low value of bottle 5 inflates the variance and pulls the mean well below the median.)

Standard deviation (standard error) of the mean: SEM
Concentration of amoxicillin in 5 aliquots, each tested 5 times (N = number of observations in each sample)
Test | Aliquot 1 | Aliquot 2 | Aliquot 3 | Aliquot 4 | Aliquot 5
1 | 25.1 | 27.6 | 24.3 | 23.9 | 25.7
2 | 25.4 | 25.5 | 26.4 | 24.9 | 23.5
3 | 21.9 | 25.6 | 25.1 | 26.1 | 24.2
4 | 24.5 | 25.0 | 27.1 | 27.0 | 25.7
5 | 23.1 | 24.2 | 25.0 | 25.2 | 24.3
Mean | 24.0 | 25.6 | 25.6 | 25.4 | 24.7
s | 1.5 | 1.3 | 1.1 | 1.2 | 1.0
Mean total (mean of the aliquot means): 25.1
SEM (standard deviation of the 5 aliquot means): 0.70
SEM estimated from a single sample as $s/\sqrt{N}$ (e.g. aliquot 1: $1.47/\sqrt{5}$): 0.66

$SEM = \frac{s}{\sqrt{N}}$, where s = standard deviation of the sample and N = number of observations in the sample.

The standard deviation of a sample is an estimate of the variability of the population: its value does not decrease as the number of observations increases.
The standard deviation of the mean (SEM) is a measure of the variability (precision) of the estimate of a defined population parameter (i.e.
the mean). As the sample size increases, the standard error decreases.

Coefficient of variation (CV)
$CV(\%) = \frac{S}{\bar{X}} \times 100$

Accuracy: the closeness of a measured value to the true value (the value in the absence of error).
Absolute error: $error_{abs} = O - E$, where E is the true (exact) value and O is the observed value (or mean)
Relative error: $error_{rel} = \frac{error_{abs}}{E} = \frac{O - E}{E}$
Precision: describes the dispersion (variability) of a set of measurements. High precision means low dispersion of the values around a central value (low standard deviation).

Accuracy is how close a measurement is to the "true" value. In a laboratory setting, this is often how far a measured value is from a standard of known value that was measured with a different technology or on a different instrument.
Precision is how close repeated measurements are to each other. Precision has no bearing on a target value; it is simply how close multiple measurements are to one another. Reproducibility is key to scientific research, and precision is important in this respect.

c. Measures of position
pth percentile: p percent of the observations fall below it and (100 - p)% above it.
• Example: if the 85th percentile of a dataset is 340, then 15% of the measurements are above 340 and 85% are below 340.
• The median is the 50th percentile.
p = 50: median
p = 25: lower quartile (LQ)
p = 75: upper quartile (UQ)
Interquartile range: IQR = UQ - LQ

Quartiles are portrayed graphically by box plots (John Tukey).
[Figure: box plot of weekly TV watching for n = 60, from the student survey data file, with 3 outliers]
A box plot has a box from LQ to UQ, with the median marked. It portrays a five-number summary of the data (minimum, LQ, median, UQ, maximum), with outliers identified separately.
Outlier = an observation falling below LQ - 1.5(IQR) or above UQ + 1.5(IQR)
Ex.
If LQ = 2 and UQ = 10, then IQR = 8, and outliers are observations above 10 + 1.5(8) = 22.

Normal distribution curve
[Figure: normal distribution curve]
In a normal distribution of data, also known as a bell curve:
• approximately 68% of the data fall within ±1 standard deviation of the mean. For example, if the standard deviation of a dataset is 2, about 68% of the data fall between the mean - 2 and the mean + 2;
• about 95.4% of normally distributed data fall within two standard deviations of the mean;
• over 99% fall within three standard deviations.

For any data (Chebyshev's inequality):
• At least 75% of the measurements differ from the mean by less than twice the standard deviation.
• At least 89% of the measurements differ from the mean by less than three times the standard deviation.

Inferential Statistics
…mathematical tools that allow the researcher to generalize to a population of individuals on the basis of information obtained from a limited number of research participants (observations).

Sample statistics / Population parameters
• We distinguish between summaries of samples (statistics) and summaries of populations (parameters).
• It is common to denote statistics by Roman letters and parameters by Greek letters: population mean = µ, standard deviation = σ, proportion = π.
In practice, parameter values are unknown; we make inferences about their values using sample statistics.

Sample proportion
Definition: the statistic that estimates the parameter π, the proportion of a population that has some property, is the sample proportion
$p = \frac{\text{number of successes in the sample}}{\text{total number of observations in the sample}}$
• The sample mean $\bar{X}$ estimates the population mean µ (quantitative variable).
• The sample standard deviation s estimates the population standard deviation σ (quantitative variable).
• A sample proportion p estimates a population proportion π (categorical variable).

Ex. From a population of N individuals, 2 samples of 100 individuals are extracted.
Mean height of the first sample: $\bar{X}_1$ = 168 cm
Mean height of the second sample: $\bar{X}_2$ = 162 cm
… and so on, until all the samples have been extracted.
The sample mean estimates the population mean, but with uncertainty. The uncertainty depends on:
1. the sample size
2. the variability of the population
The sample means differ, but how are they distributed?
[Figure: population distribution and sampling distributions of the mean for sample sizes n1 and n2 > n1]

Standard deviation of the sampling distribution = standard error (SE)
• Mean: $SEM = \frac{\sigma}{\sqrt{N}}$ (estimated by $s/\sqrt{N}$)
• Proportion: $SE = \sqrt{\frac{p(1-p)}{n}}$

4. Probability Distributions
Probability: with random sampling or a randomized experiment, the probability that an observation takes a particular value is the proportion of times that outcome would occur in a long sequence of observations. It usually corresponds to a population proportion (and thus falls between 0 and 1) for some real or conceptual population.
A probability distribution lists all the possible values and their probabilities (which add up to 1.0).

Basic probability rules
Let A and B denote possible outcomes.
• P(not A) = 1 - P(A)
• For distinct (mutually exclusive) outcomes A and B, P(A or B) = P(A) + P(B)
• For any outcomes A and B, P(A and B) = P(A) × P(B given A)
• For "independent" outcomes, P(B given A) = P(B), so P(A and B) = P(A) × P(B).
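The four rules above can be checked with exact fractions on a simple model. This sketch assumes a fair six-sided die (one roll, and two independent rolls for the independence rule); the die is an illustrative example not taken from the slides, and `prob`/`prob_given` are hypothetical helper names for classical probability with equally likely outcomes.

```python
from fractions import Fraction
from itertools import product

# Illustrative model (not from the slides): one roll of a fair six-sided die
omega = frozenset(range(1, 7))

def prob(event, space=omega):
    """Classical probability: favourable outcomes / equally likely outcomes."""
    return Fraction(len(event & space), len(space))

def prob_given(event, given):
    """Conditional probability P(event given 'given') for equally likely outcomes."""
    return Fraction(len(event & given), len(given))

six = frozenset({6})
one_or_two = frozenset({1, 2})   # distinct from 'six': no outcome in common
even = frozenset({2, 4, 6})

# Complement rule: P(not A) = 1 - P(A)
complement_ok = prob(omega - six) == 1 - prob(six)

# Addition rule for distinct outcomes: P(A or B) = P(A) + P(B)
addition_ok = prob(six | one_or_two) == prob(six) + prob(one_or_two)

# Multiplication rule: P(A and B) = P(A) * P(B given A)
multiplication_ok = prob(even & six) == prob(even) * prob_given(six, even)

# Independence: two rolls, P(six and six) = P(six on roll 1) * P(six on roll 2)
two_rolls = frozenset(product(range(1, 7), repeat=2))
both_six = frozenset(o for o in two_rolls if o == (6, 6))
first_six = frozenset(o for o in two_rolls if o[0] == 6)
second_six = frozenset(o for o in two_rolls if o[1] == 6)
independence_ok = (prob(both_six, two_rolls)
                   == prob(first_six, two_rolls) * prob(second_six, two_rolls))

print(complement_ok, addition_ok, multiplication_ok, independence_ok)
print("P(two sixes) =", prob(both_six, two_rolls))  # 1/36
```

Using `Fraction` keeps every probability exact, so the rules can be compared with `==` instead of a floating-point tolerance.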
Probability distribution of a variable
Lists the possible outcomes of the "random variable" and their probabilities.
Discrete variable: assign probabilities P(y) to individual values y, with $0 \le P(y) \le 1$ and $\sum_y P(y) = 1$.
In practice, probability distributions are often estimated from sample data, and then take the form of frequency distributions.
Like frequency distributions, probability distributions have descriptive measures such as the mean and standard deviation.
Expected value: $\mu = E(Y) = \sum_y y\,P(y)$
Standard deviation: a measure of the "typical" distance of an outcome from the mean, denoted by σ.
If a distribution is approximately bell-shaped, then:
• all or nearly all of the distribution falls between µ - 3σ and µ + 3σ
• probability about 0.68 falls between µ - σ and µ + σ

Continuous variables: probabilities are assigned to intervals of numbers.
• The most important probability distribution for continuous variables is the normal distribution.
• It is symmetric and bell-shaped.
• It is characterized by its mean (µ) and standard deviation (σ), representing center and spread.
• The probability within any particular number of standard deviations of µ is the same for all normal distributions.
An individual observation from an approximately normal distribution has probability
• 0.68 of falling within 1 standard deviation of the mean
• 0.95 of falling within 2 standard deviations
• 0.997 of falling within 3 standard deviations

The normal curve is often called the Gaussian distribution, after Carl Friedrich Gauss, who discovered many of its properties. Gauss, commonly viewed as one of the greatest mathematicians of all time, was honoured by Germany on the 10 Deutschmark banknote.

Properties of the standard normal distribution (cont.)
• It has mean = 0 and standard deviation = 1.
• General relationships: ±1σ ≈ 68.26%, ±2σ ≈ 95.44%, ±3σ ≈ 99.72%
[Figure: standard normal curve with the areas within ±1, ±2 and ±3 standard deviations; z axis from -5 to 5]

Notes about z-scores
z-scores are a way of determining the position of a single score under the normal curve, measured in standard deviations relative to the mean of the curve.
The z-score can be used to determine an area under the curve, i.e. a probability:
$z = \frac{y - \mu}{\sigma}$
• The standard normal distribution is the normal distribution with µ = 0, σ = 1. For that distribution, z = (y - µ)/σ = (y - 0)/1 = y, i.e. the original score equals the z-score: µ + zσ = 0 + z(1) = z.
• Why is the normal distribution so important? If different studies take random samples and calculate a statistic (e.g. the sample mean) to estimate a parameter (e.g. the population mean), the collection of statistic values from those studies usually has approximately a normal distribution.

Ex. 5000 tablets are produced and assayed for content. The mean (µ ± σ) is 200 ± 10 mg and the content is normally distributed. Calculate the proportion of tablets that contain 180 mg or less.
[Figure: normal curve for this example, with aligned axes for z, y - µ (mg) and Y (mg)]
$z = \frac{y - \mu}{\sigma} = \frac{180 - 200}{10} = -2.00$
From a z-score (z probability) table (http://www.bucknam.com/zprob.html): for z = -2.00, the probability below is 0.023, i.e. about 2.3% of the tablets (roughly 114 of the 5000).

A sampling distribution lists the possible values of a statistic (e.g. the sample mean or sample proportion) and their probabilities.
How close is the sample mean $\bar{Y}$ to the population mean µ? To answer this, we must be able to answer: "What is the probability distribution of the sample mean?"

Sampling distribution of the sample mean
• $\bar{y}$ is a variable: its value varies from sample to sample about the population mean µ.
• The standard deviation of the sampling distribution of $\bar{y}$ is called the standard error of $\bar{y}$.
• For random sampling, the sampling distribution of $\bar{y}$ has mean µ and standard error $\sigma_{\bar{y}} = \frac{\sigma}{\sqrt{n}}$, where σ is the population standard deviation and n the sample size.

Central Limit Theorem: for random sampling with "large" n, the sampling distribution of the sample mean is approximately a normal distribution.
• Approximate normality applies no matter what the shape of the population distribution.
• How "large" n needs to be depends on the skew of the population distribution, but usually n ≥ 30 is sufficient.

5. Statistical Inference: Estimation
Goal: how can we use sample data to estimate the values of population parameters?
Point estimate: a single statistic value that is the "best guess" for the parameter value.
Interval estimate: an interval of numbers around the point estimate that has a fixed "confidence level" of containing the parameter value. It is called a confidence interval (and is based on the sampling distribution of the point estimate).

Point Estimators: most commonly, the corresponding sample values
• The sample mean estimates the population mean µ: $\hat{\mu} = \bar{y} = \frac{\sum y_i}{n}$
• The sample standard deviation estimates the population standard deviation σ: $\hat{\sigma} = s = \sqrt{\frac{\sum (y_i - \bar{y})^2}{n - 1}}$
• The sample proportion $\hat{p}$ estimates the population proportion π

Confidence Intervals
• A confidence interval (CI) is an interval of numbers believed to contain the parameter value.
Ex. When public health practitioners use health statistics, they are sometimes interested in the actual number of health events, but more often they use the statistics to assess the true underlying risk of a health problem in the community. Statistical sampling theory is used to compute a confidence interval that estimates the potential discrepancy between the true population parameters and the observed rates. Understanding the potential size of that discrepancy helps in interpreting the observed statistic.
• The probability that the method produces an interval that contains the parameter is called the confidence level. Most studies use a confidence level close to 1, such as 0.95 or 0.99.
• Most CIs have the form: point estimate ± margin of error, with the margin of error based on the spread of the sampling distribution of the point estimator; e.g. margin of error ±2(standard error) for 95% confidence.
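The Central Limit Theorem and the "±2 standard errors for 95% confidence" rule can be illustrated by simulation. In this sketch the population is deliberately skewed (exponential with mean 1 and standard deviation 1); the population, the sample size n = 30, the 2000 replicates and the seed are arbitrary choices made here for illustration, not values from the slides.

```python
import random
import statistics

def simulate_sample_means(n, replicates, seed=2024):
    """Draw `replicates` random samples of size n from an exponential
    population (mean 1, SD 1) and return the sample means."""
    rng = random.Random(seed)   # fixed seed so the run is reproducible
    return [statistics.mean(rng.expovariate(1.0) for _ in range(n))
            for _ in range(replicates)]

n = 30
means = simulate_sample_means(n, replicates=2000)

mu, sigma = 1.0, 1.0                     # population mean and SD of Exp(1)
se_theory = sigma / n ** 0.5             # standard error sigma / sqrt(n)
se_observed = statistics.stdev(means)    # SD of the simulated sampling distribution

# Share of sample means within mu ± 1.96 standard errors (about 0.95 if approximately normal)
coverage = sum(abs(m - mu) <= 1.96 * se_theory for m in means) / len(means)

print(f"mean of sample means = {statistics.mean(means):.3f} (mu = {mu})")
print(f"SD of sample means   = {se_observed:.3f} (sigma/sqrt(n) = {se_theory:.3f})")
print(f"within ±1.96 SE      = {coverage:.3f}")
```

Even though the exponential population is strongly right-skewed, the simulated sample means centre on µ, their spread is close to σ/√n, and roughly 95% of them fall within 1.96 standard errors of µ. Rerunning with a smaller n (e.g. 5) shows the approximation degrading, in line with the remark that the required n depends on the skew.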
Confidence Intervals
A 95% confidence interval for a percentage is a range of values constructed so that, if you went back many times and drew a different sample from the same population, about 95% of the intervals obtained would contain the population percentage.
• The sampling distribution of a sample proportion for large random samples is approximately normal (Central Limit Theorem).
• So, with probability 0.95, the sample proportion $\hat{p}$ falls within 1.96 standard errors of the population proportion π.
• z = 1.96 leaves 0.025 in each tail: the cumulative probability below z = 1.96 is 0.975, so the probability between -1.96 and 1.96 is 0.95.
• With probability 0.95, $\hat{p}$ falls between $\pi - 1.96\sigma_{\hat{p}}$ and $\pi + 1.96\sigma_{\hat{p}}$.
• Once the sample is selected, we are 95% confident that the interval $\hat{p} - 1.96\sigma_{\hat{p}}$ to $\hat{p} + 1.96\sigma_{\hat{p}}$ contains π.
This is the CI for the population proportion π (almost).

Finding a CI in practice
• Complication: the true standard error $\sigma_{\hat{p}} = \sigma/\sqrt{n} = \sqrt{\pi(1-\pi)/n}$ itself depends on the unknown parameter!
In practice, we estimate $\sigma_{\hat{p}} = \sqrt{\frac{\pi(1-\pi)}{n}}$ by $se = \sqrt{\frac{\hat{p}(1-\hat{p})}{n}}$ and then find the 95% CI using the formula $\hat{p} - 1.96(se)$ to $\hat{p} + 1.96(se)$ (the quantity 1 - p is often written q).
• Greater confidence requires a wider CI.
• A greater sample size gives a narrower CI (quadruple n to halve the width of the CI).

Some comments about CIs
• The effects of n and of the confidence coefficient hold for CIs for other parameters too.
• If we repeatedly took random samples of some fixed size n and each time calculated a 95% CI, in the long run about 95% of the CIs would contain the population proportion π.
• The probability that the CI does not contain π is called the error probability and is denoted by α.
• α = 1 - confidence coefficient

(1 - α)100% | α | α/2 | z_{α/2}
90% | .10 | .050 | 1.645
95% | .05 | .025 | 1.96
99% | .01 | .005 | 2.58

Confidence Interval for the Mean
• In large random samples, the sample mean has approximately a normal sampling distribution with mean µ and standard error $\sigma_{\bar{y}} = \frac{\sigma}{\sqrt{n}}$.
• Thus, $P(\mu - 1.96\sigma_{\bar{y}} \le \bar{y} \le \mu + 1.96\sigma_{\bar{y}}) = 0.95$.
• We can be 95% confident that the sample mean lies within 1.96 standard errors of the (unknown) population mean.
• Problem: the standard error is unknown (σ is also a parameter).
It is estimated by replacing σ with its point estimate from the sample data: $se = \frac{s}{\sqrt{n}}$
95% confidence interval for µ: $\bar{y} \pm 1.96(se)$, which is $\bar{y} \pm 1.96\frac{s}{\sqrt{n}}$
This works well for "large n", because s is then a good estimate of σ (and the CLT applies). But for small n, replacing σ by its estimate s introduces extra error, and the CI is not quite wide enough unless we replace the z-score by a slightly larger "t-score".

The t distribution (Student's t)
The t distribution is used instead of the normal distribution whenever the standard deviation is estimated.
• Bell-shaped, symmetric about 0
• Standard deviation a bit larger than 1 (slightly thicker tails than the standard normal distribution, which has mean = 0 and standard deviation = 1)
• Its precise shape depends on the degrees of freedom (df). For inference about a mean, df = n - 1
• It gets narrower and more closely resembles the standard normal distribution as df increases (nearly identical when df > 30)
• The CI for a mean has margin of error t(se) (instead of z(se) as in the CI for a proportion)

Part of a t table
df | 90%: t.050 | 95%: t.025 | 98%: t.010 | 99%: t.005
1 | 6.314 | 12.706 | 31.821 | 63.657
10 | 1.812 | 2.228 | 2.764 | 3.169
16 | 1.746 | 2.120 | 2.583 | 2.921
30 | 1.697 | 2.042 | 2.457 | 2.750
100 | 1.660 | 1.984 | 2.364 | 2.626
∞ | 1.645 | 1.960 | 2.326 | 2.576
df = ∞ corresponds to the standard normal distribution.

CI for a population mean
• For a random sample from a normal population distribution, a 95% CI for µ is $\bar{y} \pm t_{.025}(se)$, with $se = s/\sqrt{n}$, where df = n - 1 for the t-score.
• The normal population assumption ensures that the sampling distribution has a bell shape for any n.

Comments about the CI for a population mean µ
• The method is robust to violations of the assumption of a normal population distribution. (But be careful if the sample data distribution is very highly skewed, or if there are severe outliers. Look at the data.)
• Greater confidence requires a wider CI.
• Greater n produces a narrower CI.
• t methods were developed by the statistician William Gosset of the Guinness Brewery, Dublin (1908).

Choosing the Sample Size
• Determine the parameter of interest (population mean or population proportion).
• Select a margin of error (M) and a confidence level (which determines the z-score).
Proportion (to be "safe", set π = 0.50): $n = \pi(1-\pi)\left(\frac{z}{M}\right)^2$
Mean (needs a guess for the value of σ): $n = \sigma^2\left(\frac{z}{M}\right)^2$
• n depends on the confidence level (higher confidence requires a larger n) and on the population variability (more variability requires a larger n).
• In practice, determining n is not so easy, because (1) there are many parameters to estimate and (2) resources may be limited and we may need to compromise.
• CIs can be formed for any parameter.

Using CI Inference in Practice
• What is the variable of interest? Quantitative: inference about a mean. Categorical: inference about a proportion.
• Are the conditions satisfied? Randomization (why? It is needed so that the sampling distribution and its standard error are as advertised).

6. Statistical Inference: Significance Tests
Goal: use statistical methods to test hypotheses such as
"For treating anorexia, cognitive behavioral and family therapies have the same mean weight change as placebo" (no effect)
"Mental health tends to be better at higher levels of socioeconomic status (SES)" (i.e., there is an effect)
"Spending money on other people has a more positive impact on happiness than spending money on oneself."
Hypotheses: for statistical inference, these are predictions about a population expressed in terms of parameters (e.g., population means, proportions or correlations) for the variables considered in a study.
A significance test uses data to evaluate a hypothesis by comparing sample point estimates of parameters to the values predicted by the hypothesis.
We answer a question such as: "If the hypothesis were true, would it be unlikely to get data such as we obtained?"

Five Parts of a Significance Test
• Assumptions: about the type of data (quantitative, categorical), the sampling method (random), the population distribution (e.g., normal, binary) and the sample size (large enough?).
• Hypotheses:
– Null hypothesis (H0): a statement that the parameter(s) take specific value(s) (usually "no effect").
– Alternative hypothesis (Ha): states that the parameter value(s) fall in some alternative range of values (an "effect").
• Test statistic: compares the data to what the null hypothesis H0 predicts, often by finding the number of standard errors between the sample point estimate and the H0 value of the parameter.
• P-value (P): a probability measure of the evidence about H0. It is the probability (under the presumption that H0 is true) that the test statistic equals the observed value or a value even more extreme in the direction predicted by Ha.
– The smaller the P-value, the stronger the evidence against H0.
• Conclusion:
– If no decision is needed, report and interpret the P-value.
– If a decision is needed, select a cutoff point (such as 0.05 or 0.01) and reject H0 if the P-value ≤ that value.
– The most widely accepted cutoff point is 0.05, and the test is said to be "significant at the .05 level" if the P-value ≤ 0.05.
– If the P-value is not sufficiently small, we fail to reject H0 (then H0 is not necessarily true, but it is plausible).
– The process is analogous to the American judicial system: H0: the defendant is innocent; Ha: the defendant is guilty.

(End of part one)

Significance Test for a Mean
• Assumptions: randomization, quantitative variable, normal population distribution (robustness?)
• Null hypothesis: H0: µ = µ0, where µ0 is a particular value for the population mean (typically "no effect" or "no change" from a standard)
• Alternative hypothesis: Ha: µ ≠ µ0 (the 2-sided alternative includes both > and < the H0 value)
• Test statistic: the number of standard errors that the sample mean falls from the H0 value,
$t = \frac{\bar{y} - \mu_0}{se}$, where $se = \frac{s}{\sqrt{n}}$
When H0 is true, the sampling distribution of the t test statistic is the t distribution with df = n - 1.
• P-value: under the presumption that H0 is true, the probability that the t test statistic equals the observed value or is even more extreme (i.e., larger in absolute value), providing stronger evidence against H0.
– This is a two-tail probability, for the two-sided Ha.
• Conclusion: report and interpret the P-value. If needed, make a decision about H0.

Making a decision
The α-level is a fixed number, also called the significance level, such that:
• if P-value ≤ α, we "reject H0";
• if P-value > α, we "do not reject H0".
Note: we say "do not reject H0" rather than "accept H0" because the H0 value is only one of many plausible values.
A high significance level (large α) means there is a large chance of rejecting H0 when it is actually true, i.e. of "proving" an effect that does not exist. A very small significance level means that, when H0 is rejected, there is little room to doubt the result.

Effect of sample size on tests
• With large n (say, n > 30), the assumption of a normal population distribution is not important because of the Central Limit Theorem.
• For small n, the two-sided t test is robust against violations of that assumption. However, the one-sided test is not robust.
• For a given observed sample mean and standard deviation, the larger the sample size n, the larger the test statistic (because se, in the denominator, is smaller) and the smaller the P-value
(i.e., we have more evidence with more data).
• We are more likely to reject a false H0 when we have a larger sample size (the test then has more "power").
• With large n, "statistical significance" is not the same as "practical significance".

Significance Test for a Proportion π
• Assumptions:
– Categorical variable
– Randomization
– Large sample (but the two-sided test is OK for nearly all n)
• Hypotheses:
– Null hypothesis: H0: π = π0
– Alternative hypothesis: Ha: π ≠ π0 (2-sided)
– Ha: π > π0 or Ha: π < π0 (1-sided)
– Set up the hypotheses before getting the data.
• Test statistic:
$z = \frac{\hat{p} - \pi_0}{\sigma_{\hat{p}}} = \frac{\hat{p} - \pi_0}{\sqrt{\pi_0(1-\pi_0)/n}}$
Note: $se_0 = \sqrt{\pi_0(1-\pi_0)/n}$, not $se = \sqrt{\hat{p}(1-\hat{p})/n}$ as in a CI.
As in the test for a mean, the test statistic has the form (estimate of parameter - H0 value)/(standard error) = number of standard errors the estimate falls from the H0 value.
• P-value:
Ha: π ≠ π0: P = 2-tail probability from the standard normal distribution
Ha: π > π0: P = right-tail probability from the standard normal distribution
Ha: π < π0: P = left-tail probability from the standard normal distribution
• Conclusion: as in the test for a mean (e.g., reject H0 if P-value ≤ α).

Decisions in Tests
α-level (significance level): a pre-specified "hurdle"; one rejects H0 if the P-value falls at or below it (typically 0.05 or 0.01).
P-value | H0 conclusion | Ha conclusion
≤ .05 | Reject | Accept
> .05 | Do not reject | Do not accept
• Rejection region: the values of the test statistic for which we reject the null hypothesis. For 2-sided tests with α = 0.05, we reject H0 if |z| ≥ 1.96.

Error Types
• Type I error: reject H0 when it is true
• Type II error: do not reject H0 when it is false

Test result | H0 true | H0 false
Reject H0 | Type I error | Correct
Don't reject H0 | Correct | Type II error

P(Type I error)
• Suppose α-level = 0.05. Then P(Type I error) = P(reject null, given it is true) = P(|z| > 1.96) = 0.05.
• i.e., the α-level is the P(Type I error).
• Since we "give the benefit of the doubt to the null" in doing a test, it is traditional to take α small, usually 0.05, or 0.01 to be very cautious not to reject the null when it may be true.
• As with CIs, don't make α too small, since as α goes down, β = P(Type II error) goes up. (Think of the analogy with a courtroom trial.)
• It is better to report the P-value than merely whether H0 is rejected.

P(Type II error)
• P(Type II error) = β depends on the true value of the parameter (from the range of values in Ha).
• The farther the true parameter value falls from the null value, the easier it is to reject the null, and P(Type II error) goes down.
• Power of the test = 1 - β = P(reject null, given it is false).
• In practice, you want a large enough n for your study so that P(Type II error) is small for the size of effect you expect.

Practical Applications of Statistics
Researchers use a test of significance to determine whether to reject or fail to reject the null hypothesis. This involves pre-selecting a level of probability, "α" (e.g., α = .05), that serves as the criterion to determine whether to reject or fail to reject the null hypothesis.

Steps in using inferential statistics…
1. Select the test of significance.
2. Determine whether the significance test will be two-tailed or one-tailed.
3. Select α (alpha), the probability level.
4. Compute the test of significance.
5. Consult a table to determine the significance of the results.

Tests of significance...
• are statistical formulas that enable the researcher to determine whether there was a real difference between the sample means.
• Different tests of significance account for different factors, including: the scale of measurement represented by the data, the method of participant selection, the number of groups being compared, and the number of independent variables.
…the researcher must first decide whether a parametric or a nonparametric test should be selected.

Parametric test...
• Assumes that the variable measured is normally distributed in the population
• Assumes that the selection of participants is independent
• Assumes that the variances of the population comparison groups are equal
• Used when the data represent an interval or ratio scale

Nonparametric test...
• Makes no assumption about the distribution of the variable in the population, that is, about the shape of the distribution
• Used when the data represent a nominal or ordinal scale, when a parametric assumption has been greatly violated, or when the nature of the distribution is not known
• Usually requires a larger sample size to reach the same level of significance as a parametric test

The most common tests of significance: t-test, z-test, ANOVA, Chi Square

t-test...
• Used to determine whether two means are significantly different at a selected probability level
• Adjusts for the fact that the distribution of scores for small samples becomes increasingly different from the normal distribution as sample sizes become smaller
• The strategy of the t-test is to compare the actual mean difference observed to the difference expected by chance

t-test...
• Forms a ratio where the numerator is the difference between the sample means and the denominator is the chance difference that would be expected if the null hypothesis were true
• After the numerator is divided by the denominator, the resulting t value is compared to the appropriate t table value, depending on the probability level and the degrees of freedom
• If the t value (in absolute value, for a two-tailed test) is equal to or greater than the table value, the null hypothesis is rejected because the difference is greater than would be expected by chance (t stat ≥ t tab → H0 rejected)

t-test...
There are two types of t-tests:
• The t-test for independent samples (randomly formed)
• The t-test for nonindependent samples (nonrandomly formed, e.g., matching, performance on a pre-/posttest, different treatments)

Ex. t-test (worked example shown as a figure in the original slides; not reproduced)
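The two t ratios described above can be sketched with Python's standard `statistics` module. This is a minimal illustration under stated assumptions: `t_independent` and `t_paired` are hypothetical helper names, the data are made up, and the independent-samples version uses the pooled (equal-variance) form, matching the parametric assumption of equal variances. As in the slides, the decision compares t with a two-tailed critical value from a t table.

```python
import math
from statistics import mean, variance  # variance() = sample variance (n - 1 denominator)

def t_independent(a, b):
    """Student's t for two independent samples with pooled variance.

    Numerator: difference between the sample means.
    Denominator: difference expected by chance (standard error of the difference).
    Returns (t, degrees of freedom).
    """
    n1, n2 = len(a), len(b)
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    se_diff = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / se_diff, n1 + n2 - 2

def t_paired(before, after):
    """t for nonindependent (paired) samples: a one-sample t on the differences."""
    diffs = [y - x for x, y in zip(before, after)]
    n = len(diffs)
    se_mean_diff = math.sqrt(variance(diffs) / n)
    return mean(diffs) / se_mean_diff, n - 1

# Hypothetical independent samples (e.g., two formulations)
group_a = [10, 12, 11, 13, 14]
group_b = [8, 9, 10, 9, 9]
t_ind, df_ind = t_independent(group_a, group_b)
t_table_df8 = 2.306  # two-tailed critical t for alpha = 0.05, df = 8
print(f"independent: t = {t_ind:.3f}, df = {df_ind}, reject H0: {abs(t_ind) >= t_table_df8}")

# Hypothetical pre-/post-test measurements on the same 4 subjects
pre = [120, 132, 118, 125]
post = [115, 128, 117, 120]
t_pair, df_pair = t_paired(pre, post)
t_table_df3 = 3.182  # two-tailed critical t for alpha = 0.05, df = 3
print(f"paired: t = {t_pair:.3f}, df = {df_pair}, reject H0: {abs(t_pair) >= t_table_df3}")
```

The paired version is simply a one-sample t on the within-subject differences. This is why matched or pre-/posttest designs need the nonindependent-samples test rather than the pooled formula.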
Z-test (content and worked example shown as figures in the original slides; not reproduced)

ANOVA (Analysis of Variance)
• Used to determine whether two or more means are significantly different at a selected probability level
• Avoids the need to compute multiple t-tests to compare groups (more than 2)
• The strategy of ANOVA is that the total variation, or variance, can be divided into two sources:
  – treatment variance ("between groups"): the variance caused by the treatment groups
  – error variance ("within groups") variance

ANOVA (Analysis of Variance)
• Forms a ratio, the F ratio, with the treatment variance (between-group variance) as the numerator and the error variance (within-group variance) as the denominator
• The assumption is that randomly formed groups of participants are chosen and are essentially the same at the beginning of a study on a measure of the dependent variable
• At the study's end, the question is whether the variance between the groups differs from the error variance by more than what would be expected by chance
• If the treatment variance is sufficiently larger than the error variance, a significant F ratio results; that is, the null hypothesis is rejected and it is concluded that the treatment had a significant effect on the dependent variable
• If the treatment variance is not sufficiently larger than the error variance, a nonsignificant F ratio results; that is, the null hypothesis is not rejected and it is concluded that the treatment had no significant effect on the dependent variable
• When the F ratio is significant and more than two means are involved, researchers use multiple comparison procedures (e.g., Scheffé test, Tukey's HSD test, Duncan's multiple range test)

Ex. ANOVA: one-way and two-way (worked examples shown as figures in the original slides; not reproduced)

Chi Square (χ²): Pearson's test
A nonparametric test of significance appropriate for nominal or ordinal data that can be converted to frequencies. It can be applied to interval or ratio data that have been categorized into a small number of groups. It assumes that the observations are randomly sampled from the population.
All observations are independent (an individual can appear only once in a table and there are no overlapping categories). It makes no assumptions about the shape of the distribution or about the homogeneity of variances.

Chi Square (χ²)...
• Compares the proportions actually observed (O) to the proportions expected (E) to see if they are significantly different
• The chi square value increases as the difference between observed and expected frequencies increases

One- and two-tailed tests of significance...
• Tests of significance that indicate the direction in which a difference may occur
• The word "tail" indicates the area of rejection beneath the normal curve

Hypotheses for the chi square test of independence:
• H0: The two variables are independent
• Ha: The two variables are associated

Chi Square (χ²) calculations
• Contrasts the observed frequencies in each cell of a contingency table with the expected frequencies.
• The expected frequencies represent the number of cases that would be found in each cell if the null hypothesis were true (i.e., the nominal variables are unrelated).
• The expected frequency of two unrelated events is the product of the row and column totals divided by the number of cases.
$$F_e = \frac{F_r F_c}{N} \qquad \chi^2 = \sum \frac{(F_o - F_e)^2}{F_e}$$

Determine Degrees of Freedom
• df = (R − 1)(C − 1)

Compare the computed test statistic against a tabled/critical value
• The computed value of the Pearson chi-square statistic is compared with the critical value to determine whether the computed value is improbable
• The critical tabled values are based on sampling distributions of the Pearson chi-square statistic
• If the calculated χ² is greater than the χ² table value, reject H0

• Two-tailed: H0: A = B (no difference between means) is tested against a difference in either direction, positive or negative, so the rejection region lies in both tails of the normal curve; the test divides the α level between the two tails.
• One-tailed: the alternative specifies the direction, A > B or A < B, so the whole α level is placed in one tail of the normal curve (e.g., with α = 0.05 the critical z is ±1.96 for a two-tailed test and 1.645 for a one-tailed test).

Ex. Chi Square (χ²) (worked example shown as a figure in the original slides; not reproduced)

3. Bivariate description
• Usually we want to study associations between two or more variables (e.g., how the number of close friends depends on gender, income, education, age, working status, rural/urban residence, religiosity…)
• Response variable: the outcome variable
• Explanatory variable(s): define the groups to compare
Ex.: the number of close friends is a response variable, while gender, income, … are explanatory variables.
The response variable is also called the "dependent variable"; an explanatory variable is also called an "independent variable".

Summarizing associations:
• Categorical variables: show data using contingency tables
• Quantitative variables: show data using scatterplots
• Mixture of a categorical and a quantitative variable (e.g., number of close friends and gender): give numerical summaries (mean, standard deviation) or side-by-side box plots for the groups

Contingency Tables
• Cross classifications of categorical variables in which rows (typically) represent categories of the explanatory variable and columns represent categories of the response variable.
• Counts in the "cells" of the table give the numbers of individuals at the corresponding combination of levels of the two variables

Another Example
Heparin lock placement time: 1 = 72 hrs, 2 = 96 hrs

Complication Incidence * Heparin Lock Placement Time Group Crosstabulation

| Complication incidence | | Group 1 (72 hrs) | Group 2 (96 hrs) | Total |
|---|---|---|---|---|
| Had complication | Count | 9 | 11 | 20 |
| | Expected count | 10.0 | 10.0 | 20.0 |
| | % within placement time group | 18.0% | 22.0% | 20.0% |
| Had NO complication | Count | 41 | 39 | 80 |
| | Expected count | 40.0 | 40.0 | 80.0 |
| | % within placement time group | 82.0% | 78.0% | 80.0% |
| Total | Count | 50 | 50 | 100 |
| | Expected count | 50.0 | 50.0 | 100.0 |
| | % within placement time group | 100.0% | 100.0% | 100.0% |

Hypotheses in Heparin Lock Placement
• H0: There is no association between complication incidence and length of heparin lock placement. (The variables are independent.)
• Ha: There is an association between complication incidence and length of heparin lock placement. (The variables are related.)

More of SPSS Output (shown as a figure in the original slides; not reproduced)
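As a check on the heparin lock crosstabulation, the Pearson chi-square statistic can be computed directly from Fe = Fr·Fc/N and df = (R − 1)(C − 1). This is a minimal standard-library sketch: `chi_square_independence` is a hypothetical helper name, and 3.841 is the tabled χ² critical value for α = 0.05 and df = 1. It computes the uncorrected Pearson statistic; some software also reports a continuity-corrected value for 2 × 2 tables.

```python
def chi_square_independence(observed):
    """Pearson chi-square test of independence for an R x C table of counts.

    Returns (chi2, df, expected), where expected[i][j] = row_total * col_total / N.
    """
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    # Expected frequency of each cell if the two variables are unrelated
    expected = [[r * c / n for c in col_totals] for r in row_totals]
    # Sum of (Fo - Fe)^2 / Fe over all cells
    chi2 = sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(observed, expected)
        for o, e in zip(obs_row, exp_row)
    )
    df = (len(observed) - 1) * (len(observed[0]) - 1)
    return chi2, df, expected

# Rows: had complication / had no complication; columns: group 1 (72 h) / group 2 (96 h)
heparin = [[9, 11],
           [41, 39]]
chi2, df, expected = chi_square_independence(heparin)
critical = 3.841  # tabled chi-square value for alpha = 0.05, df = 1
print("expected counts:", expected)
print(f"chi2 = {chi2:.3f}, df = {df}")
print("reject H0" if chi2 > critical else "do not reject H0")
```

The expected counts (10, 10, 40, 40) reproduce the "Expected count" rows of the crosstabulation. χ² = 0.25 with df = 1 is well below 3.841, so H0 (no association between complication incidence and placement time) is not rejected at α = 0.05.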