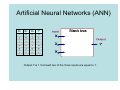

Artificial Neural Networks (ANN)

1. The basic idea behind artificial neural networks (ANNs) is to imitate the human nervous system.
2. An ANN consists of many nonlinear computing units (neurons) and the numerous links between them; these units usually compute in a parallel and distributed manner.
3. It is an information-processing system that simulates biological neural networks using computer hardware and software.
4. Taken as a whole, an ANN resembles the human brain: by training on samples or data it can exhibit learning, recall, and generalization.

Why use ANNs

When handling complex tasks, a neural network:
• Does not require a complex mathematical model to be defined for the problem
• Does not require solving any differential, integral, or other mathematical equations
• Copes with complex problems and uncertain environments by learning

Further advantages:
• Fast associative recall
• Network architecture is easy to maintain
• Solves optimization and nonlinear-system problems
• Fault tolerant
• Capable of parallel processing

Example

| X1 | X2 | X3 | Y |
|----|----|----|---|
| 1  | 0  | 0  | 0 |
| 1  | 0  | 1  | 1 |
| 1  | 1  | 0  | 1 |
| 1  | 1  | 1  | 1 |
| 0  | 0  | 1  | 0 |
| 0  | 1  | 0  | 0 |
| 0  | 1  | 1  | 1 |
| 0  | 0  | 0  | 0 |

Output Y is 1 if at least two of the three inputs are equal to 1. This table is reproduced by

$Y = I(0.3X_1 + 0.3X_2 + 0.3X_3 - 0.4 > 0)$, where $I(z) = 1$ if $z$ is true and $0$ otherwise.

For example, two active inputs give $0.6 - 0.4 > 0$, so $Y = 1$; a single active input gives $0.3 - 0.4 < 0$, so $Y = 0$.

• Model is an assembly of inter-connected nodes and weighted links
• Output node sums up each of its input values according to the weights of its links
• Compare output node against some threshold $t$

Perceptron Model

$Y = I\left(\sum_i w_i X_i - t > 0\right)$ or $Y = \mathrm{sign}\left(\sum_i w_i X_i - t\right)$

General Structure of ANN

Training an ANN means learning the weights of the neurons.

Algorithm for learning ANN
• Initialize the weights $(w_0, w_1, \ldots, w_k)$
• Adjust the weights in such a way that the output of the ANN is consistent with the class labels of the training examples
  – Objective function: $E = \sum_i \left[Y_i - f(w_i, X_i)\right]^2$
  – Find the weights $w_i$ that minimize the above objective function
    • e.g., backpropagation algorithm

Classification by Backpropagation
• Backpropagation: a neural network learning algorithm
• Neural network research was started by psychologists and neurobiologists to develop and test computational analogues of neurons
• A neural network: a set of connected input/output units where each connection has a weight associated with it
• During the learning phase, the network learns by adjusting the weights so as to be able to predict the correct class label of the input tuples
• Also referred to as connectionist learning due to the connections between units

How to set the size of the network
• Decided according to the complexity of the problem, the number of examples available for training the network, and the required accuracy
• Relies on the accumulated experience of researchers and on repeated trial and error

Neural Network as a Classifier
• Weakness
  – Long training time
  – Requires a number of parameters that are typically best determined empirically, e.g., the network topology or "structure"
  – Poor interpretability: it is difficult to interpret the symbolic meaning behind the learned weights and the "hidden units" in the network
• Strength
  – High tolerance to noisy data
  – Ability to classify untrained patterns
  – Well-suited for continuous-valued inputs and outputs
  – Successful on a wide array of real-world data
  – Algorithms are inherently parallel
  – Techniques have recently been developed for the extraction of rules from trained neural networks

Backpropagation
• Iteratively process a set of training tuples and compare the network's prediction with the actual known target value
• For each training tuple, the weights are modified to minimize the mean squared error between the network's prediction and the actual target value
• Modifications are made in the "backwards" direction: from the output layer, through each hidden layer down to the first hidden layer, hence "backpropagation"
• Steps
  – Initialize weights (to small random numbers) and biases in the network
  – Propagate the inputs forward (by applying the activation function)
  – Backpropagate the error (by updating weights and biases)
  – Terminating condition (when the error is very small, etc.)

Software
• Excel + VBA
• SPSS Clementine
• SQL Server
• Programming
• Other Data Mining Packages …
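As a quick check of the three-input example from the perceptron slides, the sketch below evaluates a perceptron with weights 0.3, 0.3, 0.3 and threshold 0.4 on all eight input combinations and compares the result with the rule "Y is 1 if at least two inputs are 1". The function names `indicator`, `perceptron`, and `majority` are illustrative choices, not part of the original slides.

```python
from itertools import product


def indicator(condition: bool) -> int:
    """I(z): 1 if the condition z is true, 0 otherwise."""
    return 1 if condition else 0


def perceptron(x, weights=(0.3, 0.3, 0.3), t=0.4):
    """Perceptron output Y = I(sum_i w_i * x_i - t > 0)."""
    weighted_sum = sum(w * xi for w, xi in zip(weights, x))
    return indicator(weighted_sum - t > 0)


def majority(x):
    """Target rule from the slides: 1 if at least two inputs equal 1."""
    return 1 if sum(x) >= 2 else 0


# Enumerate every combination of (X1, X2, X3) and print the perceptron output.
for x in product((0, 1), repeat=3):
    print(x, "->", perceptron(x), "(target:", str(majority(x)) + ")")
```

The weights and threshold are taken from the example; any positive weight w and threshold t with w < t < 2w would separate the same two classes.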
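The four backpropagation steps listed in the slides (initialize, propagate forward, backpropagate the error, check a terminating condition) can be sketched in plain Python. This is a minimal illustration under several assumptions that the slides do not specify: one hidden layer of three units, a sigmoid activation, per-tuple weight updates, a learning rate of 0.5, and a stopping tolerance of 0.01 on the mean squared error. The training data is the three-input truth table from the earlier example, and all function and parameter names are hypothetical.

```python
import math
import random

# Truth table from the slides: Y is 1 if at least two inputs are 1.
DATA = [((1, 0, 0), 0), ((1, 0, 1), 1), ((1, 1, 0), 1), ((1, 1, 1), 1),
        ((0, 0, 1), 0), ((0, 1, 0), 0), ((0, 1, 1), 1), ((0, 0, 0), 0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Propagate one input tuple forward; return hidden activations and output."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output


def mean_squared_error(data, params):
    return sum((y - forward(x, *params)[1]) ** 2 for x, y in data) / len(data)


def train(data, n_hidden=3, rate=0.5, max_epochs=5000, tol=0.01, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Step 1: initialize weights and biases to small random numbers.
    w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                for _ in range(n_hidden)]
    b_hidden = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    b_out = rng.uniform(-0.5, 0.5)
    for _ in range(max_epochs):
        for x, y in data:
            # Step 2: propagate the inputs forward.
            hidden, out = forward(x, w_hidden, b_hidden, w_out, b_out)
            # Step 3: backpropagate the error, output layer first.
            delta_out = (y - out) * out * (1 - out)
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                            for j in range(n_hidden)]
            for j in range(n_hidden):
                w_out[j] += rate * delta_out * hidden[j]
                b_hidden[j] += rate * delta_hidden[j]
                for i in range(n_in):
                    w_hidden[j][i] += rate * delta_hidden[j] * x[i]
            b_out += rate * delta_out
        # Step 4: terminating condition (error is very small).
        if mean_squared_error(data, (w_hidden, b_hidden, w_out, b_out)) < tol:
            break
    return w_hidden, b_hidden, w_out, b_out


params = train(DATA)
for x, y in DATA:
    print(x, "target:", y, "prediction:", round(forward(x, *params)[1], 3))
```

The hidden-layer deltas are computed before any output weight is changed, so each update uses the weights that produced the current prediction. Network size, learning rate, and tolerance here are exactly the kind of parameters the slides describe as best determined empirically.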