
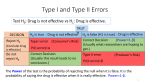

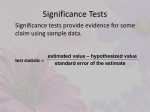

Lecture 2
Survey Data Analysis, Principal Component Analysis, Factor Analysis
Exemplified by SPSS
Taylan Mavruk

Topics:

1. Bivariate analysis (quantitative & qualitative variables)
2. Scatter-plot and correlation coefficient
3. The straight-line equation and regression
4. Coefficient of determination R2 (goodness of fit)
5. Hypothesis testing

X-variable (independent) in columns, Y-variable (dependent) in rows:

| | X qualitative | X quantitative |
|---|---|---|
| Y qualitative | Case 1 | Case - |
| Y quantitative | Case 2 | Case 3 |

Crosstabulation. Definition: the combination of frequencies of two (qualitative) variables.

Example: F7b_a * Stratum

F7b_a * Stratum Crosstabulation (count, with % within Stratum in parentheses)

| F7b_a | Stratum 1 | Stratum 2 | Stratum 3 | Stratum 4 | Stratum 5 | Stratum 6 | Total |
|---|---|---|---|---|---|---|---|
| 1 | 14 (27,5%) | 20 (36,4%) | 12 (22,6%) | 32 (36,0%) | 18 (21,7%) | 18 (22,0%) | 114 (27,6%) |
| 2 | 15 (29,4%) | 18 (32,7%) | 26 (49,1%) | 47 (52,8%) | 47 (56,6%) | 55 (67,1%) | 208 (50,4%) |
| 3 | 22 (43,1%) | 17 (30,9%) | 15 (28,3%) | 10 (11,2%) | 18 (21,7%) | 9 (11,0%) | 91 (22,0%) |
| Total | 51 (100,0%) | 55 (100,0%) | 53 (100,0%) | 89 (100,0%) | 83 (100,0%) | 82 (100,0%) | 413 (100,0%) |

Observe that the independent variable (stratum) should be the column variable if the assumption is that sick leave depends on the size of the company.

Is the identified dependency between the variables statistically significant, or is it due to chance? We test for dependency with a hypothesis test (Chi2-test):

H0: The variables are independent, i.e. there is no difference in the change in sick leave due to company size.
H1: The variables are dependent, i.e. there is a difference in …

Chi-Square Tests

| | Value | df | Asymp. Sig. (2-sided) |
|---|---|---|---|
| Pearson Chi-Square | 41,895 (a) | 10 | ,000 |
| Likelihood Ratio | 42,068 | 10 | ,000 |
| Linear-by-Linear Association | 3,281 | 1 | ,070 |
| N of Valid Cases | 413 | | |

a. 0 cells (,0%) have expected count less than 5. The minimum expected count is 11,24.

The Chi2-test tells us that we can reject H0: if the variables were independent, the probability of a difference at least this large arising by chance would be less than 1 in 1 000 (p-value = 0,000, i.e. p < 0,001).

From different values of X we can study the change in Y in the form of mean values.
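Before the mean-value example, the Chi2 statistic from the F7b_a * Stratum crosstabulation can be recomputed directly from the counts as a check. The sketch below uses only the Python standard library. The function name `pearson_chi2` is made up for this illustration, and the critical value 29.588 (chi-square distribution, 10 degrees of freedom, 0.001 level) is an assumption taken from a standard table, not from the lecture.

```python
# Observed counts from the F7b_a * Stratum crosstabulation
# (rows: F7b_a = 1, 2, 3; columns: Stratum 1..6).
observed = [
    [14, 20, 12, 32, 18, 18],
    [15, 18, 26, 47, 47, 55],
    [22, 17, 15, 10, 18, 9],
]


def pearson_chi2(table):
    """Return (chi2, df, minimum expected count) for a two-way table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    min_expected = float("inf")
    for i, row in enumerate(table):
        for j, obs in enumerate(row):
            # Expected count under independence: row total * column total / n
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (obs - expected) ** 2 / expected
            min_expected = min(min_expected, expected)
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return chi2, df, min_expected


chi2, df, min_expected = pearson_chi2(observed)

# Assumption: critical value for df = 10 at the 0.001 level,
# taken from a standard chi-square table (not from the lecture).
CRITICAL_0_001_DF10 = 29.588

print(f"chi2 = {chi2:.3f}, df = {df}, min expected = {min_expected:.2f}")
print("reject H0 at the 0.001 level:", chi2 > CRITICAL_0_001_DF10)
```

The statistic, the degrees of freedom and the minimum expected count should agree with the SPSS output (41,895; 10; 11,24), and the statistic lies well above the tabulated critical value, which is what a p-value below 0,001 means.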
Example: F5a * Stratum

Report (F5a)

| Stratum | Mean | N | Std. Deviation |
|---|---|---|---|
| 1 | 3.924 | 50 | 3.3303 |
| 2 | 4.529 | 55 | 2.8795 |
| 3 | 5.530 | 53 | 2.2802 |
| 4 | 7.044 | 89 | 1.9363 |
| 5 | 7.172 | 82 | 2.4085 |
| 6 | 7.056 | 82 | 2.3954 |
| Total | 6.161 | 411 | 2.7774 |

Measures of Association

| | Eta | Eta Squared |
|---|---|---|
| F5a * Stratum | ,449 | ,201 |

The Eta2 means that (only) 20 % of the variation in the Y-variable can be explained by the X-variable (size).

Is the identified dependency between the stratum means statistically significant, or is it due to chance? We test for dependency with a hypothesis test (Anova-test):

H0: There is no difference between the stratum means.
H1: Two or more of the mean values are different.

ANOVA (F5a)

| | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between Groups | 636,639 | 5 | 127,328 | 20,414 | ,000 |
| Within Groups | 2526,103 | 405 | 6,237 | | |
| Total | 3162,741 | 410 | | | |

The Anova-test tells us that we can reject H0: if the stratum means were equal, the probability of differences at least this large arising by chance would be less than 1 in 1 000 (p-value = 0,000, i.e. p < 0,001).

The connection between two quantitative variables is best presented by a scatter-plot, and the strength of the connection can be expressed by the coefficient of correlation r. The stronger the connection, the closer r is to +/- 1.

Correlations

| | | F5a | F4_Kv |
|---|---|---|---|
| F5a | Pearson Correlation | 1 | ,497\*\* |
| | Sig. (2-tailed) | | ,000 |
| | N | 413 | 413 |
| F4_Kv | Pearson Correlation | ,497\*\* | 1 |
| | Sig. (2-tailed) | ,000 | |
| | N | 413 | 416 |

\*\*. Correlation is significant at the 0.01 level (2-tailed).

The regression line: Y = β0 + β1X.
β0 is the intercept at which the line cuts the Y-axis and β1 is the regression coefficient (the slope of the line).

With an error term, the model is

$$y = \mu_{y|x} + \varepsilon = \beta_0 + \beta_1 x + \varepsilon$$

- $\mu_{y|x} = \beta_0 + \beta_1 x$ is the mean value of the dependent variable y when the value of the independent variable is x
- β0 is the y-intercept, the mean of y when x is 0
- β1 is the slope, the change in the mean of y per unit change in x
- ε is an error term that describes the effect on y of all factors other than x

β0 and β1 are called regression parameters. We do not know the true values of these parameters, so we must use sample data to estimate them: b0 is the estimate of β0 and b1 is the estimate of β1.

Estimated line in the example: Y = 3,595 + 0,048X

Squaring r (the coefficient of correlation) gives a measure of how well the line estimates Y. R2 (goodness of fit) is the proportion of the total variation in Y that can be explained by the linear relation X-Y. In the example r = ,497, so R2 is about 0,25.

Is the identified dependency between X and Y statistically significant, or is it due to chance? We test for dependency with a hypothesis test (F-test):

H0: There is no dependence, R2 = 0
H1: There is dependence, R2 > 0

(The F test tests the significance of the overall regression relationship between x and y.)

ANOVA (b)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 789,068 | 1 | 789,068 | 135,018 | ,000 (a) |
| Residual | 2401,960 | 411 | 5,844 | | |
| Total | 3191,028 | 412 | | | |

a. Predictors: (Constant), F4_Kv
b. Dependent Variable: F5a

The F-test tells us that we can reject H0: if there were no dependence, the probability of a relation at least this strong arising by chance would be less than 1 in 1 000 (p-value = 0,000, i.e. p < 0,001).

To test H0: β1 = 0 versus Ha: β1 ≠ 0 at the α level of significance, use the test statistic

$$F(\text{model}) = \frac{\text{Explained variation}}{(\text{Unexplained variation})/(n-2)}$$

Reject H0 if F(model) > $F_\alpha$ or if the p-value < α. $F_\alpha$ is based on 1 numerator and n - 2 denominator degrees of freedom.

Hypothesis testing:

- Null and Alternative Hypotheses and Errors in Testing
- t Tests about a Population Mean (std. unknown)
- z Tests about a Population Proportion

The null hypothesis, denoted H0, is a statement of the basic proposition being tested.
The statement generally represents the status quo and is not rejected unless there is convincing sample evidence that it is false. The alternative or research hypothesis, denoted Ha, is an alternative (to the null hypothesis) statement that will be accepted only if there is convincing sample evidence that it is true.

Type I Error: Rejecting H0 when it is true
Type II Error: Failing to reject H0 when it is false

| Conclusion | State of Nature: H0 True | State of Nature: H0 False |
|---|---|---|
| Reject H0 | Type I Error | Correct Decision |
| Do not Reject H0 | Correct Decision | Type II Error |

Error Probabilities

- α is the probability of making a Type I error; 1 - α is the probability of not making a Type I error
- β is the probability of making a Type II error; 1 - β is the probability of not making a Type II error

| Conclusion | State of Nature: H0 True | State of Nature: H0 False |
|---|---|---|
| Reject H0 | α | 1 - β |
| Do not Reject H0 | 1 - α | β |

- α is usually set to a low value
  - So that there is only a small chance of rejecting a true H0
  - Typically, α = 0.05. For α = 0.05, strong evidence is required to reject H0
  - Usually choose α between 0.01 and 0.05. α = 0.01 requires very strong evidence to reject H0
- Sometimes α is chosen as high as 0.10
  - Tradeoff between α and β: for a fixed sample size, the lower we set α, the higher is β, and the higher α, the lower β

t test about a population mean (standard deviation unknown):

Let $\bar{x}$ be the mean of a sample of size n with standard deviation s. Also, $\mu_0$ is the claimed value of the population mean. Define a new test statistic

$$t = \frac{\bar{x} - \mu_0}{s/\sqrt{n}}$$

If the population being sampled is normal, and if s is used to estimate σ, then the sampling distribution of the t statistic is a t distribution with n - 1 degrees of freedom.

| Alternative | Reject H0 if: | p-value |
|---|---|---|
| Ha: $\mu > \mu_0$ | $t > t_{\alpha}$ | Area under t distribution to the right of t |
| Ha: $\mu < \mu_0$ | $t < -t_{\alpha}$ | Area under t distribution to the left of t |
| Ha: $\mu \neq \mu_0$ | $\lvert t \rvert > t_{\alpha/2}$ \* | Twice the area under t distribution to the right of $\lvert t \rvert$ |

\* either $t > t_{\alpha/2}$ or $t < -t_{\alpha/2}$

$t_{\alpha}$, $t_{\alpha/2}$, and p-values are based on n - 1 degrees of freedom (for a sample of size n).

z test about a population proportion:

If the sample size n is large, we can reject H0: p = p0 at the α level of significance (probability of Type I error equal to α) if and only if the appropriate rejection point condition holds or, equivalently, if the corresponding p-value is less than α. We have the following rules:

| Alternative | Reject H0 if: | p-value |
|---|---|---|
| Ha: $p > p_0$ | $z > z_{\alpha}$ | Area under standard normal to the right of z |
| Ha: $p < p_0$ | $z < -z_{\alpha}$ | Area under standard normal to the left of z |
| Ha: $p \neq p_0$ | $\lvert z \rvert > z_{\alpha/2}$ \* | Twice the area under standard normal to the right of $\lvert z \rvert$ |

\* either $z > z_{\alpha/2}$ or $z < -z_{\alpha/2}$

where the test statistic is

$$z = \frac{\hat{p} - p_0}{\sqrt{p_0 (1 - p_0)/n}}$$

Principal Component Analysis (PCA) and Factor Analysis (FA)

You have a set of p continuous variables. You want to repackage their variance into m components. You will usually want m to be < p. Each component is a weighted linear combination of the variables.

Uses of PCA and FA:

- Data reduction.
- Discover and summarize the pattern of intercorrelations among variables.
- Test theory about the latent variables underlying a set of measurement variables.
- Construct a test instrument.
- There are many other uses of PCA and FA.

A principal component is a linear combination of weighted observed variables. In PCA, the goal is to explain as much of the total variance in the variables as possible. The goal in Factor Analysis is to explain the covariances or correlations between the variables.

PCA: Reduce multiple observed variables into fewer components that summarize their variance.
FA: Determine the nature of and the number of latent variables that account for observed variation and covariation among a set of observed indicators.

Use Principal Components Analysis to reduce the data into a smaller number of components. Use Factor Analysis to understand.

Running the analysis in SPSS:

1. Click Analyze, Data Reduction, Factor.
2. Click Descriptives and then check Initial Solution, Coefficients, KMO and Bartlett's Test of Sphericity, and Anti-image. Click Continue.
3. Click Extraction and then select Principal Components, Correlation Matrix, Unrotated Factor Solution, Scree Plot, and Eigenvalues Over 1. Click Continue.
4. Click Rotation. Select Varimax and Rotated Solution. Click Continue.
5. Click Options. Select Exclude Cases Listwise and Sorted By Size. Click Continue.
6. Click OK, and SPSS completes the Principal Components Analysis.

KMO and Bartlett's Test

| | | |
|---|---|---|
| Kaiser-Meyer-Olkin Measure of Sampling Adequacy | | .665 |
| Bartlett's Test of Sphericity | Approx. Chi-Square | 1637.9 |
| | df | 21 |
| | Sig. | .000 |

- Check the correlation matrix: if there are any variables not well correlated with some others, you might as well delete them.
- Bartlett's test of sphericity tests the null hypothesis that the correlation matrix is an identity matrix, but it does not help identify individual variables that are not well correlated with others.
- For each variable, check R2 between it and the remaining variables. SPSS reports these as the initial communalities when you do a principal axis factor analysis. Delete any variable with a low R2.
- Look at partial correlations: pairs of variables with large partial correlations share variance with one another but not with the remaining variables, which is problematic.
- Kaiser's MSA will tell you, for each variable, how much of this problem exists. The smaller the MSA, the greater the problem. Variables with small MSAs should be deleted.

From p variables we can extract p components. Each of the p eigenvalues represents the amount of standardized variance that has been captured by one component. The first component accounts for the largest possible amount of variance. The second captures as much as possible of what is left over, and so on. Each is orthogonal to the others.

Example for the eigenvalues and proportions of variance for the seven components:

- Only the first two components have eigenvalues greater than 1.
- There is a big drop in eigenvalue between component 2 and component 3.
- Components 3-7 are scree.
- Try a 2 component solution.
- One should also look at the solutions with one fewer and with one more component.
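The eigenvalue rules for the seven-component example can be sketched in a few lines. The eigenvalues below are the ones printed in the SPSS output for this example, where the last two are shown rounded as 9.E-02 and 4.E-02, so the percentages come out approximate. Dividing by p assumes the analysis was run on the correlation matrix (standardized variables), as selected in the Extraction step.

```python
# Eigenvalues of the seven components as printed in the SPSS output
# (the last two are shown rounded there as 9.E-02 and 4.E-02).
eigenvalues = [3.313, 2.616, 0.575, 0.240, 0.134, 0.09, 0.04]

# With standardized variables the total variance equals the number of variables.
p = len(eigenvalues)

# Proportion of total variance captured by each component
proportions = [ev / p for ev in eigenvalues]

# Running total of the proportions
cumulative = []
running = 0.0
for prop in proportions:
    running += prop
    cumulative.append(running)

# "Eigenvalues over 1" rule: retain components whose eigenvalue exceeds 1
retained = [i + 1 for i, ev in enumerate(eigenvalues) if ev > 1]

for i, (ev, prop, cum) in enumerate(zip(eigenvalues, proportions, cumulative), start=1):
    print(f"component {i}: eigenvalue {ev:.3f}, {prop:6.1%} of variance, cumulative {cum:6.1%}")
print("retained by the eigenvalue > 1 rule:", retained)
```

The rule keeps components 1 and 2, which together account for roughly 85 % of the variance. This is only the mechanical criterion; the scree plot and the neighbouring solutions should still be inspected.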
[Scree plot: eigenvalue (vertical axis, 0.0 to 3.5) against component number 1-7 (horizontal axis)]

Initial Eigenvalues

| Component | Total | % of Variance | Cumulative % |
|---|---|---|---|
| 1 | 3.313 | 47.327 | 47.327 |
| 2 | 2.616 | 37.369 | 84.696 |
| 3 | .575 | 8.209 | 92.905 |
| 4 | .240 | 3.427 | 96.332 |
| 5 | .134 | 1.921 | 98.252 |
| 6 | 9.E-02 | 1.221 | 99.473 |
| 7 | 4.E-02 | .527 | 100.000 |

Extraction Method: Principal Component Analysis.

Kaiser-Meyer-Olkin measure of sampling adequacy

| Variable | kmo |
|---|---|
| gini | 0.9897 |
| herfindal_~x | 0.8414 |
| first_second | 0.8612 |
| sumfive | 0.8895 |
| one_sumtwo~r | 0.8501 |
| largest_ow~r | 0.8785 |
| ssd5l | 0.8994 |
| ssc5l | 0.9252 |
| ssd5o | 0.8498 |
| ssc5o | 0.9210 |
| ssd5_l | 0.9181 |
| ssd5_o | 0.9250 |
| ssc5_l | 0.9117 |
| ssc5_o | 0.8657 |
| bzc5l | 0.8272 |
| bzc5o | 0.8258 |
| bzd5l | 0.8629 |
| bzd5_l | 0.9557 |
| bzc5_l | 0.8337 |
| bzc5_o | 0.8290 |
| Overall | 0.8771 |

Principal components/correlation
Rotation: (unrotated = principal)
Number of obs = 240
Number of comp. = 3
Trace = 20
Rho = 0.9336

| Component | Eigenvalue | Difference | Proportion | Cumulative |
|---|---|---|---|---|
| Comp1 | 15.421 | 13.6874 | 0.7710 | 0.7710 |
| Comp2 | 1.73364 | .21676 | 0.0867 | 0.8577 |
| Comp3 | 1.51688 | .95506 | 0.0758 | 0.9336 |
| Comp4 | .56182 | .292043 | 0.0281 | 0.9617 |
| Comp5 | .269777 | .0857398 | 0.0135 | 0.9752 |
| Comp6 | .184037 | .0580413 | 0.0092 | 0.9844 |
| Comp7 | .125996 | .0523902 | 0.0063 | 0.9907 |
| Comp8 | .0736059 | .0371313 | 0.0037 | 0.9943 |
| Comp9 | .0364746 | .00642889 | 0.0018 | 0.9962 |
| Comp10 | .0300457 | .0117238 | 0.0015 | 0.9977 |
| Comp11 | .018322 | .00551142 | 0.0009 | 0.9986 |
| Comp12 | .0128105 | .00327624 | 0.0006 | 0.9992 |
| Comp13 | .00953429 | .00669918 | 0.0005 | 0.9997 |
| Comp14 | .00283511 | .0014001 | 0.0001 | 0.9998 |
| Comp15 | .001435 | .000438626 | 0.0001 | 0.9999 |
| Comp16 | .000996377 | .000491144 | 0.0000 | 1.0000 |
| Comp17 | .000505233 | .000321445 | 0.0000 | 1.0000 |
| Comp18 | .000183788 | .0000852056 | 0.0000 | 1.0000 |
| Comp19 | .0000985823 | .0000879645 | 0.0000 | 1.0000 |
| Comp20 | .0000106178 | . | 0.0000 | 1.0000 |

PCA SHOWS THAT THE FIRST 3 COMPONENTS EXPLAIN 93.36% OF THE VARIATION IN THE FACTOR, OWNERSHIP CONCENTRATION. THE EIGENVALUES FOR THESE 3 COMPONENTS ARE GREATER THAN ONE. THUS THESE THREE COMPONENTS CAN BE USED IN FURTHER ANALYSIS AS OWNERSHIP CONCENTRATION MEASURES. NEXT, WE LOOK AT THE FACTOR LOADINGS.
Rotated components

| Variable | Comp1 | Comp2 | Comp3 | Unexplained |
|---|---|---|---|---|
| gini | 0.2696 | -0.2893 | -0.0470 | .4403 |
| herfindal_~x | 0.1787 | -0.0113 | 0.2912 | .0764 |
| first_second | -0.0211 | -0.0094 | 0.6643 | .04202 |
| sumfive | 0.3090 | -0.1809 | -0.0025 | .08146 |
| one_sumtwo~r | -0.0063 | -0.0077 | 0.6525 | .02178 |
| largest_ow~r | 0.1936 | 0.0913 | 0.1714 | .02779 |
| ssd5l | 0.2098 | 0.1645 | 0.0232 | .02214 |
| ssc5l | 0.2115 | 0.1562 | 0.0220 | .03451 |
| ssd5o | -0.2970 | 0.0613 | 0.0298 | .01334 |
| ssc5o | -0.2941 | 0.0539 | 0.0312 | .0219 |
| ssd5_l | 0.2092 | 0.1669 | 0.0220 | .02217 |
| ssd5_o | -0.3068 | 0.1082 | 0.0259 | .02161 |
| ssc5_l | 0.2120 | 0.1539 | 0.0294 | .02427 |
| ssc5_o | -0.3076 | 0.1138 | 0.0219 | .0194 |
| bzc5l | 0.1666 | 0.2884 | -0.0257 | .0413 |
| bzc5o | -0.2480 | -0.1010 | 0.0688 | .06749 |
| bzd5l | 0.0470 | 0.4848 | -0.0530 | .1403 |
| bzd5_l | -0.0729 | 0.6045 | 0.0014 | .1602 |
| bzc5_l | 0.1983 | 0.2141 | -0.0087 | .01959 |
| bzc5_o | -0.2914 | 0.0311 | 0.0537 | .03062 |

BASED ON OBSERVING THE FACTOR LOADINGS OVER 20% (OF COURSE THIS CHOICE IS ARBITRARY, WE SHOULD TAKE THE HIGH CORRELATIONS), WE CAN GROUP THE OWNERSHIP VARIABLES.
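One possible way to carry out this grouping is sketched below. The loadings are copied from the rotated components output. Comparing the absolute value of each loading with 0.20 is an assumption made for this illustration (the lecture only says "over 20%"), and the names `THRESHOLD`, `groups` and `unassigned` are made up here.

```python
# Rotated loadings (Comp1, Comp2, Comp3) from the rotated components output.
loadings = {
    "gini":         (0.2696, -0.2893, -0.0470),
    "herfindal_~x": (0.1787, -0.0113, 0.2912),
    "first_second": (-0.0211, -0.0094, 0.6643),
    "sumfive":      (0.3090, -0.1809, -0.0025),
    "one_sumtwo~r": (-0.0063, -0.0077, 0.6525),
    "largest_ow~r": (0.1936, 0.0913, 0.1714),
    "ssd5l":        (0.2098, 0.1645, 0.0232),
    "ssc5l":        (0.2115, 0.1562, 0.0220),
    "ssd5o":        (-0.2970, 0.0613, 0.0298),
    "ssc5o":        (-0.2941, 0.0539, 0.0312),
    "ssd5_l":       (0.2092, 0.1669, 0.0220),
    "ssd5_o":       (-0.3068, 0.1082, 0.0259),
    "ssc5_l":       (0.2120, 0.1539, 0.0294),
    "ssc5_o":       (-0.3076, 0.1138, 0.0219),
    "bzc5l":        (0.1666, 0.2884, -0.0257),
    "bzc5o":        (-0.2480, -0.1010, 0.0688),
    "bzd5l":        (0.0470, 0.4848, -0.0530),
    "bzd5_l":       (-0.0729, 0.6045, 0.0014),
    "bzc5_l":       (0.1983, 0.2141, -0.0087),
    "bzc5_o":       (-0.2914, 0.0311, 0.0537),
}

# Assumption: a loading counts as "over 20%" when its absolute value exceeds 0.20.
THRESHOLD = 0.20

groups = {"Comp1": [], "Comp2": [], "Comp3": []}
unassigned = []

for variable, values in loadings.items():
    # Components on which this variable loads above the threshold
    hits = [comp for comp, value in zip(groups, values) if abs(value) > THRESHOLD]
    for comp in hits:
        groups[comp].append(variable)
    if not hits:
        unassigned.append(variable)

for comp, members in groups.items():
    print(f"{comp}: {', '.join(members)}")
print("below the threshold on every component:", ", ".join(unassigned))
```

Under this rule a variable can fall into more than one group (gini loads on both Comp1 and Comp2), and one variable (largest_ow~r) stays below the cut-off on all three components. Both outcomes depend on the arbitrary threshold, so a different cut-off would give a different grouping.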