Full proceedings volume - Institute of Formal and Applied Linguistics

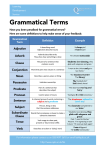
