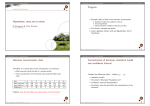

Course 02402/02323 Introduction to Statistics
Lecture 5: Hypothesis test, power and model control - one sample

Per Bruun Brockhoff
DTU Compute, Danish Technical University, 2800 Lyngby – Denmark
e-mail: [email protected]
Fall 2015

Overview

1. Motivating example - sleeping medicine
2. One-sample t-test and p-value
  - p-values and hypothesis tests (COMPLETELY general)
  - Critical value and confidence interval
3. Hypothesis test with alternatives
  - Hypothesis test - the general method
4. Planning: Power and sample size
5. Checking the normality assumption
  - The Normal QQ plot
  - Transformation towards normality

Motivating example - sleeping medicine

Difference of sleeping medicines?

In a study the aim is to compare two kinds of sleeping medicine, A and B. 10 test persons tried both kinds of medicine, and the following 10 DIFFERENCES between the two medicine types were measured (for person 1, sleep medicine B gave 1.2 hours more sleep than medicine A, etc.):

Sample, n = 10:

| person | x = Beffect - Aeffect |
|--------|-----------------------|
| 1      | 1.2                   |
| 2      | 2.4                   |
| 3      | 1.3                   |
| 4      | 1.3                   |
| 5      | 0.9                   |
| 6      | 1.0                   |
| 7      | 1.8                   |
| 8      | 0.8                   |
| 9      | 4.6                   |
| 10     | 1.4                   |

The hypothesis of no difference:

$$ H_0: \mu = 0 $$

Sample mean and standard deviation:

$$ \bar{x} = 1.670 = \hat{\mu}, \qquad s = 1.13 = \hat{\sigma} $$

Is the data in accordance with the null hypothesis $H_0$?

Data: $\bar{x} = 1.67$, $H_0: \mu = 0$

NEW: p-value: p-value = 0.00117 (computed under the scenario that $H_0$ is true)

NEW: Conclusion: Since the observed data lie implausibly far from what $H_0$ predicts, we reject $H_0$. We have found a significant effect of sleep medicine B as compared to A.
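The phrase "computed under the scenario that H0 is true" can be illustrated by simulation. The sketch below is not part of the lecture: it assumes normally distributed differences, generates many hypothetical studies of size n = 10 in which the true mean is 0, and counts how often the standardized sample mean is at least as extreme as the one observed. The seed and the number of simulated studies `B` are arbitrary choices.

```r
## Illustrative sketch (not from the lecture): approximate the p-value by
## simulating the standardized sample mean in a world where H0 is true.
set.seed(1)                      # arbitrary seed, for reproducibility only
x <- c(1.2, 2.4, 1.3, 1.3, 0.9, 1.0, 1.8, 0.8, 4.6, 1.4)
n <- length(x)

## Observed standardized distance between the sample mean and 0
tobs <- (mean(x) - 0) / (sd(x) / sqrt(n))

B <- 100000                      # number of simulated studies (assumption)
## Each column is one simulated sample of size n from N(0, 1).
## The statistic is scale-free, so the choice of sd = 1 does not matter.
sim <- matrix(rnorm(n * B), nrow = n)
m <- colMeans(sim)
s <- sqrt((colSums(sim^2) - n * m^2) / (n - 1))   # column-wise sample sd
tsim <- m / (s / sqrt(n))

## Fraction of simulated studies at least as extreme as the observed one
p_sim <- mean(abs(tsim) >= abs(tobs))
p_sim
```

The simulated fraction should land close to the p-value of 0.00117 quoted above, up to simulation noise.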
One-sample t-test and p-value

Method 3.22: One-sample t-test and p-value

How to compute the p-value? For a (quantitative) one-sample situation, the (non-directional) p-value is given by:

$$ p\text{-value} = 2 \cdot P(T > |t_{\text{obs}}|) $$

where $T$ follows a t-distribution with $(n - 1)$ degrees of freedom. The observed value of the test statistic to be computed is

$$ t_{\text{obs}} = \frac{\bar{x} - \mu_0}{s / \sqrt{n}} $$

where $\mu_0$ is the value of $\mu$ under the null hypothesis:

$$ H_0: \mu = \mu_0 $$

The definition and interpretation of the p-value (COMPLETELY general)

The p-value expresses the evidence against the null hypothesis – Table 3.1:

| p-value          | Interpretation                      |
|------------------|-------------------------------------|
| p < 0.001        | Very strong evidence against H0     |
| 0.001 ≤ p < 0.01 | Strong evidence against H0          |
| 0.01 ≤ p < 0.05  | Some evidence against H0            |
| 0.05 ≤ p < 0.1   | Weak evidence against H0            |
| p ≥ 0.1          | Little or no evidence against H0    |

Definition 3.12 of the p-value:
The p-value is the probability of obtaining a test statistic that is at least as extreme as the test statistic that was actually observed. This probability is calculated under the assumption that the null hypothesis is true.

Example - sleeping medicine

The hypothesis of no difference:

$$ H_0: \mu = 0 $$

Compute the test statistic:

$$ t_{\text{obs}} = \frac{1.67 - 0}{1.13 / \sqrt{10}} = 4.67 $$

Compute the p-value:

$$ 2 P(T > 4.67) = 0.00117 $$

    2 * (1-pt(4.67, 9))
    ## [1] 0.0011659

Interpretation of the p-value in light of Table 3.1: There is strong evidence against the null hypothesis.

Example - sleeping medicine - in R - manually

    ## Enter data:
    x <- c(1.2, 2.4, 1.3, 1.3, 0.9, 1.0, 1.8, 0.8, 4.6, 1.4)
    n <- length(x)
    ## Compute the tobs - the observed test statistic:
    tobs <- (mean(x) - 0) / (sd(x) / sqrt(n))
    ## Compute the p-value as a tail-probability in the t-distribution:
    pvalue <- 2 * (1-pt(abs(tobs), df=n-1))
    pvalue

Example - sleeping medicine - in R - with inbuilt function

    t.test(x)
    ##
    ##  One Sample t-test
    ##
    ## data:  x
    ## t = 4.67, df = 9, p-value = 0.0012
    ## alternative hypothesis: true mean is not equal to 0
    ## 95 percent confidence interval:
    ##  0.86133 2.47867
    ## sample estimates:
    ## mean of x
    ##      1.67

The definition of hypothesis test and significance (generally)

Definition 3.23. Hypothesis test:
We say that we carry out a hypothesis test when we use the data to decide whether or not to reject a null hypothesis.
A null hypothesis is rejected if the p-value, calculated after the data has been observed, is less than some α, that is, if the p-value < α, where α is some pre-specified (so-called) significance level. If not, the null hypothesis is said to be accepted.

Definition 3.28. Statistical significance:
An effect is said to be (statistically) significant if the p-value is less than the significance level α. (OFTEN we use α = 0.05)

Example - sleeping medicine

With α = 0.05 we can conclude: Since the p-value is less than α, we reject the null hypothesis.
And hence: We have found a significant effect of medicine B as compared to A. (And hence that B works better than A.)

Critical value

Definition 3.30 - the critical values of the t-test:
The (1 − α)100% critical values for the (non-directional) one-sample t-test are the (α/2)100% and (1 − α/2)100% quantiles of the t-distribution with n − 1 degrees of freedom:

$$ t_{\alpha/2} \quad \text{and} \quad t_{1-\alpha/2} $$

Method 3.31: One-sample t-test by critical value:
A null hypothesis is rejected if the observed test statistic is more extreme than the critical values:

If $|t_{\text{obs}}| > t_{1-\alpha/2}$ then reject, otherwise accept.

Critical value and hypothesis test

The acceptance region consists of the values for µ not too far away from the data - here on the standardized scale:

[Figure: acceptance region between $t_{0.025}$ and $t_{0.975}$, centered at 0, with rejection regions on both sides.]

The acceptance region consists of the values for µ not too far away from the data - now on the original scale:

[Figure: acceptance region between $\bar{x} - t_{0.975}\, s/\sqrt{n}$ and $\bar{x} + t_{0.975}\, s/\sqrt{n}$, centered at $\bar{x}$, with rejection regions on both sides.]
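The critical value approach of Method 3.31 and the acceptance region on the original scale can be computed directly for the sleeping medicine data. This is a minimal sketch using α = 0.05; the variable names `tcrit`, `reject` and `accept_region` are illustrative and not from the lecture.

```r
## Sketch of Method 3.31 on the sleeping medicine data (alpha = 0.05)
x <- c(1.2, 2.4, 1.3, 1.3, 0.9, 1.0, 1.8, 0.8, 4.6, 1.4)
n <- length(x)
alpha <- 0.05

## Observed test statistic for H0: mu = 0
tobs <- (mean(x) - 0) / (sd(x) / sqrt(n))

## Upper critical value t_{1 - alpha/2} with n - 1 degrees of freedom
tcrit <- qt(1 - alpha / 2, df = n - 1)

## Decision by critical value: reject if |tobs| exceeds the critical value
reject <- abs(tobs) > tcrit

## Acceptance region on the original scale: xbar -/+ tcrit * s / sqrt(n)
accept_region <- mean(x) + c(-1, 1) * tcrit * sd(x) / sqrt(n)

tcrit
reject
accept_region
```

The interval obtained this way has the same endpoints as the 95 percent confidence interval printed by `t.test(x)`, and 0 lies outside it, in agreement with the rejection of H0.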
Critical value, confidence interval and hypothesis test

Theorem 3.32: Critical value method = Confidence interval method
We consider a (1 − α) · 100% confidence interval for µ:

$$ \bar{x} \pm t_{1-\alpha/2} \cdot \frac{s}{\sqrt{n}} $$

The confidence interval corresponds to the acceptance region for $H_0$ when testing the (non-directional) hypothesis

$$ H_0: \mu = \mu_0 $$

(New) interpretation of the confidence interval:
The confidence interval covers those values of the parameter that we believe in given the data, that is, those values that we accept by the corresponding hypothesis test.

Proof: Remark 3.33
A $\mu_0$ inside the confidence interval will fulfill

$$ |\bar{x} - \mu_0| < t_{1-\alpha/2} \cdot \frac{s}{\sqrt{n}} $$

which is equivalent to

$$ \frac{|\bar{x} - \mu_0|}{s/\sqrt{n}} < t_{1-\alpha/2} $$

and again to

$$ |t_{\text{obs}}| < t_{1-\alpha/2} $$

which then exactly states that $\mu_0$ is accepted, since $t_{\text{obs}}$ is within the critical values.

Hypothesis test with alternatives

So far - implied (= non-directional):
The alternative to $H_0: \mu = \mu_0$ is $H_1: \mu \neq \mu_0$

BUT there are other possible settings, e.g. one-sided (= directional), "less":
The alternative to $H_0: \mu \geq \mu_0$ is $H_1: \mu < \mu_0$

Or one-sided (= directional), "greater":
The alternative to $H_0: \mu \leq \mu_0$ is $H_1: \mu > \mu_0$

Example - PC screens

Product specification
A manufacturer of computer screens claims that the screens on average use 83W. Furthermore we can assume that the usage is normally distributed with some variance $\sigma^2$ (W)$^2$. The underlying insight that makes this a one-sided situation is that having a mean usage below 83W would be just fine for the company.

IF the company should document the claim:
Null hypothesis: $H_0: \mu \geq 83$. The alternative: $H_1: \mu < 83$.
With the purpose to be able to reject (= falsify) that the usage could be greater.

IF an external party should document that the claim is wrong:
Null hypothesis: $H_0: \mu \leq 83$. The alternative: $H_1: \mu > 83$.
With the purpose to be able to reject (= falsify) that the usage is no larger than 83.

Hypothesis test - the general method

Method 3.36. Steps in hypothesis tests - an overview
Generally a hypothesis test consists of the following steps:

1. Formulate the hypotheses and choose the level of significance α (choose the "risk-level")
2. Calculate, using the data, the value of the test statistic
3. Calculate the p-value using the test statistic and the relevant sampling distribution, compare the p-value with the significance level α, and make a conclusion
4. (Alternatively, make a conclusion based on the relevant critical value(s))

The two-sided (non-directional) one-sample t-test again

Method 3.37. The level α test is:

1. Compute $t_{\text{obs}}$ as before
2. Compute the evidence against the null hypothesis $H_0: \mu = \mu_0$ vs. the alternative hypothesis $H_1: \mu \neq \mu_0$ by the p-value $= 2 \cdot P(T > |t_{\text{obs}}|)$, where the t-distribution with n − 1 degrees of freedom is used.
3. If p-value < α: We reject $H_0$, otherwise we accept $H_0$.
4. The rejection/acceptance conclusion could alternatively, but equivalently, be made based on the critical value(s) $\pm t_{1-\alpha/2}$: If $|t_{\text{obs}}| > t_{1-\alpha/2}$ we reject $H_0$, otherwise we accept $H_0$.

The one-sided (directional) one-sample t-test, "less"

Method 3.38. The level α one-sided ("less") test is:

1. Compute $t_{\text{obs}}$ as before
2. Compute the evidence against the null hypothesis $H_0: \mu \geq \mu_0$ vs. the alternative hypothesis $H_1: \mu < \mu_0$ by the p-value $= P(T < t_{\text{obs}})$, where the t-distribution with n − 1 degrees of freedom is used.
3. If p-value < α: We reject $H_0$, otherwise we accept $H_0$.
4. The rejection/acceptance conclusion could alternatively, but equivalently, be made based on the critical value $t_{\alpha}$: If $t_{\text{obs}} < t_{\alpha}$ we reject $H_0$, otherwise we accept $H_0$.

The one-sided (directional) one-sample t-test, "greater"

Method 3.39. The level α one-sided ("greater") test is:

1. Compute $t_{\text{obs}}$ as before
2. Compute the evidence against the null hypothesis $H_0: \mu \leq \mu_0$ vs. the alternative hypothesis $H_1: \mu > \mu_0$ by the p-value $= P(T > t_{\text{obs}})$, where the t-distribution with n − 1 degrees of freedom is used.
3. If p-value < α: We reject $H_0$, otherwise we accept $H_0$.
4. The rejection/acceptance conclusion could alternatively, but equivalently, be made based on the critical value $t_{1-\alpha}$: If $t_{\text{obs}} > t_{1-\alpha}$ we reject $H_0$, otherwise we accept $H_0$.

Example - PC screens

Can we reject the claim of the producer?
A group of consumers wants to test the manufacturer's claim, and 12 measurements of the usage are performed:

82 86 84 84 92 83 93 80 83 84 85 86

From this we estimate the mean usage at $\bar{x} = 85.17$ and $s = 3.8099$.

Hence, one-sided "greater" is the relevant test:
Null hypothesis: $H_0: \mu \leq 83$. The alternative: $H_1: \mu > 83$.

Compute the test statistic and the p-value:

$$ t_{\text{obs}} = \frac{85.17 - 83}{3.8099 / \sqrt{12}} = 1.97 $$

$$ p\text{-value} = P(T > 1.97) = 0.0373 $$

Conclusion using α = 0.05: The p-value is below α, so we reject the null hypothesis. We have documented that the screens' mean usage is significantly greater than 83W.

    x <- c(82, 86, 84, 84, 92, 83, 93, 80, 83, 84, 85, 86)
    t.test(x, mu = 83, alternative = "greater")
    ##
    ##  One Sample t-test
    ##
    ## data:  x
    ## t = 1.97, df = 11, p-value = 0.037
    ## alternative hypothesis: true mean is greater than 83
    ## 95 percent confidence interval:
    ##  83.192    Inf
    ## sample estimates:
    ## mean of x
    ##    85.167

Errors in hypothesis testing

Two kinds of errors can occur (but only one at a time!):
Type I: Rejection of $H_0$ when $H_0$ is true
Type II: Non-rejection (acceptance) of $H_0$ when $H_1$ is true

The risks of the two types of errors:

$$ P(\text{Type I error}) = \alpha, \qquad P(\text{Type II error}) = \beta $$

Court of law analogy

A man is standing in a court of law accused of criminal activity.
The null and the alternative hypotheses are:

$H_0$: The man is not guilty
$H_1$: The man is guilty

That you cannot be proved guilty is not the same as being proved innocent.
Or differently put: Accepting a null hypothesis is NOT a statistical proof of the null hypothesis being true!

Errors in hypothesis testing

Theorem 3.43: Significance level = The risk of a Type I error
The significance level α in hypothesis testing is the overall Type I risk:

$$ P(\text{Type I error}) = P(\text{Rejection of } H_0 \text{ when } H_0 \text{ is true}) = \alpha $$

Two possible truths vs. two possible conclusions:

|             | Reject H0                       | Fail to reject H0        |
|-------------|---------------------------------|--------------------------|
| H0 is true  | Type I error (α)                | Correct acceptance of H0 |
| H0 is false | Correct rejection of H0 (Power) | Type II error (β)        |

Planning: Power and sample size

Planning, Power

What is the power of a future study/experiment:
- The probability of detecting an (assumed) effect
- P(Reject $H_0$) when $H_1$ is true
- Probability of correct rejection of $H_0$

Challenge: The null hypothesis can be wrong in many ways!
Practically: Scenario based approach
- E.g. "What if µ = 86, how good will my study be to detect this?"
- E.g. "What if µ = 84, how good will my study be to detect this?"
- etc.

Planning and power

When the test to use has been set: If you know (or set/guess) four out of the following five pieces of information, you can find the fifth:
- The sample size n.
- Significance level α of the test.
- A change in mean that you would want to detect (effect size) $\mu_0 - \mu_1$.
- The population standard deviation, σ.
- The power (1 − β).
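The "four out of five" principle can be tried out with R's `power.t.test()`, which solves for whichever of `n`, `delta`, `sd`, `power` and `sig.level` is left unspecified. The sketch below fixes the sample size, standard deviation, significance level and power, and asks for the change in mean that can be detected. The numeric inputs are illustrative assumptions, not results from a study.

```r
## Sketch: given n, sd, sig.level and power, solve for the detectable change
## in mean (delta). The four input values are illustrative assumptions.
res <- power.t.test(n = 40, sd = 12.21, sig.level = 0.05, power = 0.80,
                    type = "one.sample", alternative = "one.sided")
res$delta

## Round trip: plugging the solved delta back in should return the power
## that was requested above.
check <- power.t.test(n = 40, delta = res$delta, sd = 12.21,
                      sig.level = 0.05,
                      type = "one.sample", alternative = "one.sided")
check$power
```

The same call pattern applies to any of the other quantities: leave out exactly one of them and supply the remaining four.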
Low power example

[Figure: probability densities under $H_0$ and under $H_1$, with means $\mu_0$ and $\mu_1$ on the horizontal axis; the areas α, β and Power are marked. Caption: Low power.]

High power example

[Figure: probability densities under $H_0$ and under $H_1$, with means $\mu_0$ and $\mu_1$ on the horizontal axis; the areas α, β and Power are marked. Caption: High power.]

Planning, Sample size n

The big practical question: What should n be?
The experiment should be large enough to detect a relevant effect with high power (usually at least 80%).

Method 3.48: The one-sample, one-sided sample size formula:
For the one-sided, one-sample t-test for given α, β and σ:

$$ n = \left( \sigma \, \frac{z_{1-\beta} + z_{1-\alpha}}{\mu_0 - \mu_1} \right)^2 $$

where $\mu_0 - \mu_1$ is the change in means that we would want to detect and $z_{1-\beta}$, $z_{1-\alpha}$ are quantiles of the standard normal distribution.

Example - The power for n = 40

    power.t.test(n = 40, delta = 4, sd = 12.21, type = "one.sample",
                 alternative = "one.sided")
    ##
    ##      One-sample t test power calculation
    ##
    ##               n = 40
    ##           delta = 4
    ##              sd = 12.21
    ##       sig.level = 0.05
    ##           power = 0.65207
    ##     alternative = one.sided
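The sample size formula of Method 3.48 can also be evaluated by hand in R. The sketch below plugs in the same standard deviation and change in mean as the power example (σ = 12.21, µ0 − µ1 = 4) together with α = 0.05 and a target power of 0.80. The variable names are illustrative.

```r
## Sketch: evaluate the one-sided, one-sample sample size formula directly
sigma <- 12.21      # assumed population standard deviation
delta <- 4          # change in mean to detect, mu0 - mu1
alpha <- 0.05       # significance level
beta  <- 0.20       # Type II risk, i.e. power = 1 - beta = 0.80

z_beta  <- qnorm(1 - beta)     # z_{1 - beta}
z_alpha <- qnorm(1 - alpha)    # z_{1 - alpha}

n_formula <- (sigma * (z_beta + z_alpha) / delta)^2
n_formula            # about 57.6
ceiling(n_formula)   # round up to a whole number of observations: 58
```

Because the formula uses normal quantiles rather than the t-distribution, the result is an approximation and tends to be slightly smaller than what an exact t-based calculation returns.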
Example - The sample size for power = 0.80

    power.t.test(power = .80, delta = 4, sd = 12.21, type = "one.sample",
                 alternative = "one.sided")
    ##
    ##      One-sample t test power calculation
    ##
    ##               n = 58.984
    ##           delta = 4
    ##              sd = 12.21
    ##       sig.level = 0.05
    ##           power = 0.8
    ##     alternative = one.sided

Checking the normality assumption

The Normal QQ plot

Example - student heights - are they normally distributed?

    x <- c(168,161,167,179,184,166,198,187,191,179)
    hist(x, xlab="Height", main="", freq = FALSE)
    lines(seq(160, 200, 1), dnorm(seq(160, 200, 1), mean(x), sd(x)))

[Figure: histogram of Height (density scale) with the fitted normal density curve.]

Example - 100 observations from a normal distribution:

    xr <- rnorm(100, mean(x), sd(x))
    hist(xr, xlab="Height", main="", freq = FALSE)
    lines(seq(130, 230, 1), dnorm(seq(130, 230, 1), mean(x), sd(x)))

[Figure: histogram of the 100 simulated heights (density scale) with the normal density curve.]

Example - student heights - ecdf

    plot(ecdf(x), verticals = TRUE)
    xp <- seq(0.9*min(x), 1.1*max(x), length.out = 100)
    lines(xp, pnorm(xp, mean(x), sd(x)))

[Figure: ecdf(x), Fn(x) versus x, with the normal distribution function overlaid.]

Example - 100 observations from a normal distribution, ecdf:

    xr <- rnorm(100, mean(x), sd(x))
    plot(ecdf(xr), verticals = TRUE)
    xp <- seq(0.9*min(xr), 1.1*max(xr), length.out = 100)
    lines(xp, pnorm(xp, mean(xr), sd(xr)))

[Figure: ecdf(xr), Fn(x) versus x, with the normal distribution function overlaid.]

Example - student heights - Normal Q-Q plot

    qqnorm(x)
    qqline(x)

[Figure: Normal Q-Q Plot, Sample Quantiles versus Theoretical Quantiles.]
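What `qqnorm()` draws can be reproduced by hand, which may help in reading the plot. The sketch below sorts the student heights and pairs them with normal quantiles at the plotting positions $p_i = (i - 3/8)/(n + 1/4)$, which is what R's `ppoints()` returns when n ≤ 10. The variable names `p`, `z` and `xs` are illustrative.

```r
## Sketch: build the Normal Q-Q plot for the student heights by hand
x <- c(168, 161, 167, 179, 184, 166, 198, 187, 191, 179)
n <- length(x)
i <- 1:n

## Plotting positions for n <= 10, as returned by ppoints(n)
p <- (i - 3/8) / (n + 1/4)

## Expected standard normal quantiles and ordered observations
z  <- qnorm(p)
xs <- sort(x)

## The points of the Q-Q plot: ordered data against normal quantiles
plot(z, xs, xlab = "Theoretical Quantiles", ylab = "Sample Quantiles",
     main = "Normal Q-Q Plot (by hand)")
```

If the heights are close to normally distributed, the points fall close to a straight line, which is the visual check the Q-Q plot is meant to provide.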
Example - student heights - Normal Q-Q plot - compare with other simulated normally distributed data

[Figure: nine Normal Q-Q plots, each showing Sample Quantiles versus Theoretical Quantiles.]

Normal Q-Q plot

Method 3.52 - The formal definition (and Remark 3.53)
The ordered observations $x_{(1)}, \ldots, x_{(n)}$ are plotted versus a set of expected normal quantiles $z_{p_1}, \ldots, z_{p_n}$. Different definitions of $p_1, \ldots, p_n$ exist:

Naive approach:

$$ p_i = \frac{i}{n}, \quad i = 1, \ldots, n $$

In R, when n > 10:

$$ p_i = \frac{i - 0.5}{n}, \quad i = 1, \ldots, n $$

In R, when n ≤ 10:

$$ p_i = \frac{i - 3/8}{n + 1/4}, \quad i = 1, \ldots, n $$

Transformation towards normality

Example - Radon data

    ## READING IN THE DATA
    radon <- c(2.4, 4.2, 1.8, 2.5, 5.4, 2.2, 4.0, 1.1, 1.5, 5.4, 6.3,
               1.9, 1.7, 1.1, 6.6, 3.1, 2.3, 1.4, 2.9, 2.9)
    ## A HISTOGRAM AND A QQ-PLOT
    par(mfrow=c(1,2))
    hist(radon)
    qqnorm(radon, ylab = 'Sample quantiles', xlab = "Normal quantiles")
    qqline(radon)

[Figure: Histogram of radon (Frequency versus radon) and Normal Q-Q Plot (Sample quantiles versus Normal quantiles).]

Example - Radon data - the log-transformed data are closer to a normal distribution

    ## TRANSFORM USING NATURAL LOGARITHM
    logRadon <- log(radon)
    hist(logRadon)
    qqnorm(logRadon, ylab = 'Sample quantiles', xlab = "Normal quantiles")
    qqline(logRadon)

[Figure: Histogram of logRadon (Frequency versus logRadon) and Normal Q-Q Plot (Sample quantiles versus Normal quantiles).]
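As a rough numerical companion to the visual comparison of the two Radon Q-Q plots, one can compute the correlation between the sample quantiles and the theoretical normal quantiles: the closer the points are to a straight line, the closer this correlation is to 1. This check is an illustrative addition and not part of the lecture; it supplements the plots rather than replacing them.

```r
## Sketch (not from the lecture): how straight is each Q-Q plot?
radon <- c(2.4, 4.2, 1.8, 2.5, 5.4, 2.2, 4.0, 1.1, 1.5, 5.4, 6.3,
           1.9, 1.7, 1.1, 6.6, 3.1, 2.3, 1.4, 2.9, 2.9)

## qqnorm() with plot.it = FALSE returns the plotted coordinates:
## $x = theoretical quantiles, $y = the data
q_raw <- qqnorm(radon, plot.it = FALSE)
q_log <- qqnorm(log(radon), plot.it = FALSE)

## Correlation between theoretical and sample quantiles
r_raw <- cor(q_raw$x, q_raw$y)
r_log <- cor(q_log$x, q_log$y)

c(raw = r_raw, log = r_log)
```

For these data the correlation is higher on the log scale, in line with the impression from the plots that the log-transformed values are closer to a normal distribution.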