Operating System Concept

Chapter I

Designed by .VAS

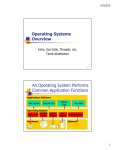

An operating system is a program that manages the computer hardware.

Functions:

◦ Resource allocation and related functions

◦ User interface functions

Figure: layers of a computer system (Hardware, OS, Application Program, User)

Mainframe Systems

◦ For commercial and scientific applications

◦ Batch Systems – Programs of a similar kind are collected into a single batch for processing.

Multiprogrammed Systems

Multiprogramming increases CPU utilization by organizing jobs so that the CPU always has one to execute.

Time-Sharing Systems

◦ Time sharing (or multitasking) is a logical extension of multiprogramming.

◦ It provides for direct user interaction with the computer system.

◦ A time-shared operating system uses CPU scheduling and multiprogramming to provide each user with a small portion of a time-shared computer.
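
The way a time-shared system hands each job a small portion of the CPU can be sketched as a round-robin loop. This is a minimal illustration, not the scheduler of any particular system; the function name `round_robin`, the job names, and the quantum are assumptions made for the example.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Return the order in which jobs finish under round-robin time slicing.

    burst_times: dict mapping a job name to the CPU time it still needs.
    quantum: length of one time slice, in the same unit as the burst times.
    """
    ready = deque(burst_times.items())  # jobs waiting for the CPU
    finished = []
    while ready:
        name, remaining = ready.popleft()
        remaining -= quantum  # the job runs for at most one quantum
        if remaining > 0:
            ready.append((name, remaining))  # not done: back of the queue
        else:
            finished.append(name)
    return finished
```

With jobs `{"A": 5, "B": 2, "C": 3}` and a quantum of 2, the short job B finishes first even though A arrived ahead of it, which is the responsiveness that time sharing aims for.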

Desktop Systems

◦ Personal computers (PCs) appeared in the 1970s.

◦ Designed to maximize user convenience and responsiveness, instead of maximizing CPU and peripheral utilization.

◦ Include PCs running Microsoft Windows and the Apple Macintosh.

Multiprocessor Systems

◦ Most systems to date are single-processor systems.

◦ Multiprocessor systems (also known as parallel systems or tightly coupled systems) are growing in importance. Such systems have more than one processor.

◦ Advantages:

1. Increased throughput

2. Economy of scale

3. Increased reliability

Distributed Systems

◦ A network, in the simplest terms, is a communication path between two or more systems.

◦ Distributed systems depend on networking for their functionality.

◦ Network types: LAN (local-area network), WAN (wide-area network), MAN (metropolitan-area network)

A process is a program in execution.

Process Needs

◦ CPU time

◦ Memory

◦ Files

◦ I/O devices

Creating and deleting both user and system processes

Suspending and resuming processes

Providing mechanisms for process synchronization

Providing mechanisms for process communication

Providing mechanisms for deadlock handling.

A computer system consists of a CPU and multiple device controllers that are connected through a common bus.

Small Computer-Systems Interface (SCSI)

◦ A SCSI controller can have seven or more devices attached to it.

◦ A device controller is responsible for moving the data between the peripheral devices that it controls and its local buffer storage.

I/O Interrupts

◦ To start an I/O operation, the CPU loads the appropriate registers within the device controller.

◦ The device controller examines the contents of these registers to determine what action to take.

◦ If it finds a read request, the controller starts the transfer of data from the device to its local buffer.

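I/O Interrupts (cont.)

◦ Once the transfer of data is complete, the device controller informs the CPU.

◦ It accomplishes this communication by triggering an interrupt.

Courses of action once the I/O has started:

◦ Synchronous I/O: control returns to the user process only after the I/O completes.

◦ Asynchronous I/O: control returns to the user process without waiting for the I/O to complete.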

Two I/O Methods

DMA (Direct Memory Access)

◦ Used for high-speed I/O devices.

◦ The device controller transfers an entire block of data directly between its own buffer storage and memory.

◦ No intervention by the CPU.

◦ Only one interrupt is generated per block, rather than one interrupt per byte.

◦ The DMA controller interrupts the CPU when the transfer has been completed.
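
The saving in interrupts that DMA brings can be checked with simple arithmetic. The sketch below is only an illustration; the function name `interrupts_needed` and the transfer and block sizes are assumptions, not figures from the slides.

```python
def interrupts_needed(total_bytes, block_size=None):
    """Count the interrupts raised while transferring total_bytes.

    block_size=None models byte-at-a-time I/O (one interrupt per byte).
    A block_size models DMA (one interrupt per completed block).
    """
    if block_size is None:
        return total_bytes
    return -(-total_bytes // block_size)  # ceiling division


per_byte = interrupts_needed(4096)        # interrupt-driven, byte by byte
per_block = interrupts_needed(4096, 512)  # DMA with 512-byte blocks
```

For a 4096-byte transfer, byte-at-a-time I/O raises 4096 interrupts, while DMA with 512-byte blocks raises 8.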

PROGRAM INPUT/OUTPUT

Computer programs must be in main memory (also called random-access memory or RAM) to be executed.

Main memory is implemented in a semiconductor technology called dynamic random-access memory (DRAM).

The CPU automatically loads instructions from main memory for execution.

Storage device hierarchy

A process is a program in execution.

Process State

Process Control Block

Process Scheduling

New: The process is being created.

Running: Instructions are being executed.

Waiting: The process is waiting for some event to occur (such as an I/O completion or reception of a signal).

Ready: The process is waiting to be assigned to a processor.

Terminated: The process has finished execution.
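
The five states above can be sketched as a small state machine. The slides list the states but not the arrows between them, so the transition table here follows the usual five-state diagram and should be read as an assumption; the names `State`, `TRANSITIONS`, and `move` are illustrative.

```python
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


# Assumed transitions of the usual five-state diagram.
TRANSITIONS = {
    State.NEW: {State.READY},                  # admitted
    State.READY: {State.RUNNING},              # scheduler dispatch
    State.RUNNING: {State.READY,               # interrupted
                    State.WAITING,             # waits for I/O or an event
                    State.TERMINATED},         # exits
    State.WAITING: {State.READY},              # I/O or event completed
    State.TERMINATED: set(),
}


def move(current, target):
    """Return target if the transition is allowed, else raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition {current.value} -> {target.value}")
    return target
```

Note that a waiting process cannot go straight back to running: it first becomes ready and must be selected by the scheduler again.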

Process state: The state may be new, ready, running, waiting, halted, and so on.

Program counter: The counter indicates the address of the next instruction to be executed for this process.

CPU registers: The registers vary in number and type, depending on the computer architecture. They include accumulators, index registers, stack pointers, and general-purpose registers.
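
The fields listed above can be pictured as a record kept per process. This is a minimal sketch covering only the three fields named on the slide; the class name `PCB`, the `pid` field, and the default values are assumptions for illustration, and a real process control block holds considerably more.

```python
from dataclasses import dataclass, field


@dataclass
class PCB:
    """Illustrative process control block with the fields from the slide."""
    pid: int                      # assumed identifier, not listed on the slide
    state: str = "new"            # new, ready, running, waiting, halted, ...
    program_counter: int = 0      # address of the next instruction
    registers: dict = field(default_factory=dict)  # e.g. accumulator, stack pointer


pcb = PCB(pid=1)
pcb.state = "ready"
pcb.registers["sp"] = 0x7FFF
```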

Scheduling Queues:

As processes enter the system, they are put into a job queue.

The processes that are residing in main memory and are ready and waiting to execute are kept on a list called the ready queue.

The ready-queue header contains pointers to the first and final PCBs in the list.
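
A ready queue with a header that points to the first and final entries can be sketched as a singly linked list. The sketch stores only a process ID in each node rather than a full PCB, and the names `Node` and `ReadyQueue` are assumptions made for the example.

```python
class Node:
    """One queue entry; stands in for a PCB and links to the next one."""

    def __init__(self, pid):
        self.pid = pid
        self.next = None


class ReadyQueue:
    """Header with pointers to the first (head) and final (tail) entries."""

    def __init__(self):
        self.head = None
        self.tail = None

    def enqueue(self, pid):
        node = Node(pid)
        if self.tail is None:      # empty queue: node is both first and final
            self.head = node
        else:
            self.tail.next = node  # link after the current final entry
        self.tail = node

    def dequeue(self):
        node = self.head
        if node is None:
            return None            # nothing is ready to run
        self.head = node.next
        if self.head is None:      # queue became empty
            self.tail = None
        return node.pid
```

Keeping a tail pointer makes adding a newly ready process a constant-time operation, while the scheduler removes from the head.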

The ready queue and various I/O device queues

Queuing-diagram representation of process scheduling

Schedulers - A process migrates between the various scheduling queues throughout its lifetime.

Long-term scheduler - Selects processes from the pool of jobs waiting on disk and loads them into memory for execution.

Short-term scheduler - Selects from among the processes that are ready to execute, and allocates the CPU to one of them.

Addition of medium-term scheduling to the queuing diagram.

Switching the CPU to another process requires saving the state of the old process and loading the saved state for the new process. This task is known as a context switch.
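
The save-and-restore step described above can be sketched with plain dictionaries standing in for the CPU and for each process's saved state. Real context switches are done by the kernel in architecture-specific code; the function name `context_switch` and the dictionary keys are assumptions for illustration only.

```python
def context_switch(cpu, old, new):
    """Save the CPU state into `old`, then load the saved state of `new`."""
    # Save the state of the process that is leaving the CPU.
    old["program_counter"] = cpu["program_counter"]
    old["registers"] = dict(cpu["registers"])
    # Load the previously saved state of the process that takes over.
    cpu["program_counter"] = new["program_counter"]
    cpu["registers"] = dict(new["registers"])


cpu = {"program_counter": 120, "registers": {"acc": 7}}
proc_a = {"program_counter": 0, "registers": {}}              # currently running
proc_b = {"program_counter": 300, "registers": {"acc": 42}}   # saved earlier
context_switch(cpu, proc_a, proc_b)
```

After the call, `proc_a` holds the state it will need to resume later, and the CPU continues from where `proc_b` left off.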

Process Creation - A process may create several new processes, via a create-process system call, during the course of execution.

Process Termination - A process terminates when it finishes executing its final statement and asks the operating system to delete it by using the exit system call.
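
On Unix-like systems, creation and termination can be observed from Python through the `os` module, which wraps the underlying system calls. This is a minimal sketch that assumes a Unix-like platform (it will not run on Windows); the function name `run_child` is illustrative.

```python
import os


def run_child(exit_code):
    """Create a child that terminates with exit_code; return what the parent sees."""
    pid = os.fork()               # create-process system call
    if pid == 0:
        # Child: terminate immediately via the exit system call.
        os._exit(exit_code)
    # Parent: wait for the child to terminate and collect its status.
    _, status = os.waitpid(pid, 0)
    return os.WEXITSTATUS(status)
```

The parent learns how the child ended only by waiting for it, which is why the exit status is passed back through the operating system.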

A tree of processes on a typical UNIX system

A process is cooperating if it can affect or be affected by the other processes executing in the system.

Several reasons for allowing process cooperation:

Information sharing

Computation speedup

Modularity

Convenience

A thread is sometimes called a lightweight process (LWP).

It is a basic unit of CPU utilization.

It comprises a thread ID, a program counter, a register set, and a stack.

A traditional (or heavyweight) process has a single thread of control.

If a process has multiple threads of control, it can do more than one task at a time.

Examples of multithreading (MT)

◦ Web browser

◦ Word processor

Problem with single threading (ST)

◦ Web server – a single-threaded server can service only one client at a time.

Threads also play a vital role in remote procedure call (RPC) systems.

Responsiveness –

◦ Multithreading an interactive application may allow a program to continue running even if part of it is blocked or is performing a lengthy operation.

Resource sharing –

◦ Threads share the memory and the resources of the process to which they belong.

Economy –

◦ Allocating memory and resources for process creation is costly. Alternatively, because threads share resources of the process to which they belong, it is more economical to create and context switch threads.

Utilization of multiprocessor architectures –

◦ The benefits of multithreading can be greatly increased in a multiprocessor architecture, where each thread may be running in parallel on a different processor.
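
The resource-sharing benefit listed above can be seen in a few lines: every thread of a process reads and writes the same memory. This is a minimal sketch using Python's standard `threading` module; the function name `count_with_threads` and the counts are assumptions for illustration.

```python
import threading


def count_with_threads(n_threads, increments):
    """Have n_threads threads update one shared counter; return its final value."""
    counter = {"value": 0}   # lives in the process, visible to every thread
    lock = threading.Lock()  # shared data needs synchronization

    def worker():
        for _ in range(increments):
            with lock:
                counter["value"] += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter["value"]
```

No copying or message passing is needed for the threads to cooperate, but the lock shows the price of sharing: concurrent updates must be synchronized.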

User threads –

◦ Supported above the kernel and implemented by a thread library at the user level.

Kernel threads –

◦ Supported directly by the operating system: the kernel performs thread creation, scheduling, and management in kernel space.

Many-to-One Model

• Thread management is done in user space, so it is efficient, but the entire process will block if a thread makes a blocking system call.

One-to-one Model

• The one-to-one model maps each user thread to a kernel thread. It provides more concurrency than the many-to-one model by allowing another thread to run when a thread makes a blocking system call.

Many-to-Many Model

• The many-to-many model multiplexes many user-level threads to a smaller or equal number of kernel threads.

Fork-exec is a commonly used technique in Unix/Linux whereby an executing process spawns a new program.

fork() is the name of the system call that the parent process uses to "divide" itself ("fork") into two identical processes.
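
The fork-exec pattern can be sketched with `os.fork` and `os.execvp` from Python's standard library, which wrap the corresponding system calls. The sketch assumes a Unix-like platform; the helper name `spawn` and the exit code 127 used when exec fails are illustrative choices.

```python
import os
import sys


def spawn(argv):
    """Fork, replace the child with the program in argv, return its exit status."""
    pid = os.fork()
    if pid == 0:
        # Child: an identical copy of the parent until exec replaces its image.
        try:
            os.execvp(argv[0], argv)
        finally:
            os._exit(127)  # reached only if exec failed
    # Parent: continues separately and waits for the new program to finish.
    _, status = os.waitpid(pid, 0)
    return os.WEXITSTATUS(status)


status = spawn([sys.executable, "-c", "import sys; sys.exit(3)"])
```

Here the new program is another Python interpreter, chosen only because it is guaranteed to be present where the example runs.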

Cancellation –

◦ 1. Asynchronous cancellation: One thread immediately terminates the target thread.

◦ 2. Deferred cancellation: The target thread can periodically check if it should terminate, allowing the target thread an opportunity to terminate itself in an orderly fashion.
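
Deferred cancellation can be sketched with a shared flag that the target thread checks between units of work. Python's `threading.Event` is used as the flag here; the function names and the simulated work are assumptions for illustration.

```python
import threading


def worker(cancel_requested, log):
    """Target thread: checks the flag periodically and cleans up before exiting."""
    while not cancel_requested.is_set():
        cancel_requested.wait(0.01)  # stands in for one unit of real work
    log.append("cleaned up")         # orderly termination


def run_and_cancel():
    cancel_requested = threading.Event()
    log = []
    target = threading.Thread(target=worker, args=(cancel_requested, log))
    target.start()
    cancel_requested.set()           # request cancellation; do not kill the thread
    target.join(timeout=2)
    return log, target.is_alive()
```

The cancelling thread only asks; the target decides when it is safe to stop, so it never dies while holding a resource half-updated.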

Signal Handling

◦ 1. A signal is generated by the occurrence of a particular event.

◦ 2. A generated signal is delivered to a process.

◦ 3. Once delivered, the signal must be handled.

Signal Handler

◦ 1. A default signal handler

Every signal has a default handler that is run by the kernel when handling the signal.

◦ 2. A user-defined signal handler

A user-defined function is called to handle the signal rather than the default action.
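
Installing a user-defined handler in place of the default action can be sketched with Python's standard `signal` module. The sketch assumes a Unix-like platform (it uses `SIGUSR1`) and must run in the main thread, since Python only allows handlers to be installed there; the names `on_usr1` and `send_and_handle` are illustrative.

```python
import os
import signal
import time

received = []


def on_usr1(signum, frame):
    """User-defined handler: record the signal instead of taking the default action."""
    received.append(signum)


def send_and_handle():
    received.clear()
    signal.signal(signal.SIGUSR1, on_usr1)  # replace the default handler
    os.kill(os.getpid(), signal.SIGUSR1)    # generate and deliver the signal
    for _ in range(100):                    # give the handler a moment to run
        if received:
            break
        time.sleep(0.01)
    return list(received)
```

Without the call to `signal.signal`, the default action for `SIGUSR1` would terminate the process.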

Problem when responding to clients

◦ Web server problem – handling multiple requests.

◦ Unlimited threads could exhaust system resources, such as CPU time or memory.

◦ Thread Pools (TP)

A thread pool creates a number of threads at process startup and places them into a pool, where they sit and wait for work.

When a server receives a request, it awakens a thread from this pool, if one is available, passing it the request to service.

Once the thread completes its service, it returns to the pool awaiting more work.

If the pool contains no available thread, the server waits until one becomes free.
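
A bounded pool of worker threads can be sketched with `ThreadPoolExecutor` from Python's standard library. One difference from the description above is worth noting: this executor starts its threads on demand, up to `max_workers`, rather than all at startup, and requests that arrive while every worker is busy wait in a queue. The names `handle` and `serve` and the pool size are assumptions for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def handle(request):
    """Stand-in for the work done to service one client request."""
    return f"served {request}"


def serve(requests, pool_size=3):
    """Service all requests with at most pool_size threads."""
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        # map hands each request to an idle worker and keeps the input order.
        return list(pool.map(handle, requests))
```

However many requests arrive, no more than `pool_size` threads ever exist, which is what protects the server from exhausting CPU time or memory.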

Threads belonging to a process share the data of the process.

In some circumstances, each thread might need its own copy of certain data.

Such data is called thread-specific data.

Example: in a transaction-processing system, assign a unique identifier (UID) to each transaction and associate that UID with the thread servicing it.
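
Thread-specific data is available in Python through `threading.local`, whose attributes hold a separate value in each thread. The sketch below follows the transaction example; the names `context`, `process`, and `run` are assumptions for illustration.

```python
import threading

context = threading.local()  # each thread sees its own attributes on this object


def process(transaction_id, results):
    context.transaction_id = transaction_id  # visible only in this thread
    results[threading.current_thread().name] = context.transaction_id


def run(ids):
    """Service each transaction in its own thread; return the UID each thread saw."""
    results = {}
    threads = [
        threading.Thread(target=process, args=(i, results), name=f"t{i}")
        for i in ids
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Although all threads assign to the same name, `context.transaction_id`, none of them overwrites another's value, and the main thread never sees the attribute at all.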

POSIX - Portable Operating System Interface.

The POSIX thread standard (Pthreads) defines an API for thread creation and synchronization.