* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Document

Data assimilation wikipedia , lookup

Forecasting wikipedia , lookup

Choice modelling wikipedia , lookup

Interaction (statistics) wikipedia , lookup

Time series wikipedia , lookup

Regression toward the mean wikipedia , lookup

Instrumental variables estimation wikipedia , lookup

Regression analysis wikipedia , lookup

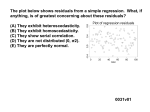

Relationships • If we are doing a study which involves more than one variable, how can we tell if there is a relationship between two (or more) of the variables ? • Association Between Variables : Two variables measured on the same individuals are associated if some values of one variable tend to occur more often with some values of the second variable than with other values of that variable. • Response Variable : A response variable measures an outcome of a study. • Explanatory Variable : An explanatory variable explains or causes changes in the response variable. 2.1: Scatterplots • A scatterplot shows the relationship between two variables. • The values of one variable appear on the horizontal axis, and the values of the other variable appear on the vertical axis. • Always plot the explanatory variable on the horizontal axis, and the response variable as the vertical axis. Example: If we are going to try to predict someone’s weight from their height, then the height is the explanatory variable, and the weight is the response variable. • The explanatory variable is often denoted by the variable x, and is sometimes called the independent variable. • The response variable is often denoted by the variable y, and is sometimes called the dependent variable. Scatterplots Example: Do you think that a father’s height would affect a son’s height? We are saying that given a father’s height, can we make any determinations about the son’s height ? The explanatory variable is : The father’s height The response variable is : The son’s height Father’s Height Son’s Height Data Set : 64 68 68 70 72 74 75 75 76 77 65 67 70 72 75 70 73 76 77 76 Father’s Height Son’s Height 64 68 68 70 72 Father’s Height 65 67 70 72 75 74 75 75 76 77 Son’s Height 70 73 76 77 76 Response Variable (Son’s Height) 76 72 Explanatory Variable (Father’s Height) 68 64 64 68 72 76 Father’s Height Son’s Height 64 68 68 70 72 Father’s Height 65 67 70 72 75 74 75 75 76 77 Son’s Height 70 73 76 77 76 76 72 68 64 64 68 72 76 Father Examining A Scatterplot • In any graph of data, look for the overall pattern and for striking deviations from that pattern. • You can describe the overall pattern of a scatterplot by the form, direction, and strength of the relationship. • Strength : How closely the points follow a clear form. • An important kind of deviation is an outlier, an individual that falls outside the overall pattern of the relationship. • Two variables are positively associated when above-average values of one tend to accompany above average values of the other and below average values also tend to occur together. • Two variables are negatively associated when above-average values of one accompany below-average values of the other; and vice versa. Direction Type of associations between X and Y. 1. Two variables are positively associated if small values of X are associated with small values of the Y, and if large values of X are associated with large values of Y. There is an upward trend from left to right. Positive Association Y . . . . . . . .. . . . X Direction Type of associations between X and Y. 2. Two variables are negatively associated if small values of one variable are associated with large values of the other variable, and vice versa. There is a downward trend from left to right. Negative Association Y .. . . . . . .. . . .. X Form Describe the type of trend between X and Y. 1. Linear - points fall close to a straight line. Linear Association Y . . . . . . . .. . . . X Form Describe the type of trend between X and Y. 1. Linear - points fall close to a straight line. 2. Quadratic - points follow a parabolic pattern. Quadratic Association Y .. . . . . . . .. . . . . . . . . . . X Form Describe the type of trend between X and Y. 1. Linear - points fall close to a straight line. 2. Quadratic - points follow a parabolic pattern. 3. Exponential - points follow a curved pattern. Exponential Growth Y . . .. . ... . . . . .. . . . . .. . . . . . .. . . . .. . .. . .. .. . .. . . X Strength Measures the amount of scatter around the general trend. LinearThe closer the points fall to a straight line, the stronger the relationship between the two variables. Strong Association Y . . . . . . . .. . . . X Moderate Association Y . . . . . . . . . . .. . . . .. . . . . . . . . . X Weak Association Y . . . . . . . . . . . . . . . . . . . . . . . . . X Examining A Scatterplot Consider the previous scatterplot : Direction : Going up 76 Form : Linear 72 Association : Positive Strength : Strong 68 Outliers : None 64 64 68 72 76 Father Example : The following is a scatterplot of data collected from states about students taking the SAT. The question is whether the percentage of students from a state that takes the test will influence the state’s average scores. For instance, in California, 45 % of high school graduates took the SAT and the mean verbal score was 495. Direction : Downward Form : Curved Association : Negative Strength : Strong Outliers : Maybe §2.2: Correlation Recall that a scatterplot displays the form, direction, and strength of the relationship between two quantitative variables. Linear relationships are important because they are the easiest to model, and are fairly common. We say a linear relationship is strong if the points lie close to a straight line, and weak if the points are scattered around the line. Correlation (r) measures the direction and the strength of the linear relationship between two quantitative variables. The + / - sign denotes a positive or negative association. The numeric value shows the strength. If the strength is strong, then r will be close to 1 or -1. If the strength is weak, then r will be close to 0. Correlation Examples Correlation =0 Correlation = 0.5 Correlation = 0.9 Correlation = - 0.3 Correlation = - 0.7 Correlation = - 0.99 Which has the better correlation ? Correlation So, how do we find the correlation ? Suppose we have data on variables x and y for n individuals. The means and standard deviations of the two variables are x and sx for the x-values, and y and sy for the y-values. 1 r= n-1 ( x - x i s )( y - y i x n r x i 1 i s y y i nx y n n 2 2 2 2 x nx y ny i i i 1 i 1 Question : Will outliers effect the correlation ? ) Example: Recall the scatterplot data for the heights of fathers and their sons. Father’s Height 64 68 68 70 72 Son’s Height 65 67 70 72 75 Father’s Height 74 75 75 76 77 Son’s Height 70 73 76 77 76 We decided that the father’s heights was the explanatory variable and the son’s heights was the response variable. The average of the x terms is 71.9 and the standard deviation is 4.25 The average of the y terms is 72.1 and the standard deviation is 4.07 r= xi 64 68 68 70 72 74 75 75 76 77 1 n-1 xi - x -7.9 -3.9 -3.9 -1.9 0.1 2.1 3.1 3.1 4.1 5.1 ( xi - x sx )( yi - y sy ) xi - x sx yi yi - y yi - y sy -1.86 -0.92 -0.92 -0.45 0.02 0.49 0.73 0.73 0.96 1.20 65 67 70 72 75 70 73 76 77 76 -7.1 -5.1 -2.1 -0.1 2.9 -2.1 0.9 3.9 4.9 3.9 -1.75 -1.25 -0.52 -0.02 0.71 -0.52 0.22 0.95 1.20 0.95 xi xi - x xi - x sx 64 68 68 70 72 74 75 75 76 77 -7.9 -3.9 -3.9 -1.9 0.1 2.1 3.1 3.1 4.1 5.1 -1.86 -0.92 -0.92 -0.45 0.02 0.49 0.73 0.73 0.96 1.20 1 r = 10 - 1 1 = 9 yi - y yi yi - y sy 65 67 70 72 75 70 73 76 77 76 -7.1 -5.1 -2.1 -0.1 2.9 -2.1 0.9 3.9 4.9 3.9 -1.75 -1.25 -0.52 -0.02 0.71 -0.52 0.22 0.95 1.20 0.95 [ (-1.86)(-1.75) + (-0.92)(-1.25) + ….. + (1.20)(0.95)] [ (3.24) + (1.14) + ….. + (1.14) ] = 0.87 Shortcut Calculations 10 10 10 i 1 i 1 i 1 2 x 719, y 721, x i i i 51859 10 10 i 1 i 1 2 y i 52133, x i y i 51975 r 51975 10*71.9*72.1 51859 10*71.9 52133 10*72.1 2 2 0.87 Facts about Correlation Correlation makes no distinction between explanatory and response variables. The correlation between x and y is the same as the correlation between y and x. Correlation requires that both variables be quantitative. We cannot compute a correlation between a categorical variable and a quantitative variable or between two quantitative variables. r does not change when we do transformations. The correlation between height and weight is the same whether height was measured in feet or centimeters or weight was measured in kilograms or pounds. This happens because all the observations are standardized in the Calculation of correlation. The correlation r itself has no unit of measurement, it is just a number. Exercise What’s wrong with these statements? 1. At AU there is no correlation between the ethnicity of students and their GPA. 2. The correlation between height and weight of stat202 students (a) is 2.61 (b) is 0.61 inches per pound (c) is 0.61, so the corr. between weight and height is -0.61 (d) is 0.61 using inches and pounds, but converting inches to centimeters would make r > 0.61 (since an inch equals about 2.54 centimeters). §2.3: Least-Squares Regression • Correlation measures the direction and strength of a straight-line (linear) relationship between two quantitative variables. • We have tried to summarize the data by drawing a straight-line the through the data. • A regression line summarizes the relationship between two variables. • These can only be used in one setting : when one variable helps explain or predict the other variable. Regression Line • A regression line is a straight line that describes how a response variable y changes as an explanatory variable x changes. We often use a regression line to predict the value of y for a given value of x. Regression, unlike correlation, requires that we have an explanatory variable and a response variable. • If a scatterplot displays a linear pattern, we can describe the overall pattern by drawing a straight line through the points. • This is called fitting a line to the data. • This is a mathematical model which we can use to make predictions based on the given data. Example: Recall the data we were using before where we compared the heights of fathers and sons. Father’s Height 64 68 68 70 72 Son’s Height 65 67 70 72 75 Father’s Height 74 75 75 76 77 The first thing we did was to plot the points. Son’s Height 70 73 76 77 76 Example: Recall the data we were using before where we compared the heights of fathers and sons. 76 72 68 64 64 68 72 76 Father The line which is closest to all the points is the regression line. Least-Squares Regression Line • The least squares regression line of y on x is the line that makes the sum of the squares of the vertical distances of the data points from the line as small as possible. Equation of the Least-Squares Regression Line • Imagine we have data on an explanatory variable x and a response y for n individuals. • Assume the mean for the explanatory variable is x and the standard deviation is sx • Assume the mean for the response variable is standard deviation is sy • Assume the correlation between x and y is r. y and the Equation of the Least-Squares Regression Line • The equation of the least-squares regression line of y on x is : y = a + bx slope intercept The slope is b : b=r The intercept is a : sy sx ( ) a= y - b x Interpretation of Regression Coefficients The Y-Intercept a is the value of the response variable, y, when the explanatory variable, x, is zero. The Slope, b is the change in the response variable, y, for a unit increase in the explanatory variable, x. Example: What if we want to find the least-squares regression line where we will predict the son’s height from the father’s height ? Note: The father’s heights are the explanatory variable, and the son’s height is the response variable. We need the means and the standard deviations : The average of the x terms is 71.9 and the standard deviation is 4.25 The average of the y terms is 72.1 and the standard deviation is 4.07 The correlation between the two variables is 0.87 x = 71.9 sx = 4.25 sy = 4.07 y = 72.1 r = 0.87 The equation for the regression line is : y = a + bx sy We need to find the slope : b = r sx b= ( ) ( ) 0.87 4.07 4.25 Next, find the intercept : = 0.8331529 a= y - b x a = 72.1 - (0.8331529)(71.9) = 12.196307 So, the equation for the regression line is : y = a + bx y = 12.2 + .83x Making Predictions We can use the regression line to make some predicts. Example : Based on the previous data, we can predict the son’s height from the father’s height. y = 12.2 + .83x Q: If the father’s height is 70 inches, what is our prediction for the son’s height? A: y = 12.2 + .83(70) = 70.1 Note: These predictions are only good on relevant data!! y = 12.2 + .83x 76 72 68 64 64 68 72 76 Father Notes On Regression b=r sy sx ( ) • This equation says that a change of one standard deviation in x corresponds to a change of r standard deviations in y. • The point ( x , y ) is always on the regression line. 2 • The square of the correlation, r , is the fraction of the variation in the values of y that is explained by the least-squares regression of y on x. Example: The straight line relationship between father’s heights and son’s height is r2 = (0.87) 2 = 0.7569 explains the variation in heights. Residuals Analysis • A regression line is a mathematical model for the overall pattern of a linear relationship between an explanatory variable, and a response variable. • The regression line is chosen so that the vertical distances to the line from all the points is as small as possible. • A residual is the difference between an observed value of the response variable, and the value predicted by the regression line. Residual = observed y - predicted y Residual = y - y Example of Residuals Go back to our favorite example : Father’s Height Son’s Height 64 65 68 67 68 70 70 72 72 75 Father’s Height 74 75 75 76 77 78 Son’s Height 70 73 76 77 76 y = 0.8293x + 12.47 R2 = 0.7525 76 74 72 Series1 70 Linear (Series1) 68 66 64 60 65 70 75 80 Example of Residuals Go back to our favorite example : Father’s Height Son’s Height 64 65 68 67 68 70 70 72 72 75 Father’s Height 74 75 75 76 77 Son’s Height 70 73 76 77 76 We found the regression line to be: y = 12.47 + .8293x So, when the father’s height is 64 inches, we expect the son to be how tall? y = 12.47 + (.8293)(64) = 65.5452 However, the actual height of the son is 65 inches, so the residual is : 65 - 65.5452 = -0.5452 This tells us the point is .5452 units below the regression line. Son’s Height Predicted Height Residual y = 12.47 + .8293x Residual = y - y 65 67 70 72 75 70 73 76 77 76 65.5452 68.8624 68.8624 70.521 72.1796 73.8382 74.6675 74.6675 75.4968 76.3261 -0.5452 -1.8624 1.1376 1.479 2.8204 -3.8382 -1.6675 1.3325 1.5032 -0.3261 Average = 0.0037678 • Again, we could have drawn our line anywhere on the graph • The least squares regression line has the property that the mean of the least-squares residuals is always zero! Residual Plot • A residual plot is a scatterplot of the regression residuals against the explanatory variable. Residual plots help us assess the fit of the regression line. • The regression line shows the overall pattern of the data. So, the residual plot should *not* have a pattern. Residual Plot Example : What does this residual plot show us : This indicates the relationship between the explanatory variable and the response variable is curved, and not linear. Regression should not be used in this example. Residual Plot Example : What does this residual plot show us : This shows that the variation of the response variable about the line increases as the explanatory variable increases. The predictions for y will be better on the less variable part, than the more variable part. Residual Plot Here is what our residual plot would look like : 4 2 0 -2 -4 64 66 68 70 72 74 76 Outliers and Influential Observations Consider our favorite example : Father’s Height 64 68 68 70 72 Son’s Height 65 67 70 72 75 Father’s Height 74 75 75 76 77 64 What happens if we add in an outlier ? How does this change our results? Son’s Height 70 73 76 77 76 82 Correlation & Scatterplot • The correlation drops from 0.87 to 0.28 82 y = 0.2914x + 52.258 R2 = 0.0784 80 78 76 74 Series1 72 Linear (Series1) 70 68 66 64 63 68 73 78 Outliers and Influential Observations Consider the following scatterplot : There is one outlier : Outliers and Influential Observations • An outlier has a large residual from the regression line. • This could be called an outlier in the y direction. • If you look at the previous picture, there is an “outlier” in the x direction This is called an influential observation. Outliers and Influential Observations • An influential observation is a score which is far from the other data points in the x direction. • Note that it should still be close to the regression line, otherwise we would label it an outlier. • An influential observation is a score that is extreme in the x direction with no other points around it. • These values will pull the regression line towards itself. Outliers and Influential Observations Outliers and Influential Observations • An influential observation is a score which is far from the other data points in the x direction. • Note that it should still be close to the regression line, otherwise we would label it an outlier. • An influential observation is a score that is extreme in the x direction with no other points around it. • These values will pull the regression line towards itself. So, an observation is influential if removing it would markedly change the result of the calculation. Influential Observations Q: How can we check data for influential observations ? A1 : residuals ? A2 : Scatterplot ? Sort of. A3 : Remove the point and see what happens ?