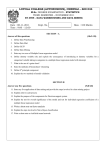

NEHRU ARTS AND SCIENCE COLLEGE
T.M PALAYAM, COIMBATORE
PG & RESEARCH DEPARTMENT OF COMPUTER SCIENCE
QUESTION BANK
CLASS: III B. Sc (CS)
SUBJECT NAME: DATA MINING

UNIT-1

SECTION-A ONE MARKS:

1. The Data accessed is usually a different version from that of the original operational database.
   a) Query b) Data c) Output d) Model
2. The Output of the data mining query probably is not a subset of the database.
   a) Query b) Data c) Output d) Model
3. A Predictive model makes a prediction about values of data using known results found from different data.
   a) Predictive model b) Descriptive model c) Both a & b d) None
4. A Descriptive model identifies patterns or relationships in data.
   a) Predictive model b) Descriptive model c) Both a & b d) None
5. Classification maps data into predefined groups or classes.
   a) Classification b) Regression c) Prediction d) Time series
6. Regression is used to map a data item to a real-valued prediction variable.
   a) Classification b) Regression c) Prediction d) Time series
7. Clustering is similar to classification except that the groups are not predefined.
   a) Regression b) Clustering c) Association d) Summarization
8. Summarization maps data into subsets with associated simple descriptions.
   a) Query b) Model c) Summarization d) Association
9. Link analysis is alternatively referred to as Affinity analysis.
   a) Clustering b) Prediction c) Link analysis d) None
10. Sequential analysis is also known as Sequence discovery.
   a) Selection b) Sequence analysis c) Preprocessing d) Data mining
11. Both a & b are used to determine sequential patterns in data.
   a) Sequence analysis b) Sequence discovery c) Both a & b d) None
12. KDD stands for Knowledge Discovery in Databases.
   a) Knowledge Discovery in Databases b) Knowledge Detection in Databases c) Knowledge Discovery in Data mining d) Knowledge Domain in Databases
13. KDD is the process of finding useful information and patterns in data.
   a) CAD b) DTD c) KDD d) CD
14. The KDD process consists of 5 steps.
   a) 3 b) 4 c) 5 d) 6
15. Obtaining the data needed for the data mining process from many different & heterogeneous data sources is Selection.
   a) Transformation b) Data mining c) Selection d) Evaluation
16. Converting the data from different sources into a common format for processing is Transformation.
   a) Transformation b) Data mining c) Selection d) Evaluation
17. Handling incorrect or missing data in the data to be used by the process is Pre-processing.
   a) Transformation b) Data mining c) Selection d) Pre-processing
18. Visualization refers to the visual presentation of data.
   a) Graphical b) Icon based c) Visualization d) Pixel based
19. Geometric techniques include the box plot and scatter diagram techniques.
   a) Graphical b) Icon based c) Visualization d) Geometric
20. Attributes in the database that are not of interest to the data mining task being developed are Irrelevant data.
   a) Missing data b) Irrelevant data c) Multimedia data d) None
21. A conventional database schema composed of many different attributes exhibits High dimensionality.
   a) High dimensionality b) Low dimensionality c) Medium dimensionality d) All of these
22. Outliers are data entries that do not fit nicely into the derived model.
   a) Large dataset b) Outliers c) Selection d) Application
23. A large database can be viewed as using Approximation.
   a) Large dataset b) Outliers c) Selection d) Approximation
24. In segmentation, a database is partitioned into disjoint groupings of similar tuples called Segments.
   a) Segments b) Association c) Dimensional d) Outliers
25. Data mining consists of 3 parts.
   a) 3 b) 4 c) 5 d) 6

SECTION-B 5 MARKS:

1. Write a short note on Data mining vs. Knowledge discovery in databases.
2. Write a short note on Development of Data mining.
3. Write a short note on Summarization.
4. Write a short note on Sequence Discovery.
5. Write a short note on Social implications of data mining.

SECTION-C 8 MARKS:

1. Explain in detail about Data mining from a database perspective.
2. Explain in detail about i) Classification ii) Regression iii) Time series analysis.
3. Explain in detail about i) Prediction ii) Clustering iii) Association Rules.
4. Explain in detail about Data mining Issues.
5. Explain in detail about Data mining Metrics.

UNIT-2

SECTION-A ONE MARKS:

1. A Parametric model describes the relationship between input & output through the use of algebraic equations.
   a) Parametric model b) Non-parametric model c) Both a & b d) None
2. The Squared error is often examined for a specific prediction to measure accuracy rather than to look at the average difference.
   a) RMS b) Squared error c) Unbiased d) Biased
3. RMS stands for Root Mean Square.
   a) Root Mean Square b) Root Median Square c) Range Mean Square d) Range Median Square
4. The RMS may also be used to estimate error or as another statistic to describe a distribution.
   a) RMS b) Squared error c) Unbiased d) Biased
5. Point estimation refers to the process of estimating a population parameter.
   a) Parametric model b) Non-parametric model c) Both a & b d) Point estimation
6. MLE stands for Maximum Likelihood Estimate.
   a) Maximum Likelihood Estimate b) Maximum Likelihood Effort c) Maximum Likelihood Error d) Maximum Likelihood Extent
7. The Expectation Maximization algorithm is an approach that solves the estimation problem with incomplete data.
   a) RMS b) Squared error c) Unbiased d) Expectation Maximization
8. A Frequency distribution provides an even better model of data.
   a) Histogram b) Frequency distribution c) Both a & b d) None
9. Hypothesis testing attempts to find a model that explains the observed data by first creating a hypothesis.
   a) Alternative hypothesis b) Hypothesis testing c) Both a & b d) None
10. Correlation can be used to evaluate the strength of a relationship between two variables.
   a) Linear b) Correlation c) Hypothesis d) RMS
11. Linear regression assumes that a linear relationship exists between the input data and the output data.
   a) Linear regression b) Correlation c) Hypothesis d) RMS
12. A Decision tree is a predictive modeling technique used in classification tasks.
   a) Decision tree b) Correlation c) Input database d) Binary search
13. A Decision tree is a tree where the root and each internal node is labeled with a question.
   a) Input tree b) Output tree c) Decision tree d) All of these
14. A Decision tree consists of 3 parts.
   a) 2 b) 3 c) 4 d) 5
15. Neural networks are also known as Artificial Neural Networks.
   a) Artificial Neural Networks b) Artificial Neural data c) Artificial Network data d) Artificial Neural interface
16. ANN stands for Artificial Neural Networks.
   a) Artificial Neural Networks b) Artificial Neural data c) Artificial Network data d) Artificial Neural interface
17. A neural network consists of 3 parts.
   a) 2 b) 3 c) 4 d) 5
18. An activation function may also be known as a Firing rule.
   a) Firing rule b) Threshold c) Linear d) All of these
19. An activation function is sometimes called Both a & b.
   a) Processing element function b) Squashing function c) Both a & b d) None
20. The linear threshold function is also called Both a & b.
   a) Ramp function b) Piecewise function c) Both a & b d) None
21. Genetic algorithms are examples of evolutionary computing methods and are optimization-type algorithms.
   a) Gaussian law b) Genetic algorithm c) Hyperbolic tangent d) None
22. A Genetic algorithm is a computational model consisting of 5 parts.
   a) 3 b) 4 c) 5 d) 6
23. The precise algorithm that indicates how to combine the given set of individuals to produce new ones is the Crossover algorithm.
   a) Crossover algorithm b) Genetic algorithm c) Hyperbolic tangent d) None
24. A Linear activation function produces a linear output value based on the input.
   a) Linear b) Threshold c) Activation d) Genetic algorithm
25. A neural network consists of 2 parts.
   a) 2 b) 3 c) 4 d) 5

SECTION-B 5 MARKS:

1. Write a short note on Point estimation.
2. Write a short note on Models based on summarization.
3. Write a short note on Bayes Theorem.
4. Write a short note on Hypothesis Testing.
5. Write a short note on Regression & Correlation.

SECTION-C 8 MARKS:

1. Explain in detail about Similarity measures.
2. Explain in detail about Decision trees.
3. Explain in detail about Neural networks.
4. Explain in detail about Activation functions.
5. Explain in detail about Genetic algorithms.

UNIT-3

SECTION-A ONE MARKS:

1. Regression problems deal with estimation of an output value based on input values.
   a) Classification b) Data Mining c) Regression d) Statistical
2. ROC stands for Both a & b.
   a) Relative Operating Characteristic b) Receiver Operating Characteristic c) Both a & b d) None
3. KNN stands for K Nearest Neighbors.
   a) K Nearest Neighbors b) K Notification Neighbors c) K Notation Neighbors d) None
4. CART is a technique that generates a binary decision tree.
   a) KNN b) CART c) ROC d) RRC
5. RBF stands for Both a & b.
   a) Radial Function b) Radial Basis Function c) Both a & b d) None
6. RBF is a class of functions whose value decreases with the distance from a central point.
   a) RBF b) KNN c) CART d) ROC
7. A Perceptron is a single neuron with multiple inputs & one output.
   a) Perceptron b) Rule based algorithm c) Generating Rules d) None
8. Multiple Independent approaches can be applied to a classification problem.
   a) Multiple Dependent b) Multiple Independent c) Both a & b d) None
9. DCS stands for Dynamic Classifier Selection.
   a) Data Classifier Selection b) Date Class Selection c) Dynamic Classifier Selection d) Dynamic Class Selection
10. AVC stands for Attribute Value Class.
   a) Attribute Value Class b) Attribute Virtual Class c) Attribute Virtual Collections d) Attribute Value Collections
11. CART stands for Classification & Regression Trees.
   a) Class & Regression Trees b) Classification & Regression Trees c) Class & Rotational Trees d) Classification & Rotational Trees
12. A Subtree is replaced by a leaf node if this replacement results in an error rate close to that of the original tree.
   a) Selection Tree b) Subtree c) Regression Tree d) None
13. The ID3 technique for building a decision tree is based on information theory & attempts to minimize the expected number of comparisons.
   a) ID2 b) ID3 c) Both a & b d) None
14. A Tuple is classified based on the region into which it falls.
   a) Tuple b) Decision Tree c) Subtree d) Classification
15. Dividing the data into regions based on class is Division.
   a) Division b) Prediction c) Tuple d) Tree
16. Generating formulas to predict the output class value is Prediction.
   a) Division b) Prediction c) Tuple d) Tree
17. Classification accuracy is usually calculated by determining the percentage of tuples placed in the correct class.
   a) Classification b) Division c) Trees d) Prediction
18. Missing Data values cause problems during both the training phase & the classification process.
   a) Decision tree b) Missing tree c) Classification tree d) Prediction tree
19. Missing Data in the training data must be handled & may produce an inaccurate result.
   a) Decision tree b) Missing tree c) Classification tree d) Prediction tree
20. There are 3 methods used to solve the classification problem.
   a) 2 b) 3 c) 4 d) 5
21. The Logistic curve gives a value between 0 & 1, so it can be interpreted as the probability of class membership.
   a) Plain curve b) Logistic curve c) Linear curve d) Non-linear curve
22. Regression can be used to perform classification using 2 approaches.
   a) 2 b) 3 c) 4 d) 5
23. The common classification scheme based on the use of distance measures is KNN.
   a) KNN b) CART c) SRT d) ROC
24. Solving the classification problem using decision trees is a 2-step process.
   a) 2 b) 3 c) 4 d) 5
25. Pruning removes redundant comparisons or removes subtrees to achieve better performance.
   a) Pruning b) KNN c) Training tree d) Decision tree

SECTION-B 5 MARKS:

1. Write a short note on Issues in classification.
2. Write a short note on Regression.
3. Write a short note on Bayesian classification.
4. Write a short note on Simple approach.
5. Write a short note on K Nearest Neighbors.

SECTION-C 8 MARKS:

1. Explain in detail about Decision tree based algorithms.
2. Explain in detail about i) ID3 ii) C4.5.
3. Explain in detail about Neural Network based algorithms.
4. Explain in detail about i) CART ii) Scalable DT techniques.
5. Explain in detail about Rule based algorithms.

UNIT-4

SECTION-A ONE MARKS:

1. Clustering is similar to classification in that data are grouped.
   a) Records b) Clustering c) Grouping d) Database Segmentation
2. Dynamic data in the database implies that cluster membership may change over time.
   a) Static data b) Dynamic data c) Both a & b d) None
3. Outliers are sample points with values much different from those of the remaining set of data.
   a) Outliers b) Hierarchical data c) Static data d) Dynamic data
4. Outlier detection is also known as Outlier Mining.
   a) Outlier Method b) Outlier Mining c) Outlier Methodology d) None
5. Outlier detection is the process of identifying outliers in a set of data.
   a) Outlier Method b) Outlier Mining c) Outlier Methodology d) None
6. Agglomerative Algorithms start with each individual item in its own cluster & iteratively merge clusters.
   a) Agglomerative Algorithm b) Divisive Algorithm c) Partitional Algorithm d) Clustering Algorithm
7. The Single link technique is based on the idea of finding maximal connected components in a graph.
   a) Single link b) Multi link c) Scatter link d) Partition link
8. MST stands for Minimum Spanning Tree.
   a) Minimum Spanning Tree b) Minimum Spanning Task c) Minimum Spanning Technique d) Minimum Spanning Tendency
9. A Clique is a maximal graph in which there is an edge between any two vertices.
   a) Clique b) Outliers c) Mining d) Spanning tree
10. Partitional clustering creates the clusters in one step as opposed to several steps.
   a) Agglomerative Algorithm b) Divisive Algorithm c) Partitional Algorithm d) Clustering Algorithm
11. K-Means clustering is an iterative clustering algorithm in which items are moved among sets of clusters.
   a) K-Means clustering b) Agglomerative Algorithm c) Divisive Algorithm d) Partitional Algorithm
12. PAM stands for Partitioning Around Medoids.
   a) Problem Around Medoids b) Problem Associate Medoids c) Partitioning Around Medoids d) Partitioning Around Methods
13. PAM is also known as the K-Medoids algorithm.
   a) Problem Around Medoids b) Problem Associate Medoids c) Partitioning Around Medoids d) K-Medoids Algorithm
14. BEA stands for Bond Energy Algorithm.
   a) Bond Energy Algorithm b) Bond Estimate Algorithm c) Both a & b d) None
15. Neural Networks that use unsupervised learning attempt to find features in the data that characterize the desired output.
   a) Neural network b) Bond energy network c) Organizing map d) None
16. SOFM stands for Self Organizing Feature Maps.
   a) Self Organizing Feature Maps b) Self Orient Feature Method c) Self Orient Feature Mapping d) Service Orient Feature Maps
17. BIRCH is designed for clustering a large amount of metric data.
   a) SOM b) SOFM c) BIRCH d) BEA
18. DBSCAN creates clusters with a minimum size & density.
   a) DBSCAN b) SOFM c) BIRCH d) BEA
19. The CURE algorithm is designed to handle outliers.
   a) CURE b) SOFM c) BIRCH d) BEA
20. The ROCK algorithm is divided into 3 parts.
   a) 2 b) 3 c) 4 d) 5
21. ROCK is targeted to both Boolean data & categorical data.
   a) ROCK b) SOFM c) BIRCH d) BEA
22. SOFM is also known as SOM.
   a) BIRCH b) BEA c) SOM d) ROCK
23. A Partitional algorithm is also known as a Non-hierarchical algorithm.
   a) Hierarchical b) Non-hierarchical c) Both a & b d) None
24. Clustering can be divided into 4 algorithms.
   a) 2 b) 3 c) 4 d) 5
25. Hierarchical algorithms can be divided into 2 algorithms.
   a) 2 b) 3 c) 4 d) 5

SECTION-B 5 MARKS:

1. Write a short note on Similarity & Distance measures.
2. Write a short note on Outliers.
3. Write a short note on the Bond energy algorithm.
4. Write a short note on the Nearest neighbor algorithm.
5. Write a short note on the Minimum spanning tree.

SECTION-C 8 MARKS:

1. Explain in detail about the Squared error clustering algorithm.
2. Explain in detail about K-means clustering.
3. Explain in detail about the PAM Algorithm.
4. Explain in detail about Hierarchical Algorithms.
5. Explain in detail about clustering with genetic algorithms.

UNIT-5

SECTION-A ONE MARKS:

1. Association rules are frequently used by retail stores to assist in marketing.
   a) Association rule b) Large item set c) Apriori d) None
2. A Large item set is an item set whose number of occurrences is above a threshold.
   a) Association rule b) Large item set c) Apriori d) None
3. The Apriori algorithm is the most well-known association rule algorithm & is used in commercial products.
   a) Apriori b) Association c) Large item set d) None
4. The Partitioning algorithm is able to adapt better to limited main memory.
   a) Apriori b) Association c) Large item set d) Partitioning algorithm
5. Data parallelism is also known as Task parallelism.
   a) Task parallelism b) Increment parallelism c) Both a & b d) None
6. Distributed association rule algorithms that strive to parallelize the data exhibit what is known as Data parallelism.
   a) Task parallelism b) Increment parallelism c) Data parallelism d) None
7. CDA stands for Count Distribution Algorithm.
   a) Count Distribution Algorithm b) Count Distribution Attributes c) Cost Distribution Algorithm d) Cost Distribution Attributes
8. In Task parallelism, the candidates are partitioned & counted separately at each processor.
   a) Task parallelism b) Increment parallelism c) Both a & b d) None
9. DDA stands for Data Distribution Algorithm.
   a) Data Distribution Algorithm b) Domain Distribution Algorithm c) Data Distribution Attribute d) Digital Distribution Algorithm
10. The Data Distribution Algorithm demonstrates task parallelism.
   a) Data Distribution Algorithm b) Domain Distribution Algorithm c) Data Distribution Attribute d) Digital Distribution Algorithm
11. The investigation that has been limited to the use of association rules for market basket data relates to the Data Source.
   a) Task Source b) Data Source c) Both a & b d) None
12. The most common data structure used to store the candidate item sets & their counts is the Hash tree.
   a) Task Source b) Data Source c) Hash tree d) All of these
13. A Hash tree provides an efficient technique to store, access, & count item sets.
   a) Task source b) Hash tree c) Data source d) None
14. Incremental updating approaches address the issue of how to modify the association rules as updates are performed on the database.
   a) Single level b) Multi level c) Incremental updating d) Apriori
15. A variation of generalized rules is Multiple-level association rules.
   a) Single level b) Multiple level c) Incremental updating d) Apriori
16. A quantitative association rule is one that involves Both a & b.
   a) Categorical data b) Quantitative data c) Both a & b d) None
17. A Correlation rule is defined as a set of item sets that are correlated.
   a) Correlation rules b) Correlation task c) Correlation task d) Data Mining
18. The problem addressed by multiple minimum supports is the Rare item problem.
   a) Rare item b) Data item c) Categorical d) All of these
19. In Multiple-level association rules, the item sets may occur from any level in the hierarchy.
   a) Single level b) Multiple level c) Incremental updating d) Apriori
20. The Sampling algorithm facilitates counting of item sets with large databases.
   a) Task source b) Hash tree c) Data source d) Sampling
21. An algorithm called Apriori-gen is used to generate the candidate item sets for each pass.
   a) Apriori-gen b) Association c) Large item set d) Partitioning algorithm
22. Large item sets are also said to be Downward closed.
   a) Upward closed b) Downward closed c) Both a & b d) None
23. Finding large item sets is quite easy, but the cost is High.
   a) High b) Low c) Very high d) Very low
24. A database in which an association rule is to be found is viewed as a set of Tuples.
   a) Tuples b) Date item set c) Apriori d) All of these
25. The basic idea of the Apriori algorithm is to generate candidate item sets of a particular size & then scan the database to count these to see if they are large.
   a) Apriori b) Association c) Partitioning d) None

SECTION-B 5 MARKS:

1. Write a short note on Large Item sets.
2. Write a short note on Incremental Rules.
3. Write a short note on Measuring the quality of rules.
4. Write a short note on Comparing Approaches.
5. Write a short note on the Apriori algorithm.

SECTION-C 8 MARKS:

1. Explain in detail about Basic Algorithms.
2. Explain in detail about Parallel & Distributed Algorithms.
3. Explain in detail about Generalized Association Rules.
4. Explain in detail about Quantitative Association Rules.
5. Explain in detail about Multiple-level Association Rules.
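
Illustration for UNIT-5 (Section A, questions 21, 22 and 25): the Apriori idea of generating candidate item sets of one size, scanning the database to count them, and keeping only the large ones can be sketched in a few lines of Python. This is a minimal sketch for revision purposes, not part of the question bank itself. The function name `apriori`, the use of an absolute count for `min_support`, and the list-of-sets representation of the database are assumptions made for the example.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {item set: count} for every large item set.

    transactions: iterable of collections of items (the database of tuples).
    min_support:  minimum number of transactions that must contain an
                  item set for it to be large (an absolute count, assumed here).
    """
    transactions = [frozenset(t) for t in transactions]
    # Pass 1: every single item is a candidate.
    candidates = {frozenset([item]) for t in transactions for item in t}
    large = {}
    k = 1
    while candidates:
        # Scan the database and count how many transactions contain each candidate.
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        current = {c: n for c, n in counts.items() if n >= min_support}
        large.update(current)
        # Candidate generation for the next pass: join large k-item sets into
        # (k+1)-item sets, then keep only those whose every k-item subset is
        # large (large item sets are downward closed).
        k += 1
        joined = {a | b for a in current for b in current if len(a | b) == k}
        candidates = {
            c for c in joined
            if all(frozenset(s) in current for s in combinations(c, k - 1))
        }
    return large
```

Calling `apriori(db, 2)` on a small market basket database returns a dictionary mapping each large item set (as a `frozenset`) to its count. Real implementations usually store the candidates in a hash tree rather than a plain set, which this sketch leaves out for brevity.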