The Learnability of Quantum States

... “If the possible hypotheses have sufficiently fewer bits than the data you’ve collected, and if one of those hypotheses succeeds in explaining your data, then that hypothesis will probably also explain most of the data you haven’t collected.” In particular: if you want to output a hypothesis from set ...

Document

... physical paths will be paths of extremal size change. We’ll add a function just to make it interesting: ...

Here - Millersville University

... Do voters make judgments about political candidates based on their facial appearance? Can you correctly predict the outcome of an election, more often than not, simply by choosing the candidate whose face is judged to be more competent-looking? Researchers investigated this question in a study publ ...

Optional Stopping Theorem. 07/27/2011

... yourself from this game. It is also called a stopping time. You can decide whether to exit the game at moment n based only on the past: the values of X_1, ..., X_n, which you already know by this moment. Formally, the event {τ = n} depends only on X_1, ..., X_n. So we consi ...

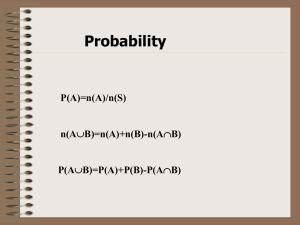

Number of times resulting in event / Total number of times experiment

... Law of Large Numbers states that as an experiment is repeated many times the empirical probability will approach the theoretical probability. Properties of probability 1. The probability of an event that can never occur is 0. 2. The probability of an event that will always occur is 1. 3. The probabi ...
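The Law of Large Numbers statement in this excerpt can be illustrated with a small simulation. This is a minimal sketch, not taken from the linked document: the fair six-sided die, the event "roll a six", and the function name `empirical_probability` are all assumptions chosen for illustration.

```python
import random


def empirical_probability(trials, seed=0):
    """Fraction of `trials` rolls of a fair six-sided die that show a six.

    The die and the event are illustrative assumptions; the theoretical
    probability of the event is 1/6.
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same rolls
    hits = sum(1 for _ in range(trials) if rng.randint(1, 6) == 6)
    return hits / trials


if __name__ == "__main__":
    theoretical = 1 / 6
    for n in (100, 10_000, 200_000):
        estimate = empirical_probability(n)
        print(f"n={n:>7}  empirical={estimate:.4f}  "
              f"|error|={abs(estimate - theoretical):.4f}")
```

With more trials, the printed error typically shrinks toward zero, which is the behavior the excerpt describes.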

Chapter.14.Reading.Guide

... Formal Probability 1.) If the probability is 0, the event can’t occur, and likewise if it has probability 1, it always occurs. A probability is a number between 0 and 1. For any event A, 0 ≤ P(A) ≤ 1. 2.) If a random phenomenon has only one possible outcome, it’s not very interesting (or very random ...

The Scientific Method (Powerpoint)

... dry air meeting warm, wet air then we can predict when and where it will rain, by tracking air currents, temperature, and moisture. ...

Some Inequalities and the Weak Law of Large Numbers

... Thus the chance that Y deviates from its mean by more than k standard deviations is less than 1/k² for any random variable Y. For k = 1 this is non-informative, since k² = 1. For k = 2 this is 0.25 – in other words, the chance is less than a quarter that Y deviates by more than 2 standard deviati ...
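The 1/k² bound in this excerpt (Chebyshev's inequality) can be checked numerically. The sketch below is an illustration under stated assumptions, not code from the linked document: the exponential sample, the sample size, and the helper name `tail_fraction` are hypothetical choices. Because the mean and standard deviation are computed from the sample itself, the bound holds exactly for the sample's empirical distribution.

```python
import random
import statistics


def tail_fraction(samples, k):
    """Fraction of samples lying more than k standard deviations from the mean.

    Uses the sample mean and the population standard deviation of the sample,
    so the result describes the sample's own empirical distribution.
    """
    mu = statistics.fmean(samples)
    sigma = statistics.pstdev(samples)
    return sum(1 for y in samples if abs(y - mu) > k * sigma) / len(samples)


# Assumed test data: a skewed (exponential) sample, seeded for repeatability.
rng = random.Random(0)
samples = [rng.expovariate(1.0) for _ in range(20_000)]

if __name__ == "__main__":
    for k in (1, 2, 3):
        print(f"k={k}  observed tail={tail_fraction(samples, k):.4f}  "
              f"bound 1/k^2={1 / k**2:.4f}")
```

For most distributions the observed tail is far below the bound, which is why the inequality is valued for its generality rather than its tightness.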
