A Critical Review of the Notion of the Algorithm in Computer Science

... It computed one step at a time, was by practical necessity limited to a finite number of steps, and was limited to a finite number of exactly formulated hypotheses (instructions). The electronic digital computer was an incarnation of the mathematician’s effective solution procedure. The mathematicians ...

Chapter 8: Dynamic Programming

... Example 2: Coin-row problem. There is a row of n coins whose values are some positive integers c₁, c₂, ..., cₙ, not necessarily distinct. The goal is to pick up the maximum amount of money subject to the constraint that no two coins adjacent in the initial row can be picked up. E.g.: 5, 1, 2, 10, 6, 2 ...
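
The coin-row problem stated in this snippet can be sketched with the standard recurrence F(i) = max(F(i-1), F(i-2) + cᵢ), with F(0) = 0. The function name `coin_row` is a hypothetical choice for illustration and does not come from the source chapter; this is a minimal sketch, not the chapter's own pseudocode.

```python
def coin_row(coins):
    """Return the maximum total obtainable without taking two adjacent coins."""
    # prev2 holds F(i-2), prev1 holds F(i-1); both start at F(0) = 0.
    prev2, prev1 = 0, 0
    for c in coins:
        # Either skip coin c (keep F(i-1)) or take it on top of F(i-2).
        prev2, prev1 = prev1, max(prev1, prev2 + c)
    return prev1


if __name__ == "__main__":
    # The example row from the snippet.
    print(coin_row([5, 1, 2, 10, 6, 2]))  # 17 (coins 5, 10, 2)
```

Keeping only the last two values of F makes the sketch run in O(n) time and O(1) extra space.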

Exam and Answers for 1999/00

... In addition, when the goal state is reached (R, after expanding O), there is still a lower cost node (L). This needs to be expanded to ensure it does not lead to a lower cost solution. In fact, the goal state, in the way the algorithm was presented in the lectures, would not be recognised as it woul ...

External Memory Value Iteration

... on large state spaces that cannot fit into the RAM. We call the new algorithm External Value Iteration. Instead of working on states, we chose to work on edges for reasons that shall become clear soon. In our case, an edge is a 4-tuple (u, v, a, h(v)) where u is called the predecessor state, v the s ...

1 Divide and Conquer with Reduce

... Thus far, we made the assumption that the combine function has constant cost (i.e., both its work and span are constant), allowing us to state the cost specifications of reduce on a sequence of length n simply as O(n) work and O(log n) span. While this bound applies readily to problems such as the one fo ...
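
A divide-and-conquer reduce of the kind this snippet analyses might look like the sketch below. The name `reduce_dc` and its parameters are assumptions made for illustration, not the notation of the source notes. The code runs sequentially; the two recursive calls are independent, which is what would give O(log n) span on a parallel machine when `combine` has constant cost.

```python
import operator


def reduce_dc(combine, identity, seq, lo=0, hi=None):
    """Reduce seq[lo:hi] with an associative combine by splitting in half."""
    if hi is None:
        hi = len(seq)
    n = hi - lo
    if n == 0:
        return identity
    if n == 1:
        return seq[lo]
    mid = lo + n // 2
    # The two halves do not depend on each other, so they could run in parallel.
    left = reduce_dc(combine, identity, seq, lo, mid)
    right = reduce_dc(combine, identity, seq, mid, hi)
    return combine(left, right)


if __name__ == "__main__":
    print(reduce_dc(operator.add, 0, list(range(1, 11))))  # 55
```

Passing index bounds instead of slicing keeps the total work at O(n) calls to `combine`; slicing would copy the sequence at every level of the recursion.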

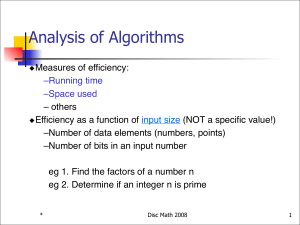

Analysis of Algorithms

... 2. Ω() is used for lower bounds: “grows at least as fast as”. 3. Θ() is used for matching upper and lower bounds: “grows as fast as”. These are bounds on running time, not on the problem. The rules of thumb for getting the running time are: 1. Throw away all terms other than the most significant one -- Calcu ...

M211 (ITC450 earlier)

... When developing software it is important to know how to solve problems in a computationally efficient way. Algorithms describe methods for solving problems under the constraints of the computer's resources. Often the goal is to compute a solution as fast as possible, using as few resources as possibl ...

REVISITING THE INVERSE FIELD OF VALUES PROBLEM

... An analytic approach using the Lagrange multipliers formalism also makes sense; however, this is only feasible for low dimensions. We are interested in finding solution vectors in cases of dimensions larger than those where algebraic or analytic methods can provide one, such as n being of the order ...

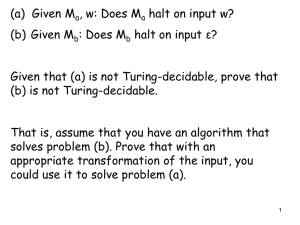

Lecture 17

... Show that if x is a yes instance for Π1, then R(x) is a yes instance for Π2. Show that if x is a no instance for Π1, then R(x) is a no instance for Π2; this is often done by showing that if R(x) is a yes instance for Π2, then x must have been a yes instance for Π1 ...

Hidden Markov Model Cryptanalysis

... Compute a separate inference of the key K for each trace: Kr for trace r. For trace r+1, use the posterior distribution of keys Pr[Kr | yr] as input. We “propagate” biases derived in prior trace analyses to the following trace ...

T(n)

... When looking for a recursive solution, it is paradoxically often easier to work with a more general version of the problem. ...

Performance analysis and optimization of parallel Best

... combinatorial optimization problems, such as optimal route planning, robot navigation, and optimal sequence alignments, among others (Russell & Norvig, 2003). One of the most widely used heuristic search algorithms for that purpose is A* (Hart, et al., 1968), a variant of Best-First Search, which requir ...

Lecture 4 — August 14 4.1 Recap 4.2 Actions model

... Next, we obtain a high-probability regret bound for the randomized ExpWeights algorithm. To derive the result, we use the Azuma-Hoeffding inequality, which is a generalization of the Chernoff bound. Before we state the theorem, we need to define a martingale difference sequence. Let X1, X2, ... be a sequen ...

FAST Lab Group Meeting 4/11/06

... • There exists a generalization (Gray and Moore NIPS 2000; Riegel, Boyer, and Gray TR 2008) of the Fast Multipole Method (Greengard and Rokhlin 1987) which: – specializes to each of these problems – is the fastest practical algorithm for these problems – elucidates general principles for such proble ...

124370-hw2-1-

... 9. [3 pts] Given the input array [40, 10, 30, 80, 5, 60], show it as a “heap” (in binary tree form). Note that this initial “heap” may not satisfy the max-heap property. ...
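
The array-to-tree view this question relies on can be sketched as follows, assuming the usual 0-based layout in which the children of index i sit at 2i+1 and 2i+2. The helper names `heap_levels` and `is_max_heap` are hypothetical and not part of the assignment.

```python
def heap_levels(a):
    """Split an array into the levels of its implicit complete binary tree."""
    levels, start, width = [], 0, 1
    while start < len(a):
        levels.append(a[start:start + width])
        start += width
        width *= 2  # each level holds twice as many nodes as the one above
    return levels


def is_max_heap(a):
    """Check that every node is no larger than its parent at (i - 1) // 2."""
    return all(a[(i - 1) // 2] >= a[i] for i in range(1, len(a)))


if __name__ == "__main__":
    arr = [40, 10, 30, 80, 5, 60]
    for level in heap_levels(arr):
        print(level)
    print(is_max_heap(arr))  # False: 80 is larger than its parent 10
```

For the array in the question, the levels are [40], then [10, 30], then [80, 5, 60], which confirms that the initial tree is not yet a max-heap.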

dynamic price elasticity of electricity demand

... corresponding to logarithmic demand function is given by: the initial and the final load (kW) per hour, ...

Self-Improving Algorithms Nir Ailon Bernard Chazelle Seshadhri Comandur

... distribution, the algorithm should be able to spot that and learn to be more efficient. The obvious analogy is data compression, which seeks to exploit low entropy to minimize encoding size. The analogue of Shannon’s noiseless coding theorem would be here: Given an unknown distribution D, design a s ...
