Entropy And Entropy-based Features In Signal Processing K

... Entropy (or an entropy-based feature) can be computed from any finite set of values, e.g. a parametric vector, a discrete spectral density estimate, or directly from a segment of a digital signal. We used the following algorithms to compute the entropy: ...
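The snippet above is cut off before it names the algorithms the authors used, so the following is only a minimal sketch of one common approach (Shannon entropy), not their method. It assumes amplitudes are binned into a fixed number of equal-width bins; the function names and the `n_bins` parameter are hypothetical and chosen for illustration.

```python
import math
from collections import Counter


def shannon_entropy(probabilities):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    # Zero-probability outcomes contribute nothing, so they are skipped.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def histogram_entropy(segment, n_bins=8):
    """Entropy estimate for a signal segment (assumption: equal-width amplitude bins)."""
    lo, hi = min(segment), max(segment)
    if hi == lo:
        return 0.0  # a constant segment carries no uncertainty
    width = (hi - lo) / n_bins
    # Map each sample to a bin index; the maximum value goes in the last bin.
    counts = Counter(min(int((x - lo) / width), n_bins - 1) for x in segment)
    total = len(segment)
    return shannon_entropy(c / total for c in counts.values())


def normalized_entropy(weights):
    """Entropy of non-negative weights (e.g. a discrete spectral density
    estimate) after normalising them to sum to 1."""
    total = sum(weights)
    return shannon_entropy(w / total for w in weights)
```

For instance, `normalized_entropy` can be applied to the bins of a spectral density estimate, and `histogram_entropy` to a raw segment of samples. The result of the histogram estimate depends on the chosen bin count.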

Entropy

... Depending on the topic and the context in which it is being used, the term entropy has been used to describe any of numerous phenomena. The word entropy was introduced in 1865 by Rudolf Clausius, a German physicist. Two main areas, thermodynamic entropy (including statistical mechanics) and informat ...

Problem Set 5 - 2004

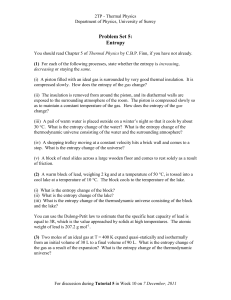

... You should read Chapter 5 of Thermal Physics by C.B.P. Finn, if you have not already. (1) For each of the following processes, state whether the entropy is increasing, decreasing or staying the same. (i) A piston filled with an ideal gas is surrounded by very good thermal insulation. It is compresse ...

PY2104 - Introduction to thermodynamics and Statistical physics

... where a and R are constants. Find the specific heat at constant pressure, $c_p$. 7) Consider a thermally isolated system, consisting of two parts, A and B, separated by a thermally conducting and movable partition. The volume of part A is V and that of part B is 2V. The system is filled with ideal gas ...

Lecture 4

... with $n_1, n_2, \ldots$ integers, so that $p_1 = n_1 \, 2\pi\hbar/L = n_1 h/L$. The allowed momentum states form a “cubic” lattice in $3N$-dimensional momentum space with cube edge $h/L$, and so the volume of phase space per state is $(h/L)^{3N} L^{3N} = h^{3N}$. The factor of $N!$ arises because from quantum mechanics we recogniz ...

entropy - Helios

... temperature of one mole of a monatomic ideal gas 1 degree K in a constant volume process? How much heat does it take to change the temperature of one mole of a monatomic ideal gas 1 degree K in a constant pressure process? ...

Lecture #6 09/14/04

... What are the least probable numbers? What are the odds of getting the least probable numbers? So as we increase the number of identical particles, the probability of seeing extreme events decreases. ...

thus

... in the average energy of the particles (i.e. an increase in the value of U/n), so that for fixed values of V and n, U will increase. Also, as T increases the value of β decreases and the shape of the exponential distribution changes, as shown in figure (4.3). As the macrostate of the system is ...

Thermodynamics

... Section 19.3 Molecules can undergo three kinds of motion: In translational motion the entire molecule moves in space. Molecules can also undergo vibrational motion, in which the atoms of the molecule move toward and away from one another in periodic fashion, and rotational motion, in which the entir ...

Lecture 5 Entropy

... Given some information or constraints about a random variable, we should choose that probability distribution for it, which is consistent with the given information, but has otherwise maximum uncertainty associated with it. ...
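As a small worked illustration of the principle stated above (a standard textbook case, not taken from the lecture itself), consider a discrete variable with $n$ outcomes where the only constraint is normalisation. The symbols $p_i$, $n$ and the Lagrange multiplier $\lambda$ are introduced here for the sketch.

```latex
\begin{aligned}
&\text{maximise } H(p) = -\sum_{i=1}^{n} p_i \ln p_i
  \quad \text{subject to } \sum_{i=1}^{n} p_i = 1, \\
&\frac{\partial}{\partial p_i}\Big[ H(p) + \lambda \Big( \sum_{j=1}^{n} p_j - 1 \Big) \Big]
  = -\ln p_i - 1 + \lambda = 0
  \;\Longrightarrow\; p_i = e^{\lambda - 1} \ \text{for every } i, \\
&\sum_{i=1}^{n} p_i = 1 \;\Longrightarrow\; p_i = \frac{1}{n},
  \qquad H_{\max} = \ln n .
\end{aligned}
```

With no information beyond normalisation, the maximum-uncertainty choice is therefore the uniform distribution. Additional constraints, such as a fixed mean, would add further multiplier terms and change the result.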

Internal energy is a characteristic of a given state – it is the same no

... Calculate entropy change in phase change or calculate entropy change with small temperature change (approximate with average temperature) Small temp change or small energy transfer between two objects at different temps. Look at total entropy! The equations show that the entropy for a closed system ...

H-theorem

In classical statistical mechanics, the H-theorem, introduced by Ludwig Boltzmann in 1872, describes the tendency of the quantity H (defined below) to decrease in a nearly-ideal gas of molecules. As this quantity H was meant to represent the entropy of thermodynamics (up to sign, so that a decreasing H corresponds to an increasing entropy), the H-theorem was an early demonstration of the power of statistical mechanics, as it claimed to derive the second law of thermodynamics (a statement about fundamentally irreversible processes) from reversible microscopic mechanics. The H-theorem is a natural consequence of the kinetic equation derived by Boltzmann that has come to be known as Boltzmann's equation. The H-theorem has led to considerable discussion about its actual implications, with major themes being: What is entropy? In what sense does Boltzmann's quantity H correspond to the thermodynamic entropy? Are the assumptions (such as the Stosszahlansatz described below) behind Boltzmann's equation too strong? When are these assumptions violated?