Orthogonal matrices, SVD, low rank

... Let v1 be a unit vector such that kAv1 k is maximal and define u1 = Av1 /kAv1 k2 . Then by Cauchy-Schwarz, together with the definition of the 2-norm, we have kAk2 = hAv1 , u1 i = hv1 , A∗ u1 i ≤ kv1 kkA∗ u1 k2 = kA∗ u1 k2 ≤ kA∗ k2 . Now define w1 = A∗ u1 /kA∗ u1 k2 , and use the same argument to ge ...

... Let v1 be a unit vector such that kAv1 k is maximal and define u1 = Av1 /kAv1 k2 . Then by Cauchy-Schwarz, together with the definition of the 2-norm, we have kAk2 = hAv1 , u1 i = hv1 , A∗ u1 i ≤ kv1 kkA∗ u1 k2 = kA∗ u1 k2 ≤ kA∗ k2 . Now define w1 = A∗ u1 /kA∗ u1 k2 , and use the same argument to ge ...

Benjamin Zahneisen, Department of Medicine, University of Hawaii

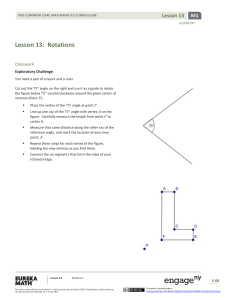

... correction techniques in MRI since it both describes transformations within a given reference frame (i.e changes relative to an initial position) and transformations between reference frames (external tracking devices). The most common description is given in terms of 4x4 homogeneous matrices, where ...

... correction techniques in MRI since it both describes transformations within a given reference frame (i.e changes relative to an initial position) and transformations between reference frames (external tracking devices). The most common description is given in terms of 4x4 homogeneous matrices, where ...

I n - USC Upstate: Faculty

... Definition: Let A and B be two matrices. These matrices are the same, that is, A = B if they have the same number of rows and columns, and every element at each position in A equals the element at corresponding position in B. * This is not trivial if elements are real numbers subject to digital appr ...

... Definition: Let A and B be two matrices. These matrices are the same, that is, A = B if they have the same number of rows and columns, and every element at each position in A equals the element at corresponding position in B. * This is not trivial if elements are real numbers subject to digital appr ...

Overview Quick review The advantages of a diagonal matrix

... The goal in this section is to develop a useful factorisation A = PDP −1 , for an n × n matrix A. This factorisation has several advantages: it makes transparent the geometric action of the associated linear transformation, and it permits easy calculation of Ak for large values of k: ...

... The goal in this section is to develop a useful factorisation A = PDP −1 , for an n × n matrix A. This factorisation has several advantages: it makes transparent the geometric action of the associated linear transformation, and it permits easy calculation of Ak for large values of k: ...

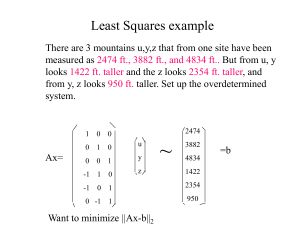

leastsquares

... •Does not require decomposition of matrix •Good for large sparse problem-like PET •Iterative method that requires matrix vector multiplication by A and AT each iteration •In exact arithmetic for n variables guaranteed to converge in n iterations- so 2 iterations for the exponential fit and 3 iterati ...

... •Does not require decomposition of matrix •Good for large sparse problem-like PET •Iterative method that requires matrix vector multiplication by A and AT each iteration •In exact arithmetic for n variables guaranteed to converge in n iterations- so 2 iterations for the exponential fit and 3 iterati ...

Matrix - University of Lethbridge

... • by b. Then the linear system Ax = b has unique solution x = (x1, x2, . . . , xn), ...

... • by b. Then the linear system Ax = b has unique solution x = (x1, x2, . . . , xn), ...

5.6 Using the inverse matrix to solve equations

... Provided you understand how matrices are multiplied together you will realise that these can be written in matrix form as ...

... Provided you understand how matrices are multiplied together you will realise that these can be written in matrix form as ...

![1. Let A = 1 −1 1 1 0 −1 2 1 1 . a) [2 marks] Find the](http://s1.studyres.com/store/data/005284378_1-9abef9398f6a7d24059a09f56fe1ac13-300x300.png)