CS-485: Capstone in Computer Science

... An NN is trained rather than programmed to perform a given task, since it is difficult to separate the hardware and software in its structure. We program not the solution to a task but the ability to learn to solve it ...

PowerPoint - University of Virginia

... • Mapping from s_t to s_{t+Δt} is nonlinear and has a great range – Position and velocity can vary from −∞ to +∞ – Sigmoid can capture nonlinearities, but its range is limited • Could use many sigmoids and shift/scale them to cover the space ...
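
The idea of shifting and scaling many sigmoids to cover a wider range can be sketched as follows. This is a minimal illustration, not the slides' method: the target function f(x) = x, the interval [-10, 10], and the names `sigmoid_sum`, `UNITS`, and `approx_identity` are all assumptions chosen for the example.

```python
import math

def sigmoid(x):
    """Logistic sigmoid; its output is confined to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_sum(x, units, offset=0.0):
    """Weighted sum of shifted and scaled sigmoids.

    units is a list of (height, slope, center) triples (hypothetical layout).
    """
    return offset + sum(h * sigmoid(k * (x - c)) for h, k, c in units)

# Ten units, each adding a smooth step of height 2 centered on an odd
# integer from -9 to 9 (values chosen only for illustration).
UNITS = [(2.0, 2.0, float(c)) for c in range(-9, 10, 2)]

def approx_identity(x):
    """Rough approximation of f(x) = x on [-10, 10]."""
    return sigmoid_sum(x, UNITS, offset=-10.0)

if __name__ == "__main__":
    for x in (-6.0, 0.0, 4.0):
        print(x, round(approx_identity(x), 3))
```

A single sigmoid never leaves (0, 1), but the sum of ten shifted copies spans roughly -10 to 10; more units with smaller steps would give a smoother fit.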

Syllabus P140C (68530) Cognitive Science

... – Even if some units do not work, information is still preserved – because information is distributed across a network, performance degrades gradually as a function of damage – (aka: robustness, fault-tolerance, graceful degradation) ...

Learning about Learning - by Directly Driving Networks of Neurons

... desired behavior? Why does that learning process take time? To tackle questions like these, we reverse the normal order of operations in systems neuroscience: instead of teaching animals a new behavior and then searching for its neural correlate, we specify a neural activity pattern and then through ...

Slide ()

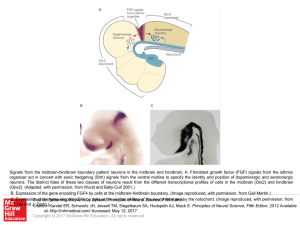

... Signals from the midbrain-hindbrain boundary pattern neurons in the midbrain and hindbrain. A. Fibroblast growth factor (FGF) signals from the isthmic organizer act in concert with sonic hedgehog (Shh) signals from the ventral midline to specify the identity and position of dopaminergic and serotone ...

Slide ()

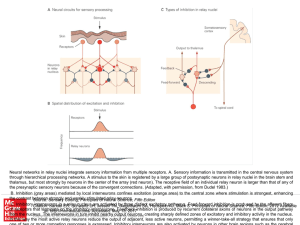

... Neural networks in relay nuclei integrate sensory information from multiple receptors. A. Sensory information is transmitted in the central nervous system through hierarchical processing networks. A stimulus to the skin is registered by a large group of postsynaptic neurons in relay nuclei in the br ...

Artificial Neural Networks

... change according to the input pattern, thus improving the grade of service while maximizing network utilization. Two schemes are considered in this approach ...

Artificial Neural Networks

... splits into thousands of branches. At the end of the branch, a structure called a synapse converts the activity from the axon into electrical effects that inhibit or excite activity in the connected neurons. When a neuron receives excitatory input that is sufficiently large compared with its inhibit ...

download

... small demonstration program written in Java (Java Applet), and a series of questions which are intended as an invitation to play with the programs and explore the possibilities of different algorithms. The aim of the applets is to illustrate the dynamics of different artificial neural networks. Emph ...

hebbRNN: A Reward-Modulated Hebbian Learning Rule for

... Software Archive: http://dx.doi.org/10.5281/zenodo.154745 ...

Experimenting with Neural Nets

... Replicate the table you did for #14 in Practice 1. After experimenting with “Backpropagation” (on the Learning tab), try out “Backprop-momentum”, experimenting with parameters to try and get it to learn. Congratulations, you are doing neural smithing! Write up your experimental results and any concl ...

Neural Networks 2 - Monash University

... The SOM for Data Mining: The SOM is a good method for obtaining an initial understanding of a set of data about which the analyst does not have any opinion (e.g. no need to estimate the number of clusters). The map can be used as an initial unbiased starting point for further analysis. Once the clusters ...

ANN

... is treated as though it were an incomplete or error-ridden version of one of the stored examples. ...
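
One common way to get this behavior is an associative memory that pulls a corrupted input back to the nearest stored example. The sketch below uses a small Hopfield-style network with Hebbian weights; the excerpt does not say which model it describes, so the choice of model, the two stored patterns, and the function names are assumptions made for illustration.

```python
def train_hebbian(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns (zero diagonal)."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j]
    return weights

def recall(weights, probe, max_steps=10):
    """Update all units synchronously until the state stops changing."""
    state = list(probe)
    for _ in range(max_steps):
        new_state = []
        for i, row in enumerate(weights):
            field = sum(w * s for w, s in zip(row, state))
            if field > 0:
                new_state.append(1)
            elif field < 0:
                new_state.append(-1)
            else:
                new_state.append(state[i])  # no net input: keep current value
        if new_state == state:
            break
        state = new_state
    return state

# Two orthogonal example patterns (hypothetical data).
P1 = [1, 1, 1, 1, -1, -1, -1, -1]
P2 = [1, -1, 1, -1, 1, -1, 1, -1]
W = train_hebbian([P1, P2])

if __name__ == "__main__":
    corrupted = [-1] + P1[1:]  # P1 with its first element flipped
    print(recall(W, corrupted) == P1)
```

Here the probe differs from `P1` in one position, and the update rule restores the stored pattern.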

Computational Intelligence in R

... system identification and modeling are required tasks. Effective tools to address these problems are available from Computational Intelligence (CI). It is a field within Artificial Intelligence that has emerged in recent years. This field is concerned with computational methods inspired by nat ...

Slide ()

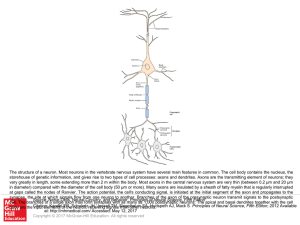

... The structure of a neuron. Most neurons in the vertebrate nervous system have several main features in common. The cell body contains the nucleus, the storehouse of genetic information, and gives rise to two types of cell processes: axons and dendrites. Axons are the transmitting element of neurons; ...

Introduction to ANNs

... a section through an animal retina, one of the first ever visualisations of a neural network produced by Golgi and Cajal who received a Nobel Prize in 1906. You can see roundish neurons with their output axons. Some leave the area (those at the bottom which form the ‘optic nerve’) and other axons inp ...

Neural Oscillators on the Edge: Harnessing Noise to Promote Stability

... Abnormal neural oscillations are implicated in certain disease states, for example repetitive firing of injured axons evoking painful paresthesia, and rhythmic discharges of cortical neurons in patients with epilepsy. In other clinical conditions, the pathological state manifests as a vulnerability ...

NEURAL NETWORKS

... can perform the basic logic operations NOT, OR and AND. As any multivariable combinational function can be constructed using these operations, digital computer hardware of great complexity can be constructed using these simple neurons as building blocks. The above network has its knowledge pre-coded ...
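
The claim that simple neurons can compute NOT, OR and AND can be illustrated with threshold units whose weights are fixed by hand. The weights and biases below are one possible choice, not values taken from the source, and `xor_gate` is added only to show how the basic gates compose.

```python
def threshold_unit(inputs, weights, bias):
    """Fire (return 1) when the weighted input sum plus bias is non-negative."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total >= 0 else 0

def not_gate(a):
    # A single inhibitory weight: fires only when the input is 0.
    return threshold_unit([a], [-1.0], 0.5)

def or_gate(a, b):
    # Fires when at least one input is 1.
    return threshold_unit([a, b], [1.0, 1.0], -0.5)

def and_gate(a, b):
    # Fires only when both inputs are 1.
    return threshold_unit([a, b], [1.0, 1.0], -1.5)

def xor_gate(a, b):
    # Built from the three basic gates: (a OR b) AND NOT (a AND b).
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))

if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```

The knowledge in such a network is pre-coded in the weights rather than learned, which matches the excerpt's description.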

Connectionism - Birkbeck, University of London

... Fig. 2. A three-layered feed-forward neural network with three units in the input layer, four units in the hidden layer, and two units in the output layer. A key property of neural networks is their ability to learn. Learning in neural networks is based on altering the extent to which a given neuron ...
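
A forward pass through a network of the shape described in the caption (three input units, four hidden units, two output units) might look like the sketch below. The sigmoid activation, the random initial weights, and all names are assumptions; the excerpt specifies only the layer sizes.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def make_layer(n_in, n_out, rng):
    """Return (weights, biases) for a fully connected layer with random weights."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def layer_output(inputs, weights, biases):
    """Each unit applies a sigmoid to its weighted input sum plus bias."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

rng = random.Random(0)                      # fixed seed so runs are repeatable
hidden_w, hidden_b = make_layer(3, 4, rng)  # input (3) -> hidden (4)
out_w, out_b = make_layer(4, 2, rng)        # hidden (4) -> output (2)

def forward(x):
    """Propagate a 3-element input through the hidden and output layers."""
    hidden = layer_output(x, hidden_w, hidden_b)
    return layer_output(hidden, out_w, out_b)

if __name__ == "__main__":
    print(forward([1.0, 0.0, -1.0]))
```

Learning would then consist of adjusting the entries of `hidden_w` and `out_w`, which play the role of connection strengths between units.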

NeuralNets

... • Like a ball rolling down a hill, we should gain speed if we make consistent changes. It’s like an adaptive stepsize. • This idea is easily implemented by changing the gradient as follows: ...
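
The excerpt's own update formula is cut off, so the sketch below uses the common textbook form of gradient descent with momentum, which may differ from the source's. The objective f(w) = w², the learning rate, and the momentum coefficient are assumptions chosen for illustration.

```python
def grad(w):
    """Gradient of the example objective f(w) = w**2."""
    return 2.0 * w

def descend_with_momentum(w0, learning_rate=0.1, momentum=0.9, steps=300):
    """Accumulate a velocity so that consistent gradients build up speed."""
    w = w0
    velocity = 0.0
    for _ in range(steps):
        # Keep a fraction of the previous step and add the new gradient step.
        velocity = momentum * velocity - learning_rate * grad(w)
        w += velocity
    return w

if __name__ == "__main__":
    print(descend_with_momentum(5.0))
```

Setting `momentum=0.0` recovers plain gradient descent, which makes it easy to compare the two on the same objective.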

Artificial Neural Networks

... a section through an animal retina, one of the first ever visualisations of a neural network produced by Golgi and Cajal who received a Nobel Prize in 1906. You can see roundish neurons with their output axons. Some leave the area (those at the bottom which form the ‘optic nerve’) and other axons inp ...

Supervised learning

... If the examples are “good” and if the weights are correctly preset, the network will converge rapidly (i.e. will stop with D = |ei-edi| < d). ...
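
A stopping rule of this kind can be sketched with a single linear unit trained by the delta rule. The sketch reads the excerpt's symbols as actual output, desired output, and an error threshold, which is an interpretation; the training data, learning rate, and names are hypothetical.

```python
def train_until_converged(examples, learning_rate=0.05, tolerance=1e-3,
                          max_epochs=10000):
    """Train y = w*x + b and stop once every example's error is below tolerance."""
    w, b = 0.0, 0.0
    for epoch in range(1, max_epochs + 1):
        for x, desired in examples:
            error = desired - (w * x + b)
            # Delta rule: move the weights in proportion to the error.
            w += learning_rate * error * x
            b += learning_rate * error
        # Largest |actual - desired| over all examples with the weights fixed.
        worst = max(abs(desired - (w * x + b)) for x, desired in examples)
        if worst < tolerance:
            return w, b, epoch, True
    return w, b, max_epochs, False

# Example data generated from y = 2x + 1 (hypothetical).
EXAMPLES = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

if __name__ == "__main__":
    w, b, epochs, converged = train_until_converged(EXAMPLES)
    print(round(w, 3), round(b, 3), epochs, converged)
```

Because the example data are exactly consistent with a linear rule, training stops well before `max_epochs`; inconsistent examples would leave the error above the tolerance and the function would report no convergence.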
