

Information and Coding Theory

Linear Codes.

Groups, fields and vector spaces - a brief survey.

Codes defined as vector subspaces. Dual codes.

Juris Viksna, 2017

Codes – how to define them?

In most cases it would be natural to use binary block codes that map input vectors of length k to output vectors of length n.

For example:
101101 → 11100111
110011 → 01000101
etc.

Thus we can define a code as an injective mapping from a vector space V with dimension k to a vector space W with dimension n.

Essentially, this definition is the one used in Shannon's original theorem.

Codes – how to define them?

Simpler to define and use are linear codes, which can be defined by multiplication with a matrix of size k×n (called a generator matrix).

What should the elements of vector spaces V and W be?

In principle, in most cases it will be sufficient to have just 0-s and 1-s; however, to define a vector space we need a field – an algebraic system with operations “+” and “·” defined and having properties similar to those of ordinary arithmetic (think of real numbers).

A field with just “0” and “1” may look very simple, but it turns out that to make real progress we will need more complicated fields; the elements of these fields themselves will (most often) be regarded as binary vectors.

Groups - definition

Consider a set G and a binary operator +.

Definition

Pair (G,+) is a group if there is e∈G such that for all a,b,c∈G:

1) a+b∈G
2) (a+b)+c = a+(b+c)
3) a+e = a and e+a = a
4) there exists inv(a) such that a+inv(a) = e and inv(a)+a = e
5) if additionally a+b = b+a, the group is commutative (Abelian)

If the group operation is denoted by “+”, then e is usually denoted by 0 and inv(a) by −a.

If the group operation is denoted by “·”, then e is usually denoted by 1 and inv(a) by a⁻¹ (and a·b is usually written as ab).

It is easy to show that e and inv(a) are unique.


Examples:

(Z,+), (Q,+), (R,+)
(Z2,+), (Z3,+), (Z4,+)
(Q∖{0},·), (R∖{0},·) (but not (Z∖{0},·))
(Z2∖{0},·), (Z3∖{0},·) (but not (Z4∖{0},·))

A simple non-commutative group:

⟨x,y⟩, where x: abc → cab (rotation) and y: abc → acb (inversion).
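The group axioms can be checked mechanically on small finite examples. The following Python sketch is a brute-force checker; the helper names `is_group`, `add_mod`, `mul_mod` and `compose`, and the encoding of a permutation as a tuple of positions, are our own choices for illustration. It confirms that (Z4,+) and (Z3∖{0},·) are groups while (Z4∖{0},·) is not, and that the rotation x and the inversion y generate a non-commutative group of six permutations.

```python
from itertools import product


def is_group(elements, op):
    """Brute-force check of group axioms 1)-4) on a finite set."""
    elems = list(elements)
    # 1) closure: op(a, b) must stay in the set
    if any(op(a, b) not in elems for a, b in product(elems, repeat=2)):
        return False
    # 2) associativity
    if any(op(op(a, b), c) != op(a, op(b, c))
           for a, b, c in product(elems, repeat=3)):
        return False
    # 3) an identity element e must exist
    identities = [e for e in elems
                  if all(op(a, e) == a and op(e, a) == a for a in elems)]
    if not identities:
        return False
    e = identities[0]
    # 4) every element must have an inverse with respect to e
    return all(any(op(a, b) == e and op(b, a) == e for b in elems)
               for a in elems)


def add_mod(n):
    return lambda a, b: (a + b) % n


def mul_mod(n):
    return lambda a, b: (a * b) % n


# A tuple p maps a 3-letter word s to the word (s[p[0]], s[p[1]], s[p[2]]).
x = (2, 0, 1)  # rotation:  abc -> cab
y = (0, 2, 1)  # inversion: abc -> acb


def compose(p, q):
    """Permutation that applies q first and then p."""
    return tuple(q[i] for i in p)


# Close {identity, x, y} under composition to obtain the generated group <x,y>.
S3 = {(0, 1, 2), x, y}
while True:
    new = {compose(p, q) for p in S3 for q in S3} | S3
    if new == S3:
        break
    S3 = new

print(is_group(range(4), add_mod(4)))     # (Z4,+): True
print(is_group(range(1, 3), mul_mod(3)))  # (Z3 \ {0},·): True
print(is_group(range(1, 4), mul_mod(4)))  # (Z4 \ {0},·): False, since 2·2 = 0
print(len(S3), is_group(S3, compose), compose(x, y) == compose(y, x))  # 6 True False
```

The checker only applies to finite sets, so infinite examples such as (Z∖{0},·) are outside its reach.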

Groups - definition

(H,+) is a subgroup of (G,+) if H⊆G and (H,+) is a group.

H<G is the notation for H being a subgroup of G.

⟨x1,x2,…,xk⟩ denotes the subgroup of G generated by x1,x2,…,xk∈G.

o(G) denotes the number of elements of group G.

For the first few lectures we just need to remember the group definition. Other facts will become important when discussing finite fields.

We will consider only commutative and finite groups!

Lagrange theorem

Theorem

If H < G then o(H) | o(G).

Proof

• For all a∈G consider the sets aH = {ah | h∈H} (these are called cosets).
• All elements of a coset aH are distinct and |aH| = o(H).
• Each element g∈G belongs to some coset (for example, g∈gH).
• Two cosets aH and bH are either identical or disjoint.
• Thus G is a union of disjoint cosets, each having o(H) elements, hence o(H) | o(G).
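Lagrange's theorem can be observed directly in the additive group (Z12,+), where the cosets aH are written additively as a+H. The Python sketch below uses hypothetical helper names; it builds the subgroup generated by a single element, partitions Z12 into its cosets, and checks that the order of every such cyclic subgroup divides 12.

```python
def generated_subgroup(g, n):
    """Subgroup <g> of (Z_n, +): all multiples of g modulo n."""
    H = {0}
    a = g % n
    while a not in H:
        H.add(a)
        a = (a + g) % n
    return H


def cosets(H, n):
    """All cosets a+H = {a+h | h in H} of H in (Z_n, +)."""
    return {frozenset((a + h) % n for h in H) for a in range(n)}


n = 12
H = generated_subgroup(3, n)          # {0, 3, 6, 9}
parts = cosets(H, n)

# Each coset has o(H) elements, the cosets cover Z_n, and their sizes add up
# to n, so they are pairwise disjoint.
assert all(len(c) == len(H) for c in parts)
assert set().union(*parts) == set(range(n))
assert sum(len(c) for c in parts) == n
print(sorted(sorted(c) for c in parts))  # [[0, 3, 6, 9], [1, 4, 7, 10], [2, 5, 8, 11]]

# o(H) divides o(G) for every subgroup generated by one element of Z_12.
for g in range(n):
    assert n % len(generated_subgroup(g, n)) == 0
```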

Fields - definition

Consider a set F and binary operators “+” and “·”.

Definition

Triple (F,+,·) is a field if there exist 0,1∈F such that for all a,b,c,d∈F with d≠0:

1) a+b∈F and a·b∈F
2) a+b=b+a and a·b=b·a
3) (a+b)+c=a+(b+c) and (a·b)·c=a·(b·c)
4) a+0=a and a·1=a
5) there exist −a, d⁻¹∈F such that a+(−a)=0 and d·d⁻¹=1
6) a·(b+c)=a·b+a·c

We can say that F is a field if both (F,+) and (F∖{0},·) are commutative groups and the operators “+” and “·” are linked by property (6).

Examples:

(Q,+,·), (R,+,·), (Z2,+,·), (Z3,+,·)

(but not (Z4,+,·))
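For Zn with addition and multiplication modulo n, associativity, commutativity and distributivity are inherited from the integers, so whether (Zn,+,·) is a field reduces to axiom 5 for multiplication: every non-zero d needs an inverse. A minimal brute-force sketch (the function name `is_field_Zn` is hypothetical):

```python
def is_field_Zn(n):
    """Check whether Z_n with addition and multiplication mod n is a field.

    Only the existence of multiplicative inverses needs checking: every
    non-zero d must have some e with d*e = 1 (mod n).
    """
    if n < 2:
        return False
    return all(any(d * e % n == 1 for e in range(1, n)) for d in range(1, n))


# Distributivity a(b+c) = ab + ac, checked explicitly for Z_4 as an illustration.
n = 4
assert all(a * ((b + c) % n) % n == (a * b + a * c) % n
           for a in range(n) for b in range(n) for c in range(n))

print([m for m in range(2, 12) if is_field_Zn(m)])  # [2, 3, 5, 7, 11]
```

In Z4 the element 2 has no inverse (2·1 = 2, 2·2 = 0, 2·3 = 2 modulo 4), which is why (Z4,+,·) fails.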

Finite fields - some examples

[Adapted from V.Pless]

Note that we could use an alternative notation and consider the field elements as 2-dimensional binary vectors (usual vector addition then corresponds to the operation “+” in the field!):

0=(0 0), 1=(0 1), and the two remaining elements are (1 0) and (1 1).

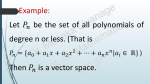
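Because the addition and multiplication tables of this example survive only as an image, the sketch below assumes the common construction of the four-element field: a 2-bit vector (a1 a0) is read as the polynomial a1x+a0 over Z2, addition is componentwise modulo 2 (bitwise XOR), and multiplication is polynomial multiplication reduced modulo x²+x+1. The modulus and the helper names `gf4_add` and `gf4_mul` are our assumptions, not taken from the source.

```python
from itertools import product

ELEMENTS = [0b00, 0b01, 0b10, 0b11]  # (0 0), (0 1), (1 0), (1 1)


def gf4_add(a, b):
    # Componentwise addition mod 2 of 2-bit vectors is bitwise XOR.
    return a ^ b


def gf4_mul(a, b):
    # Multiply a1*x + a0 by b1*x + b0 as polynomials over Z_2 ...
    prod = 0
    for i in range(2):
        if (b >> i) & 1:
            prod ^= a << i
    # ... then reduce modulo x^2 + x + 1 (assumed modulus), i.e. x^2 = x + 1.
    if prod & 0b100:
        prod ^= 0b111
    return prod


# Check two of the field axioms: inverses of non-zero elements, distributivity.
for d in ELEMENTS[1:]:
    assert any(gf4_mul(d, e) == 1 for e in ELEMENTS[1:]), d
for a, b, c in product(ELEMENTS, repeat=3):
    assert gf4_mul(a, gf4_add(b, c)) == gf4_add(gf4_mul(a, b), gf4_mul(a, c))

# Print the multiplication table, one row per element.
for a in ELEMENTS:
    print(format(a, "02b"), [format(gf4_mul(a, b), "02b") for b in ELEMENTS])
```

Any irreducible polynomial of degree 2 over Z2 would do; x²+x+1 is the only one, so the resulting field is unique up to renaming the two elements (1 0) and (1 1).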

Vector spaces - definition

What do we usually understand by vectors?

In principle we can say that vectors are n-tuples of the form:

(x1,x2,…,xn)

for which the operations of vector addition and multiplication by a scalar are defined and have the following properties:

(x1,x2,…,xn)+(y1,y2,…,yn)=(x1+y1,x2+y2,…,xn+yn)

a·(x1,x2,…,xn)=(a·x1,a·x2,…,a·xn)

The requirements are actually a bit stronger: the elements a and xi should come from some field F.

We might be able to live with such a definition, but then we would link a vector space to a unique and fixed basis, and this will often be technically very inconvenient.

Vector spaces - definition

Let (V,+) be a commutative group with identity element 0, let F be a field with multiplicative identity 1 and let “·” be an operator mapping F×V to V.

Definition

4-tuple (V,F,+,·) is a vector space if (V,+) is a commutative group with identity element 0 and for all u,v∈V and all a,b∈F:

1) a·(u+v)=a·u+a·v
2) (a+b)·v=a·v+b·v
3) a·(b·v)=(a·b)·v
4) 1·v=v

The requirement that (V,+) is a group is used here for brevity of the definition; the structure of (V,+) will largely depend on F.

Note that the operators “+” and “·” are “overloaded”: the same symbols are used for field and vector space operators.


Usually we will represent vectors as n-tuples of the form (x1,x2,…,xn); however, such representations are not unique and depend on the particular basis of the vector space that we choose to use (but 0 will always be represented as the n-tuple of zeroes (0,0,…,0)).
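For n-tuples over a prime field Zp with componentwise addition and scalar multiplication, axioms 1)-4) can be confirmed exhaustively for small p and n. A brute-force sketch for (Z3)², where the helper names `vadd` and `smul` are our own:

```python
from itertools import product

p, n = 3, 2                       # field Z_3, vectors of length 2
F = range(p)
V = list(product(F, repeat=n))    # all 9 vectors of (Z_3)^2


def vadd(u, v):
    """Componentwise vector addition mod p."""
    return tuple((a + b) % p for a, b in zip(u, v))


def smul(a, v):
    """Multiplication of vector v by scalar a mod p."""
    return tuple((a * x) % p for x in v)


for a, b in product(F, repeat=2):
    for u, v in product(V, repeat=2):
        assert smul(a, vadd(u, v)) == vadd(smul(a, u), smul(a, v))    # 1)
        assert smul((a + b) % p, v) == vadd(smul(a, v), smul(b, v))   # 2)
        assert smul(a, smul(b, v)) == smul((a * b) % p, v)            # 3)
for v in V:
    assert smul(1, v) == v                                            # 4)
print("axioms 1)-4) hold for", len(V), "vectors over Z_%d" % p)
```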

Vector spaces - some terminology

A subset S of vectors from a vector space V is a subspace of V if S is itself a vector space. We denote this by S⊑V.

A linear combination of v1,…,vk∈V (V being defined over a field F) is a vector of the form a1v1+…+akvk, where ai∈F.

For given v1,…,vk∈V, the set of all linear combinations of these vectors is denoted by ⟨v1,…,vk⟩.

It is easy to show that ⟨v1,…,vk⟩⊑V.

We also say that vectors v1,…,vk span the subspace ⟨v1,…,vk⟩.

A set of vectors v1,…,vk is (linearly) independent if a1v1+…+akvk ≠ 0 for all a1,…,ak∈F such that ai ≠ 0 for at least one index i.

Independent vectors - some properties


Assume that vectors v1,v2,v3,…,vk are independent. Then the following will also be independent:

• any permutation of vectors v1,v2,v3,…,vk (this doesn't change the set :)
• av1,v2,v3,…,vk, if a ≠ 0
• v1+v2,v2,v3,…,vk

Moreover, these operations do not change the subspace spanned by the initial vectors v1,…,vk.
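Over a small finite field, spans and independence can be computed by enumerating all linear combinations. The sketch below works over Z2 with vectors as tuples of 0s and 1s; the helper names and the example vectors are our own. It relies on the fact that v1,…,vk are independent exactly when distinct coefficient tuples give distinct vectors, i.e. when the span has 2^k elements, and checks that replacing v1 by v1+v2 keeps both independence and the span.

```python
from itertools import product


def add(u, v):
    """Componentwise addition over Z_2."""
    return tuple((a + b) % 2 for a, b in zip(u, v))


def span(vectors, n):
    """All linear combinations a1*v1 + ... + ak*vk with ai in Z_2."""
    result = set()
    for coeffs in product((0, 1), repeat=len(vectors)):
        w = (0,) * n
        for a, v in zip(coeffs, vectors):
            if a:
                w = add(w, v)
        result.add(w)
    return result


def independent(vectors, n):
    # Independent iff the span has exactly 2^k elements.
    return len(span(vectors, n)) == 2 ** len(vectors)


v1, v2, v3 = (1, 0, 1, 1), (0, 1, 1, 0), (0, 0, 1, 1)
vs = [v1, v2, v3]
ws = [add(v1, v2), v2, v3]            # replace v1 by v1 + v2

print(independent(vs, 4), independent(ws, 4))  # True True
print(span(vs, 4) == span(ws, 4))              # True
```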

Vector spaces - some results

Theorem 1

If v1,…,vk∈V span the entire vector space V and w1,…,wr∈V is an independent set of vectors, then r ≤ k.

Proof

Suppose this is not the case and r ≥ k+1. Since ⟨v1,…,vk⟩=V we have:

$$\begin{aligned}
w_1 &= a_{11}v_1+\dots+a_{1k}v_k\\
w_2 &= a_{21}v_1+\dots+a_{2k}v_k\\
&\;\;\vdots\\
w_k &= a_{k1}v_1+\dots+a_{kk}v_k\\
w_{k+1} &= a_{(k+1)1}v_1+\dots+a_{(k+1)k}v_k
\end{aligned}$$

We can assume that a11 ≠ 0 (after renumbering the vi's if necessary; at least some scalar must be non-zero, since by independence of the wi's we have w1 ≠ 0).

Vector spaces - some results

Thus, we have b1w1 = v1+b1a12v2+…+b1a1kvk for the non-zero scalar b1 = a11⁻¹.

Subtracting ai1·b1w1 from each wi with i > 1 gives us a new system of equations with an independent set of k+1 vectors on the left side, in which v1 occurs only in the first equation.

By repeating this process, starting with the i-th equation in the i-th iteration, we end up with a set of k+1 independent vectors, where the i-th vector is expressed as a sum of no more than k+1–i vi's. However, this means that the (k+1)-st vector will be 0, which contradicts the independence of the set of k+1 vectors.

Vector spaces - basis

Theorem 2

If two finite sets of independent vectors span a space V, then there are the same number of vectors in each set.

Proof

Let k be the number of vectors in the first set and r the number of vectors in the second set. By Theorem 1 we have k ≤ r and r ≤ k.

We will be interested in vector spaces that are spanned by a finite number of vectors, so we assume this from now on.

We say that a set of vectors v1,…,vk∈V is a basis of V if they are independent and span V.

Vector spaces - basis

Theorem 3

Let V be a vector space over a field F. Then the following hold:

1) V has a basis.

2) Any two bases of V contain the same number of vectors.

3) If B is a basis of V then every vector in V has a unique

representation as a linear combination of vectors in B.

Proof

1. Let v1∈V and v1 ≠ 0. If V = ⟨v1⟩ we are finished. If not, there is a vector v2∈V∖⟨v1⟩ (then v2 ≠ 0, and v1,v2 are independent). If V = ⟨v1,v2⟩ we are finished. If not, continue in the same way until we obtain a basis for V.

The process must terminate by our assumption that V is spanned by a finite number k of vectors and the result given by Theorem 1 that an independent set cannot have more than k vectors.


2. This is a direct consequence of Theorem 2.

3. Since the vectors in B span V, any v∈V can be written as v = a1b1+...+akbk.

If we also have v = c1b1+...+ckbk, then (a1−c1)b1+...+(ak−ck)bk = 0 and, since the bi's are independent, ai = ci for all i.

Vector spaces - dimension

The dimension of a vector space V, denoted by dim V, is the number of

vectors in any basis of V. By Theorem 3 the dimension of V is well

defined.

Independent vectors – a few more properties

Assume that the set of vectors v1,…,vk∈V is independent and in some fixed basis b1,…,bn of V we have representations vi = ai1b1+...+ainbn. Then the following sets of vectors obtained from v1,…,vk will also be independent:

• for some j,l: swapping all aij-s with ail-s
• for some j and non-zero c∈F: replacing all aij-s with c·aij-s (*)
• for some j,l: replacing all aij-s with (aij+ail)-s (**)

Note, however, that these operations can change the subspace spanned by the initial vectors v1,…,vk.

Vector spaces and ranks of matrices

If M is a matrix whose elements are contained in a field F, then the row rank of M, denoted by rr(M), is defined to be the dimension of the subspace spanned by the rows of M.

The column rank of M, denoted by rc(M), is defined to be the dimension of the subspace spanned by the columns of M.

An n×n matrix A is called nonsingular if rr(A) = n. This means that the rows of A are linearly independent.

Vector spaces and ranks of matrices

If M is a matrix, the following operations on its rows are called elementary row operations:

1) Permuting rows.
2) Multiplying a row by a nonzero scalar.
3) Adding a scalar multiple of one row to another row.

Similarly, we can define the following to be elementary column operations:

4) Permuting columns.
5) Multiplying a column by a nonzero scalar.
6) Adding a scalar multiple of one column to another column.

As we saw, none of these operations affects rr(M) or rc(M), although operations 1,2,3 could change the column space of M and operations 4,5,6 could change its row space.

Vector spaces - matrix row-echelon form

A matrix M is said to be in row-echelon form if, after possibly permuting its columns,

$$M = \begin{pmatrix} I & A \\ 0 & 0 \end{pmatrix}$$

where I is the k×k identity matrix.

Lemma

If M is any matrix, then by applying elementary operations 1,2,3,4 it can be transformed into a matrix M′ in row-echelon form.


Proof (sketch)

Proceed iteratively in steps 1...k as follows:

Step i

• Perform a row permutation (if one exists) that places in the i-th position a row, among rows i, i+1, …, with a non-zero element in the i-th column. Let it be row v. If there is no such permutation, first perform a column permutation that places in the i-th position a column having a non-zero element in one of these rows.
• Multiply v by a non-zero scalar so that its i-th element becomes 1.
• Add to each of the other rows the vector av, where a is chosen such that after the addition the row has value 0 in the i-th position.

Vector spaces - matrix row-echelon form

Theorem

The row rank rr(M) of a matrix M equals its column rank rc(M).

Proof

Transform M into M′ in row-echelon form by applying elementary operations 1,2,3,4. This changes neither the row rank nor the column rank; it is also obvious that for the matrix M′ we have rr(M′) = rc(M′) = k, where k is the size of the identity matrix I.

$$M' = \begin{pmatrix} I & A \\ 0 & 0 \end{pmatrix}$$
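The procedure from the proof sketch can be written out over a prime field Zp. The following Python sketch is one possible rendering; the function names are hypothetical, and p is assumed prime so that inverses can be computed as x^(p−2) mod p (Fermat's little theorem) with Python's built-in three-argument `pow`. It uses row permutations, scaling, row additions and column permutations, returns the size k of the identity block, and then illustrates rr(M) = rc(M) by computing k for M and for its transpose.

```python
def row_echelon(M, p):
    """Bring M (a list of rows over Z_p, p prime) to the form [[I, A], [0, 0]]
    with elementary operations 1-4. Returns (M', k, column_order), where k is
    the size of the identity block and column_order records operation 4."""
    M = [[x % p for x in row] for row in M]
    rows, cols = len(M), len(M[0])
    order = list(range(cols))
    k = 0
    while k < min(rows, cols):
        # Prefer a non-zero entry in column k; otherwise use a later column.
        pivot = next(((r, c) for c in range(k, cols) for r in range(k, rows)
                      if M[r][c]), None)
        if pivot is None:
            break                                  # all remaining rows are zero
        r, c = pivot
        M[k], M[r] = M[r], M[k]                    # op 1: permute rows
        if c != k:                                 # op 4: permute columns
            for row in M:
                row[k], row[c] = row[c], row[k]
            order[k], order[c] = order[c], order[k]
        inv = pow(M[k][k], p - 2, p)               # inverse in Z_p (Fermat)
        M[k] = [x * inv % p for x in M[k]]         # op 2: scale the pivot row
        for i in range(rows):                      # op 3: clear column k elsewhere
            if i != k and M[i][k]:
                a = M[i][k]
                M[i] = [(x - a * y) % p for x, y in zip(M[i], M[k])]
        k += 1
    return M, k, order


def transpose(M):
    return [list(col) for col in zip(*M)]


# Over Z_2: the third row equals the sum of the first two, so the rank is 2.
M = [[1, 1, 0, 1],
     [0, 1, 1, 1],
     [1, 0, 1, 0]]
E, k, order = row_echelon(M, 2)
print(E, k, order)
print(row_echelon(transpose(M), 2)[1])   # rank of M^T, i.e. rc(M)
```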

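Linear codes

[Figure: Message source → Encoder → Channel → Decoder → Receiver. The message x = x1,...,xk is encoded into the codeword c = c1,...,cn; the channel adds the error e = e1,...,en from noise, giving the received vector y = c+e; the decoder outputs x', an estimate of the message.]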

Generally we will define linear codes as vector spaces – by taking C to

be a k-dimensional subspace of some n-dimensional space V.

Codes and linear codes

Let V be an n-dimensional vector space over a finite field F.

Definition

A code is any subset C⊆V.

Definition

A linear (n,k) code is any k-dimensional subspace C⊑V.

Whilst this definition is largely “standard”, it doesn't distinguish any particular basis of V. However, the properties and parameters of a code will vary greatly with the selection of a particular basis. So in fact we assume that V is already given with some fixed basis b1,…,bn∈V, and that all elements of V and C are represented in this particular basis. At the same time, we may be quite flexible in choosing a specific basis for C.

Codes and linear codes

Let V be an n-dimensional vector space over a finite field F.

Definition

A linear (n,k) code is any k-dimensional subspace C⊑V.

Example (choices of bases for V and code C):

Basis of V (fixed): 001, 010, 100
Set of V elements: {000,001,010,011,100,101,110,111}
Set of C elements: {000,001,010,011}
2 alternative bases for code C: 001, 010 and 001, 011

Essentially, we will be ready to consider alternative bases, but will stick to the “main one” for the representation of V elements.

Hamming code [7,4]

What to do if there are errors?

- we assume that the number of errors is as small as possible, i.e. we find the codeword c (and the corresponding x) that is closest to the received vector y (using Hamming distance)
- consider vectors a = 0001111, b = 0110011 and c = 1010101,
-- if y is received, compute y·a, y·b and y·c (inner products), e.g., for y = 1010010 we obtain y·a = 1, y·b = 0 and y·c = 0.
-- this represents a binary number (100, or 4 in the example above) and we conclude that the error is in the 4th digit, i.e. the transmitted codeword was 1011010.

Easy, but why does this method work?
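The decoding recipe above can be checked in a few lines of Python. This minimal sketch over Z2 follows the slide step by step (the function names `dot` and `correct` are hypothetical): it computes y·a, y·b and y·c, reads them as a binary number s, and flips digit s when s ≠ 0.

```python
a = [0, 0, 0, 1, 1, 1, 1]
b = [0, 1, 1, 0, 0, 1, 1]
c = [1, 0, 1, 0, 1, 0, 1]


def dot(u, v):
    """Inner product over Z_2."""
    return sum(x * y for x, y in zip(u, v)) % 2


def correct(y):
    """Return (error position, corrected word); position 0 means no error found."""
    y = [int(ch) for ch in y]
    s = 4 * dot(y, a) + 2 * dot(y, b) + dot(y, c)   # (y·a, y·b, y·c) as binary
    if s:
        y[s - 1] ^= 1                               # flip the s-th digit (1-based)
    return s, "".join(map(str, y))


print(correct("1010010"))   # (4, '1011010'), as in the example above
```

One observation about the choice of a, b and c: written as rows of a 3×7 matrix, its j-th column is the binary representation of j, so a single error in position j produces s = j.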

Vector spaces – dot (scalar) product

Let V be a k-dimensional vector space over field F. Let b1,…,bk∈V be some basis of V. For a pair of vectors u,v∈V such that u=a1b1+...+akbk and v=c1b1+...+ckbk, their dot (scalar) product is defined by:

u·v = a1·c1 +...+ ak·ck

Thus operator “·” maps V×V to F.

Lemma

For u,v,w∈V and all a,b∈F the following properties hold:

1) u·v = v·u.
2) (au+bv)·w = a(u·w)+b(v·w).
3) If u·v = 0 for all v in V, then u = 0.

Note. The Lemma above can also be used as a more abstract definition of inner product.

The question whether, for an inner product defined in this abstract way, there will be a basis that allows us to compute it as a scalar product by the formula above is somewhat tricky and may depend on the particular vector space V.


Two vectors u and v are said to be orthogonal if u·v = 0. If C is a subspace of V, then it is easy to see that the set of all vectors in V that are orthogonal to each vector in C is a subspace, which is called the space orthogonal to C and denoted by C⊥.

An important (and not too obvious) result for us:

Theorem

If C is a subspace of V, then dim C + dim C⊥ = dim V.

Vector spaces and linear transformations

Definition

Let V be a vector space over field F. A function f : V→V is called a linear transformation if for all u,v∈V and all a∈F the following hold:

1) a·f(u) = f(a·u).
2) f(u)+f(v) = f(u+v).

The kernel of f is defined as ker f = {v∈V | f(v) = 0}.

The range of f is defined as range f = {f(v) | v∈V}.

It follows directly from the linearity of f that vector sums and scalar multiples of elements of ker f (or of range f) stay in ker f (or range f, respectively).

Thus ker f ⊑V and range f ⊑V.

Vector spaces and linear transformations

Theorem (rank-nullity theorem)

dim (ker f) + dim (range f) = dim V.

Proof?

Choose some basis u1,…,uk of ker f and some basis w1,…,wn of range f.

Then try to show that u1,…,uk, v1,…,vn is a basis for V, where wi = f(vi) (the vectors vi may not be uniquely defined, but it is sufficient to choose arbitrary pre-images of the wi-s).
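For a linear transformation given by a matrix over Z2, f(v) = vM, the kernel and range can be enumerated directly and the rank-nullity theorem checked. In this brute-force sketch the matrix M is an arbitrary example chosen for illustration; dimensions are read off from subspace sizes, using the fact that a k-dimensional subspace over Z2 has 2^k elements.

```python
from itertools import product
from math import log2

n = 4
# f(v) = vM for a 4x4 matrix M over Z_2 (an arbitrary example);
# its third row is the sum of the first two.
M = [[1, 0, 1, 0],
     [0, 1, 1, 0],
     [1, 1, 0, 0],
     [0, 0, 0, 1]]


def f(v):
    """Row vector v times M over Z_2."""
    return tuple(sum(v[i] * M[i][j] for i in range(n)) % 2 for j in range(n))


V = list(product((0, 1), repeat=n))
kernel = {v for v in V if not any(f(v))}
rng = {f(v) for v in V}

dim_ker = int(log2(len(kernel)))
dim_range = int(log2(len(rng)))
print(dim_ker, dim_range, dim_ker + dim_range == n)   # 1 3 True
```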

Rank-nullity theorem

Theorem

dim (ker f) + dim (range f) = dim V.

[Adapted from R.Milson]


Dimensions of orthogonal vector spaces

Theorem

If C is a subspace of V, then dim C + dim C⊥ = dim V.

Proof?

We could try to reduce this to the “similarly looking” equality dim V = dim (ker f) + dim (range f).

However, how can we define a linear transformation from the dot product?


Proof


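Let u1,…,uk be some basis of C. We define the transformation f as follows:

for all v∈V: f(v) = (v·u1) u1 + … + (v·uk) uk

Note that v·ui ∈ F, thus f(v)∈C. Therefore we have:

• ker f = C⊥ (since the ui are independent, f(v) = 0 exactly when v·ui = 0 for all i, i.e. when v is orthogonal to every vector of C)
• range f = C (range f ⊆ C by the definition of f; equality holds because the map v ↦ (v·u1,…,v·uk) is onto F^k, as the matrix with rows u1,…,uk has column rank equal to its row rank k)

Thus from the rank-nullity theorem: dim C + dim C⊥ = dim V.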
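The map f from the proof can be tried out on a concrete binary example. In the sketch below the basis u1 = 1100, u2 = 1110 of C is an arbitrary choice for illustration and the helper names are our own. It computes C, C⊥ and f(v) = (v·u1)u1 + (v·u2)u2 over Z2 and checks that ker f = C⊥ and range f = C. Over Z2 a non-zero vector can be orthogonal to itself, so C and C⊥ may intersect; here they share 1100, and the dimension formula still holds.

```python
from itertools import product

n = 4
u1, u2 = (1, 1, 0, 0), (1, 1, 1, 0)   # an arbitrary basis of C, for illustration
V = list(product((0, 1), repeat=n))


def dot(u, v):
    """Dot product over Z_2."""
    return sum(x * y for x, y in zip(u, v)) % 2


def comb(a1, w1, a2, w2):
    """a1*w1 + a2*w2 over Z_2."""
    return tuple((a1 * x + a2 * y) % 2 for x, y in zip(w1, w2))


C = {comb(a1, u1, a2, u2) for a1 in (0, 1) for a2 in (0, 1)}
C_perp = {v for v in V if all(dot(v, w) == 0 for w in C)}


def f(v):
    # f(v) = (v·u1) u1 + (v·u2) u2
    return comb(dot(v, u1), u1, dot(v, u2), u2)


kernel = {v for v in V if not any(f(v))}
rng = {f(v) for v in V}

assert kernel == C_perp and rng == C
# Sizes are 2^dim: 4 and 4, so dim C + dim C_perp = 2 + 2 = dim V.
print(len(C), len(C_perp), C & C_perp)
```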


Codes and linear codes

Let V be an n-dimensional vector space over a finite field F together with some fixed basis b1,…,bn∈V.

Definition

A linear (n,k) code is any k-dimensional subspace C⊑V.

Definition

The weight wt(v) of a vector v∈V is the number of nonzero components of v in its representation as a linear combination v = a1b1+...+anbn.

Definition

The distance d(v,w) between vectors v,w∈V is the number of components in which their representations in the given basis differ.

Definition

The minimum weight of a code C⊑V is defined as min_{v∈C, v≠0} wt(v).
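These definitions are straightforward to compute for binary vectors written in the fixed basis. The sketch below uses hypothetical helper names. For illustration it takes the [7,4] Hamming code to be the set of all y with y·a = y·b = y·c = 0 for the vectors a, b, c from the Hamming code slide; that identification is our assumption. It computes wt, d and the minimum weight, and compares the minimum weight with the minimum distance.

```python
from itertools import product


def wt(v):
    """Number of non-zero components."""
    return sum(1 for x in v if x != 0)


def dist(v, w):
    """Number of positions in which v and w differ."""
    return sum(1 for x, y in zip(v, w) if x != y)


def dot(u, v):
    """Inner product over Z_2."""
    return sum(x * y for x, y in zip(u, v)) % 2


a = (0, 0, 0, 1, 1, 1, 1)
b = (0, 1, 1, 0, 0, 1, 1)
c = (1, 0, 1, 0, 1, 0, 1)

C = [y for y in product((0, 1), repeat=7)
     if dot(y, a) == dot(y, b) == dot(y, c) == 0]

min_wt = min(wt(v) for v in C if any(v))
min_dist = min(dist(v, w) for v in C for w in C if v != w)
print(len(C), min_wt, min_dist)   # 16 3 3
```

For a linear code the two minima agree, since d(v,w) = wt(v−w) and v−w is again a codeword.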

Codes and linear codes

Theorem

A linear (n,k,d) code, i.e. a linear (n,k) code with minimum weight d, can correct any number of errors not exceeding t = ⌊(d−1)/2⌋.

Proof

Since d(v,w) = wt(v−w) and v−w∈C for any codewords v,w, the distance between any two distinct codewords is at least d.

So, if the number of errors is smaller than d/2, then the closest codeword to the received vector will be the transmitted one.

However, a far less obvious problem is how to find which codeword is the closest to the received vector.
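For a tiny code the theorem can be checked exhaustively with brute-force minimum-distance decoding: for every codeword and every error pattern of weight at most t, the closest codeword must be the transmitted one. The sketch below (function names hypothetical) does this for the binary code generated by an example generator matrix G and for the binary (5,1) repetition code. Searching through all codewords is exactly the expensive approach that makes finding the closest codeword a real problem; it is only feasible for very small codes.

```python
from itertools import combinations, product


def codewords(G):
    """All vG over Z_2, v ranging over all k-tuples."""
    k, n = len(G), len(G[0])
    return [tuple(sum(v[i] * G[i][j] for i in range(k)) % 2 for j in range(n))
            for v in product((0, 1), repeat=k)]


def dist(u, w):
    return sum(x != y for x, y in zip(u, w))


def decode(y, C):
    """Brute-force minimum-distance decoding: a codeword closest to y."""
    return min(C, key=lambda c: dist(y, c))


def corrects_all(G):
    """Return (d, t, ok): ok is True iff every error of weight <= t is corrected."""
    C = codewords(G)
    n = len(G[0])
    d = min(dist(u, w) for u, w in combinations(C, 2))
    t = (d - 1) // 2
    for c in C:
        for r in range(t + 1):
            for pos in combinations(range(n), r):
                y = tuple(x ^ (i in pos) for i, x in enumerate(c))  # add error
                if decode(y, C) != c:
                    return d, t, False
    return d, t, True


G = [[1, 0, 0, 1, 1, 0, 0],
     [0, 1, 0, 0, 1, 1, 0],
     [0, 0, 1, 0, 0, 1, 1]]
print(corrects_all(G))                  # (3, 1, True)
print(corrects_all([[1, 1, 1, 1, 1]]))  # (5, 2, True)
```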

Coding theory - the main problem

A good (n,k,d) code has small n, large k and large d.

The main coding theory problem is to optimize one of the parameters n,

k, d for given values of the other two.

Generator matrices

Definition

Consider an (n,k) code C⊑V. G is a generator matrix of code C if C = {vG | v∈F^k} (i.e. v ranges over all k-tuples over F) and all rows of G are independent.

It is easy to see that a generator matrix exists for any code: take any matrix G whose rows are vectors v1,…,vk (represented as n-tuples in the initially agreed basis of V) that form a basis of C. By definition G will be a matrix of size k×n.

Obviously there can be many different generator matrices for a given code. For example, these are two alternative generator matrices for the same (4,3) code:

$$G_1 = \begin{pmatrix} 1&0&0&1\\ 0&1&0&1\\ 0&0&1&1 \end{pmatrix}, \qquad G_2 = \begin{pmatrix} 0&0&1&1\\ 1&1&1&1\\ 1&0&1&0 \end{pmatrix}$$
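That G1 and G2 generate the same (4,3) code can be confirmed by listing all 2³ = 8 codewords vG for each matrix. A small sketch over Z2 (the helper name `code_of` is hypothetical):

```python
from itertools import product


def code_of(G):
    """Set of all codewords vG over Z_2, v ranging over all k-tuples."""
    k, n = len(G), len(G[0])
    return {tuple(sum(v[i] * G[i][j] for i in range(k)) % 2 for j in range(n))
            for v in product((0, 1), repeat=k)}


G1 = [[1, 0, 0, 1],
      [0, 1, 0, 1],
      [0, 0, 1, 1]]
G2 = [[0, 0, 1, 1],
      [1, 1, 1, 1],
      [1, 0, 1, 0]]

C1, C2 = code_of(G1), code_of(G2)
print(len(C1), C1 == C2)   # 8 True
print(sorted("".join(map(str, c)) for c in C1))
# ['0000', '0011', '0101', '0110', '1001', '1010', '1100', '1111']
```

Both sets have 8 elements, so the rows of each matrix are independent, and in both cases the code consists of all even-weight vectors of length 4.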

Equivalence of codes

Definition

Codes C1,C2⊑V are equivalent if a generator matrix G2 of C2 can be obtained from a generator matrix G1 of C1 by a sequence of the following operations:

1) permutation of rows
2) multiplication of a row by a non-zero scalar
3) addition of one row to another
4) permutation of columns
5) multiplication of a column by a non-zero scalar

Note that operations 1-3 don't actually change the code C1. By applying operations 4 and 5, C1 could be changed to a different subspace of V; however, the weight distribution of the code vectors remains the same.

In particular, if C1 is an (n,k,d) code, so is C2. In the binary case the vectors of C1 and C2 would differ only by a permutation of positions.

Generator matrices

Definition

A generator matrix G of an (n,k) code C⊑V is said to be in standard form if G = (I,A), where I is the k×k identity matrix.

Theorem

For a code C⊑V there is an equivalent code C′ that has a generator matrix in standard form.

Proof

We have already shown that each matrix can be transformed into row-echelon form by applying the same operations that define equivalent codes. Since the generator matrix of an (n,k) code must have rank k, we obtain that in this case I must be the k×k identity matrix (i.e. we can't have rows with all zeroes).

Dual codes

Definition

Consider a code C⊑V. The dual or orthogonal code of C is defined as

C⊥ = {v∈V | ∀w∈C: v·w = 0}.

It is easy to check that C⊥ ⊑V, i.e. C⊥ is a code. Note that this is actually just a re-statement of the definition of orthogonal vector spaces we have already seen.

Remember the theorem we proved a short while ago:

If C is a subspace of V, then dim C + dim C⊥ = dim V.

Thus, if C is an (n,k) code, then C⊥ is an (n,n−k) code, and vice versa.

There are codes that are self-dual, i.e. C = C⊥.

Dual codes - some examples

For the binary (n,1) repetition code C with the generator matrix

G = (1 1 … 1)

the dual code C⊥ is an (n,n−1) code with the generator matrix G⊥ described by:

$$G^{\perp} = \begin{pmatrix} 1&1&0&0&\cdots&0\\ 1&0&1&0&\cdots&0\\ \vdots&&&&\ddots&\vdots\\ 1&0&0&0&\cdots&1 \end{pmatrix}$$

Dual codes - some examples

[Adapted from V.Pless]

Dual codes – parity checking matrices

Definition

Let C⊑V be a code and let C⊥ be its dual code. A generator matrix H of C⊥ is called a parity check matrix of C.

Theorem

If the k×n generator matrix of a code C⊑V is in standard form G = (I,A), then the (n−k)×n matrix H = (−A^T,I) is a parity check matrix of C.

Proof

It is easy to check that any row of G is orthogonal to any row of H (each dot product has at most two non-zero terms, and these have opposite signs). Hence all rows of H belong to C⊥, and they are independent because of the identity block I.

Since dim C + dim C⊥ = dim V, i.e. k + dim C⊥ = n, the n−k independent rows of H span C⊥, and we conclude that H is a generator matrix of C⊥.

Note that in binary vector spaces H = (−A^T,I) = (A^T,I).


So, up to the equivalence of codes we have an easy way to obtain a

parity check matrix H from a generator matrix G in standard form and

vice versa.

Example of generator and parity check matrices in standard form:

$$G = \begin{pmatrix} 1&0&0&1&1&0&0\\ 0&1&0&0&1&1&0\\ 0&0&1&0&0&1&1 \end{pmatrix}, \qquad H = \begin{pmatrix} 1&0&0&1&0&0&0\\ 1&1&0&0&1&0&0\\ 0&1&1&0&0&1&0\\ 0&0&1&0&0&0&1 \end{pmatrix}$$
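The construction H = (−A^T,I) can be automated for a prime field Zp. In the sketch below the function names are hypothetical, G is assumed to already be in standard form, and the ternary matrix G3 is an arbitrary example of our own. It builds H from G and checks that every row of G is orthogonal to every row of H. For the binary example above it reproduces H, since −A^T = A^T over Z2; over Z3 the minus sign matters.

```python
def parity_check(G, p):
    """H = (-A^T, I) for G = (I, A) over Z_p (G assumed in standard form)."""
    k, n = len(G), len(G[0])
    A = [row[k:] for row in G]                       # the k x (n-k) block A
    H = []
    for j in range(n - k):
        minus_At_row = [(-A[i][j]) % p for i in range(k)]
        identity_row = [1 if m == j else 0 for m in range(n - k)]
        H.append(minus_At_row + identity_row)
    return H


def orthogonal(G, H, p):
    """True iff every row of G has dot product 0 (mod p) with every row of H."""
    return all(sum(x * y for x, y in zip(g, h)) % p == 0 for g in G for h in H)


G = [[1, 0, 0, 1, 1, 0, 0],
     [0, 1, 0, 0, 1, 1, 0],
     [0, 0, 1, 0, 0, 1, 1]]
H = parity_check(G, 2)
for row in H:
    print(row)
print(orthogonal(G, H, 2))

# A ternary example (chosen for illustration): here -A^T differs from A^T.
G3 = [[1, 0, 1, 2],
      [0, 1, 2, 2]]
H3 = parity_check(G3, 3)
print(H3, orthogonal(G3, H3, 3))
```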