* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download ppt

Wake-on-LAN wikipedia , lookup

Remote Desktop Services wikipedia , lookup

Deep packet inspection wikipedia , lookup

Zero-configuration networking wikipedia , lookup

Internet protocol suite wikipedia , lookup

Recursive InterNetwork Architecture (RINA) wikipedia , lookup

Cracking of wireless networks wikipedia , lookup

Hypertext Transfer Protocol wikipedia , lookup

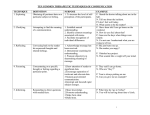

Real-Time Messaging Protocol wikipedia , lookup

UniPro protocol stack wikipedia , lookup

Midterm Review In class, 3:30-5pm, Mon. 2/11 Close Book One 8.5” by 11” sheet of paper permitted (single side) • Homework 1 graded, and hw2 soon • Solutions will be posted after the class • Project 2 released Lecture 1 • Internet Architecture • Network Protocols • Network Edge • A taxonomy of communication networks Packet Switching: Statistical Multiplexing 10 Mbs Ethernet A B statistical multiplexing C 1.5 Mbs queue of packets waiting for output link D E Sequence of A & B packets does not have fixed pattern statistical multiplexing. In TDM each host gets same slot in revolving TDM frame. Packet Switching versus Circuit Switching • Is it true for Packet Switching or Circuit Switching? – Network resources (e.g., bandwidth) divided into “pieces” for allocation – Great for bursty data – Resource piece idle if not used by owning call (no sharing) – Have excessive congestion: packet delay and loss – Protocols needed for reliable data transfer, congestion control Datagram Packet Switching • Each packet is independently switched – Each packet header contains destination address which determines next hop – Routes may change during session • No resources are pre-allocated (reserved) in advance • Example: IP networks Lecture 2 • Network access and physical media • Internet structure and ISPs • Delay & loss in packet-switched networks • Protocol layers, service models Internet structure: network of networks • “Tier-3” ISPs and local ISPs – last hop (“access”) network (closest to end systems) – Tier-3: Turkish Telecom, Minnesota Regional Network local ISP Local and tier3 ISPs are customers of higher tier ISPs connecting them to rest of Internet Tier 3 local local ISP Tier-2 ISP ISP ISP ISP Tier-2 ISP Tier 1 ISP Tier 1 ISP Tier-2 ISP local local ISP ISP local NAP Tier 1 ISP Tier-2 ISP local ISP Tier-2 ISP local ISP Four sources of packet delay • 1. processing: • 2. queueing – check bit errors – time waiting at output link for transmission – determine output link – depends on congestion level of router transmission A propagation B processing queueing Delay in packet-switched networks 3. Transmission delay: 4. Propagation delay: • R=link bandwidth (bps) • d = length of physical link • L=packet length (bits) • s = propagation speed in medium (~2x108 m/sec) • time to send bits into link = L/R transmission A • propagation delay = d/s Note: s and R are very different quantities! propagation B processing queueing Internet protocol stack • application: supporting network applications – FTP, SMTP, STTP • transport: host-host data transfer – TCP, UDP • network: routing of datagrams from source to destination – IP, routing protocols • link: data transfer between neighboring network elements – PPP, Ethernet • physical: bits “on the wire” application transport network link physical Application Layer • Principles of app layer protocols • Web and HTTP • FTP • Electronic Mail: SMTP, POP3, IMAP • DNS • Socket Programming • Web Caching HTTP connections Nonpersistent HTTP Persistent HTTP • At most one object is sent over a TCP connection. • Multiple objects can be sent over single TCP connection between client and server. • HTTP/1.0 uses nonpersistent HTTP • HTTP/1.1 uses persistent connections in default mode • HTTP Message, Format, Response, Methods • HTTP cookies Response Time of HTTP Nonpersistent HTTP issues: Persistent without pipelining: • requires 2 RTTs per object • client issues new request only when previous response has been received • OS must work and allocate host resources for each TCP connection • but browsers often open parallel TCP connections to fetch referenced objects Persistent HTTP • server leaves connection open after sending response • subsequent HTTP messages between same client/server are sent over connection • one RTT for each referenced object Persistent with pipelining: • default in HTTP/1.1 • client sends requests as soon as it encounters a referenced object • as little as one RTT for all the referenced objects FTP: separate control, data connections • FTP client contacts FTP server at port 21, specifying TCP as transport protocol • Client obtains authorization over FTP control connection client TCP control connection port 21 TCP data connection port 20 FTP server • Client browses remote directory • Server opens a second TCP by sending commands over data connection to transfer control connection. another file. • When server receives a • Control connection: “out of command for a file transfer, the band” server opens a TCP data connection to client • FTP server maintains “state”: current directory, earlier • After transferring one file, authentication server closes connection. Electronic Mail: SMTP [RFC 2821] • uses TCP to reliably transfer email message from client to server, port 25 • direct transfer: sending server to receiving server • three phases of transfer – handshaking (greeting) – transfer of messages – closure • command/response interaction – commands: ASCII text – response: status code and phrase • messages must be in 7-bit ASCII DNS name servers • no server has all name-to-IP Why not centralize DNS? address mappings • single point of failure • traffic volume • distant centralized database • maintenance doesn’t scale! local name servers: – each ISP, company has local (default) name server – host DNS query first goes to local name server authoritative name server: – for a host: stores that host’s IP address, name – can perform name/address translation for that host’s name DNS example root name server Root name server: • may not know authoritative name server • may know intermediate name server: who to contact to find authoritative name server • Iterated vs. recursive queries 6 2 7 local name server dns.eurecom.fr 1 8 3 intermediate name server dns.nwu.edu 4 5 authoritative name server dns.cs.nwu.edu requesting host surf.eurecom.fr www.cs.nwu.edu Web caches (proxy server) Goal: satisfy client request without involving origin server • user sets browser: Web accesses via cache • browser sends all HTTP requests to cache origin server client Proxy server – object in cache: cache returns object – else cache requests object from origin server, then returns object to client • Why web caching? client origin server Caching example (3) Install cache origin servers • suppose hit rate is .4 Consequence • 40% requests will be satisfied almost immediately public Internet • 60% requests satisfied by origin server • utilization of access link reduced to 60%, resulting in negligible delays (say 10 msec) • total delay = Internet delay + access delay + LAN delay = .6*2 sec + .6*.01 secs + milliseconds < 1.3 secs 1.5 Mbps access link institutional network 10 Mbps LAN institutional cache Transport Layer • Transport-layer services • Multiplexing and demultiplexing • Connectionless transport: UDP • Principles of reliable data transfer • TCP – Segment structures – Flow control – Congestion control Demultiplexing • UDP socket identified by two-tuple: (dest IP address, dest port number) • When host receives UDP segment: – checks destination port number in segment – directs UDP segment to socket with that port number • TCP socket identified by 4-tuple: – source IP address – source port number – dest IP address – dest port number • recv host uses all four values to direct segment to appropriate socket UDP: User Datagram Protocol [RFC 768] 32 bits source port # dest port # length checksum Application data (message) UDP segment format Why is there a UDP? • no connection establishment (which can add delay) • simple: no connection state at sender, receiver • small segment header • no congestion control: UDP can blast away as fast as desired Rdt1.0: reliable transfer over a reliable channel • underlying channel perfectly reliable – no bit errors – no loss of packets • separate FSMs for sender, receiver: – sender sends data into underlying channel – receiver read data from underlying channel Wait for call from above rdt_send(data) packet = make_pkt(data) udt_send(packet) sender Wait for call from below rdt_rcv(packet) extract (packet,data) deliver_data(data) receiver Rdt2.0: channel with bit errors • underlying channel may flip bits in packet – recall: UDP checksum to detect bit errors • the question: how to recover from errors: – acknowledgements (ACKs): receiver explicitly tells sender that pkt received OK – negative acknowledgements (NAKs): receiver explicitly tells sender that pkt had errors – sender retransmits pkt on receipt of NAK • new mechanisms in rdt2.0 (beyond rdt1.0): – error detection – receiver feedback: control msgs (ACK,NAK) rcvr->sender rdt2.0: FSM specification rdt_send(data) snkpkt = make_pkt(data, checksum) udt_send(sndpkt) rdt_rcv(rcvpkt) && isNAK(rcvpkt) Wait for Wait for call from ACK or udt_send(sndpkt) above NAK rdt_rcv(rcvpkt) && isACK(rcvpkt) L sender receiver rdt_rcv(rcvpkt) && corrupt(rcvpkt) udt_send(NAK) Wait for call from below rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) extract(rcvpkt,data) deliver_data(data) udt_send(ACK) rdt2.0 has a fatal flaw! What happens if ACK/NAK corrupted? • sender doesn’t know what happened at receiver! • can’t just retransmit: possible duplicate What to do? • sender ACKs/NAKs receiver’s ACK/NAK? What if sender ACK/NAK lost? • retransmit, but this might cause retransmission of correctly received pkt! Handling duplicates: • sender adds sequence number to each pkt • sender retransmits current pkt if ACK/NAK garbled • receiver discards (doesn’t deliver up) duplicate pkt stop and wait Sender sends one packet, then waits for receiver response rdt2.1: sender, handles garbled ACK/NAKs rdt_send(data) sndpkt = make_pkt(0, data, checksum) udt_send(sndpkt) rdt_rcv(rcvpkt) && ( corrupt(rcvpkt) || Wait for Wait for isNAK(rcvpkt) ) ACK or call 0 from udt_send(sndpkt) NAK 0 above rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && isACK(rcvpkt) rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && isACK(rcvpkt) L rdt_rcv(rcvpkt) && ( corrupt(rcvpkt) || isNAK(rcvpkt) ) udt_send(sndpkt) L Wait for ACK or NAK 1 Wait for call 1 from above rdt_send(data) sndpkt = make_pkt(1, data, checksum) udt_send(sndpkt) rdt2.2: a NAK-free protocol • same functionality as rdt2.1, using NAKs only • instead of NAK, receiver sends ACK for last pkt received OK – receiver must explicitly include seq # of pkt being ACKed • duplicate ACK at sender results in same action as NAK: retransmit current pkt rdt3.0: channels with errors and loss New assumption: underlying channel can also lose packets (data or ACKs) – checksum, seq. #, ACKs, retransmissions will be of help, but not enough Q: how to deal with loss? – sender waits until it is certain that data or ACK lost, then retransmits – yuck: drawbacks? Approach: sender waits “reasonable” amount of time for ACK • retransmits if no ACK received in this time • if pkt (or ACK) just delayed (not lost): – retransmission will be duplicate, but use of seq. #’s already handles this – receiver must specify seq # of pkt being ACKed • requires countdown timer rdt3.0 sender rdt_send(data) sndpkt = make_pkt(0, data, checksum) udt_send(sndpkt) start_timer rdt_rcv(rcvpkt) L rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && isACK(rcvpkt,1) rdt_rcv(rcvpkt) && ( corrupt(rcvpkt) || isACK(rcvpkt,0) ) timeout udt_send(sndpkt) start_timer rdt_rcv(rcvpkt) && notcorrupt(rcvpkt) && isACK(rcvpkt,0) stop_timer stop_timer timeout udt_send(sndpkt) start_timer L Wait for ACK0 Wait for call 0from above L rdt_rcv(rcvpkt) && ( corrupt(rcvpkt) || isACK(rcvpkt,1) ) Wait for ACK1 Wait for call 1 from above rdt_send(data) sndpkt = make_pkt(1, data, checksum) udt_send(sndpkt) start_timer rdt_rcv(rcvpkt) L Go-Back-N Sender: • k-bit seq # in pkt header • “window” of up to N, consecutive unack’ed pkts allowed • ACK(n): ACKs all pkts up to, including seq # n - “cumulative ACK” – may deceive duplicate ACKs (see receiver) • Single timer for all in-flight pkts • timeout(n): retransmit pkt n and all higher seq # pkts in window Selective Repeat • receiver individually acknowledges all correctly received pkts – buffers pkts, as needed, for eventual in-order delivery to upper layer • sender only resends pkts for which ACK not received – sender timer for each unACKed pkt • sender window – N consecutive seq #’s – again limits seq #s of sent, unACKed pkts Selective repeat: sender, receiver windows TCP segment structure URG: urgent data (generally not used) ACK: ACK # valid PSH: push data now (generally not used) RST, SYN, FIN: connection estab (setup, teardown commands) Internet checksum (as in UDP) 32 bits source port # dest port # sequence number acknowledgement number head not UA P R S F len used checksum Receive window Urg data pnter Options (variable length) application data (variable length) counting by bytes of data (not segments!) # bytes rcvr willing to accept TCP Round Trip Time and Timeout EstimatedRTT = (1- )*EstimatedRTT + *SampleRTT • Exponential weighted moving average • influence of past sample decreases exponentially fast • typical value: = 0.125 TCP Flow control: how it works • Rcvr advertises spare room by including value of RcvWindow in segments (Suppose TCP receiver discards out-of-order segments) • spare room in buffer = RcvWindow = RcvBuffer-[LastByteRcvd LastByteRead] • Sender limits unACKed data to RcvWindow – guarantees receive buffer doesn’t overflow TCP Congestion Control • end-end control (no network assistance) How does sender perceive congestion? • sender limits transmission: • loss event = timeout or 3 duplicate acks LastByteSent-LastByteAcked CongWin • Roughly, rate = CongWin Bytes/sec RTT • CongWin is dynamic, function of perceived network congestion • TCP sender reduces rate (CongWin) after loss event three mechanisms: – AIMD – slow start – conservative after timeout events Summary: TCP Congestion Control • When CongWin is below Threshold, sender in slow-start phase, window grows exponentially. • When CongWin is above Threshold, sender is in congestion-avoidance phase, window grows linearly. • When a triple duplicate ACK occurs, Threshold set to CongWin/2 and CongWin set to Threshold. • When timeout occurs, Threshold set to CongWin/2 and CongWin is set to 1 MSS. Refinement • After 3 dup ACKs: – CongWin is cut in half – window then grows linearly • But after timeout event: – Enter “slow start” – CongWin instead set to 1 MSS; – window then grows exponentially – to a threshold, then grows linearly Philosophy: • 3 dup ACKs indicates network capable of delivering some segments • timeout before 3 dup ACKs is “more alarming” Why is TCP fair? Two competing sessions: • Additive increase gives slope of 1, as throughout increases • multiplicative decrease decreases throughput proportionally equal bandwidth share R loss: decrease window by factor of 2 congestion avoidance: additive increase loss: decrease window by factor of 2 congestion avoidance: additive increase Connection 1 throughput R