* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download ima

Survey

Document related concepts

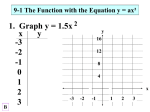

Transcript

“Expansion” in Power Law and Scale Free Graphs Milena Mihail Georgia Tech with Christos Gkantsidis, Christos Papadimitriou and Amin Saberi 1 Graphs with Skewed Degree Sequences Communication Networks Metabolic Networks This Talk: Algorithmic Issues, “Expansion”, spectral gap determine 2 performace of key algorithms. How does Congestion Scale? Demand: n2, uniform. What is load of max congested link, in optimal routing ? Sprint ISPs: 900-14K Routers: 500-200K 500K-3B AT&T WWW: P2P: hundred Ks 3 Real Internet Topologies E[degree]~3 Degrees not Concentrated around mean Not Erdos-Renyi CAIDA http://www.caida.org 4 Degree-Frequency Power Law Models by Kumar et al 00, x Bollobas et al 01, x Fabrikant et al 02 frequency Erdos-Renyi sharp concentration 1 2 3 4 5 E[d] = const., but No sharp concentration 10 degree 100 5 Power Laws [Interdomain Routing: Faloutsos et al 99] [WWW: Kumar et al 99, Barabasi-Albert 99] Degree-Frequency Rank-Degree Eigenvalues (Adjacency Matrix) 6 Models for Power Law Graphs EVOLUTIONARY Macroscopic : Growth & Preferential Attachment Simon 55, Barabasi-Albert 99, Kumar et al 00, Bollobas-Riordan 01. Microscopic : Growth & Multiobjective Optimization, QoS vs Cost Fabrikant-Koutsoupias-Papadimitriou 02. STRUCTURAL (aka CONFIGURATIONAL) “Random” graph with “power law” degree sequence. 7 Structural Random Graph Model Given Choose random perfect matching over minivertices Molley&Reed 95-98, Aiello,Chung,Lu 00, Tagmunarunkit et al 02 8 Congestion in the “Core” Theorem [Gkantsidis,MM, Saberi 02]: For a random graph arising from degree sequence O(n½ ) ≥ d1≥d2≥…≥dn ≥3 there is a flow that routes demand di * dj between all vertices i and j with max link congestion O(n log2n) almost surely. 9 Proof : Step 1 : Approximation algorithms for multicommodity flow reduce congestion to conductance (special case of sparsest cut). Step 2 : Bound conductance - MAIN LEMMA. 10 Proof, Step 1 : Reduce to Conductance By Maximum multi-commodity flow, [Leighton & Rao 95] 11 Proof, Step 2 : Main Lemma [Gkantsidis,MM, Saberi 02]: 12 Proof of MAIN LEMMA: 13 Proof of MAIN LEMMA: 14 Proof of MAIN LEMMA: Stirling 15 Proof of MAIN LEMMA: BIG SMALL ignore Stirling 16 In an Evolutionary Model ? Growth with Pref. Attachment One vertex at a time New vertex attaches to d existing vertices 17 Reduction to Random Matching [Bollobas & Riordan 01] t=5 t=1 t=2 t=3 t=4 18 Reduction to Random Matching [Bollobas & Riordan 01] t=5 t=1 t=2 t=3 t=4 19 Reduction to Random Matching [Bollobas & Riordan 01] 20 In an Evolutionary Model ? Growth with Pref. Attachment Theorem [MM, Saberi 02]: For a graph grown with preferential attachment with d ≥ 3 there is a flow that routes demand di * dj between all vertices i and j with max link congestion O(n log n) almost surely. Main Lemma: almost surely. Open Question: Analyze a graph grown one vertex or edge at a time, where with probability a new vertex comes and attaches preferentially and with probability a new edge grows preferentially between existing vertices. 21 Spectral Implication Theorem: Eigenvalue separation for stochastic normalization of adjacency matrix [Alon 85, Jerrum&Sinclair 88] 22 Spectra of “Real” Internet 23 Spectral Implications Theorem: Eigenvalue separation for stochastic normalization of adjacency matrix [Alon 85, Jerrum&Sinclair 88] On the eigenvalue Power Law [M.M. & Papadimitriou 02] Rank-Degree Eigenvalues (Adjacency Matrix) Using matrix perturbation [Courant-Fisher theorem] in a sparse random graph model . 24 Theorem : [M.M. & Papadimitriou 02] Wwith probability at least Ffor large enough 25 Proof : Step 1. Decomposition LR = Vertex Disjoint Stars - LR-extra LL RR 26 Proof: Step 2: Vertex Disjoint Stars Degrees of each Vertex Disjoint Stars Sharply Concentrated around its Mean d_i Hence Principal Eigenvalue Sharply Concentrated around 27 Proof: Step 3: LL, RR, LR-extra LR-extra has max degree LL has edges RR has max degree 28 Proof: Step 3: LL, RR, LR-extra LR-extra has max degree LL has edges RR has max degree 29 Proof: Step 4: Matrix Perturbation Theory Vertex Disjoint Stars have principal eigenvalues All other parts have max eigenvalue QED 30 Implication for Info Retrieval Term-Norm Distribution Problem : Spectral filtering, without preprocessing, reveals only the large degrees. 31 Implication for Info Retrieval Term-Norm Distribution Problem : Spectral filtering, without preprocessing, reveals only the large degrees. Local information. No “latent semantics”. 32 Implication for Information Retrieval Term-Norm Distribution Problem : Application specific preprocessing (normalization of degrees) reveals clusters: WWW: related to searching, Kleinberg 97 IR, collaborative filtering, … Internet: related to congestion, Gkantsidis et al 02 Open : Formalize “preprocessing”. 33 Further Directions: Generalize theory of Regular Expanders • Routing Integral paths? Peleg&Upfal’88 … Broder,Frieze&Upfal’01 Short paths? Kleinberg&Rubinfeld’97 Reliability? •Cover time? (Experimental work: Gkantsidis, MM, Zegura 02.) •Hitting time? •Planted model? Related to Crawling Related to Searching Information Retrieval 34 Metabolic Networks Statistics of fixed size subgraphs? Related to “motifs” in metabolic networks. Model (explain) heavy tailed statistics in noncoding part of DNA? Related to stages of species evolution. 35 Evaluation of Synthetic Topology Generators Core of the Network Entire Topology 36