* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download X - Physics

History of network traffic models wikipedia , lookup

Dragon King Theory wikipedia , lookup

Central limit theorem wikipedia , lookup

Birthday problem wikipedia , lookup

History of statistics wikipedia , lookup

Karhunen–Loève theorem wikipedia , lookup

Foundations of statistics wikipedia , lookup

Poisson distribution wikipedia , lookup

Probability and Statistics

Many of the process involved with detection of particles are statistical in nature

Number of ion pairs created when proton goes through 1 cm of gas

Energy lost by an electron going through 1 mm of lead

The understanding and interpretation of all experimental data depend

on statistical and probabilistic concepts:

“The result of the experiment was inconclusive so we had to use statistics”

how do we extract the best value of a quantity from a set of measurements?

how do we decide if our experiment is consistent/inconsistent with a given theory?

how do we decide if our experiment is internally consistent?

how do we decide if our experiment is consistent with other experiments?

Definition of probability: Lets define probability by example:

Suppose we have N trials and a specified event occurs r times.

For example the trial could be rolling a dice and the event could be rolling a 6.

We define the probability (P) of an event (E) occurring as:

P(E) = r/N when N

Examples: coin toss P(heads) = 0.5

six sided dice P(6) = 1/6

(P(1) = P(2) = P(3) = P(4) = P(5) = P(6) for “honest” die)

Remember: P(heads) should approach 0.5 the more times you toss the coin.

Obviously for a single coin toss we can never get P(heads) = 0.5!

880.A20 Winter 2002

Richard Kass

Probability and Statistics

By definition probability is a non-negative real number bounded by 0P 1

If P = 0 then the event never occurs

If P = 1 then the event always occurs

intersection, union

Events are independent if: P(AB)=P(A)P(B)

Events are mutually exclusive (disjoint) if: P(AB)=0 or P(AB)= P(A)+P(B)

The sum (or integral) of all probabilities if they are mutually exclusive must = 1.

Probability can be a discrete or a continuous variable.

In the discrete case only certain values of P are allowed.

example of discrete case: tossing a six-sided dice.

P(xi) = Pi here xi = 1, 2, 3, 4, 5, 6 and Pi = 1/6 for all xi.

another example is tossing a coin. Only 2 choices, heads or tails.

For both of the above discrete examples (and in general) when we sum over all

mutually exclusive possibilities:

P xi 1

i

880.A20 Winter 2002

Richard Kass

Probability and Statistics

Continuous Probability: In this case P can be any number between 0 and 1.

We can define a “probability density function”, pdf, f (x)

f x dx dP x a x dx with a a continuous variable

The probability for x to be in the range a x b is:

b

P(a x b) f x dx

a

Just like the discrete case the sum of all probabilities must equal 1.

For the continuous case this means:

We say that f(x) is normalized to one.

f x dx 1

NOTE: The probability for x to be exactly some number is zero since:

x a

f x dx 0

x a

Aside: Probability theory is an interesting branch of mathematics.

Calculus of Probabilities set theory.

880.A20 Winter 2002

Richard Kass

Probability and Statistics

Examples of some common P(x)’s and f(x)’s:

Discrete = P(x)

Continuous = f(x)

binomial

uniform, i.e. = constant

Poisson

Gaussian

exponential

chi square

How do we describe a probability distribution?

mean, mode, median, and variance

For a continuous distribution these quantities are defined by:

Mean

average

xf(x)dx

Mode

most probable

f x

0

x x a

Median

50% point

a

0.5

f (x)dx

Variance

width of distribution

2

f (x)x dx

2

For discrete distribution the mean and variance are defined by:

n

n

2

xi / n

( xi )2 / n

i 1

880.A20 Winter 2002

i 1

Richard Kass

Probability and Statistics

Some continuous pdfs.

v=1 Cauchy (Breit-Wigner)

v=gaussian

Chi-square distribution

880.A20 Winter 2002

u

Student t distribution

Richard Kass

Probability and Statistics

We use results from probability and statistics as a way of indicating how

“good” a measurement is.

The most common quality indicator is relative precision.

Relative precision = [uncertainty of measurement]/measurement

Uncertainty in measurement is usually square root of variance:

= standard deviation

Example: we measure table to be 10 inches with uncertainty of 1 inch.

the relative precision is 1/10=0.1 or 10% (% relative precision)

is usually calculated using the technique of “propagation of errors”.

However this is not what most people think it is!

We will discuss this in more detail soon.

880.A20 Winter 2002

Richard Kass

Probability and Statistics

Some comments on accuracy and precision:

Accuracy: The accuracy of an experiment refers to how close the experimental measurement

is to the true value of the quantity being measured.

Precision: This refers to how well the experimental result has been determined, without

regard to the true value of the quantity being measured.

Note: Just because an experiment is precise it does not mean it is accurate!!

The above figure shows various measurements of the neutron lifetime over the years.

Note the big jump downward in the 1960’s. Are any of these measurements accurate?

880.A20 Winter 2002

Richard Kass

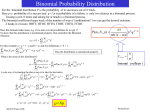

Binomial Probability Distributions

For the binomial distribution P is the probability

of m successes out of N trials. Here p is probability of a success

and q=1-p is probability of a failure.

P(m, N , p)

N!

p m q N m

m!( N m)!

Does this formula make sense, e.g. if we sum over all possibilities do we get 1?

To show that this distribution is normalized properly, first remember the Binomial

Theorem:

k

k k l l

k

(a b) a b

l 0 l

For this example a = q = 1 - p and b = p, and (by definition) a +b = 1.

N

N

N

P(m,

N,

p)

mp m q N m ( p q)N 1

Thus the distribution is normalized properly.

m0

m0

Tossing a coin N times

and asking for m heads

is a binomial process.

What is the mean of this distribution?

N

mP(m, N, p)

m 0

N

P(m, N, p)

N

mP(m,N, p)

m 0

N

N

mmp

m

q

N m

m0

m0

A cute way of evaluating the above sum is to take the derivative:

N

N

N

N p m q N m 0 m

N p m 1q N m

N p m (N m)(1 p)N m 1

m

p

m 0 m

m 0

m 0 m

N

N

mmp

m0

p

1

m 1

q

N m

N

N

mp

m

(N m)(1 p)

N m 1

m0

N

N

N

N

N

pm q N m (1 p) 1 N

p m (1 p) N m (1 p)1

m

m

m

mmpm (1 p) N m

m0

m0

m0

N

p 1 (1 p)1 N(1) (1 p)1

= Np for a binomial distribution

880.A20 Winter 2002

Richard Kass

Binomial Probability Distribution

What’s the variance of a binomial distribution?

Using a trick similar to the one used for the average we find:

N

2

(m ) P(m, N , p)

2 m 0

N

Npq

P(m, N , p )

m 0

Example 1: Suppose you observed m special events (or successes) in a sample of N

events. The measured probability (sometimes called “efficiency”) for a special event to

occur is m / N . What is the error ( standard deviation or ) in ? Since N is a

fixed quantity it is plausible (we will show it soon) that the error in is related to the

error ( standard deviation or m) in m by:

m / N .

This leads to:

m / N Npq / N N (1 ) / N (1 ) / N

This is sometimes called "error on the efficiency".

Thus you want to have a sample (N) as large as possible to reduce the uncertainty in the

probability measurement!

Note: , the “error in the efficiency” 0 as 0 or 1.

880.A20 Winter 2002

Richard Kass

Binomial Probability Distributions

Example 2: Suppose a baseball player's batting average is 0.333 (1 for 3 on average).

Consider the case where the player either gets a hit or makes an out (forget about walks here!).

In this example: p = prob. for a hit = 0.333 and q = 1 - p = 0.667 (prob. for "no hit").

On average how many hits does the player get in 100 at bats?

= Np = 100(0.33) = 33 hits

What's the standard deviation of the number of hits in 100 at bats?

= (Npq)1/2 = (100·0.33·0.67)1/2 4.7 hits

Thus we expect 33 ± 5 hits per 100 at bats

Consider a game where the player bats 4 times:

Probability of 0/4 = (0.67)4 = 20%

Probability of 1/4 = [4!/(3!1!)](0.33)1(0.67)3 = 40%

Probability of 2/4 = [4!/(2!2!)](0.33)2(0.67)2 = 29%

Probability of 3/4 = [4!/(1!3!)](0.33)3(0.67)1 = 10%

Probability of 4/4 = [4!/(0!4!)](0.33)4(0.67)0 = 1%

Note: the probability of getting at least one hit is: 1 - P(0) = 0.8

880.A20 Winter 2002

Richard Kass

Poisson Probability Distribution

Another important discrete distribution is the Poisson distribution. Consider the following conditions:

a) p is very small and approaches 0.

For example suppose we had a 100 sided dice instead of a 6 sided dice.

Here p = 1/100 instead of 1/6. Suppose we had a 1000 sided dice, p = 1/1000...etc

b) N is very large, it approaches .

For example, instead of throwing 2 dice, we could throw 100 or 1000 dice.

The number of counts

c) The product Np is finite.

in a time interval is a

A good example of the above conditions occurs when one considers radioactive decay.

20

Suppose we have 25 mg of an element. This is 10 atoms.

Poisson process.

Suppose the lifetime () of this element = 1012 years 5x1019 seconds.

The probability of a given nucleus to decay in one second = 1/ = 2x10-20/sec.

For this example:

N = 1020 (very large) p = 2x10-20 (very small)

Np = 2 (finite!)

We can derive an expression for the Poisson distribution by taking the appropriate limits of the binomial distribution.

P(m, N, p)

Using condition b) we obtain:

q

N m

(1 p)

N!

p mq N m

m!(N m)!

N!

N(N 1) (N m 1)(N m)!

Nm

(N m)!

(N m)!

N m

Putting this altogether we obtain:

p2 (N m)(N m 1)

( pN)2

pN

1 p(N m)

... 1 pN

e

2!

2!

N m p me p N e m

P(m, N, p)

m!

m!

Here we've let = pN.

It is easy to show that: = Np = mean of a Poisson distribution

2 = Np = = variance of a Poisson distribution.

Note: m is always an integer 0 however, does not have to be an integer.

880.A20 Winter 2002

Richard Kass

Poisson Probability Distribution

Radioactivity Example:

a) What’s the probability of zero decays in one second if the average = 2 decays/sec?

e 2 20 e2 1

2

P(0,2)

e 0.135 13.5%

0!

1

b) What’s the probability of more than one decay in one second if the average = 2 decays/sec?

e 2 20 e 2 21

2

2

P( 1,2) 1 P(0,2) P(1,2) 1

1 e 2e 0.594 59.4%

0!

1!

c) Estimate the most probable number of decays/sec?

P(m, ) m* 0

We want:

m

To solve this problem its convenient to maximize

lnP(m, ) instead of P(m, ).

e m

ln P(m, ) ln (

) m ln ln m!

m!

In order to handle the factorial when take the derivative we use Stirling's Approximation:

ln(m!) mln(m)-m

ln P(m, )

( m * ln ln m *!)

( m * ln m * ln m * m*) ln ln m * 1 1 0

m

m

m

m* =

In this example the most probable value for m is just the

m average of the distribution. Therefore if you observed m

events in an experiment, the error on m is

.

Caution: The above derivation is only approximate since we used Stirlings Approximation which is only

valid for large m. Another subtle point is that strictly speaking m can only take on integer values

while is not restricted to be an integer.

0.4

0.5

0.35

poisson

binomialN=3, p=1/3

0.3

0.3

Probability

Comparison of

Binomial and Poisson

distributions with mean

=1.

Probability

0.4

0.2

binomialN=10,p=0.1

poisson

0.25

0.2

0.15

0.1

0.1

0.05

0

0

880.A20 Winter 2002

1

2 m 3

4

5

0

0.0

1.0

2.0

3.0

m

4.0

5.0

6.0

7.0

Richard Kass

Not much

difference

between

them here!

Gaussian Probability Distribution

The Gaussian probability distribution (or “bell shaped curve” or Normal

distribution) is perhaps the most used distribution in all of science. Unlike the

binomial and Poisson distribution the Gaussian is a continuous distribution. It is

given by:

p(y)

1

e

2

Plot of gaussian pdf

y

2 2

2

with = mean of distribution (also at the same place as mode and median)

2 = variance of distribution

y is a continuous variable (-y

The probability (P) of y being in the range [a, b] is given by an integral:

1

p(x)

e

2

P(x)

(x )2

2

2

gaussian

2

( y )

1 b 22

P(a y b)

dy

e

2 a

x

Since this integral cannot be evaluated in closed form for arbitrary a and b (at least

no one's figured out how to do it in the last couple of hundred years) the values of

the integral have to be looked up in a table.

The total area under the curve is normalized to one.

In terms of the probability integral we have:

1

P( y )

e

2

( y ) 2

2 2

dy 1

Quite often we talk about a measurement being a certain number of

standard deviations () away from the mean () of the Gaussian.

We can associate a probability for a measurement to be

|- n| from the mean just by calculating the area outside of this region.

n

Prob. of exceeding ±n

0.67

0.5

1

0.32

2

0.05

3

0.003

4

0.00006

880.A20 Winter 2002

It is very unlikely (<0.3%) that a

measurement taken at random from

a gaussian pdf will be more than

3from the true mean of the

distribution.

Richard Kass

Central Limit Theorem

Why is the gaussian pdf so important ?

“Things that are the result of the addition of lots of small effects tend to become Gaussian”

The above is a crude statement of the Central Limit Theorem:

A more exact statement is:

Let Y1, Y2,...Yn be an infinite sequence of independent random variables each with the

2

same probability distribution. Suppose that the mean () and variance ( ) of this

distribution are both finite. Then for any numbers a and b:

Actually, the Y’s can

Y Y 2 Y n n

lim P a 1

b

n

n

1

2

b

1/2 y2

e

dy

a

be from different pdf’s!

Thus the C.L.T. tells us that under a wide range of circumstances the probability

distribution that describes the sum of random variables tends towards a Gaussian

distribution as the number of terms in the sum .

Alternatively,

Y

Y

lim P a

b lim P a

b

/ n

n

n

m

1 b 1/2 y 2

dy

e

2

a

Note: m is sometimes called “the error in the mean” (more on that later).

For CLT to be valid:

and of pdf must be finite

No one term in sum should dominate the sum

880.A20 Winter 2002

Richard Kass

Central Limit Theorem

Best illustration of the CLT.

a) Take 12 numbers (ri) from your computer’s random number generator

b) add them together

c) Subtract 6

d) get a number that is from a gaussian pdf !

Computer’s random number generator gives numbers distributed uniformly in the interval [0,1]

A uniform pdf in the interval [0,1] has =1/2 and 2=1/12

12

12

12

ri 12

ri 12 (1 / 2)

ri 12 (1 / 2)

Y

Y

Y

Y

n

1

2

3

n

i 1

i 1

i 1

P a

b P a

b P 6

6 P 6

6

n

n

(1 / 12 ) 12

(1 / 12 ) 12

12

1 6 ( y 2 / 2)

P 6 ri 6 6

dy

e

2 6

i 1

Thus the sum of 12 uniform

random numbers

minus 6 is distributed as if it

came from a gaussian pdf

with =0 and =1.

A) 5000 random numbers

C) 5000 triplets (r1+ r2+ r3)

of random numbers

B) 5000 pairs (r1+ r2)

of random numbers

D) 5000 12-plets (r1+ ++r12)

of random numbers.

E) 5000 12-plets

E

(r + ++r -6) of

1

12

random numbers.

Gaussian

=0 and =1

-6

880.A20 Winter 2002

0

+6

Richard Kass

Propagation of errors

Suppose we measure the branching fraction BR(D0+-) using the number

of produced D0 mesons (Nproduced), the number of D0+- decays found

(Nfound), and the efficiency for finding a D0+– decay ().

BR(D0+-)=Nfound/(Nproduced),

If we know the uncertainties (’s) of Nproduced, Nfound, and what is the

uncertainty on BR(D0+-) ?

More formally we could ask, given that we have a functional relationship between several

measured variables (x, y, z), i.e.

Q = f(x, y, z)

What is the uncertainty in Q if the uncertainties in x, y, and z are known? Usually when we talk

about uncertainties in a measured variable such as x we usually assume that the value of x

represents the mean of a Gaussian distribution and the uncertainty in x is the standard deviation

() of the Guassian distribution. A word of caution here, not all measurements can be

represented by Gaussian distributions, but more on that later!

To answer this question we use a technique called Propagation of Errors.

880.A20 Winter 2002

Richard Kass

Propagation of errors

To calculate the variance in Q as a function of the variances in x and y we use the following:

2

2

Q2 2x Q / x 2y Q / y 2 x y Q / x Q / y

Note: if x and y are uncorrelated (xy =0) then the last term in the above equation is 0.

Assume we have several measurement of the quantities x (e.g. x1, x2...xi) and y (e.g. y1, y2...yi).

We can calculate the average of x and y using:

N

N

x xi / N and y yi / N

i 1

i1

Qi f(xi, yi)

Q f(x, y) evaluated at the average values

Now expand Qi about the average values:

Q

Q

Qi f ( x , y ) (xi x )

(yi y )

higher order terms

x x

y y

Let's define:

Assume that we neglect the higher order terms (i.e. the measured values are close to the average values). We can

rewrite the above as:

Q

Q

Qi Q (x i x )

(yi y )

x x

y y

We would like to find the variance of Q. By definition the variance of Q is just:

2

1 N

(Qi Q)

N i1

2

Q

880.A20 Winter 2002

Richard Kass

Propagation of errors

If we expand the summation using the definition of Q - Qi we get:

2

2

2

2

N

1 N

Q

1 N

Q

2

2 (x )(y ) Q Q

Q (xi x )

(yi y )

i

x

i

y

x

x y

y

N i1

N

i1

N

i1

x

x

y

y

Since the derivatives are all evaluated at the average values ( x, y) we can pull the derivatives outside

of the summations. Finally, remembering the definition of the variance we can write:

2

2

N

2

2 Q

2 Q

2 Q Q

(x )(y )

Q x

y

i

x

i

y

x

y

N x y i 1

y

x

x

y

If the measurements are uncorrelated then the summation in the above equation will be very close to

zero (if the variables are truly uncorrelated then the sum is 0) and can be neglected. Thus for

uncorrelated variables we have:

2

2

2

2 Q

2 Q

Q x

y

uncorrelated errors

x

y

y

x

If however x and y are correlated, then we define xy using: xy

1 N

(xi x )(yi y )

N i 1

The variance in Q including correlations is given by:

2

2

2

2 Q

2 Q

2Q Q

Q x

y

xy

x

x y

y

x

x

y

correlated errors

y

Example: Error in BR(D0+– ). Assume: Nproduced =106 103, Nfound =10 3, = 0.02 0.002

2

BR N

2

BR

2

pr

BR

2

N fd

N

pr

6

2

2

BR

BR

2

2

N pr

N fd

2

2

N

fd

N 2

pr

2

2

N fd

1

N

pr

2

2 N fd

2

N pr

2

10

1

10

10

9

( 4 10 6 )

1.6 10 4

12

6

4

6

0.02 10

0.02 10

4 10 10

880.A20 Winter 2002

Richard Kass

2

Propagation of errors

Example: The error in the average.

The average of several measurements each with the same uncertainty () is given by:

2

2

x1 x2 xn

n

2

2

1

1

1

1

2x

2 2 n 2

x 2x 2

1 x

n x

n

n

n

n

1

x 2

n

2

2

2

2

2

n

“error in the mean”

This is a very important result! It says that we can determine the mean better by combining measurements.

Unfortunately, the precision only increases as the square root of the number of measurements.

Do not confuse with !

is related to the width of the pdf (e.g. gaussian) that the measurements come from.

It does not get smaller as we combine measurements.

A slightly more complicated problem is the case of the weighted average or unequal ’s:

x1

x2

xn

12 22

n2

1 / 12 1 / 22 1 / n2

Using same procedure

as above we obtain:

880.A20 Winter 2002

2

1

1 / 12

1 / 22

1 / n2

“error in the weighted mean”

Richard Kass

Propagation of errors

Problems with Propagation of Errors:

In calculating the variance using propagation of errors we usually assume that we are dealing with Gaussian like errors

for the measured variable (e.g. x). Unfortunately, just because x is described by a Gaussian distribution

does not mean that f(x) will be described by a Gaussian distribution.

Example: when the new distribution is Gaussian.

Let y = Ax, with A = a constant and x a Gaussian variable. Let the pdf for x be gaussian:

p(x, x , x )dx

e

( x x ) 2

2 2x

x 2

dx

Then y = Ax and y = Ax. Putting this into the above equation we have:

p(x, x , x )dx

e

Ae

y 2

dx

e

( y / A y / A )

2

2

x 2

( y y ) 2

2 2y

( x x )

2 2x

dx

e

2( / A )

2

y

2

y / A 2

dx

( y y ) 2

2 2y

y 2

dy

p( y, y , y )dy

Thus the new pdf for y, p(y, y, y) is also given by a Gaussian probability distribution function.

100

y = 2x with x = 10 2

dN/dy

80

24

y

x

60

Start with a gaussian with =10, =2.

Get another gaussian with =20, = 4

40

20

0

880.A20 Winter 2002

0

10

20

y

30

40

Richard Kass

Propagation of errors

Example when the new distribution is non-Gaussian: Let y = 2/x

The transformed probability distribution function for y does not have the form of a Gaussian pdf.

100

y = 2/x with x = 10 2

dN/dy

80

2

2/

y

x x

Start with a gaussian with =10, =2.

DO NOT get another gaussian !

Get a pdf with = 0.2, = 0.04.

This new pdf has longer tails than a gaussian pdf.

60

40

Prob(y>y+5y) =5x10-3, for gaussian 3x10-7

20

0

0.1

0.2

0.3

0.4

0.5

0.6

y

Unphysical situations can arise if we use the propagation of errors results blindly!

Example: Suppose we measure the volume of a cylinder: V = R2L.

Let R = 1 cm exact, and L = 1.0 ± 0.5 cm.

Using propagation of errors we have: V = R2L = /2 cm3.

and V = ± /2 cm3

However, if the error on V (V) is to be interpreted in the Gaussian sense then

the above result says that there’s a finite probability (≈ 3%) that the volume (V) is < 0 since V is

only two standard deviations away from than 0!

Clearly this is unphysical ! Care must be taken in interpreting the meaning of V.

880.A20 Winter 2002

Richard Kass

Maximum Likelihood Method (MLM)

Suppose we are trying to measure the true value of some quantity (xT). We make repeated

measurements of this quantity {x1 , x2 ...x n} . The standard way to estimate xT from our

measurements is to calculate the mean value of the measurements:

N

xi

x i 1

N

and set xT x .

Does this procedure make sense?

The MLM answers this question and provides a method for estimating parameters from existing data.

Statement of the Maximum Likelihood Method (MLM):

Assume we have made N measurements of x {x1, x 2 ... xn }.

Assume we know the probability distribution function that describes x: f (x, a )

Assume we want to determine the parameter a.

The MLM says that we pick asuch as to maximize the probability of getting the

measurements (the xi's) that we obtained!

How do we use the MLM?

The probability of measuring x1 is f (x1 , a )

The probability of measuring x2 is f (x2 , a )

The probability of measuring xn is f (xn , a)

If the measurements are independent, the probability of getting our measurements is:

L f (x1 , a ) f (x2 ,a ) f (x n , a ) .

L is called the Likelihood Function. It is convenient to write L as:

N

L f (xi ,a )

i 1

We want to pick the a that maximizes L. Thus we want to solve:

880.A20 Winter 2002

L

0

a a a *

Richard Kass

Maximum Likelihood Method (MLM)

In practice it is easier to maximize lnL rather than L itself since lnL turns the product into

a summation. However, maximizing lnL gives the same asince L and lnL are both

maximum at the same time.

N

ln L ln f ( x i , a )

i 1

The maximization condition is now:

ln L

a

( ln f ( xi ,a ))

0

i 1 a

a a *

N

a a *

Note: acould be an array of parameters or just a single variable. The equations to

determine a range from simple linear equations to coupled non-linear equations.

Example: Let f(xa) be given by a Gaussian distribution and let athe mean of the Gaussian. We

want the best estimate of a from our set of n measurements (x1, x2, ..xn). Let’s assume that is the

same for each measurement.

1

f ( xi , a )

e

2

The likelihood function for this problem is:

(x a)2

n

( x a )2

(x i a ) 2

22

( x

a)2

(x

a )2

n

n ( x a )2

i

2 2

i

1

2

n

1

1

1

i

2 2

2 2

2 2

2 2

L f ( xi ,a )

e

e

e

e

e 1

i 1

i 1

2

2

2

We want to find the a that maximizes the likelihood function (actually we will maximize lnL).

n ( x a )2

ln L

1

i

n

ln

0

2 i1 2 2

a

a

n

n

Since is the same for each data point we can factor it out:

n

xi

i1

n

a 0 or

i 1

Finally, solving for a we have:

n

xi

na

i 1

Average !

1 n

xi

n i1

For the case where the are different for each data point we get the weighted average:

n

xi

( 2 )

i 1

a n 1i

( 2 )

a

i 1

880.A20 Winter 2002

i

Richard Kass

Maximum Likelihood Method (MLM)

Example: Let f(x,a) be given by a Poisson distribution with athe mean of the Poisson.

We want the best estimate of a from our set of n measurements (x1, x2, ..xn). We want the

best estimate of a from our set of n measurements (x1, x2, ..xn). The Poisson distribution

is a discrete distribution, so (x1, x2, ..xn) are integers. The Poisson distribution is given by:

ea a x

f (x, a )

x!

The likelihood function for this problem is:

n

xi

e aa i e a a 1 e aa 2 e a a n e na a i 1

..

x1 !

x2 !

xn !

x1 !x2 !.. xn !

i1

i1 xi !

We want to find the a that maximizes the likelihood function (actually we will maximize

lnL).

n

x

n

L f (xi , a )

x

x

x

n

xi

d ln L

d

d ln L

na ln a xi ln(x1 !x 2 !.. xn !) or

n i 1 0

da

da

da

a

i1

1 n

a xi .

Average !

n i1

n

Some general properties of the maximum likelihood method:

a) For large data samples (large n) the likelihood function, L, approaches a Gaussian distribution.

b) Maximum likelihood estimates are usually consistent.

By consistent we mean that for large n the estimates converge to the true value of the

parameters we wish to determine.

c) For many instances the estimate from MLM is unbiased.

Unbiased means that for all sample sizes the parameter of interest is calculated correctly.

d) The maximum likelihood estimate of a parameter is the estimate with the smallest variance. Cramer-Rao bound

We say the estimate is efficient.

e) The maximum likelihood estimate is sufficient.

By sufficient we mean that it uses all the information in the observations (the xi’s).

f) The solution from MLM is unique.

The bad news is that we must know the correct probability distribution for the problem at hand!

880.A20 Winter 2002

Richard Kass

Maximum Likelihood Method (MLM)

How do we calculate errors (’s) using the MLM?

Start by looking at the case where we have a gaussian pdf. The likelihood function is:

1

L f (xi ,a )

e

i 1

i 1 2

n

n

(xi a ) 2

2 2

n

1

e

2

(x1 a )2

2 2

e

(x 2 a ) 2

2 2

e

(xn a )2

2 2

n

n ( x a )2

i

2 2

1 i1

e

2

It is easier to work with lnL:

( xi a ) 2

ln L n ln( 2 )

2

i 1 2

n

If we take two derivatives of lnL with respect to a we get:

2 ln L

n ( xi a )

n

a i 1 2

a 2

2

n (x a )

ln L

2 i 2

a

i 1 2

For the case of a gaussian pdf we get the familiar result:

2 ln L

2

a

2

n

a

2

1

The big news here is that the variance of the parameter of interest

is related to the 2nd derivative of the likelihood function.

Since our example uses a gaussian pdf the result is exact. More important, the result is

asymptotically true for ALL pdf’s since for large samples (n) all likelihood functions

become “gaussian”.

880.A20 Winter 2002

Richard Kass

Maximum Likelihood Method (MLM)

The previous example was for one variable. We can generalize the result to the case where

we determine several parameters from the likelihood function (e.g. a1, a2, … an):

2 ln L

Vij

a a

i j

1

Here Vij is a matrix, (the “covariance matrix” or “error matrix”) and it is evaluated at the values

of (a1, a2, … an) that maximize the likelihood function.

In practice it is often very difficult or impossible to evaluate the 2 nd derivatives.

The procedure most often used to determine the variances in the parameters relies on the property

that the likelihood function becomes gaussian (or parabolic) asymptotically.

We expand lnL about the ML estimate for the parameters. For the one parameter case we have:

ln L

1 2 ln L

*

ln L(a ) ln L(a )

(a a )

(a a * )2

2

a a *

2! a a *

*

Since we are evaluating lnL at the value of a (=a*) that maximizes L, the term with the 1st derivative is zero.

Using the expression for the variance of a on the previous page and neglecting higher order terms we find:

ln L(a ) ln Lmax

(a a * )2

n2

*

or ln L(a n a ) ln Lmax

2

2

2 a

Thus we can determine the n limits on the parameters by finding the values where lnL

decreases by n2/2 from its maximum value.

880.A20 Winter 2002

Richard Kass

This is

what

MINUIT

does

Maximum Likelihood Method (MLM)

Example: MLM and determining slope and intercept of a line

Assume we have a set of measurements: (x1, y1), (x2, y22… (xn, ynnand the

points are thought to come from a straight line, y=a+bx, and the measurements come

from a gaussian pdf. The likelihood function is:

1

L f (xi , a , b)

e

i1

i1 i 2

n

n

( y i q (x i ,a , b )) 2

2

2 i

1

e

i1 i 2

n

( yi a b x i ) 2

2

2 i

We wish to find the a and b that maximizes the likelihood function L.

Thus we need to take some derivatives:

ln L

n

1

(yi a bxi )2

ln

0

a

a i 1

2 i2

i 2

2

ln L n

1

(yi a 2 bxi ) 0

ln

b

b i 1 i 2

2 i

We have to solve the two equations for the two unknowns, a and b .

We can get an exact solution since these equations are linear in a and b.

yi n x 2i n yi x i n x i

2 2 2 2

i1 i i1 i

i1 i i1 i

a n

2

n x

1 n x

2 i2 ( i2 )2

i1 i i1 i

i1 i

n

880.A20 Winter 2002

1 n x i yi n yi n x i

2 2 2 2

i1 i i1 i

i1 i i1 i

and b n

2

n x

1 n x

2 i2 ( i2 )2

i1 i i1 i

i1 i

n

Richard Kass

Chi-Square (c2) Distribution

Chi-square (c2) distribution:

Assume that our measurements (xii’s) come from a gaussian pdf with mean =.

n ( x )2

2

i

Define a statistic called chi-square: c

i2

i 1

It can be shown that the pdf for

c2

is:

2

p( c ,n)

1

2 n/ 21 c 2 /2

[

c

]

e

2n /2 (n / 2)

2

0 c

This is a continuous pdf.

It is a function of two variables, c2 and n = number of degrees of freedom. ( = "Gamma Function“)

A few words about the number of degrees of freedom n:

n = # data points - # of parameters calculated from the data points

Reminder: If you collected N events in an experiment and you histogram

your data in n bins before performing the fit, then you have n data points!

For n 20, P(c2>y) can

be approximated using a

gaussian pdf with

y=(2c2) 1/2 -(2n-1)1/2

EXAMPLE: You count cosmic ray events in 15 second intervals and sort the data into 5 bins:

number of intervals with 0 cosmic rays

2

number of intervals with 1 cosmic rays

7

c2 distribution for different degrees of freedom v

number of intervals with 2 cosmic rays

6

number of intervals with 3 cosmic rays

3

number of intervals with 4 cosmic rays

2

RULE of THUMB

Although there were 36 cosmic rays in your sample you have only 5 data points.

A good fit has

EXAMPLE: We have 10 data points with and the mean and standard deviation of the data set.

If we calculate and from the 10 data point then n = 8

c2/DOF 1

If we know and calculate OR if we know and calculate then n = 9

If we know and then n = 10

880.A20 Winter 2002

Richard Kass

MLM, Chi-Square, and Least Squares Fitting

Assume we have n data points of the form (yi,i) and we believe a functional

relationship exists between the points:

y=f(x,a,b…)

In addition, assume we know (exactly) the xi that goes with each yi.

We wish to determine the parameters a, b,..

A common procedure is to minimize the following c2 with respect to the parameters:

n

c

2

[ yi f ( xi , a, b,..)]2

i 1

i2

If the yi’s are from a gaussian pdf then minimizing the c2 is equivalent to the MLM.

However, often times the yi’s are NOT from a gaussian pdf.

In these instances we call this technique “c2 fitting” or “Least Squares Fitting”.

Strictly speaking, we can only use a c2 probability table when y is from a gaussian pdf.

However, there are many instances where even for non-gaussian pdf’s the above sum approximates c2 pdf.

From a common sense point of view minimizing the above sum makes sense

regardless of the underlying pdf.

880.A20 Winter 2002

Richard Kass

Least Squares Fitting

Example: Leo’s 4.8 (P107) The following data from a radioactive source

was taken at 15 s intervals. Determine the lifetime (t) of the source.

The pdf that describes radioactivity (or the decay of a charmed particle) is:

Technically the pdf is |dN(t)/(N(0)dt)| =N(t)/(N(0)t).

N (t ) N (0)et / t

As written the above pdf is not linear in t. We can turn this into a linear problem by

taking the natural log of both sides of the pdf.

ln( N (t )) ln( N (0)) t / t y C Dt

We can now use the methods of linear least squares to find D and then t.

In doing the LSQ fit what do we use to weight the data points ?

The fluctuations in each bin are governed by Poisson statistics: 2i=Ni.

However in this problem the fitting variable is lnN so we must use propagation of errors

to transform the variances of N into the variances of lnN.

y2 N2 y / N 2 ( N ) ln N / N 2 ( N )1 / N 2 1 / N

Leo has a “1” here

880.A20 Winter 2002

i

1

2

3

4

5

6

7

8

9

10

ti

0

15

30

45

60

75

90

105

120

135

Ni

106

80

98

75

74

73

49

38

37

22

yi = lnNi

4.663

4.382

4.585

4.317

4.304

4.290

3.892

3.638

3.611

3.091

Richard Kass

Least Squares Fitting

The slope of the line is given by:

n

D

1

n

t i yi

n

2

2

i 1 i i 1 i

n

5

ti

2 2

i 1 i i 1 i

n 1 n t2

2 i2

i 1 i i 1 i

D

yi

n

(

Line of “best fit”

4.5

ti

2

)

2

Y(x)

i 1 i

4

652 132800 2780.3 33240

0.00903

2

652 2684700 (33240)

3.5

3

-20

Thus the lifetime (t) = -1/D = 110.7 s

0

The error in the lifetime is:

n

D2

2

i 1 i

n 1 n t2

n t

2

i

(

2 2 i2 )

i 1 i i 1 i

i 1 i

t D2

2

1

t / D

2

652

1.01 10 6

2

652 2684700 (33240)

t D 1/ D

t = 110.7 ± 12.3 sec.

2

1.005 103

9.03 10

3 2

12.3 s

20

40

60

t

80

100 120 140

Caution: Leo has a factor of ½ in his

error matrix (V-1)ij, Eq 4.72.

He minimizes:

y f ( xi , a, b,...) 2

S [ i

]

i

Using MLM we minimized:

( yi f ( xi , a, b,...) 2

ln L

2 i2

Note: fitting without weighting yields: t=96.8 s.

880.A20 Winter 2002

Richard Kass

Hypothesis testing

The goal of hypothesis testing is to set up a procedure(s) to allow us to decide

if a model is acceptable in light of our experimental observations.

Example: A theory predicts that BR(B)= 2x10-5 and you measure (42) x10-5 .

The hypothesis we want to test is “are experiment and theory consistent?”

Hypothesis testing does not have to compare theory and experiment.

Example: CLEO measures the Lc lifetime to be (180 7)fs while SELEX measures (198 7)fs.

The hypothesis we want to test is “are the lifetime results from CLEO and SELEX consistent?”

There are two types of hypotheses tests: parametric and non-parametric

Parametric: compares the values of parameters (e.g. does the mass of proton = mass of electron ?)

Non-parametric: deals with the shape of a distribution (e.g. is angular distribution consistent with being flat?)

Consider the case of neutron decay. Suppose we have two

theories that both predict the energy spectrum of the

electron emitted in the decay of the neutron. Here a

parametric test might not be able to distinguish between

the two theories since both theories might predict the

same average energy of the emitted electron.

However a non-parametric test would be able to

distinguish between the two theories as the shape of the

energy spectrum differs for each theory.

880.A20 Winter 2002

Richard Kass

Hypothesis testing

A procedure for using hypothesis testing

a)

b)

c)

d)

e)

Measure (or calculate) something

Find something that you wish to compare with your measurement (theory, experiment)

Form a hypothesis (e.g. my measurement is consistent with the PDG value)

Calculate the confidence level that the hypothesis is true

Accept or reject the hypothesis depending on some minimum acceptable confidence level

Problems with the above procedure

a)

b)

c)

What is a confidence level ?

How do you calculate a confidence level?

What is an acceptable confidence level ?

How would we test the hypothesis “the space shuttle is safe?”

Is 1 explosion per 10 launches safe? Or 1 explosion per 1000 launches?

A working definition of the confidence level:

The probability of the event happening by chance.

Example: Suppose we measure some quantity (X) and we know that it is described by a gaussian

pdf with =0 and =1. What is the confidence level for measuring X 2 (i.e. 2 from the mean)?

2

2

P( X 2) P( , , x)dx P(0,1, x)dx

2

x

1

2

e

2

2

dx 0.025

Thus we would say that the confidence level for measuring X 2 is 0.025 or 2.5%

and we would expect to get a value of X 2 one out of 40 tries if the underlying pdf is gaussian.

880.A20 Winter 2002

Richard Kass

Hypothesis testing

A few cautions about using confidence limits

a) You must know the underlying pdf to calculate the limits.

Example: suppose we have a scale of known accuracy ( = 10 gm ) and we weigh

something to be 20 gm. Assuming a gaussian pdf we could calculate a 2.5% chance that

our object weighs ≤ 0 gm?? We must make sure that the probability distribution is

defined in the region where we are trying to extract information.

b) What does a confidence level really mean? Classical vs Baysian viewpoints

Example: Suppose we measure a value of x for the mean of a Gaussian distribution with

an unknown mean . Suppose we know the standard deviation () of the distribution. It

is tempting to say:

“The probability that lies in the interval [x-2, x+2] = 95%”

However according to Classical probability this is a meaningless statement! By definition

the mean () is a constant, not a random variable, thus does not have a probability

distribution associated with it! What we can say is that we will reject any value of that

gives a probability of ≤ 5% of obtaining our (measured) value of x. Here we are assuming

that we are really measuring . But how do we really know what we are measuring?

880.A20 Winter 2002

Richard Kass

Hypothesis testing

Hypothesis testing for gaussian variables:

We wish to test if a quantity we have measured (=average of n measurements )

is consistent with a known mean (0).

Test

= o

Conditions

2 known

Test Statistic

Test Distribution

o

Gaussian

/ n

avg.0

t(n 1)

2

o

unknown

= o

s/ n

In the above chart t(n-1) stands for the “t-distribution” with n-1 degrees of freedom.

Example: Do free quarks exist? Quarks are nature's fundamental building blocks and are

thought to have electric charge (|q|) of either (1/3)e or (2/3)e (e = charge of electron). Suppose

we do an experiment to look for |q| = 1/3 quarks.

We measure: q = 0.90 ± 0.2 This gives and

Quark theory: q = 0.33

This is

We want to test the hypothesis = o when is known. Thus we use the first line in the table.

o 0.9 0.33

z

2.85

/ n

0.2 / 1

We want to calculate the probability for getting a z 2.85, assuming a Gaussian pdf.

2.85

2.85

prob(z 2.85) P( , ,x)dx P(0,1, x)dx

1

2

e

x2

2

dx 0.002

2.85

The CL here is just 0.2 %! What we are saying here is that if we repeated our experiment 1000

times then the results of 2 of the experiments would measure a value q 0.9 if the true mean

was q = 1/3. This is not strong evidence for q = 1/3 quarks!

880.A20 Winter 2002

Richard Kass

Hypothesis testing

Do charge 2/3 quarks exist?

If instead of q = 1/3 quarks we tested for q = 2/3 what would we get for the CL?

Now we have = 0.9 and = 0.2 as before but o = 2/3.

We now have z = 1.17 and prob(z 1.17) = 0.13 and the CL = 13%.

Now free quarks are starting to get believable!

Another variation of the quark problem

Suppose we have 3 measurements of the charge q:

q1 = 1.1, q2 = 0.7, and q3 = 0.9

We don't know the variance beforehand so we must determine the variance from our data.

Thus we use the second test in the table.

= (q1+ q2+ q3)/3 = 0.9

n

2

s

2

(qi )

0.2 2 (0.2) 2 0

0.04

n 1

2

o 0.9 0.33

z

4.94

s/ n

0.2 / 3

i1

In this problem z is described by Student’s t-distribution.

Note: Student is the pseudonym of statistician W.S. Gosset who was employed by a famous English brewery.

Just like the Gaussian pdf, in order to evaluate the t-distribution one must resort to a look up table (see

for example Table 7.2 of Barlow).

In this problem we want prob(z 4.94) when n-1 = 2. The probability of z 4.94 is 0.02.

This is about 10X greater than the 1st part of this example where we knew the variance ahead of time.

880.A20 Winter 2002

Richard Kass

Hypothesis testing

Tests when both means are unknown but come from a gaussian pdf:

Test

1 - 2 = 0

1

1 - 2 =0

2

Conditions

and 22 known

12 = 22 = 2

unknown

Test Statistic

1 2

12 / n 22 / m

1 2

12 22

unknown

t (n+m-2)

Q 1 / n 1 / m

Q2

1 - 2 =0

Test Distribution

Gaussian

avg.0

( n1)s12 ( m1)s 2

2

n m 2

1 2

s12

/ n

s22

approx. Gaussian

avg.0

/m

n and m are the number of measurements for each mean

Example: Do two experiments agree with each other?

CLEO measures the Lc lifetime to be (180 7)fs while SELEX measures (198 7)fs.

z

1 2

198 180

1.82

2

2

2

2

1 / n 2 / m

(7 ) (7 )

2

1.82

1.82

1.82

1.82

P( z 1.82) 1 P( , , x)dx 1 P(0,1, x)dx 1

1

2

1.82 x

e 2

dx 1 0.93 0.07

1.82

Thus 7% of the time we should expect the experiments to disagree at this level.

But, is this acceptable agreement?

880.A20 Winter 2002

Richard Kass

Hypothesis testing

A non-gaussian example, Poisson distribution

The following is the numbers of neutrino events detected in 10 second intervals

by the IMB experiment on 23 February 1987 around which time the supernova

S1987a was first seen by experimenters:

#events

0

1

2

3

4

5

6

7

8 9

#intervals

1024

860

307

58

15

3

0

0

0 1

Assuming the data is described by a Poisson distribution.

calculate the average and compute the average number

events expected in an interval.

8

(# events ) (# intervals )

i0

8

#intervals

0.774

= 0.777 if we include

interval with 9 events

i0

We can calculate a c2 assuming the data are described by a Poisson distribution:

i8

e n

The predicted number of intervals is given by: # intervals #intervals

i0

n!

8 (# intervals prediction ) 2

Note: we use 2=prediction for a Poisson

c 2

3.6

prediction

i0

(# intervals prediction ) 2

2

i0

8

c 2

#events

0

1

2

3

4

5

6

7

8

9

#intervals

1064

823

318

82

16

2

0.3

0.03

0.003

0.0003

There are 7 (= 9-2) DOF’s here and the probability of c2/D.O.F. = 3.6/7 is high (≈80%), indicating a good fit to a Poisson

(# intervals prediction ) 2

2

3335 and c /D.O.F. = 3335 / 8 417.

prediction

i 0

9

However, if the last data point is included: c 2

The probability of getting a c2/D.O.F. which is this large from a Poisson distribution with = 0.774 is 0.

Hence the nine events are most likely coming from the supernova explosion and not just from a Poisson.

880.A20 Winter 2002

Richard Kass

Confidence Intervals

Confidence intervals (CI) are related to confidence limits (CL).

To calculate a CI we assume a CL and find the values of the parameters that give us the CL.

Caution

CI’s are not always uniquely defined.

We usually seek the minimum interval or symmetric interval.

Example: Assume we have a gaussian pdf with =3 and =1. What is the 68% CI ?

We need to solve the following equation:

0.68 ab G ( x,3,1)dx

Here G(x,3,1) is the gaussian pdf with =3 and =1.

There are infinitely many solutions to the above equation.

We seek the solution that is symmetric about the mean ():

0.68 ccG ( x,3,1)dx

To solve this problem we either need a probability table, or remember that 68% of the

area of a gaussian is within of the mean.

Thus for this problem the 68% CI interval is: [2,4]

Example: Assume we have a gaussian pdf with =3 and =1.

What is the one sided upper 90% CI ?

Now we want to find the c that satisfies:

0.9 c G ( x,3,1)dx

Using a table of gaussian probabilities we find 90% of the area in the interval [-, +1.28]

Thus for this problem the 90% CI is: [-, 4.28]

880.A20 Winter 2002

Richard Kass

Confidence Intervals

Suppose an experiment is looking for the X particle but observes no candidate events.

What can we say about the average number of X particles expected to have been produced?

First, we need to pick a pd. Since events are discrete we need a discrete pd Poisson.

Next, how unlucky do you want to be ? It is common to pick 10% of the time to be unlucky.

We can now re-state the question as:

“Suppose an experiment finds zero candidate events. What is the 90% CL upper limit on the

average number of events () expected assuming a Poisson pd ?”

Thus we need to solve for in the following equation:

e n

CL 0.9

n 1 n!

In practice it is much easier to solve for 1-CL:

So, if =2.3 then 10% of the time

e n

e n

1 CL 1

e ln(1 CL) we should expect to find 0 candidates.

There was nothing wrong with our

n!

n 1 n!

n0

experiment. We were just unlucky.

For our example, CL=0.9 and therefore =2.3 events.

Example: Suppose an experiment finds one candidate event. What is the 95% CL upper limit

on the average number of events () ?

1 e n

e n

The 5% includes 1 AND 0 events.

1 CL 1

e e 4.74

n!

n!

n2

n 0

Here we are saying that we would get 2 or more events 95% of the time if =4.74.

PDG 1994 has a good Table (17.3, P1280) for these types of problems.

880.A20 Winter 2002

Richard Kass

Maximum Likelihood Method Example

Example: Exponential decay:

pdf : f (t ,t ) et / t / t

n

n

i 1

i 1

L eti / t / t and ln L n ln t ti / t

Generate events according to an exponential distribution with t0= 100

Calculate lnL vs t(time) and find maximum of LnL and the points

where LnL =Lmax-1/2 (“1 points”)

-5.613 10 4

-62

lnL

lnL

-5.613 10 4

-63

-5.613 10 4

lnL

lnL

-64

y = m3-(m0-m1)^ 2/(2*m2^ 2)

Value

Error

m1

100.8

0.013475

m2

1.01 0.0088944

m3

-56128

0.034297

Chisq

0.055864

NA

R

0.99862

NA

-65

-66

-5.613 10 4

-5.613 10 4

-67

0

100

200

t

300

400

500

600

Log-likelihood function for 10 events

LnL max for t=189

1 points: (140, 265)

L not gaussian

880.A20 Winter 2002

-5.613 10 4

97

98

99

100

t

101

102

103

104

Log-likelihood function for 104 events

LnL max for t=100.8

1 points: (99.8, 101.8)

L is fit by a gaussian

Richard Kass

Maximum Likelihood Method Example

How do we calculate confidence intervals for our MLM example?

For the case of 104 events we can just use gaussian stats since the likelihood

function is to a very good approximation gaussian. Thus the “1 points” will give

us 68% of the area under gaussian curve, the “2 points” points ~95% of area, etc.

Unfortunately, the likelihood function for the 10 event case is NOT

approximated by a gaussian. So the “1 points” do not necessarily give you

68% of the area under the gaussian curve.

In this case we can calculate a confidence interval about the mean using a Monte

Carlo calculation as follows:

1)

2)

3)

4)

Generate a large number (e.g. 107) of 10 event samples each sample having a mean

lifetime equal to our original 10 event sample (t*=189)

For each 10 event sample calculate the maximum of the log-likelihood function (=ti)

Make a histogram of the ti’s. This histogram is the pdf for t.

To calculate a X% confidence interval about the mean, find the region where

X%/2 of the area is in the region [tL, t*] and X% is in the region [t*, tH].

NOTE: since the pdf may not be symmetric around its mean, we may not be able

to find equal area regions below and above the mean.

880.A20 Winter 2002

Richard Kass

Maximum Likelihood Method Example

7 10 5

6 10

106

5

Linear

4 10 5

events/10

5 10 5

events/10

105

t*

3 10 5

10

4

100

1 10 5

10

0

100

t

t*

1000

2 10 5

0

Semi-log

1

200

300

400

500

0

100

200

t

300

400

500

Above is the histogram or pdf of 107 ten event samples each with t*=189. By counting

events (i.e. integrating) in an interval around t*, the histogram (actually, I printed out

the number of events in one unit steps from 0 to 650) gives the following:

54.9% of the area is in the region (0t189)

“±1 region”: 34% of area in regions (139t189) and (189t263)

90% CI region: 45% of area in regions (117t189)] and (189t421)

The upper 95% region (i.e. 47.5% of the area above the mean) is not defined.

Very close

To likelihood

result

NOTE: the variance of an

exponential distribution can be calculated analytically:

t2 (t t )2et / t dt / t t 2 / n

0

Thus for the 10 event sample we expect = 60, not too far off from the 68% CI!

For the 104 event sample, the CI’s from the ML estimate of and the analytic

are essentially identical (both give =1.01).

880.A20 Winter 2002

Richard Kass

Confidence Regions

Often we have a problem that involves two or more parameters. In these instances

it makes sense to define confidence regions rather than an interval.

Consider the case where we are doing a MLM fit to two variables a, b.

Previously we have seen that for large samples the Likelihood function becomes “gaussian”:

ln L(a ) ln Lmax

(a a * )2

n2

*

or ln L(a n a ) ln Lmax

2

2

2 a

We can generalize this to two correlated variables a, b:

ln L(a , b ) ln Lmax

(a a * )2 ( b b * )2

ab

1

(a a * )( b b * )

2

with

a b

a b

2(1 2 ) a2

b2

The contours of constant probability are given by:

1 (a a * ) 2 ( b b * ) 2

(a a * )( b b * )

2

Q

a b

(1 2 ) a2

b2

Q=1 contains 39% of the area

Q=2.3 contains 68% of the area

Q=4.6 contains 0% of the area

Q=6.2 contains 95% of the area

Q=9.2 contains 99% of the area

880.A20 Winter 2002

Integral of c2 pdf with 2 DOF’s can

done analytically:

Q 1q

e 2 dq

P(q Q) 1

20

1

Q

1 e 2

Richard Kass

Confidence Regions

Example: The CLEO experiment did a maximum likelihood analysis to search

for B+ and Bk+ events. The results of the MLM fit are:

N + =164, N k+ =255, =0.5 (warning: these are made up numbers!)

N + and N k+ are highly correlated, since at high momentum (>2GeV) CLEO

has a hard time separating ’s and k’s.

The contours of constant probability are given by:

1 ( N 25) 2 ( N k 16) 2

(a 25)( b 16)

2

(

0

.

5

)

Q

2

2

2

5 4

(1 .5 )

5

4

40

99%, Q=9.2

95%, Q=6.2

N +

30

68%,Q=2.3

20

10

39%, Q=1

0

0

880.A20 Winter 2002

10

20

N k+

30

40

Richard Kass