Theory of Differentiation in Statistics

Mohammed Nasser

Department of Statistics

1

Relation between Statistics and Differentiation

| Statistical Concepts/Techniques | Use of Differentiation Theory |
| --- | --- |
| Study of shapes of univariate pdfs | An easy application of first-order and second-order derivatives |
| Calculation/stabilization of variance of a random variable | An application of Taylor's theorem |
| Calculation of moments from MGF/CF | Differentiating MGF/CF |

2

Relation between Statistics and Differentiation

| Statistical Concepts/Techniques | Use of Differentiation Theory |
| --- | --- |
| Description of a density/a model | dy/dx = k, dy/dx = kx |
| Optimize some risk functional/regularized functional/empirical risk functional with/without constraints | Needs heavy tools of nonlinear optimization techniques that depend on multivariate differential calculus and functional differential calculus |
| Influence function to assess robustness of a statistical functional | An easy application of directional derivative in function space |

3

Relation between Statistics and Differentiation

| Statistical Concepts/Techniques | Use of Differentiation Theory |
| --- | --- |
| Classical delta theorem to find asymptotic distribution | An application of ordinary Taylor's theorem |
| Von Mises calculus | Extensive application of functional differential calculus |
| Relation between probability measures and probability density functions | Radon-Nikodym theorem |

4

Monotone Function f(x)

Monotone Increasing: Strictly Increasing, Non-Decreasing

Monotone Decreasing: Strictly Decreasing, Non-Increasing

5

Increasing/Decreasing test

$f : \mathbb{R} \to \mathbb{R}$, $f(x) = x^3$

6

Example of Monotone Increasing Function

$f : \mathbb{R} \to \mathbb{R}$, $f(x) = x^3$

7

Maximum/Minimum

Is there any sufficient condition that guarantees existence of a global max, a global min, or both on [a, b]?

8

Some Results to Mention

If the function is continuous and its domain is compact, the function attains its extrema.

This is a very general result: it holds for any compact space, not only for compact subsets of $\mathbb{R}^n$.

For a convex (concave) function, any local min (max) is a global min (max).

Some functions have a global min (max) without satisfying any of the above conditions.

First, prove the existence of an extremum; then calculate it.

9

What Does "$f'(x_0) = 0$" Say about f

Fermat's Theorem: if f has a local maximum or minimum at c, and if $f'(c)$ exists, then $f'(c) = 0$. The converse is not true: for example, $f(x) = x^3$ has $f'(0) = 0$ but no extremum at 0.

10

Concavity

•If $f''(x) > 0$ for all x in (a,b), then the graph of f is convex (concave upward) on (a,b).

•If $f''(x) < 0$ for all x in (a,b), then the graph of f is concave (concave downward) on (a,b).

•If $f''(c) = 0$ and $f''$ changes sign at c, then f has a point of inflection at c.

11

Maximum/Minimum

Let f(x) be a twice differentiable function on an interval I.

•f has a local maximum at $c \in I$ if $f'(c) = 0$ and $f''(c) < 0$.

•f has a local minimum at $c \in I$ if $f'(c) = 0$ and $f''(c) > 0$.

•If $f'(x) < 0$ for all x in an interval, then f is maximum at the first end point of the interval if the left side is closed and minimum at the last end point if the right side is closed.

•If $f'(x) > 0$ for all x in an interval, then f is minimum at the first end point of the interval if the left side is closed and maximum at the last end point if the right side is closed.

12

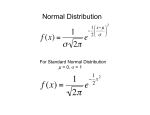

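Normal Distribution

The probability density function is given as

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}, \qquad \lim_{x \to \pm\infty} f(x) = 0.$$

f is continuous on $\mathbb{R}$, $f(x) \ge 0$, and f is differentiable on $\mathbb{R}$.

[Figure: the density curve, labelled convex, concave, convex from left to right]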

13

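Normal Distribution

Now,

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}$$

Take logs of both sides:

$$\log f = \log\frac{1}{\sigma\sqrt{2\pi}} - \frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2$$

Differentiate and put the first derivative equal to zero:

$$\frac{1}{f}\, f' = -\frac{x-\mu}{\sigma}\cdot\frac{1}{\sigma} \;\Rightarrow\; f' = -\frac{x-\mu}{\sigma^2}\, f = 0 \;\Rightarrow\; x = \mu$$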

14

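Normal Distribution

$$f'' = -\frac{1}{\sigma^2}\, f - \frac{x-\mu}{\sigma^2}\, f'$$

Since $f'(\mu) = 0$ at $x = \mu$,

$$f''(\mu) = -\frac{1}{\sigma^2}\, f(\mu) < 0.$$

Therefore f is maximum at $x = \mu$.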

15

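Normal Distribution

Put the 2nd derivative equal to zero:

$$f'' = -\frac{1}{\sigma^2}\, f - \frac{x-\mu}{\sigma^2}\, f' = 0$$

Substituting $f' = -\frac{x-\mu}{\sigma^2} f$:

$$-\frac{1}{\sigma^2}\, f + \frac{(x-\mu)^2}{\sigma^4}\, f = 0 \;\Rightarrow\; \frac{(x-\mu)^2}{\sigma^2} - 1 = 0 \;\Rightarrow\; (x-\mu)^2 - \sigma^2 = 0$$

$$\{x - (\mu+\sigma)\}\{x - (\mu-\sigma)\} = 0 \;\Rightarrow\; x = \mu \pm \sigma$$

Therefore f has points of inflection at $x = \mu \pm \sigma$.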
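The conclusions for the normal density (maximum at μ, inflection at μ ± σ) can be checked numerically. The sketch below is only an illustration: the helper names and the values of `mu` and `sigma` are assumptions, and the second derivative is approximated by a central finite difference instead of the closed form.

```python
import math

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def second_derivative(f, x, h=1e-4):
    """Central finite-difference approximation to f''(x)."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)

mu, sigma = 1.0, 2.0  # illustrative values, not taken from the slides

def f(x):
    return normal_pdf(x, mu, sigma)

# f'' should be negative at the mode and change sign across mu + sigma.
curv_at_mode = second_derivative(f, mu)
curv_inside = second_derivative(f, mu + 0.9 * sigma)   # just inside mu + sigma
curv_outside = second_derivative(f, mu + 1.1 * sigma)  # just outside mu + sigma
print(curv_at_mode < 0, curv_inside < 0, curv_outside > 0)
```

A sign change of the approximate second derivative across μ + σ is what identifies the inflection point; by symmetry the same holds at μ − σ.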

16

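Logistic Distribution

The distribution function is defined as

$$F(x) = \frac{e^x}{1 + e^x}; \qquad -\infty < x < \infty$$

[Figure: the distribution function, labelled convex on the left and concave on the right]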

17

Logistic Distribution

Take the first derivative with respect to x:

$$F'(x) = \frac{e^x}{(1 + e^x)^2} > 0; \qquad -\infty < x < \infty$$

Therefore F is strictly increasing.

Take the 2nd derivative and put it equal to zero:

$$F''(x) = \frac{e^x (1 - e^x)}{(1 + e^x)^3} = 0 \;\Rightarrow\; e^x (1 - e^x) = 0 \;\Rightarrow\; e^x = 1 \;\Rightarrow\; x = \log(1) = 0$$

Therefore F has a point of inflection at x = 0.

18

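Logistic Distribution

F has no maximum and no minimum, because it is strictly increasing on the whole real line.

Moreover,

$$F''(x) > 0; \quad x \in (-\infty, 0)$$

$$F''(x) < 0; \quad x \in (0, \infty)$$

Therefore F is convex on $(-\infty, 0)$ and concave on $(0, \infty)$.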
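The sign pattern of the second derivative of the logistic distribution function can be confirmed with a few evaluations. This is a minimal sketch; the function names are illustrative and the evaluation points are arbitrary.

```python
import math

def logistic_cdf(x):
    """F(x) = e^x / (1 + e^x), written in an equivalent form."""
    return 1.0 / (1.0 + math.exp(-x))

def logistic_cdf_d2(x):
    """Closed-form second derivative F''(x) = e^x (1 - e^x) / (1 + e^x)^3."""
    ex = math.exp(x)
    return ex * (1.0 - ex) / (1.0 + ex) ** 3

# Convex to the left of 0, concave to the right, inflection at 0.
left, centre, right = logistic_cdf_d2(-1.0), logistic_cdf_d2(0.0), logistic_cdf_d2(1.0)
print(left > 0, centre == 0.0, right < 0)
```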

19

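Variance of a Function of Poisson Variate Using Taylor's Theorem

We know that

Mean, $E(Y) = \lambda$; Variance, $V(Y) = \lambda$

We are interested in finding the variance of $g(Y) = \sqrt{Y}$:

$$V(\sqrt{Y}) = \,?$$

Given that

$$g(Y) = Y^{1/2} \;\Rightarrow\; g'(Y) = \tfrac{1}{2}\, Y^{-1/2}$$

$$g'(\lambda) = \tfrac{1}{2}\, \lambda^{-1/2}, \qquad [g'(\lambda)]^2 = \frac{1}{4\lambda}$$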

20

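Variance of a Function of Poisson Variate Using Taylor's Theorem

The first-order Taylor expansion of g about $\lambda$ is

$$g(Y) = g(\lambda) + g'(\lambda)(Y - \lambda) + o(|Y - \lambda|)$$

$$g(Y) \approx g(\lambda) + g'(\lambda)(Y - \lambda)$$

$$V(g(Y)) \approx 0 + [g'(\lambda)]^2\, V(Y) = \frac{1}{4\lambda} \cdot \lambda = \frac{1}{4}$$

Therefore the variance of $\sqrt{Y}$ is approximately $\frac{1}{4}$.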
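The Taylor approximation can be compared with the variance of √Y computed directly from the Poisson probabilities. The sketch below is an illustration under stated assumptions: the function names, the truncation point of the sum and the value λ = 100 are choices made here, not part of the slides.

```python
import math

def poisson_pmf(k, lam):
    """P(Y = k) for Y ~ Poisson(lam), computed on the log scale for stability."""
    return math.exp(-lam + k * math.log(lam) - math.lgamma(k + 1))

def var_sqrt_poisson(lam):
    """Var(sqrt(Y)) from a truncated sum over the Poisson support."""
    upper = int(lam + 20.0 * math.sqrt(lam) + 50)  # tail beyond this is negligible
    m1 = 0.0  # accumulates E[sqrt(Y)]
    m2 = 0.0  # accumulates E[(sqrt(Y))^2] = E[Y]
    for k in range(upper + 1):
        p = poisson_pmf(k, lam)
        m1 += math.sqrt(k) * p
        m2 += k * p
    return m2 - m1 * m1

variance_large_lambda = var_sqrt_poisson(100.0)
print(variance_large_lambda)  # expected to be close to 1/4
```

The approximation rests on dropping the remainder term, so it is expected to be good for large λ and noticeably worse for small λ.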

21

Risk Functional

Risk functional:

$$R_{L,P}(g) = \int_{X \times Y} L(x, y, g(x))\, dP(x, y) = \int_X \int_Y L(x, y, g(x))\, dP(y \mid x)\, dP_X$$

Population regression functional/classifier, $g^*$:

$$R_{L,P}(g^*) = \inf_{g : X \to Y} R_{L,P}(g)$$

P is chosen by nature, L is chosen by the scientist.

Both $R_{L,P}(g^*)$ and $g^*$ are unknown.

From sample D, we will select $g_D$ by a learning method (???)

22

Empirical risk minimization

Empirical risk functional:

$$R_{L,P_n}(g) = \int_{X \times Y} L(x, y, g(x))\, dP_n(x, y) = \int_X \int_Y L(x, y, g(x))\, dP_n(y \mid x)\, dP_{n,X} = \frac{1}{n} \sum_{i=1}^{n} L(x_i, y_i, g(x_i))$$

Problems of empirical risk minimization
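As a small illustration of the empirical risk functional, the sketch below averages a loss over a sample. The squared loss, the candidate function `g` and the three data points are all made up for the example.

```python
def empirical_risk(loss, g, sample):
    """(1/n) * sum of loss(x_i, y_i, g(x_i)) over the sample of (x, y) pairs."""
    return sum(loss(x, y, g(x)) for x, y in sample) / len(sample)

def squared_loss(x, y, t):
    """L(x, y, t) = (y - t)^2; x is unused but kept to match L(x, y, g(x))."""
    return (y - t) ** 2

def g(x):
    """Hypothetical candidate regression function."""
    return x

sample = [(0.0, 0.1), (1.0, 0.9), (2.0, 2.1)]  # made-up data
risk = empirical_risk(squared_loss, g, sample)
print(risk)
```

Passing the loss as an argument mirrors the statement that L is chosen by the scientist, while the sample stands in for the unknown P.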

23

What Can We Do?

We can restrict the set of functions over which we minimize empirical risk functionals.

We can modify the criterion to be minimized (e.g. by adding a penalty for "complicated" functions).

We can combine the two.

Regularization

Structural risk minimization

24

Regularized Error Function

In linear regression, we minimize the error function:

$$\frac{1}{2l} \sum_{i=1}^{l} (f(x_i) - y_i)^2 + \frac{\lambda}{2} \|w\|^2$$

Replace the quadratic error function by the ε-insensitive error function:

$$C \sum_{i=1}^{l} E_\varepsilon(f(x_i) - y_i) + \frac{1}{2} \|w\|^2$$

An example of ε-insensitive error function:
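The example formula itself did not survive in this transcript. A commonly used ε-insensitive error function, assumed here, is zero inside a band of half-width ε and grows linearly outside it. The sketch below implements that form and the regularized objective for a linear f; all names and numbers are illustrative.

```python
def eps_insensitive(residual, eps):
    """Assumed form: 0 if |residual| < eps, otherwise |residual| - eps."""
    return max(0.0, abs(residual) - eps)

def svr_objective(w, b, xs, ys, C, eps):
    """C * sum_i E_eps(f(x_i) - y_i) + 0.5 * ||w||^2 with f(x) = <w, x> + b."""
    def f(x):
        return sum(wj * xj for wj, xj in zip(w, x)) + b
    error_term = sum(eps_insensitive(f(x) - y, eps) for x, y in zip(xs, ys))
    return C * error_term + 0.5 * sum(wj * wj for wj in w)

# Made-up one-dimensional data: the first point is fitted exactly,
# the second misses by 0.5, of which 0.1 is tolerated by the tube.
objective = svr_objective(w=[1.0], b=0.0, xs=[[0.0], [1.0]], ys=[0.0, 1.5], C=2.0, eps=0.1)
print(objective)
```

Residuals smaller than ε contribute nothing, which is what later makes the support vector expansion sparse.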

25

Linear SVR: Derivation

Meaning of equation 3

26

Linear SVR: Derivation

[Figure: complexity vs. sum of errors. Case I: "tube" complexity. Case II: "tube" complexity.]

27

Linear SVR: Derivation

• The role of C

[Figure: two panels, "C is small" and "C is big". Case I: "tube" complexity. Case II: "tube" complexity.]

28

Linear SVR: derivation

Subject to:

29

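Lagrangian

Minimize:

$$L = C \sum_{n=1}^{l} (\xi_n + \xi_n^*) + \frac{1}{2}\|w\|^2 - \sum_{n=1}^{l} (\mu_n \xi_n + \mu_n^* \xi_n^*) - \sum_{n=1}^{l} \alpha_n (\varepsilon + \xi_n - y_n + \langle w, x_n \rangle + b) - \sum_{n=1}^{l} \alpha_n^* (\varepsilon + \xi_n^* + y_n - \langle w, x_n \rangle - b)$$

Dual variables: $\alpha_n, \alpha_n^*, \mu_n, \mu_n^* \ge 0$

$$\frac{\partial L}{\partial w} = 0 \;\Rightarrow\; w = \sum_{n=1}^{l} (\alpha_n - \alpha_n^*)\, x_n$$

$$\frac{\partial L}{\partial b} = 0 \;\Rightarrow\; \sum_{n=1}^{l} (\alpha_n - \alpha_n^*) = 0$$

$$\frac{\partial L}{\partial \xi_n} = 0 \;\Rightarrow\; \alpha_n + \mu_n = C$$

$$\frac{\partial L}{\partial \xi_n^*} = 0 \;\Rightarrow\; \alpha_n^* + \mu_n^* = C$$

$$f(x) = \langle w, x \rangle + b, \qquad \langle w, x \rangle = \Big\langle \sum_{n=1}^{l} (\alpha_n - \alpha_n^*)\, x_n,\; x \Big\rangle = \sum_{n=1}^{l} (\alpha_n - \alpha_n^*) \langle x_n, x \rangle$$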

30

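Dual Form of Lagrangian

Maximize:

$$W(\alpha, \alpha^*) = -\frac{1}{2} \sum_{n=1}^{l} \sum_{m=1}^{l} (\alpha_n - \alpha_n^*)(\alpha_m - \alpha_m^*) \langle x_n, x_m \rangle - \varepsilon \sum_{n=1}^{l} (\alpha_n + \alpha_n^*) + \sum_{n=1}^{l} (\alpha_n - \alpha_n^*)\, y_n$$

subject to

$$0 \le \alpha_n \le C, \qquad 0 \le \alpha_n^* \le C, \qquad \sum_{n=1}^{l} (\alpha_n - \alpha_n^*) = 0$$

Prediction can be made using:

$$f(x) = \sum_{n=1}^{l} (\alpha_n - \alpha_n^*) \langle x, x_n \rangle + b$$

???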

31

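How to determine b?

The Karush-Kuhn-Tucker (KKT) conditions imply, at the optimal solution:

$$\alpha_n (\varepsilon + \xi_n - y_n + \langle w, x_n \rangle + b) = 0$$

$$\alpha_n^* (\varepsilon + \xi_n^* + y_n - \langle w, x_n \rangle - b) = 0$$

$$(C - \alpha_n)\, \xi_n = 0$$

$$(C - \alpha_n^*)\, \xi_n^* = 0$$

Support vectors are points that lie on the boundary or outside the tube.

These equations imply many important things.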

32

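Important Interpretations

$\alpha_n \alpha_n^* = 0$, i.e. $\xi_n \xi_n^* = 0$ (why??)

$\alpha_n = 0 \Rightarrow \xi_n = 0$, and $\alpha_n^* = 0 \Rightarrow \xi_n^* = 0$

$0 < \alpha_n^* < C \;\Rightarrow\; \xi_n^* = 0$ and $\varepsilon + y_n - \langle w, x_n \rangle - b = 0$

$\Rightarrow\; \varepsilon = \langle w, x_n \rangle + b - y_n$, i.e. $b = y_n - \langle w, x_n \rangle + \varepsilon$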

33

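Support Vector: The Sparsity of SV Expansion

$\alpha_i > 0 \;\Rightarrow\; y_i - f(x_i) \ge \varepsilon$

$\alpha_i^* > 0 \;\Rightarrow\; f(x_i) - y_i \ge \varepsilon$

and

$y_i - f(x_i) < \varepsilon \;\Rightarrow\; \alpha_i = 0$

$f(x_i) - y_i < \varepsilon \;\Rightarrow\; \alpha_i^* = 0$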

34

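Dual Form of Lagrangian (Nonlinear case)

Maximize:

$$W(\alpha, \alpha^*) = -\frac{1}{2} \sum_{n=1}^{l} \sum_{m=1}^{l} (\alpha_n - \alpha_n^*)(\alpha_m - \alpha_m^*)\, k(x_n, x_m) - \varepsilon \sum_{n=1}^{l} (\alpha_n + \alpha_n^*) + \sum_{n=1}^{l} (\alpha_n - \alpha_n^*)\, y_n$$

subject to

$$0 \le \alpha_n \le C, \qquad 0 \le \alpha_n^* \le C, \qquad \sum_{n=1}^{l} (\alpha_n - \alpha_n^*) = 0$$

Prediction can be made using:

$$f(x) = \sum_{n=1}^{l} (\alpha_n - \alpha_n^*)\, k(x, x_n) + b$$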
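The prediction formula is a weighted sum of kernel evaluations against the training points. The sketch below evaluates it for given dual coefficients; it does not solve the dual problem. The coefficients, the training points and the two kernels are hypothetical values chosen only to show the mechanics.

```python
import math

def linear_kernel(x, z):
    """k(x, z) = <x, z>, which recovers the linear case."""
    return sum(a * b for a, b in zip(x, z))

def rbf_kernel(x, z, gamma=1.0):
    """Gaussian kernel exp(-gamma * ||x - z||^2), one common nonlinear choice."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))

def svr_predict(x, train_x, alpha, alpha_star, b, kernel):
    """f(x) = sum_n (alpha_n - alpha_n^*) k(x, x_n) + b."""
    return sum(
        (a - a_s) * kernel(x, xn)
        for a, a_s, xn in zip(alpha, alpha_star, train_x)
    ) + b

# Hypothetical dual solution: each point has at most one of alpha_n, alpha_n^* nonzero.
train_x = [[1.0, 0.0], [0.0, 1.0]]
alpha = [0.5, 0.0]
alpha_star = [0.0, 0.25]
prediction = svr_predict([2.0, 4.0], train_x, alpha, alpha_star, b=0.1, kernel=linear_kernel)
print(prediction)
```

Training points with both coefficients equal to zero drop out of the sum, so only the support vectors need to be stored for prediction.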

35

Non-linear SVR: derivation

Subject to:

36

Non-linear SVR: derivation

Subject to:

Saddle point of L has to be found:

min with respect to

max with respect to

37

Non-linear SVR: derivation

...

38

What is Differentiation?

$f : U \to V$, a nonlinear function, with U a Banach space and V another Banach space (B-space)

Differentiation is nothing but local linearization

In differentiation we approximate a non-linear

function locally by a (continuous) linear function

39

Fréchet Derivative

Definition 1

$$\lim_{h \to 0} \frac{f(x+h) - f(x) - f'(x)h}{h} = 0, \quad \text{equivalently} \quad \lim_{h \to 0} \frac{|f(x+h) - f(x) - f'(x)h|}{|h|} = 0$$

It can be easily generalized to a Banach-space-valued function $f : (B_1, \|\cdot\|_1) \to (B_2, \|\cdot\|_2)$:

$$\lim_{h \to 0} \frac{\|f(x+h) - f(x) - f'(x)h\|_2}{\|h\|_1} = 0$$

Here $f'(x) : B_1 \to B_2$ is a linear map. It can be shown that not every linear map between infinite-dimensional spaces is continuous.

40

Fréchet Derivative

We have just mentioned that Fréchet recognized that Definition 1 could be easily generalized to normed spaces in the following way:

$$\lim_{h \to 0} \frac{\|f(x+h) - f(x) - df(x)(h)\|_2}{\|h\|_1} = 0 \qquad (2)$$

where $df(x) \in L(B_1, B_2)$, the set of all continuous linear functions between $B_1$ and $B_2$. We write the remainder of f at x+h as

$$\mathrm{Rem}(x+h) = f(x+h) - f(x) - df(x)(h)$$

41

S Derivative

Then (2) becomes

$$\lim_{h \to 0} \frac{\|\mathrm{Rem}(x+h)\|_2}{\|h\|_1} = 0 \qquad (3)$$

Soon the definition was generalized (S-differentiation) to general topological vector spaces in such a way that: i) a particular case of the definition becomes equivalent to the previous definition when the domain of f is a normed space; ii) the Gâteaux derivative remains the weakest derivative among all types of S-differentiation.

42

S Derivatives

Definition 2

Let S be a collection of subsets of $B_1$, and let $t \in \mathbb{R}$. Then f is S-differentiable at x with derivative $df(x) \in L(B_1, B_2)$ if, for all $A \in S$,

$$\frac{\mathrm{Rem}(x + th)}{t} \to 0 \quad \text{as } t \to 0, \text{ uniformly in } h \in A$$

Definition 3

When S = all singletons of $B_1$, f is called Gâteaux differentiable with Gâteaux derivative df(x). When S = all compact subsets of $B_1$, f is called Hadamard or compactly differentiable with Hadamard or compact derivative df(x). When S = all bounded subsets of $B_1$, f is called Fréchet or boundedly differentiable with Fréchet or bounded derivative df(x).

43

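Equivalent Definitions of Fréchet derivative

(a) For each bounded set $E \subset B_1$,

$$\frac{R(x + th)}{t} \to 0 \quad \text{as } t \to 0 \text{ in } \mathbb{R}, \text{ uniformly in } h \in E$$

(b) For each bounded sequence $\{h_n\} \subset B_1$ and each sequence $\{t_n\} \subset \mathbb{R} \setminus \{0\}$ with $t_n \to 0$,

$$\frac{R(x + t_n h_n)}{t_n} \to 0 \quad \text{as } n \to \infty$$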

44

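(c) $\dfrac{R(x + h)}{\|h\|_1} \to 0$ as $h \to 0$

(d) $\dfrac{R(x + th)}{t} \to 0$ as $t \to 0$, uniformly in $h \in \{h \in B_1 : \|h\|_1 = 1\}$

(e) $\dfrac{R(x + th)}{t} \to 0$ as $t \to 0$, uniformly in $h \in \{h \in B_1 : \|h\|_1 \le 1\}$

Statisticians generally use this form or some slight modification of it.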

45

Relations among Usual Forms of Definitions

$D_G(x)$ = set of Gâteaux differentiable functions at x $\supset$ $D_H(x)$ = set of Hadamard differentiable functions at x $\supset$ $D_F(x)$ = set of Fréchet differentiable functions at x.

In applications, to find the Fréchet or Hadamard derivative we should generally first try to determine the form of the derivative by deducing the Gâteaux derivative acting on h, df(h), for a collection of directions h which span $B_1$. This reduces to computing the ordinary derivative (with respect to $t \in \mathbb{R}$) of the mapping $t \mapsto f(x + th)$ at $t = 0$, which is closely related to the influence function, one of the central concepts in robust statistics. It can be easily shown that:

(i) When $B_1 = \mathbb{R}$ with the usual norm, all three coincide.

(ii) When $B_1$ is a finite-dimensional Banach space, the Fréchet and Hadamard derivatives are equal. The two coincide with the familiar total derivative.

46
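The recipe of differentiating $t \mapsto f(x + th)$ at $t = 0$ is easy to try in finite dimensions. The sketch below approximates that ordinary derivative by a central difference; the test function, the point x and the directions are made-up choices, and the step size `t` is an assumption.

```python
def gateaux_derivative(f, x, h, t=1e-6):
    """Central-difference approximation to d/dt f(x + t*h) at t = 0."""
    x_plus = [xi + t * hi for xi, hi in zip(x, h)]
    x_minus = [xi - t * hi for xi, hi in zip(x, h)]
    return (f(x_plus) - f(x_minus)) / (2.0 * t)

def f(v):
    """Illustrative smooth function on R^2 with gradient (2*v0 + 3*v1, 3*v0)."""
    return v[0] ** 2 + 3.0 * v[0] * v[1]

x = [1.0, 2.0]
d_e1 = gateaux_derivative(f, x, [1.0, 0.0])   # direction e1
d_e2 = gateaux_derivative(f, x, [0.0, 1.0])   # direction e2
d_h = gateaux_derivative(f, x, [2.0, -1.0])   # direction 2*e1 - e2
print(d_e1, d_e2, d_h)
```

Because this f is differentiable in the usual sense, the directional derivative is linear in h, so the value in direction 2·e1 − e2 matches 2·d_e1 − d_e2 up to rounding. That is how derivatives computed along a spanning set of directions determine the full derivative.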

Properties of Fréchet derivative

Hadamard differentiability implies continuity, but Gâteaux differentiability does not.

Hadamard differentiability satisfies the chain rule, but Gâteaux differentiability does not.

Meaningful versions of the Mean Value Theorem, Inverse Function Theorem, Taylor's Theorem and Implicit Function Theorem have been proved for the Fréchet derivative.

47

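$$IF(T, x; F) = \lim_{\varepsilon \to 0} \frac{T[(1 - \varepsilon)F + \varepsilon \delta_x] - T(F)}{\varepsilon} = \lim_{\varepsilon \to 0} \frac{T[F + \varepsilon(\delta_x - F)] - T(F)}{\varepsilon}$$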
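For the mean functional the influence function can be evaluated directly from this definition. The sketch below replaces F by the empirical distribution of a made-up sample and uses a small fixed ε in place of the limit; the function names and the data are illustrative assumptions.

```python
def mean_functional(points, weights):
    """T(F) = integral of x dF for a discrete distribution given by points and weights."""
    return sum(w * p for p, w in zip(points, weights))

def influence_mean(sample, x, eps=1e-6):
    """[T((1 - eps) F_n + eps * delta_x) - T(F_n)] / eps for the mean functional."""
    n = len(sample)
    base = mean_functional(sample, [1.0 / n] * n)
    contaminated_points = list(sample) + [x]
    contaminated_weights = [(1.0 - eps) / n] * n + [eps]
    contaminated = mean_functional(contaminated_points, contaminated_weights)
    return (contaminated - base) / eps

sample = [1.0, 2.0, 3.0, 6.0]  # made-up data with mean 3
if_at_10 = influence_mean(sample, 10.0)
print(if_at_10)  # close to 10 - 3 = 7
```

Since the mean is linear in F, the difference quotient equals x minus the sample mean for every ε, which is unbounded in x. This is the usual way of expressing that the mean is not robust.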

48

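$$T(F) = \int \psi(x)\, dF$$

Lebesgue: $T(F) = \int \psi(x)\, dF(x) = \int \psi(x) f(x)\, dx$

Counting: $T(F) = \int \psi(x)\, dF = \sum_{i \ge 1} \psi(x_i) f(x_i)$

$$\bar{X} = T(F_n) = \int x\, dF_n$$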

49

Mathematical Foundations of Robust Statistics

$$d_1(F, G) < \delta \;\Rightarrow\; d_2(T(F), T(G)) < \varepsilon$$

$$T(G) \approx T(F) + T'_F(G - F)$$

$$\sqrt{n}\,(T(G) - T(F)) \approx \sqrt{n}\; T'_F(G - F)$$

50


Given a Measurable Space $(\Omega, \mathcal{F})$,

There exist many measures on $\mathcal{F}$.

If $\Omega$ is the real line, the standard measure is "length". That is, the measure of each interval is its length. This is known as "Lebesgue measure".

The σ-algebra must contain intervals. The smallest σ-algebra that contains all open sets (and hence intervals) is called the "Borel" σ-algebra and is denoted $\mathcal{B}$.

A course in real analysis will deal a lot with the measurable space $(\mathbb{R}, \mathcal{B})$.

54

Given a Measurable Space $(\Omega, \mathcal{F})$,

A measurable space combined with a measure is called a measure space. If we denote the measure by $\mu$, we would write the triple $(\Omega, \mathcal{F}, \mu)$.

Given a measure space $(\Omega, \mathcal{F}, \mu)$, if we decide instead to use a different measure, say u, then we call this a "change of measure". (We should just call this using another measure!)

Let $\mu$ and u be two measures on $(\Omega, \mathcal{F})$. Then

u is "absolutely continuous" with respect to $\mu$ if

$$\mu(A) = 0 \;\Rightarrow\; u(A) = 0 \qquad (\text{Notation: } u \ll \mu)$$

u and $\mu$ are "equivalent" if

$$\mu(A) = 0 \;\Leftrightarrow\; u(A) = 0$$

55

The Radon-Nikodym Theorem

If $u \ll \mu$ then u is actually the integral of a function wrt $\mu$:

$$u(A) = \int_A du = \int_A \frac{du}{d\mu}\, d\mu = \int_A g\, d\mu$$

g is known as the Radon-Nikodym derivative and denoted:

$$g = \frac{du}{d\mu}$$

56

The Radon-Nikodym Theorem

If $u \ll \mu$ then u is actually the integral of a function wrt $\mu$.

Idea of proof: Create the function through its superlevel sets.

Consider the set function (this is actually a signed measure)

$$(u - \alpha\mu)(A) = u(A) - \alpha\mu(A)$$

Choose $\alpha$ and let $A_\alpha$ be the largest set such that

$$u(A) - \alpha\mu(A) \ge 0 \quad \text{for all } A \subset A_\alpha$$

(You must prove such an $A_\alpha$ exists.)

Then $A_\alpha$ is the $\alpha$-superlevel set of g.

Now, given superlevel sets, we can construct a function by:

$$g(\omega) = \sup\{\alpha \mid \omega \in A_\alpha\}$$

57

The Riesz Representation Theorem:

All continuous linear functionals on $L^p$ are given by integration against a function $g \in L^q$ with $\frac{1}{p} + \frac{1}{q} = 1$.

That is, let $L : L^p \to \mathbb{R}$ be a continuous linear functional, $y = L(f)$.

Then:

$$L(f) = \int f g\, d\mu$$

Note, in $L^2$ this becomes:

$$L(f) = \int f g\, d\mu = \langle f, g \rangle$$

58

The Riesz Representation Theorem:

All continuous linear functionals on $L^p$ are given by integration against a function $g \in L^q$ with $\frac{1}{p} + \frac{1}{q} = 1$.

What is the idea behind the proof?

Linearity allows you to break things into building blocks, operate on them, then add them all together.

What are the building blocks of measurable functions? Indicator functions! Of course!

Let's define a set function from indicator functions:

$$u(A) = L(1_A)$$

59

The Riesz Representation Theorem:

All continuous linear functionals on $L^p$ are given by integration against a function $g \in L^q$ with $\frac{1}{p} + \frac{1}{q} = 1$.

A set function: $u(A) = L(1_A)$

How does L operate on simple functions?

$$L(\varphi) = L\Big(\sum_{i=1}^{n} \alpha_i 1_{A_i}\Big) = \sum_{i=1}^{n} \alpha_i L(1_{A_i}) = \sum_{i=1}^{n} \alpha_i\, u(A_i)$$

This looks like an integral with u the measure!

$$L(\varphi) = \int \varphi\, du$$

But, it is not too hard to show that u is a (signed) measure (countable additivity follows from continuity).

Furthermore, $u \ll \mu$. Radon-Nikodym then says $du = g\, d\mu$.

60

The Riesz Representation Theorem:

All continuous linear functionals on $L^p$ are given by integration against a function $g \in L^q$ with $\frac{1}{p} + \frac{1}{q} = 1$.

A set function: $u(A) = L(1_A)$

How does L operate on simple functions?

$$L(\varphi) = L\Big(\sum_{i=1}^{n} \alpha_i 1_{A_i}\Big) = \sum_{i=1}^{n} \alpha_i L(1_{A_i}) = \sum_{i=1}^{n} \alpha_i\, u(A_i)$$

This looks like an integral with u the measure!

$$L(\varphi) = \int \varphi\, g\, d\mu$$

For measurable functions it follows from limits and continuity:

$$L(f) = \int f g\, d\mu$$

The details are left as an "easy" exercise for the reader...

61

A probability measure P is a measure that satisfies $P(\Omega) = 1$.

That is, the measure of the whole space is 1.

A random variable is a measurable function $X(\omega)$.

The expectation of a random variable is its integral:

$$E(X) = \int X\, dP$$

A density function is the Radon-Nikodym derivative wrt Lebesgue measure:

$$f_X = \frac{dP}{dx}, \qquad E(X) = \int X\, dP = \int x f_X(x)\, dx$$

62

A probability measure P is a measure that satisfies $P(\Omega) = 1$.

That is, the measure of the whole space is 1.

In finance we will talk about expectations with respect to different measures:

$$P: \quad E^P(X) = \int X\, dP$$

$$Q: \quad E^Q(X) = \int X\, dQ$$

And write expectations in terms of the different measures:

$$E^P(X) = \int X\, dP = \int X \frac{dP}{dQ}\, dQ = \int X \xi\, dQ = E^Q(\xi X)$$

where $\xi = \frac{dP}{dQ}$, or $dP = \xi\, dQ$.
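On a finite sample space the Radon-Nikodym derivative dP/dQ is just the ratio of point probabilities, so the change-of-measure identity can be checked by hand. The sketch below does this for two made-up distributions; the values and probabilities are assumptions chosen so that P is absolutely continuous with respect to Q.

```python
def expectation(values, probs):
    """E(X) = sum of X(omega) * probability(omega) over a finite sample space."""
    return sum(v * p for v, p in zip(values, probs))

values = [1.0, 2.0, 4.0]   # X(omega) for three outcomes (made up)
P = [0.2, 0.5, 0.3]        # probabilities under P
Q = [0.5, 0.25, 0.25]      # probabilities under Q; Q > 0 wherever P > 0

# Radon-Nikodym derivative dP/dQ, outcome by outcome.
ratio = [p / q for p, q in zip(P, Q)]

expectation_under_p = expectation(values, P)
reweighted_under_q = expectation([v * r for v, r in zip(values, ratio)], Q)
print(expectation_under_p, reweighted_under_q)
```

The two numbers agree up to rounding, which is the discrete version of writing an expectation under P as a reweighted expectation under Q.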

63