
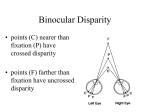

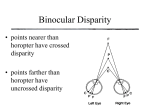

Perception, Illusion and VR
HNRS 299, Spring 2008
Lecture 8: Seeing Depth

How can we see in depth?
Problem: When light is focused on the retina to form an image, we go from 3 dimensions in the world to 2 dimensions in the image. We have lost the depth dimension.
Question: How, then, can we see depth?
Answers:
Monocular cues to depth: from texture, motion, shading, etc.
Binocular stereopsis: This uses the slightly different images on the two retinae to compute relative depth.

Monocular cues to Depth
When we view a scene with one eye, we still interpret the 3D scene fairly accurately. The brain uses cues to distance to estimate how far away things are. Some of these are listed here:
•Height in the visual field
•Size of familiar objects (and relative object size)
•Texture gradients
•Linear perspective
•Shading and shadows
•Relative motion (motion parallax)

Height in the Visual Field
In normal images, things that are farther away often have images that are higher in the visual field. The visual system uses this height in the visual field as a cue to distance. We then adjust our interpretation of the size of the object based on this distance. (Note: familiar object size also gives a cue to depth here.)

Relative object size
•Recall that with perspective projection, the image of a nearby object is larger than the image of a distant object of the same size.
•Therefore, 2 similar objects with different image sizes may be perceived as being at different distances.
•Demo: http://psych.hanover.edu/krantz/art/rel_size.html
•This cue works better when the objects are familiar and have known sizes (see previous slide).

Texture Gradients
•When we view a textured surface, the nearby texture is larger and coarser and the more distant texture is more fine grained. The texture forms a gradient from coarse to fine.
•This is a consequence of perspective projection.
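
The perspective-projection relation behind the relative size and texture gradient cues can be sketched in a few lines. This is a minimal illustration assuming a simple pinhole model; the function names and the 17 mm focal length (a rough stand-in for the eye) are assumptions made here, not values from the lecture.

```python
def image_size(object_size, distance, focal_length=0.017):
    """Projected image size under a pinhole model (all lengths in metres).

    Assumption: image size = focal_length * object_size / distance,
    so doubling the distance halves the image size.
    """
    if distance <= 0:
        raise ValueError("distance must be positive")
    return focal_length * object_size / distance


def texture_gradient(element_size, distances, focal_length=0.017):
    """Image sizes of identical texture elements at increasing distances."""
    return [image_size(element_size, d, focal_length) for d in distances]


# Relative size: the same 1.8 m object viewed at 2 m and at 4 m.
near = image_size(1.8, 2.0)
far = image_size(1.8, 4.0)

# Texture gradient: identical 0.3 m tiles receding along the ground.
tiles = texture_gradient(0.3, [1.0, 2.0, 4.0, 8.0])

print(near, far, tiles)
```

Under this model the nearer object's image is twice as large as the farther one's, and the tile images shrink steadily with distance, which is the coarse-to-fine gradient described above.
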
Demo: http://psych.hanover.edu/krantz/art/texture.html

Linear Perspective
Because the size of images decreases with distance, the gap between 2 parallel lines will become smaller as the lines recede into the distance. This is known as linear perspective. Our visual system uses this convergence of lines as a cue to distance.
Demo: http://psych.hanover.edu/krantz/art/linear.html

Shape from Shading
Shading in an image can give cues to the 3D shape of an object. The brain appears to assume the light source is above the scene. In these images, when the shadow is on the bottom, we see bumps. When it is on the top, we see indentations.

Motion Parallax
As we learned in the motion lecture, for a moving observer, the images of nearby objects move faster than the images of distant objects. The difference in image speed, known as motion parallax, can signal depth differences.
Demo: http://psych.hanover.edu/krantz/MotionParallax/MotionParallax.html
Structure from motion: Relative motion in the image can also be used to determine the 3D shape of objects.
Demo: http://www.viperlib.org/ (General depth images, page 7, rotating cylinder).

Moving Shadows
Combining motion and shadows can lead to some interesting perceptions of motion in depth.
Demo: http://www.viperlib.org/ (General depth images, page 1). The only difference in these images is the position of the shadow.

Binocular Stereo
The fact that our two eyes see the world from different positions allows the brain to use differences between the two images to compute depth. This is known as stereopsis or stereo vision.

A 3D Mars Rover
When viewed with a red filter over the left eye and a cyan filter over the right eye, this image shows a 3-dimensional view of Mars.

Binocular Stereo
•The image in each of our two eyes is slightly different.
•Images in the plane of fixation fall on corresponding locations on the retina.
•Images in front of the plane of fixation are shifted outward on each retina. They have crossed disparity.
•Images behind the plane of fixation are shifted inward on the retina. They have uncrossed disparity.

Crossed and uncrossed disparity
Disparity is the amount by which the image locations of a given feature differ in the two eyes.
Figure labels: point 1, uncrossed (negative) disparity; plane of fixation; point 2, crossed (positive) disparity.

Stereo processing
To determine depth from stereo disparity:
1) Extract the "features" from the left and right images.
2) For each feature in the left image, find the corresponding feature in the right image.
3) Measure the disparity between the two images of the feature.
4) Use the disparity to compute the 3D location of the feature.

The Correspondence problem
•How do you determine which features from one image match features in the other image? (This problem is known as the correspondence problem.)
•This could be accomplished if each image has well defined shapes or colors that can be matched.
•Problem: Random dot stereograms.
Figure: Making a stereogram (Left Image, Right Image).

Random Dot Stereogram

Problem with Random Dot Stereograms
•Around 1960, Bela Julesz developed the random dot stereogram.
•The stereogram consists of 2 fields of random dots, identical except for a region in one of the images in which the dots are shifted by a small amount.
•When one image is viewed by the left eye and the other by the right eye, the shifted region is seen at a different depth.
•There are no cues such as color, shape, texture, shading, etc. to use for matching.
•How does the brain know which dot from the left image matches which dot from the right image?
•Auto-stereograms (magic eye) are versions of random dot stereograms.

Some neurons are sensitive to disparity
Some neurons in V1 and MT are sensitive to the disparity of a stimulus. In V1, most neurons prefer a disparity near zero.
Figure panels: Tuned Excitatory, Tuned Inhibitory.

Near and Far cells
Some cells are broadly tuned for disparity, preferring either near objects or far objects.

Testing for Disparity tuning
Movie: http://www.viperlib.org/ (General depth images, page 5). Torsten Wiesel tests a cell for sensitivity to depth.
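
The random dot stereogram construction and the matching step of stereo processing described earlier can be sketched as follows. This is only an illustration under stated assumptions: the function names, image size, shift, and window size are all hypothetical, and the window-based sum-of-absolute-differences matcher is a simple engineering stand-in, not a claim about how the brain solves the correspondence problem.

```python
import random


def make_stereogram(size=40, shift=2, lo=12, hi=28, seed=0):
    """Build a (left, right) pair of random dot images.

    The images are identical except that a central square (rows and
    columns lo..hi-1) is shifted `shift` pixels leftward in the right image.
    """
    rng = random.Random(seed)
    left = [[rng.randint(0, 1) for _ in range(size)] for _ in range(size)]
    right = [row[:] for row in left]
    for y in range(lo, hi):
        for x in range(lo, hi):
            right[y][x - shift] = left[y][x]
        # The strip uncovered by the shift gets fresh random dots.
        for x in range(hi - shift, hi):
            right[y][x] = rng.randint(0, 1)
    return left, right


def match_disparity(left, right, y, x, half=3, max_d=4):
    """Estimate the disparity at (y, x) by comparing small windows.

    For each candidate shift d, sum the absolute differences between the
    left window and the right window displaced by d; keep the best d.
    """
    height, width = len(left), len(left[0])
    if not (half <= y < height - half and half + max_d <= x < width - half):
        raise ValueError("window or search range falls outside the image")
    best_d, best_cost = 0, None
    for d in range(max_d + 1):
        cost = 0
        for dy in range(-half, half + 1):
            for dx in range(-half, half + 1):
                cost += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
        if best_cost is None or cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


left, right = make_stereogram()
center = match_disparity(left, right, y=20, x=20)     # inside the shifted square
background = match_disparity(left, right, y=6, x=20)  # outside the square
print(center, background)
```

With these defaults, the estimate inside the square should come out at the 2-pixel shift and the estimate in the background at 0. No single dot carries enough information to be matched on its own; the sketch only succeeds because it compares a whole window of dots at once, which is one way to see why the correspondence problem is hard for these stimuli.
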