Lecture 11

Pattern recognition wikipedia , lookup

Scalar field theory wikipedia , lookup

Perturbation theory wikipedia , lookup

Computational electromagnetics wikipedia , lookup

Computational complexity theory wikipedia , lookup

Dijkstra's algorithm wikipedia , lookup

Natural computing wikipedia , lookup

Inverse problem wikipedia , lookup

Corecursion wikipedia , lookup

Knapsack problem wikipedia , lookup

Multiplication algorithm wikipedia , lookup

Lateral computing wikipedia , lookup

Simplex algorithm wikipedia , lookup

Genetic algorithm wikipedia , lookup

Secretary problem wikipedia , lookup

Multi-objective optimization wikipedia , lookup

Weber problem wikipedia , lookup

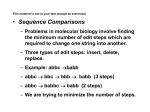

Dynamic Programming
• Optimization Problems
• Dynamic Programming Paradigm
• Example: Matrix Multiplication
• Principle of Optimality
• Exercise: Trading Post Problem

Optimization Problems
• In an optimization problem, there are typically many feasible solutions for any input instance I
• For each solution S, we have a cost or value function f(S)
• Typically, we wish to find a feasible solution S such that f(S) is either minimized or maximized
• Thus, when designing an algorithm to solve an optimization problem, we must prove the algorithm produces a best possible solution.

Example Problem
You have six hours to complete as many tasks as possible, all of which are equally important.

| Task | Time |
|------|------|
| A | 2 hours |
| B | 4 hours |
| C | 1/2 hour |
| D | 3.5 hours |
| E | 2 hours |
| F | 1 hour |

How many can you get done?
• Is this a minimization or a maximization problem?
• Give one example of a feasible but not optimal solution along with its associated value.
• Give an optimal solution and its associated value.

Dynamic Programming
• Dynamic programming is a divide-and-conquer technique at heart
• That is, we solve larger problems by patching together solutions to smaller problems
• Dynamic programming can achieve efficiency by storing solutions to subproblems to avoid redundant computations
  – We typically avoid redundant computations by computing solutions in a bottom-up fashion

Efficiency Example: Fibonacci Numbers
• F(n) = F(n-1) + F(n-2)
  – F(0) = 0
  – F(1) = 1
• Top-down recursive computation is very inefficient
  – Many F(i) values are computed multiple times
• Bottom-up computation is much more efficient
  – Compute F(2), then F(3), then F(4), etc.,
    using the stored values of smaller F(i) to compute the next value
  – Each F(i) value is computed just once

Recursive Computation
F(n) = F(n-1) + F(n-2); F(0) = 0, F(1) = 1
Recursive solution: F(6) = 8
[Figure: recursion tree for F(6); subproblems such as F(4), F(3), and F(2) appear several times]

Bottom-up Computation
We can calculate F(n) in linear time by storing small values.

    F[0] = 0
    F[1] = 1
    for i = 2 to n
        F[i] = F[i-1] + F[i-2]
    return F[n]

Moral: We can sometimes trade space for time.

Key Implementation Steps
• Identify subsolutions that may be useful in computing the whole solution
  – Often need to introduce parameters
• Develop a recurrence relation (recursive solution)
• Set up the table of values/costs to be computed
  – The dimensionality is typically determined by the number of parameters
  – The number of values should be polynomial
• Determine the order of computation of values
• Backtrack through the table to obtain the complete solution (not just the solution value)

Example: Matrix Multiplication
• Input
  – List of n matrices to be multiplied together using traditional matrix multiplication
  – The dimensions of the matrices are sufficient
• Task
  – Compute the optimal ordering of multiplications to minimize the total number of scalar multiplications performed
• Observations
  – Multiplying an X × Y matrix by a Y × Z matrix takes X·Y·Z scalar multiplications
  – Matrix multiplication is associative but not commutative

Example Input
• Input: M1, M2, M3, M4
  – M1: 13 × 5
  – M2: 5 × 89
  – M3: 89 × 3
  – M4: 3 × 34
• Feasible solutions and their values
  – ((M1 M2) M3) M4: 10,582 scalar multiplications
  – (M1 M2) (M3 M4): 54,201 scalar multiplications
  – (M1 (M2 M3)) M4: 2,856 scalar multiplications
  – M1 ((M2 M3) M4): 4,055 scalar multiplications
  – M1 (M2 (M3 M4)): 26,418 scalar multiplications

Identify Subsolutions
• Often need to introduce parameters
• Define the dimensions to be $(d_0, d_1, \ldots, d_n)$, where matrix Mi has dimensions $d_{i-1} \times d_i$
• Let M(i,j) be the matrix formed by
  multiplying matrices Mi through Mj
• Define C(i,j) to be the minimum cost for computing M(i,j)

Develop a Recurrence Relation
• Definitions
  – M(i,j): matrices Mi through Mj
  – C(i,j): the minimum cost for computing M(i,j)
• Recurrence relation for C(i,j)
  – C(i,i) = ???
  – C(i,j) = ???
• Want to express C(i,j) in terms of "smaller" C terms

Set Up Table of Values
• Table
  – The dimensionality is typically determined by the number of parameters
  – The number of values should be polynomial

| C | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | 0 |   |   |   |
| 2 |   | 0 |   |   |
| 3 |   |   | 0 |   |
| 4 |   |   |   | 0 |

Order of Computation of Values
• Many orders are typically OK
  – Just need to obey some constraints
• What are valid orders for this table? (The numbers 1–6 label one candidate order for filling the entries above the diagonal.)

| C | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | 0 | 1 | 2 | 3 |
| 2 |   | 0 | 4 | 5 |
| 3 |   |   | 0 | 6 |
| 4 |   |   |   | 0 |

Representing the Optimal Solution

| C | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | 0 | 5785 | 1530 | 2856 |
| 2 |   | 0 | 1335 | 1845 |
| 3 |   |   | 0 | 9078 |
| 4 |   |   |   | 0 |

| P | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| 1 | 0 | 1 | 1 | 3 |
| 2 |   | 0 | 2 | 3 |
| 3 |   |   | 0 | 3 |
| 4 |   |   |   | 0 |

P(i,j) records the intermediate multiplication k used to compute M(i,j). That is, P(i,j) = k if the last multiplication was M(i,k) M(k+1,j).

Pseudocode

    int MatrixOrder()
        forall i, j: C[i, j] = 0
        for j = 2 to n
            for i = j-1 downto 1
                C[i, j] = min over i <= k <= j-1 of (C[i, k] + C[k+1, j] + d[i-1]·d[k]·d[j])
                P[i, j] = the k that achieves this minimum
        return C[1, n]

Backtracking

    Procedure ShowOrder(i, j)
        if (i = j) write("M", i)
        else
            k = P[i, j]
            write("(")
            ShowOrder(i, k)
            write(" ")
            ShowOrder(k+1, j)
            write(")")

Principle of Optimality
• In the book, this is termed "optimal substructure"
• An optimal solution contains within it optimal solutions to subproblems.
• More detailed explanation
  – Suppose solution S is optimal for problem P.
  – Suppose we decompose P into P1 through Pk and that S can be decomposed into pieces S1 through Sk corresponding to the subproblems.
  – Then solution Si is an optimal solution for subproblem Pi.

Example 1
• Matrix Multiplication
  – In our solution for computing matrix M(1,n), we have a final step of multiplying matrices M(1,k) and M(k+1,n).
  – Our subproblems, then, are to compute M(1,k) and M(k+1,n)
  – Our solution uses optimal solutions for computing M(1,k) and M(k+1,n) as part of the overall solution.

Example 2
• Shortest Path Problem
  – Suppose a shortest path from s to t visits u
  – We can decompose the path into s-u and u-t.
  – The s-u path must be a shortest path from s to u, and the u-t path must be a shortest path from u to t
• Conclusion: dynamic programming can be used for computing shortest paths

Example 3
• Longest Path Problem
  – Suppose a longest path from s to t visits u
  – We can decompose the path into s-u and u-t.
  – Is it true that the s-u path must be a longest path from s to u?
• Conclusion?

Example 4: The Traveling Salesman Problem
What recurrence relation will return the optimal solution to the Traveling Salesman Problem?
If T(i) is the optimal tour on the first i points, will this help us in solving larger instances of the problem?
Can we set T(i+1) to be T(i) with the additional point inserted in the position that results in the shortest tour?
No!
[Figure: panels labeled T(4), T(5), and Shortest Tour]

Summary of Bad Examples
• There is almost always a way to obtain optimal substructure if you expand your subproblems enough
• For longest path and TSP, the number of subproblems grows exponentially
• This is not useful, as we do not want to compute an exponential number of solutions

When Is Dynamic Programming Effective?
• Dynamic programming works best on objects that are linearly ordered and cannot be rearranged, such as
  – characters in a string
  – files in a filing cabinet
  – points around the boundary of a polygon
  – the left-to-right order of leaves in a search tree
• Whenever your objects are ordered in a left-to-right way, dynamic programming must be considered.
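
The Fibonacci slides contrast plain top-down recursion with bottom-up table filling. Below is a minimal Python sketch of both; the function names `fib_recursive` and `fib_bottom_up` are hypothetical, and the recursive version also returns how many calls it made so that the redundant work is visible.

```python
def fib_recursive(n):
    """Plain top-down recursion with no stored values.

    Returns (F(n), number of calls made), so the repeated work is visible.
    """
    if n < 2:
        return n, 1  # F(0) = 0, F(1) = 1; this call counts as one
    a, calls_a = fib_recursive(n - 1)
    b, calls_b = fib_recursive(n - 2)
    return a + b, calls_a + calls_b + 1


def fib_bottom_up(n):
    """Bottom-up: fill F[0..n] once, each entry from the two stored before it."""
    if n < 2:
        return n
    F = [0] * (n + 1)
    F[1] = 1
    for i in range(2, n + 1):
        F[i] = F[i - 1] + F[i - 2]
    return F[n]


print(fib_recursive(6))  # (8, 25)
print(fib_bottom_up(6))  # 8
```

For n = 6 the plain recursion makes 25 calls, one per node of the F(6) recursion tree, while the bottom-up loop computes each of F(2) through F(6) exactly once.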
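
The MatrixOrder and ShowOrder pseudocode for the matrix multiplication example can be turned into runnable Python roughly as follows. This is a sketch under stated assumptions: the names `matrix_order` and `show_order` are illustrative, the dimension list d = (d0, …, dn) follows the "Identify Subsolutions" slide, and both tables are 1-indexed with row and column 0 unused. The inner loop runs i from j-1 down to 1, so every C(k+1, j) with k ≥ i is already filled in when C(i, j) needs it.

```python
def matrix_order(d):
    """Bottom-up MatrixOrder for matrices M1..Mn, where Mi is d[i-1] x d[i].

    Returns the cost table C and split table P, both 1-indexed
    (row and column 0 are unused).
    """
    n = len(d) - 1
    C = [[0] * (n + 1) for _ in range(n + 1)]  # C[i][i] = 0
    P = [[0] * (n + 1) for _ in range(n + 1)]
    for j in range(2, n + 1):
        for i in range(j - 1, 0, -1):  # i = j-1 down to 1
            # Cost of each possible last multiplication M(i,k) M(k+1,j)
            costs = {k: C[i][k] + C[k + 1][j] + d[i - 1] * d[k] * d[j]
                     for k in range(i, j)}
            best_k = min(costs, key=costs.get)
            C[i][j], P[i][j] = costs[best_k], best_k
    return C, P


def show_order(P, i, j):
    """Backtrack through P to build the parenthesization of Mi..Mj."""
    if i == j:
        return f"M{i}"
    k = P[i][j]
    return f"({show_order(P, i, k)} {show_order(P, k + 1, j)})"


C, P = matrix_order((13, 5, 89, 3, 34))
print(C[1][4])  # 2856
print(show_order(P, 1, 4))  # ((M1 (M2 M3)) M4)
```

On the four-matrix example (13 × 5, 5 × 89, 89 × 3, 3 × 34) this reproduces the C and P tables from the slides, with C(1,4) = 2856 and the order ((M1 (M2 M3)) M4).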

Efficient Top-Down Implementation
• We can implement any dynamic programming solution top-down by storing computed values in the table
  – If all values need to be computed anyway, bottom-up is more efficient
  – If some do not need to be computed, top-down may be faster

Trading Post Problem
• Input
  – n trading posts on a river
  – R(i,j) is the cost for renting at post i and returning at post j, for i < j
  – Note: you cannot paddle upstream, so i < j
• Task
  – Output the minimum-cost route to get from trading post 1 to trading post n

Longest Common Subsequence Problem
• Given two strings S and T, a common subsequence is a subsequence that appears in both S and T.
• The longest common subsequence problem is to find a longest common subsequence (LCS) of S and T
  – subsequence: characters need not be contiguous
  – this differs from a substring
• Can you use dynamic programming to solve the longest common subsequence problem?

Longest Increasing Subsequence Problem
• Input: a sequence of n numbers x1, x2, …, xn
• Task: find the longest increasing subsequence of the numbers
  – subsequence: numbers need not be contiguous
• Can you use dynamic programming to solve the longest increasing subsequence problem?

Book Stacking Problem
• Input
  – n books with heights hi and thicknesses ti
  – length of shelf L (the total thickness of the books on one shelf cannot exceed L)
• Task
  – Assign books to shelves so as to minimize the sum, over all shelves, of the height of the tallest book on that shelf
  – Books must be stored in order to conform to the catalog system (i.e., books on the first shelf must be 1 through i, books on the second shelf i+1 through k, etc.)
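
The slides leave the trading post recurrence as an exercise. One possible formulation, assumed here rather than taken from the slides, is cost(1) = 0 and cost(j) = min over 1 ≤ i < j of cost(i) + R(i,j). The sketch below evaluates it top-down, as the "Efficient Top-Down Implementation" slide describes, storing computed values via `functools.lru_cache` and keeping the predecessor post so the route can be recovered by backtracking. The function name `trading_post`, the representation of R as a dictionary keyed by (i, j) that contains every pair i < j, and the sample costs are all hypothetical.

```python
from functools import lru_cache


def trading_post(n, R):
    """Cheapest downstream route from post 1 to post n.

    R[(i, j)] is the (assumed) cost of renting at post i and returning at post j, i < j.
    Returns (minimum cost, list of posts on one cheapest route).
    """

    @lru_cache(maxsize=None)  # store each computed value, as on the top-down slide
    def best(j):
        # (cost of the cheapest way to reach post j, post where that last rental starts)
        if j == 1:
            return 0, None
        return min((best(i)[0] + R[(i, j)], i) for i in range(1, j))

    # Backtrack through the stored predecessors to recover the route
    route, post = [], n
    while post is not None:
        route.append(post)
        post = best(post)[1]
    return best(n)[0], route[::-1]


# Hypothetical rental costs for n = 4 posts
R = {(1, 2): 2, (1, 3): 4, (1, 4): 10, (2, 3): 3, (2, 4): 9, (3, 4): 2}
print(trading_post(4, R))  # (6, [1, 3, 4])
```

In this formulation every cost(i) with i < n ends up being needed, so, as the slide notes, a bottom-up loop over j = 2, …, n would be at least as efficient.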
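
For the longest common subsequence question, one standard dynamic programming answer (not given on the slides) uses a two-parameter table L[i][j], the LCS length of the first i characters of S and the first j characters of T, filled row by row; backtracking from L[m][n] then recovers one LCS. A sketch in Python, with the function name `lcs` chosen for illustration:

```python
def lcs(S, T):
    """Return one longest common subsequence of strings S and T.

    L[i][j] holds the LCS length of the prefixes S[:i] and T[:j].
    """
    m, n = len(S), len(T)
    L = [[0] * (n + 1) for _ in range(m + 1)]  # row/column 0: empty prefix
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            if S[i - 1] == T[j - 1]:
                L[i][j] = L[i - 1][j - 1] + 1  # extend an LCS of the shorter prefixes
            else:
                L[i][j] = max(L[i - 1][j], L[i][j - 1])  # drop a character from S or T
    # Backtrack from L[m][n] to recover the characters of one LCS
    out, i, j = [], m, n
    while i > 0 and j > 0:
        if S[i - 1] == T[j - 1]:
            out.append(S[i - 1])
            i, j = i - 1, j - 1
        elif L[i - 1][j] >= L[i][j - 1]:
            i -= 1
        else:
            j -= 1
    return "".join(reversed(out))


print(lcs("ABC", "AC"))  # AC
print(len(lcs("ABCBDAB", "BDCABA")))  # 4
```

The table has (m+1)(n+1) entries, each filled in constant time, which fits the slides' requirement that the number of values be polynomial.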
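
The longest increasing subsequence question has a similar answer. A common O(n²) formulation, again an assumption rather than something stated on the slides, lets best[i] be the length of the longest increasing subsequence that ends at xi; each best[i] extends the best earlier entry holding a smaller value. The Python sketch below treats "increasing" as strictly increasing, and the name `lis` is illustrative.

```python
def lis(x):
    """Return one longest strictly increasing subsequence of the list x (O(n^2))."""
    n = len(x)
    if n == 0:
        return []
    best = [1] * n   # best[i]: length of the longest increasing subsequence ending at x[i]
    prev = [-1] * n  # prev[i]: index of the element before x[i] in that subsequence
    for i in range(n):
        for j in range(i):
            if x[j] < x[i] and best[j] + 1 > best[i]:
                best[i], prev[i] = best[j] + 1, j
    # Start the backtrack at the position where a longest subsequence ends
    i = max(range(n), key=best.__getitem__)
    out = []
    while i != -1:
        out.append(x[i])
        i = prev[i]
    return out[::-1]


print(lis([3, 1, 4, 1, 5, 9, 2, 6]))  # [3, 4, 5, 9]
```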
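
For the book stacking problem, one possible recurrence, assuming that the books on a single shelf may have a total thickness of at most L, is best(i) = min over j ≤ i of best(j-1) + max(hj, …, hi), taken over those j for which books j through i fit on one shelf. The Python sketch below (function name and sample data hypothetical) returns only the minimum total height; recording the minimizing j for each i would allow backtracking to the actual shelf assignment.

```python
def book_stacking(h, t, L):
    """Minimum total of the tallest-book heights over all shelves.

    Books stay in catalog order; each shelf holds a consecutive run of books whose
    thicknesses sum to at most L (an assumed reading of "length of shelf L").
    h[i-1] and t[i-1] are the height and thickness of book i.
    """
    n = len(h)
    best = [0] + [float("inf")] * n  # best[i]: optimal cost for books 1..i
    for i in range(1, n + 1):
        width, tallest = 0, 0
        # Try each j so that the last shelf holds books j..i
        for j in range(i, 0, -1):
            width += t[j - 1]
            if width > L:
                break  # adding more books to this shelf only makes it wider
            tallest = max(tallest, h[j - 1])
            best[i] = min(best[i], best[j - 1] + tallest)
    return best[n]  # inf if some book is thicker than L


print(book_stacking([3, 5, 2, 4], [1, 1, 1, 1], 2))  # 9
```

With a shelf that holds two books, the sketch chooses shelves {1, 2} and {3, 4}, giving 5 + 4 = 9.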