* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download ET4718 - Computer Programming 7

Survey

Document related concepts

Transcript

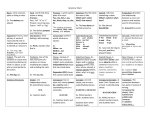

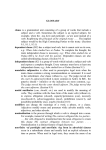

Studying the Protein Folding Problem by Means of a New Data Mining Approach
Huy N.A. Pham and Triantaphyllou Evangelos
Department of Computer Science, Louisiana State University, 298 Coates Hall, Baton Rouge, LA 70803
ICDM 2005 Workshop on Temporal Data Mining: Algorithms, Theory and Applications, November 27-30, 2005, Houston, TX
This research was done under the LBRN program (www.lbrn.lsu.edu).

Brief introduction
- The structure prediction problem for proteins plays an important role in understanding the protein folding process.
- This is an NP-problem.
- This research proposes a novel classification approach based on a new data mining technique.
- The technique tries to balance the overfitting and overgeneralization properties of the derived models.

Outline
- Introduction to classification and the protein folding problem
- Classification methods
- The overfitting and overgeneralization problem
- The Binary Expansion Algorithm (BEA)
- Experimental evaluation
- Summary

Introduction to Classification
- We are given a collection of records that constitute the training set. Each record contains a set of attributes and the class to which it belongs.
- We are asked to find a model that describes the records of each class as a function of the values of their attributes.
- The goal is to use this model to classify new records whose class is unknown.
- Typical applications: credit approval, target marketing, medical diagnosis, treatment effectiveness analysis.

Introduction to the protein folding problem
- At least two distinct, though related, tasks can be stated:
  - Structure Prediction Problem (Protein Folding Problem): given a protein amino acid sequence, determine its 3D folded shape.
  - Pathway Prediction Problem: given a protein amino acid sequence and its 3D structure, determine the time-ordered sequence of folding events.

Introduction to the protein folding problem - Cont'd
- Protein folding is the problem of finding the 3D structure of a protein from its amino acid sequence.
- There are 20 different types of amino acids, labelled with one-letter codes (A, C, G, ...), so a protein is a sequence of amino acids (e.g. AGGCT...).
- The folding problem is to find how this amino acid chain (1D structure) folds into its 3D structure.
- => This can be treated as a classification problem.

Introduction to the protein folding problem - Cont'd
- A protein is classified into one of four structural classes [Levitt and Chothia, 1976] according to its secondary structure components:
  - all-α (α-helix)
  - all-β (β-strand)
  - α/β
  - α+β

Classification methods
- Decision trees: a flow-chart-like tree structure. An internal node denotes a test on an attribute, a branch represents an outcome of the test, and leaf nodes represent class labels or class distributions. The decision tree is then used to classify an unknown sample.
- Bayesian classification: calculates explicit probabilities for hypotheses; among the most practical approaches to certain types of learning problems.
- Genetic algorithms: based on an analogy to biological evolution.

Classification methods - Cont'd
- Fuzzy set approaches: use values between 0.0 and 1.0 to represent the degree of membership. Attribute values are converted to fuzzy values, and truth values are computed for each predicted category.
- Rough set approaches: approximately or "roughly" define equivalence classes.
- K-Nearest Neighbor algorithms: calculate the mean values of the K nearest neighbors.

Classification methods - Cont'd
- Neural Networks (NNs): a problem-solving paradigm modeled after the physiological functioning of the human brain. The firing of a synapse is modeled by input, output, and threshold functions.
  The network "learns" from problems whose answers are known and then produces answers to entirely new problems of the same type.
- Support Vector Machines (SVMs): data that are non-separable in N dimensions have a higher chance of being separable if mapped into a space of higher dimension. A linear hyperplane is used to partition the high-dimensional feature space.

Overfitting and overgeneralization in Classification
- Classification algorithms have produced prediction systems that are sometimes highly accurate and sometimes inaccurate for no apparent reason.
- A growing belief is that the root of this problem is the overfitting and overgeneralization behavior of such systems.
- Overfitting means that the extracted model describes the behavior of the known data very well but does poorly on new data points.
- Overgeneralization occurs when the system uses the available data and then attempts to analyze vast amounts of data that it has not yet seen.
- For example, a generated decision tree may overfit the training data, while the SVMs method may overgeneralize the training data.
- => Develop an algorithm that balances overfitting and overgeneralization.

A multi-class prediction method
- One-vs-Others method (Dubchak et al 1999, Brown et al 2000).
- Partition the K classes into a two-class problem: one class contains the proteins of one "true" class, and the "others" class combines all the other classes. A two-class classifier is trained for this two-class problem.
- Then partition the K classes into another two-class problem: one class contains another original class, and the "others" class contains the rest. Another two-class classifier is trained.
- This procedure is repeated for each of the K classes, leading to K trained two-class classifiers.
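
The One-vs-Others procedure above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `train_centroid_scorer` is a hypothetical stand-in for whatever two-class learner is actually used, and choosing the class whose classifier gives the highest score is an assumed way of combining the K outputs, since the slides do not say how ties or conflicts are resolved.

```python
def train_centroid_scorer(X, is_true_class):
    """Hypothetical two-class learner: score(x) is higher when x looks like the 'true' class."""
    pos = [x for x, t in zip(X, is_true_class) if t]
    neg = [x for x, t in zip(X, is_true_class) if not t]

    def centroid(rows):
        return [sum(col) / len(rows) for col in zip(*rows)]

    def sqdist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    c_pos, c_neg = centroid(pos), centroid(neg)
    # Positive score: x is closer to the 'true' centroid than to the 'others' centroid.
    return lambda x: sqdist(x, c_neg) - sqdist(x, c_pos)


def train_one_vs_others(X, y):
    """Train one two-class scorer per class: that class vs. all the others."""
    return {
        label: train_centroid_scorer(X, [t == label for t in y])
        for label in sorted(set(y))
    }


def predict(classifiers, x):
    """Assumed combination rule: pick the class whose scorer is most confident."""
    return max(classifiers, key=lambda label: classifiers[label](x))


X = [[0, 0], [0, 1], [5, 5], [5, 6], [10, 0], [10, 1]]
y = ["a", "a", "b", "b", "c", "c"]
classifiers = train_one_vs_others(X, y)
print(len(classifiers), predict(classifiers, [5, 5.5]))
```

With K = 3 classes the dictionary holds three scorers, one per "true" class, which mirrors the K trained two-class classifiers described on the slide.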

Some basic concepts
- A clause: a description of a small area of the state space covering examples of a given class.
- Homogenous Clause (HC): an area covering a set of examples of a given class and unclassified examples uniformly.
- Any clause of a given class may be partitioned into a set of smaller homogenous clauses.
- Example: B, A1, and A2 are homogenous clauses while A is a non-homogenous clause. A can be partitioned into two smaller homogenous clauses, A1 and A2.
- The example is a 2D representation. Higher-dimensional cases can be treated similarly.
- => Unclassified examples covered by clause B can more accurately be assumed to belong to the same class than those in the original clause A.

Some basic concepts - Cont'd
- Whether a clause is a homogenous clause can be decided by using its standard deviation.
- The clause is superimposed by a hyper-grid with sides of some length h. If all cells have the same density, then it is a (perfectly) homogenous clause.
- The density of a cell [Richard, 2001]: $p(x) \approx \frac{1}{n h^{D}} \sum_{i=1}^{n} K\left(\frac{x - x_i}{h}\right)$, where n = #(examples in the cell), D = #(dimensions), and K = a kernel function.
- Determine the homogenous values of clauses A and B.
[Figure: clause A is superimposed on a hyper-grid and the density of all cells is computed => standard deviation = 0; clause B is superimposed on a hyper-grid and the density of all cells is computed => standard deviation > 0.]

Some basic concepts - Cont'd
- The density expresses how many classified examples exist in a given clause of the state space.
- The density of a homogenous clause is the number of examples of a given class per unit area.
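
The hyper-grid test for homogeneity can be illustrated with a small Python sketch. It makes simplifying assumptions that are not in the slides: the density of a cell is taken as a plain count divided by the cell volume h^D rather than a kernel estimate, and only cells that contain at least one example are compared. The function names are hypothetical.

```python
import math
from collections import Counter
from statistics import pstdev


def cell_densities(points, h):
    """Count the examples in each grid cell of side h and divide by the cell volume h**D."""
    dims = len(points[0])
    counts = Counter(tuple(math.floor(c / h) for c in p) for p in points)
    volume = h ** dims
    return {cell: n / volume for cell, n in counts.items()}


def homogeneity_spread(points, h):
    """Standard deviation of the occupied-cell densities (0 means perfectly homogenous)."""
    return pstdev(list(cell_densities(points, h).values()))


# One example per cell: every cell has the same density.
uniform = [(0.5, 0.5), (1.5, 0.5), (0.5, 1.5), (1.5, 1.5)]
# Three examples crowd into one cell, one example sits alone in another.
uneven = [(0.5, 0.5), (0.6, 0.6), (0.7, 0.7), (1.5, 0.5)]

print(homogeneity_spread(uniform, 1.0))
print(homogeneity_spread(uneven, 1.0))
```

A spread of zero corresponds to the perfectly homogenous case; a positive spread signals a clause that would need to be partitioned into smaller homogenous clauses.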
[Figure: homogenous clauses A and B, each drawn over a unit area R_Unit; the density of homogenous clause A > the density of homogenous clause B.]

BEA
Main idea of the algorithm:
- Input: positive and negative examples.
- Output: a suitable classification.
- Find positive and negative homogenous clauses using any clustering algorithm.
- Sort the homogenous clauses based on their densities.
- For each homogenous clause, one or more new areas are created:
  - If its density > a threshold, then expand it, accepting some noisy examples, by:
    F's radius = C's radius + (G's radius – C's radius)/(2 * D)
    where F = expanded area, C = original area, and G = enveloping area.
  - Else reduce it into smaller homogenous clauses.
- Use the expanded homogenous clauses for the new testing data.
- Stopping conditions for expansion: F's radius ≤ D * C's radius, and #(noisy points) ≤ (D * n) / 100.
[Figure: positive examples inside an original area C, surrounded by an enveloping area G that also contains negative examples; D = 6.]

BEA - Cont'd
Main Algorithm:
- Input: positive and negative examples.
- Output: a suitable classification.
- Step 1: Find positive and negative clauses using the k-means clustering-based approach with the Euclidean distance.
- Step 2: Find positive and negative homogenous clauses from the positive and negative clauses, respectively.
- Step 3: Sort the positive and negative homogenous clauses by density.
- Step 4: FOR each homogenous clause C DO
  - If (its density > a threshold = (max – min)/2 of the densities) then
    - Expand C using its density D.
    - Accept (D*n)/100 noisy examples, where n = #(its examples).
  - Else
    - Reduce C into smaller homogenous clauses by considering each cell of its hyper-grid as a new homogenous clause.

BEA - Cont'd
Example: BEA in 2D
[Figure: three panels labelled Positive Clauses, Expand Homogenous Clauses, and Extended HC.]

Correctness of improvement
- Definition: e is improved by e', written e > e', if for all contexts C such that C[e] and C[e'] are closed, whenever C[e] converges in n steps, C[e'] also converges in k steps where k ≤ n [Sands, 2001].
- BEA uses a k-means clustering-based approach to find the positive and negative sets.
- Let e denote the results obtained from the k-means clustering-based approach and e' denote the results obtained from BEA.
- Certainly C[e] and C[e'] are closed.
- Moreover, C[e'] can accept more examples, since all homogenous clauses are expanded from e, and it accepts noisy examples.
- Hence e is improved by e', or e is refined to e'.

Accuracy measures for multi-class classification
- The accuracy of two-class problems involves calculating true positive rates and false positive rates.
- The accuracy, Q, of multi-class problems can be determined from the true class rates [Rost & Sander, 1993; Baldi et al, 2000] by: $Q = \sum_{i=1}^{k} w_i q_i$
- $q_i = c_i / n_i$, where $n_i$ = #(examples in the i-th class) and $c_i$ = #(correctly classified examples in the i-th class).
- $w_i = n_i / N$, where N = total number of examples.

Experiments
- Assess the algorithm for two-class problems.
- Source: http://www.csie.ntu.edu.tw/~cjlin/methods/guide/data/

C.J. Lin's SVMs:

| Training set | Testing set | # Atts | Accuracy |
|---|---|---|---|
| Train_1 (3089 exps) | Test_1 (4000 exps) | 4 | 96.9% |
| Train_2 (391 exps) | Train_2 (391 exps), cross validation | 20 | 85.2% |
| Train_3 (1243 exps) | Test_3 (41 exps) | 21 | 87.8% |

BEA:

| Training set | Testing set | Q |
|---|---|---|
| Train_1 | Test_1 | 99.25% |
| Train_2 | Train_2 | 100% |
| Train_3 | Test_3 | 100% |

#Fail Positive and #Fail Negative entries, in the order they appear in the transcript (row assignment unclear): 22 (200 negative exps), 0, 0 (0 negative exps), 9 (2000 positive exps), 0, 0 (41 positive exps).

Experiments - Cont'd
[Figure: bar chart of accuracy (75% to 100%) for C.J. Lin's SVMs and BEA on Train_1 & Test_1, Train_2 with cross validation, and Train_3 & Test_3.]
- The BEA provides a 15.5% improvement in classification accuracy vs. C.J. Lin's SVMs.

Experiments - Cont'd
- Assess the algorithm for two-class problems.
- Source: http://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/binary

| Data | #atts | #exps |
|---|---|---|
| w1a | 300 | 2477 |
| w2a | 300 | 3470 |
| w3a | 300 | 4912 |
| w4a | 300 | 7366 |
| w5a | 300 | 9888 |
| w6a | 300 | 17188 |
| a3a | 122 | 3185 |
| a4a | 122 | 4781 |
| a5a | 122 | 6414 |
| a6a | 122 | 11220 |
| a7a | 122 | 16100 |

- First train/test arrangement (datasets listed as w1a to w6a and a3a to a7a), Q values in transcript order: 90.17%, 86.47, 82.17, 79.99; and 85.97%, 85.40, 85.08, 84.64, 84.18.
- Second train/test arrangement (datasets listed as w4a, then w1a, w2a, w3a, w5a, w6a; and a7a, then a3a, a4a, a5a, a6a), Q values in transcript order: 94.98%, 94.92, 94.92, 96.95; and 85.79%, 86.57, 86.16, 85.41, 84.83.
- The assignment of individual Q values to train/test pairs is not recoverable from the flattened tables.

Experiments - Cont'd
- A test bed of the algorithm for the protein folding problem.
- Source of data sets: http://www.nersc.gov/~cding/protein by Ding and Dubchak, 2001.

| Data types | #atts | # training exps | # testing exps |
|---|---|---|---|
| A.A. Composition (C) | 21 | 605 | 385 |
| Secondary struc. (S) | 22 | 605 | 385 |
| Polarity (P) | 22 | 605 | 385 |
| Polarizability (Po) | 22 | 605 | 385 |
| Hydrophobicity (H) | 22 | 605 | 385 |
| Volume (V) | 22 | 605 | 385 |

- Six parameter datasets are extracted from protein sequences.
- Use the One-vs-Others method for the four-class problem.
- Use the Independent Test method in experiments.
- BEA represents a protein as an n-dimensional vector corresponding to the composition of the n amino acids in the protein.

Experiments - Cont'd
The average results obtained from [Ding and Dubchak, 2001] and [Zerrin, 2004] for the 27-class dataset:

| Data types | Q1 | Q2 | Q3 | Q4 |
|---|---|---|---|---|
| A.A. Composition | 43.5% | 20.5% | 71.44% | 66.66% |
| Secondary struc. | 43.2% | 36.8% | | |
| Hydrophobicity | 45.2% | 40.6% | | |
| Polarity | 43.2% | 41.1% | | |
| Volume | 44.8% | 41.2% | | |
| Polarizability | 44.9% | 41.8% | | |

- Q1: the average accuracy of the SVMs with the independent test method in [Ding and Dubchak, 2001, Table 6, p11].
- Q2: the average accuracy of the Neural Networks with the independent test method in [Ding and Dubchak, 2001, Table 6, p11].
- Q3: the average accuracy of the SVMs-AAC method in [Zerrin, 2004].
- Q4: the average accuracy of the SVMs-TrioAAC method in [Zerrin, 2004].
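
The composition representation mentioned for the protein test bed can be sketched as follows. This is a minimal illustration, assuming the 20 standard one-letter amino acid codes and a simple relative-frequency encoding; it does not try to reproduce the exact attribute layout of the Ding and Dubchak data sets, and `composition_vector` is a hypothetical helper name.

```python
from collections import Counter

# The 20 standard amino acids, by one-letter code (assumed alphabet).
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def composition_vector(sequence):
    """Return the fraction of each standard amino acid in the sequence, in AMINO_ACIDS order."""
    counts = Counter(sequence.upper())
    total = sum(counts[a] for a in AMINO_ACIDS)
    if total == 0:
        raise ValueError("sequence contains no standard amino acid letters")
    return [counts[a] / total for a in AMINO_ACIDS]


vector = composition_vector("AGGCT")
print(len(vector), vector[AMINO_ACIDS.index("G")])
```

Each protein, whatever its length, maps to a fixed-length vector, which is what lets a clause-based or any other vector-space classifier be applied to sequences.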

Experiments - Cont'd
Results obtained from BEA for 4-class:

| Data types | all-α | all-β | α/β | α+β | Q5 |
|---|---|---|---|---|---|
| A.A. Composition | 87.27% | 74.81% | 71.43% | 91.95% | 81.37% |
| Secondary struc. | 87.23% | 72.21% | 66.75% | 91.17% | 79.34% |
| Hydrophobicity | 86.75% | 74.55% | 71.17% | 91.69% | 81.04% |
| Polarity | 87.27% | 73.51% | 70.13% | 91.95% | 80.72% |
| Volume | 87.01% | 74.29% | 71.43% | 91.95% | 81.17% |
| Polarizability | 86.75% | 74.29% | 70.13% | 91.95% | 80.78% |

Experiments - Cont'd
[Figure: accuracy (0% to 90%) by data type (C, S, P, Po, H, V) for Ding's Neural Networks for 27-class, Ding's SVMs for 27-class, SVMs-TrioAAC for 27-class, SVMs-AAC for 27-class, and BEA for 4-class.]
The BEA provides:
- About a 10% improvement in classification accuracy over the SVMs-AAC method on the Amino Acid Composition data type (81.37% vs. 71.44%).
- Approximately a 36% improvement over Ding's SVMs.

Summary
This research was done to:
- Enhance our understanding of the performance of a new data mining algorithm.
- Propose a new approach, based on balancing the overfitting and overgeneralization properties, to enhance the performance of data mining algorithms.
- Contribute to an active area of bioinformatics by achieving highly accurate results in predicting protein folding properties.
Future work will focus on:
- Testing the BEA with other applications.
- Improving the performance of the approach by:
  - Improving the accuracy of the algorithm by finding a suitable density for homogenous clauses.
  - Decreasing the execution time by using parallel computing techniques.
  - Studying a multi-class classification algorithm.

References
- Zerrin Isik et al., "Protein Structural Class Determination Using Support Vector Machines", Lecture Notes in Computer Science, ISCIS 2004, vol. 3280, pp. 82, Oct. 2004. http://people.sabanciuniv.edu/~berrin/methods/fold-classification-iscis04.pdf
- A.C. Tan et al., "Multi-Class Protein Fold Classification Using a New Ensemble Machine Learning Approach", Genome Informatics 14: 206–217, 2003.
  http://www.brc.dcs.gla.ac.uk/~actan/methods/actanGIW03.pdf
- Chris H.Q. Ding et al., "Multi-class protein fold recognition using Support Vector Machines and Neural Networks", Bioinformatics, 17:349-358, 2001. http://www.kernel-machines.org/methods/upload_4192_bioinfo.ps
- D. Sands, "Improvement theory and its applications". In A. D. Gordon and A. M. Pitts, editors, Higher Order Operational Techniques in Semantics, Publications of the Newton Institute, pp. 275-306. Cambridge University Press, 1998.

Thank you! Any questions?