* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Document

Survey

Document related concepts

Transcript

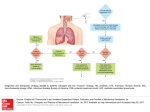

760 Lecture 3 Today’s lecture: 5/22/2017 760 Lecture 4 1 Today’s Agenda Give you a brief overview of Modern regression models – smoothing – PPR – Neural nets – Regression trees – MARS 5/22/2017 760 Lecture 4 2 Modern Regression Topics: • Smoothing – Loess – splines • Curse of dimensionality • Regression models in high dimensions – – – – PPR Neural nets Trees MARS 5/22/2017 760 Lecture 4 3 Smoothing Common methods – Loess (see V&R p 230, also 20x) – Smoothing Splines (see V&R p230, and below) – Regression splines (see V&R p 228) – Supersmoother (see V&R p 231) 5/22/2017 760 Lecture 4 4 Loess • Review 20x • At each data point, fit a weighted regression, with weights given by a kernel • Regression can be quadratic or linear • Use regression to predict fitted value at that point • Repeat for every point (subset if too many) 5/22/2017 760 Lecture 4 5 Smoothing splines • Data: (xi,yi), i =1,…,n: xi low dimension • Choose function f to minimise (for l fixed) n (y i 1 i 2 f ( xi )) l f ( x) dx 2 '' Measures closeness to data Measures smoothness Controls tradeoff between smoothness and closeness to data 5/22/2017 760 Lecture 4 6 Cross-validation • The parameter l chosen by “cross-validation” • Divide data into two sets, “training set” and “test set” • For fixed l, find the function fl that minimises the criterion • Calculate S(y-fl (x))2 summed over the test set • Repeat and average • Result is an estimate of the error Error(l) we get if we use fl to model new data • Choose l to minimise this error 5/22/2017 760 Lecture 4 7 Regression splines • • • • Piecewise cubics Chose knots ie equally spaced Generate a “spline basis” in R Fit using linear regression y b1 f1 ( x) b2 f 2 ( x) ... bk f k ( x) 5/22/2017 760 Lecture 4 8 Example Average height and weight of American women 30-39 > women height weight 1 58 115 2 59 117 3 60 120 4 61 123 5 62 126 6 63 129 7 64 132 8 65 135 9 66 139 10 67 142 11 68 146 12 69 150 13 70 154 14 71 159 15 72 164 5/22/2017 760 Lecture 4 9 R code > round(bs(women$height, df = 5),5) 1 2 3 4 5 [1,] 0.00000 0.00000 0.00000 0.00000 0.00000 [2,] 0.45344 0.05986 0.00164 0.00000 0.00000 [3,] 0.59694 0.20335 0.01312 0.00000 0.00000 [4,] 0.53380 0.37637 0.04428 0.00000 0.00000 [5,] 0.36735 0.52478 0.10496 0.00000 0.00000 [6,] 0.20016 0.59503 0.20472 0.00009 0.00000 [7,] 0.09111 0.56633 0.33673 0.00583 0.00000 [8,] 0.03125 0.46875 0.46875 0.03125 0.00000 [9,] 0.00583 0.33673 0.56633 0.09111 0.00000 [10,] 0.00009 0.20472 0.59503 0.20016 0.00000 [11,] 0.00000 0.10496 0.52478 0.36735 0.00292 [12,] 0.00000 0.04428 0.37637 0.53380 0.04555 [13,] 0.00000 0.01312 0.20335 0.59694 0.18659 [14,] 0.00000 0.00164 0.05986 0.45344 0.48506 [15,] 0.00000 0.00000 0.00000 0.00000 1.00000 reg.spline<- lm(weight ~ bs(height, df = 5), data = women) plot(women$height, women$weight) lines(women$height, predict(reg.spline)) 5/22/2017 760 Lecture 4 10 160 150 140 120 130 women$weight 58 60 62 64 66 68 70 72 women$height 5/22/2017 760 Lecture 4 11 The regression problem • The data follow a model y = f(x1,…,xK) + error where f is unknown smooth function and K is possibly large • We want to find an “automatic” estimate of f, to use for prediction • If K is small (1 or 2) we can use a smoother, but what if K is large? 5/22/2017 760 Lecture 4 12 Curse of dimensionality • Consider n points scattered at random in a K-dimensional unit sphere • Let D be the distance between the centre of the sphere to the closest point: D D K=1 (one dimensional) 5/22/2017 K=2 760 Lecture 4 13 Curse of dimensionality (cont) Median of distribution of D: n=100 n=200 n=500 n=1000 K=1 0.01 0.00 0.00 0.00 K=2 0.08 0.06 0.04 0.03 K=5 0.37 0.32 0.27 0.23 K=10 0.61 0.57 0.52 0.48 K=20 0.78 0.75 0.72 0.70 K=100 0.95 0.94 0.94 0.93 5/22/2017 760 Lecture 4 14 Moral • Smoothing doesn’t work in high dimensions: points are too far apart • Solution: pick estimate of f from a class of functions that is flexible enough to match f reasonably closely • We need to be able to compute the estimate easily 5/22/2017 760 Lecture 4 15 Familiar classes of functions • Linear functions • Additive functions (V&R p 232, HTF p 295, Ch9) – fitted by smoothing in one or two dimensions using the backfitting algorithm • These are easy to fit but not flexible enough • We need something better : will look at some other classes of functions 5/22/2017 760 Lecture 4 16 References • Projection pursuit: V&R p238, HTF p389 (Ch 9) • Trees: V&R p251, HTF p305 (Ch9) • MARS: HTF p 321 (Ch9) • Neural networks: V&R p243, HTF p392 (Ch9) 5/22/2017 760 Lecture 4 17 Projection pursuit y 1 (a1 ' x) 2 (a 2 ' x) a1 x a2 a1’x a2’x 5/22/2017 760 Lecture 4 18 Neural network x1 x2 x3 x4 x5 x6 y (a wi xi whh ( whi xi )) 5/22/2017 760 Lecture 4 19 Trees • These functions are multi-dimensional step functions • Can be described by “regression trees” 7 6 5 4 3 2 1 S6 5/22/2017 9 7 5 3 1 0 760 Lecture 4 S1 20 Trees (cont) X17 X26 Y=4 5/22/2017 X2 Y=1 Y=7 760 Lecture 3 Y=3 21 Trees (cont) y=7 y=1 6 5 y=3 y=4 X2 7 5/22/2017 760 Lecture 4 X1 22 MARS • Consider transformations of the predictors of the form h(xj) = max(0, xj-xij) and h(xj) = max(0, xij-xj) and all pairwise products of these. • Fit a model using linear regression and forward selection to these transformed variables until some fixed number of terms has been added. • Then do backward elimination for a fixed number of steps. This produces a sequence of models, pick the best predicting model using crossvalidation. 5/22/2017 760 Lecture 4 23 Software • Projection pursuit: ppr(formula, data=data) • Trees rpart(formula, data=data) • Neural networks nnet(formula, data=data) • Mars earth(formula, data=data) 5/22/2017 760 Lecture 4 24 Relationships PPR Regression functions Additive models Linear models Neural nets Trees MARS 5/22/2017 760 Lecture 4 25 Complexity • For PPR, measured by number of ridge functions • For Neural nets, measured by the number of units in the hidden layer • For trees, by the number of branches • For MARS, by the number of fitted terms • Caution: Models that are too complex will not be good for prediction – model noise as well as signal 5/22/2017 760 Lecture 4 26 Overfitting Size of error Correct model Test set Training set Model Complexity 5/22/2017 760 Lecture 4 27 Model choice • Want to chose model that predicts new data well • Divide existing data into training set (50%), test set (50%) and validation set (25%) • Fit models using training set • Estimate their prediction errors (sum of squares of prediction errors) using the test set or CV • Choose model having smallest prediction error on test set • Use validation set to get final estimate of prediction error 5/22/2017 760 Lecture 4 28 Example: CPU data Tables show mean and median in- and out-of sample prediction errors for 50 random splits of the data set $mean Insample Linear 0.18168065 FS 0.18355269 MARS 0.10098809 Tree 0.03665507 ppr 0.05520358 nnet 0.08363496 5/22/2017 $median outsample 0.2199499 0.2151103 0.2877227 0.2114495 0.1938958 0.2013799 Insample Linear 0.18045156 FS 0.18205086 MARS 0.10228148 Tree 0.03605472 ppr 0.05491079 nnet 0.08570877 760 Lecture 4 outsample 0.2125469 0.2092804 0.1960645 0.2110796 0.1893202 0.1821245 29 Boosting, Bagging, Random forests • Boosting: Essentially forward selection of basis functions. HCF Ch10 • Bagging: average predictors over bootstrap samples. HCF Ch 8 • Random Forests – bagging (modified) applied to trees. HCF Ch15 5/22/2017 760 Lecture 4 30